Model fusion merges multiple deep models into one. One intriguing benefit of model interpolation is that it can deepen researchers’ understanding of neural networks’ mode connectivity. In federated learning, intermediate models from edge nodes are typically sent to the server and merged there. Model fusion has attracted significant research interest because of its importance in these and other applications. Its primary goal is to enhance generalizability, efficiency, and robustness while preserving the original models’ capabilities.

The method of choice for model fusion in deep neural networks is coordinate-based parameter averaging. In the same spirit, federated learning aggregates local models from edge nodes, and mode connectivity research uses linear or piecewise interpolation between models. Parameter averaging has appealing properties, but it may not work well in more complicated training situations, such as with non-independent and identically distributed (Non-I.I.D.) data or differing training conditions. For instance, Non-I.I.D. data makes the local data on each node inherently heterogeneous in federated learning, so local updates point in diverging directions when the models are aggregated. Studies also show that the permutation invariance of neural networks causes neuron misalignment, which makes model fusion harder still. Approaches have therefore been proposed that regularize elements one by one or reduce the impact of permutation invariance. However, only some of these approaches have considered how different model weight ranges affect model fusion.
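Coordinate-based averaging and linear interpolation, as described above, can be sketched in a few lines. This is a minimal illustration, not code from the study: the `Params` layout (layer name mapped to a flat list of weights) and the function names are assumptions made for the example.

```python
from typing import Dict, List

# Hypothetical layout for this sketch: layer name -> flat list of weights.
Params = Dict[str, List[float]]


def interpolate(a: Params, b: Params, alpha: float) -> Params:
    """Linear interpolation: (1 - alpha) * a + alpha * b, coordinate by coordinate."""
    return {
        name: [(1 - alpha) * wa + alpha * wb for wa, wb in zip(a[name], b[name])]
        for name in a
    }


def average(models: List[Params]) -> Params:
    """Coordinate-based parameter averaging over models with identical layouts."""
    n = len(models)
    return {
        name: [sum(ws) / n for ws in zip(*(m[name] for m in models))]
        for name in models[0]
    }


model_a = {"fc": [1.0, 2.0]}
model_b = {"fc": [3.0, 6.0]}
merged = average([model_a, model_b])
midpoint = interpolate(model_a, model_b, 0.5)
```

Both functions pair weights purely by position, which is why neuron misalignment and mismatched weight ranges matter: nothing in the averaging step checks that the paired coordinates play the same role or live on the same scale.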

A new study by researchers at Nanjing University explores merging models under different weight scopes and the impact of training conditions on weight distributions (referred to as ‘weight scope’ in the study). This is the first work to formally investigate the influence of weight scope on model fusion. After running multiple experiments under different data quality and training hyper-parameter settings, the researchers found that the converged models’ weight scopes differ significantly, a phenomenon they call ‘weight scope mismatch’. Although every distribution can be approximated by a Gaussian, the model weight distributions change considerably across training settings. In the figure below, the top five sub-figures show parameters from models trained with the same optimizer, while the bottom ones show models trained with different optimizers. This inconsistency in weight range hurts model fusion, as seen in the poor linear interpolation between models with mismatched weight scopes. The researchers explain that parameters with similar distributions are easier to aggregate than parameters with distinct ones, which makes merging models with dissimilar parameters difficult.

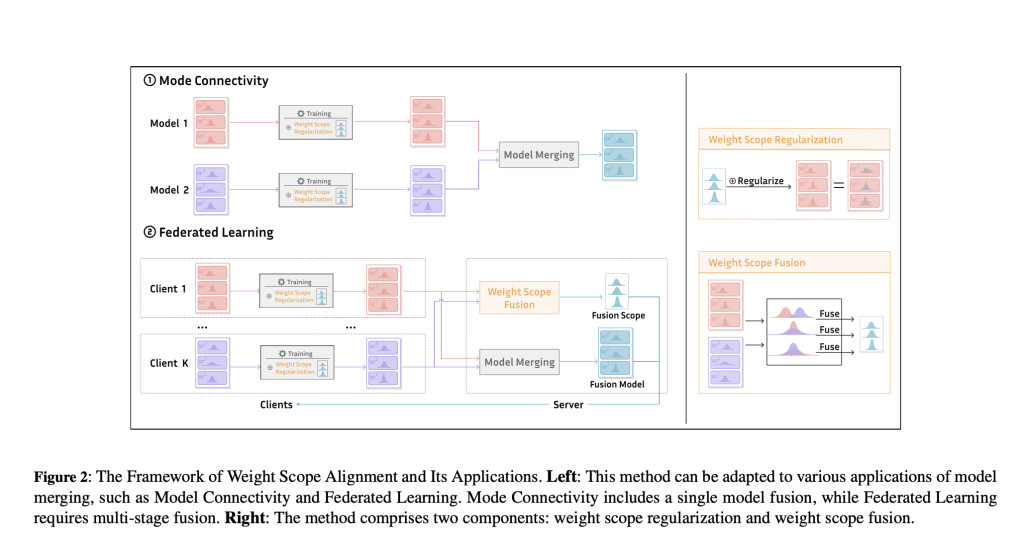
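To make the notion of weight scope concrete, the sketch below summarizes a layer by the mean and standard deviation of its weights and compares two layers with very different spreads. The synthetic Gaussian weights, the chosen standard deviations, and the helper names are assumptions for illustration only; they are not taken from the study's experiments.

```python
import random
import statistics
from typing import List, Tuple


def weight_scope(weights: List[float]) -> Tuple[float, float]:
    """Summarize a layer by the (mean, standard deviation) of its weights."""
    return statistics.fmean(weights), statistics.pstdev(weights)


def interpolate(a: List[float], b: List[float], alpha: float) -> List[float]:
    """Coordinate-wise linear interpolation between two flat weight lists."""
    return [(1 - alpha) * x + alpha * y for x, y in zip(a, b)]


rng = random.Random(0)
# Synthetic stand-ins for the same layer in two converged models
# (assumed values; real scopes depend on data and hyper-parameters).
narrow = [rng.gauss(0.0, 0.02) for _ in range(10_000)]
wide = [rng.gauss(0.0, 0.20) for _ in range(10_000)]

for alpha in (0.0, 0.5, 1.0):
    mean, std = weight_scope(interpolate(narrow, wide, alpha))
    print(f"alpha={alpha:.1f}  mean={mean:+.4f}  std={std:.4f}")
```

In this toy setup the interpolated layer has a spread that matches neither endpoint, which gives some intuition for why models with mismatched scopes may interpolate poorly.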

Every layer’s parameters follow a simple distribution, the Gaussian, and this simplicity inspires a new and easy method of parameter alignment. The researchers use a target weight scope to guide the training of the models so that the weight scopes of the to-be-merged models stay in sync. For more complicated multi-stage fusion, they aggregate the target weight scope statistics from the mean and variance of the parameter weights in the to-be-merged models. The proposed approach is called Weight Scope Alignment (WSA); its two processes are called weight scope regularization and weight scope fusion, respectively.
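The two processes can be sketched under explicit assumptions. The article does not give the exact loss or aggregation rule, so the code below assumes one natural choice for each: the closed-form KL divergence between two univariate Gaussians as the regularization penalty, and a plain average of per-model means and variances as the fused target scope. All function names are hypothetical.

```python
import math
import statistics
from typing import List, Tuple

Scope = Tuple[float, float]  # (mean, standard deviation) of a layer's weights


def gaussian_kl(mu_p: float, sigma_p: float, mu_q: float, sigma_q: float) -> float:
    """Closed-form KL( N(mu_p, sigma_p^2) || N(mu_q, sigma_q^2) )."""
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p**2 + (mu_p - mu_q) ** 2) / (2 * sigma_q**2)
        - 0.5
    )


def scope_penalty(weights: List[float], target: Scope) -> float:
    """Assumed regularizer: divergence of the layer's fitted Gaussian from the target."""
    mu = statistics.fmean(weights)
    sigma = statistics.pstdev(weights)
    return gaussian_kl(mu, sigma, target[0], target[1])


def fuse_scopes(scopes: List[Scope]) -> Scope:
    """Assumed fusion rule: average the means and the variances across models."""
    mean = statistics.fmean([m for m, _ in scopes])
    var = statistics.fmean([s**2 for _, s in scopes])
    return mean, math.sqrt(var)


target = fuse_scopes([(0.0, 0.1), (0.02, 0.3)])
penalty = scope_penalty([-0.2, -0.1, 0.0, 0.1, 0.2], target)
```

In training, a penalty of this kind would be added to the task loss with a weighting coefficient, and in a multi-stage setting the fused scope would serve as the target for the next stage. Both details are assumptions of this sketch rather than statements about the authors' implementation.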

The team studies the benefits of WSA relative to related techniques by applying it in mode connectivity and federated learning scenarios. By training the weights to be as close as possible to a given distribution, WSA optimizes for successful model fusion while balancing specificity and generality. According to the study, it addresses drawbacks of existing methods and is competitive with similar regularization methods such as the proximal term and weight decay.
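For comparison, the two baseline regularizers named above can be written in their standard textbook forms. This is an illustrative sketch: the coefficient names `lam` and `mu` and the function names are assumptions, and the study's exact settings are not given in this article.

```python
from typing import List


def weight_decay_penalty(weights: List[float], lam: float) -> float:
    """L2 weight decay: (lam / 2) * ||w||^2, pulling every weight toward zero."""
    return 0.5 * lam * sum(w * w for w in weights)


def proximal_penalty(weights: List[float], anchor: List[float], mu: float) -> float:
    """Proximal term: (mu / 2) * ||w - anchor||^2, pulling weights toward a reference model."""
    return 0.5 * mu * sum((w - a) ** 2 for w, a in zip(weights, anchor))


local = [3.0, 4.0]
global_model = [2.0, 4.0]
decay = weight_decay_penalty(local, lam=0.1)
prox = proximal_penalty(local, global_model, mu=0.1)
```

Both penalties constrain individual coordinates, either toward zero or toward a reference model. A weight scope penalty, by contrast, depends only on a layer's distribution statistics, so it leaves individual weights freer to specialize.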

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Weight Scope Alignment Method that Utilizes Weight Scope Regularization to Constrain the Alignment of Weight Scopes during Training appeared first on MarkTechPost.
