Researchers from the University of Wisconsin-Madison have tackled the challenge of performance variability in GPU-accelerated machine learning (ML) workloads running on large-scale computing clusters. In these environments, variability arises from several factors, including hardware heterogeneity, software optimizations, and the data-dependent nature of ML algorithms. It can lead to inefficient resource utilization, unpredictable job completion times, and reduced overall cluster performance, which makes GPU-rich clusters difficult to optimize for ML workloads.

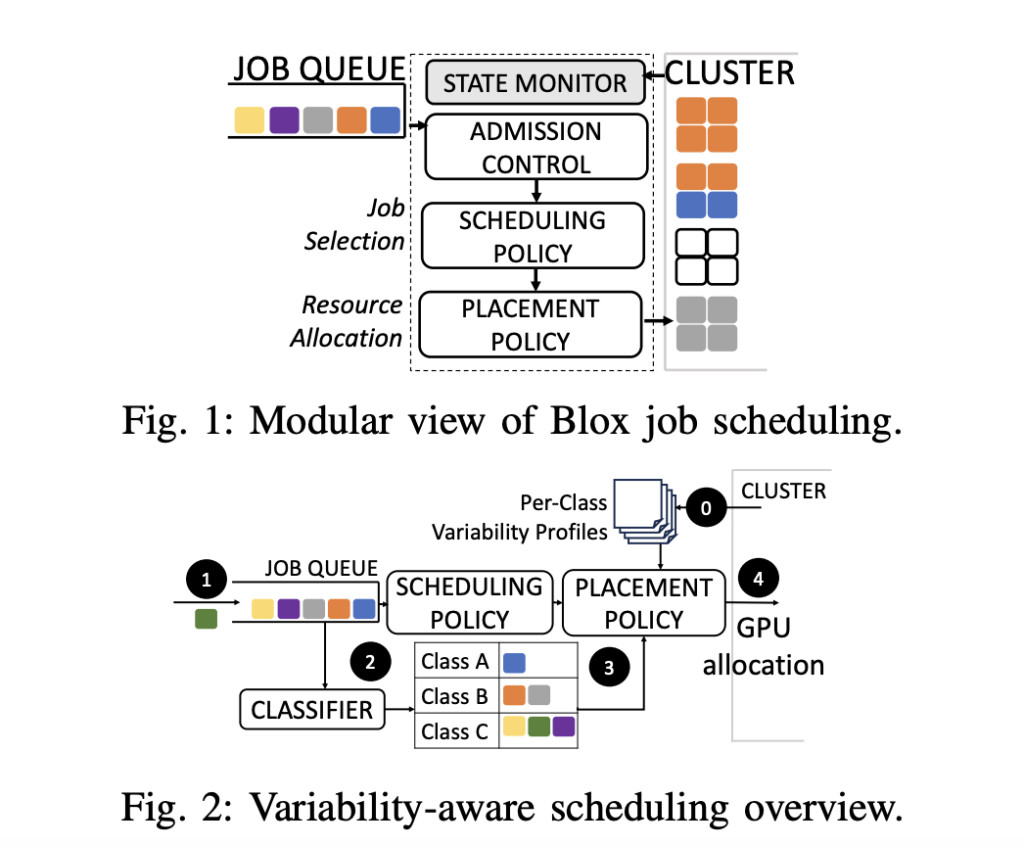

Current cluster schedulers, such as SLURM and Kubernetes, are designed to manage and allocate resources across clusters, but they often struggle with the performance variability inherent in ML workloads. They typically do not account for performance fluctuations caused by hardware and workload-specific factors, which leads to suboptimal resource allocation and wasted capacity. To address this, the researchers propose a new scheduler called PAL (Performance-Aware Learning), designed to embrace and mitigate the effects of performance variability in GPU-rich clusters. PAL's key idea is to profile both jobs and nodes so that its scheduling decisions explicitly account for performance variability. In doing so, PAL aims to improve job completion times, resource utilization, and overall cluster efficiency.

PAL operates in two phases: performance profiling and scheduling. In the profiling phase, PAL collects metrics such as GPU utilization, memory bandwidth, and execution time for each job, along with performance characteristics of individual nodes, which lets it estimate how much each job's and each node's performance varies. In the scheduling phase, PAL uses these profiles to select the most suitable node for each job, weighing each node's expected performance and resource availability against locality so that communication overhead between nodes stays low. This adaptive approach places jobs on the nodes where they are likely to perform best, reducing job completion times and improving resource utilization.
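The article does not give PAL's actual scoring formula, so the following is only a minimal Python sketch of the two-phase idea under stated assumptions: node profiles are reduced to a performance multiplier relative to the fastest node, synchronous training is assumed to run at the pace of its slowest node, and a hypothetical per-job `sensitivity` value stands in for application-specific variability. The names `Node`, `performance_multipliers`, `greedy_fill`, `place`, `sensitivity`, and `cross_rack_penalty` are illustrative inventions, not PAL's API.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    rack: str       # hypothetical locality domain (rack or switch)
    free_gpus: int


def performance_multipliers(iter_times: Dict[str, List[float]]) -> Dict[str, float]:
    """Profiling phase (sketch): reduce profiled iteration times per node to a
    multiplier relative to the fastest node (1.0 = fastest, 1.3 = 30% slower)."""
    avg = {node: mean(times) for node, times in iter_times.items()}
    fastest = min(avg.values())
    return {node: t / fastest for node, t in avg.items()}


def expected_slowdown(chosen: List[Node], mult: Dict[str, float], sensitivity: float) -> float:
    """Assume synchronous training runs at the pace of its slowest node.
    `sensitivity` (0..1) is a hypothetical per-job factor describing how
    strongly this application feels node-to-node variability."""
    worst = max(mult[n.name] for n in chosen)
    return 1.0 + sensitivity * (worst - 1.0)


def greedy_fill(candidates: List[Node], mult: Dict[str, float],
                gpus_needed: int) -> Optional[List[Node]]:
    """Take the fastest candidate nodes until the job's GPU demand is covered."""
    chosen, remaining = [], gpus_needed
    for node in sorted(candidates, key=lambda n: mult[n.name]):
        if remaining <= 0:
            break
        if node.free_gpus > 0:
            chosen.append(node)
            remaining -= node.free_gpus
    return chosen if remaining <= 0 else None


def place(gpus_needed: int, nodes: List[Node], mult: Dict[str, float],
          sensitivity: float, cross_rack_penalty: float = 0.15) -> Optional[List[Node]]:
    """Scheduling phase (sketch): build single-rack and cluster-wide candidate
    placements, score each by expected slowdown plus a penalty per extra rack
    spanned, and return the cheapest one (None means the job keeps waiting)."""
    options = []
    for rack in sorted({n.rack for n in nodes}):
        same_rack = greedy_fill([n for n in nodes if n.rack == rack], mult, gpus_needed)
        if same_rack:
            options.append(same_rack)
    anywhere = greedy_fill(nodes, mult, gpus_needed)
    if anywhere:
        options.append(anywhere)
    if not options:
        return None

    def cost(chosen: List[Node]) -> float:
        racks_spanned = len({n.rack for n in chosen})
        return (expected_slowdown(chosen, mult, sensitivity)
                + cross_rack_penalty * (racks_spanned - 1))

    return min(options, key=cost)


# Usage with made-up profiles: node a2 is roughly 28% slower than a1.
mult = performance_multipliers({
    "a1": [1.00, 1.02], "a2": [1.30, 1.28],
    "b1": [1.05, 1.03], "b2": [1.04, 1.06],
})
nodes = [Node("a1", "rackA", 4), Node("a2", "rackA", 4),
         Node("b1", "rackB", 4), Node("b2", "rackB", 4)]
print([n.name for n in place(8, nodes, mult, sensitivity=0.8)])  # ['b1', 'b2']
```

Rack A holds one slow node, so for this fairly variability-sensitive job the sketch prefers rack B's two uniformly fast nodes over both rack A and a cross-rack split that would pay the locality penalty. A real scheduler would also track per-job GPU allocations and refresh node profiles over time; those details are omitted here.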

The researchers evaluated PAL against existing state-of-the-art schedulers across various ML workloads, including image, language, and vision models. PAL significantly outperformed these schedulers, achieving a 42% improvement in geomean job completion time, a 28% increase in cluster utilization, and a 47% reduction in makespan. These results indicate that PAL is effective at mitigating performance variability when scheduling GPU-rich clusters.
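The evaluation metrics can be made concrete with their standard definitions. The sketch below assumes that "improvement in geomean job completion time" means a percentage reduction in the geometric mean of per-job JCTs, and that makespan runs from the first job submission to the last job completion; the article does not spell out the paper's exact methodology, and the JCT values are made up for illustration, not taken from the paper.

```python
from statistics import geometric_mean


def geomean_jct_reduction(baseline_jcts, new_jcts):
    """Percent reduction in geometric-mean job completion time (JCT).
    Assumes 'improvement' means (1 - new / baseline) * 100."""
    return 100.0 * (1.0 - geometric_mean(new_jcts) / geometric_mean(baseline_jcts))


def makespan(submit_times, finish_times):
    """Makespan: time from the first job submission to the last job completion."""
    return max(finish_times) - min(submit_times)


# Hypothetical JCTs in minutes for the same three jobs under two schedulers.
baseline = [100.0, 400.0, 1600.0]
candidate = [50.0, 200.0, 800.0]
print(round(geomean_jct_reduction(baseline, candidate), 6))  # 50.0
```

The geometric mean is a common choice for JCT because a handful of very long jobs cannot dominate it the way they can dominate an arithmetic mean.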

In conclusion, PAL represents a significant advance in managing performance variability in GPU-accelerated ML workloads. By combining detailed performance profiling with adaptive scheduling, PAL reduces job completion times, improves resource utilization, and raises overall cluster performance. This makes it a valuable tool for optimizing large-scale computing systems, especially those that increasingly rely on GPUs for ML and scientific applications.


The post PAL: A Novel Cluster Scheduler that Uses Application-Specific Variability Characterization to Intelligently Perform Variability-Aware GPU Allocation appeared first on MarkTechPost.
