Text-to-image generation has evolved rapidly, driven largely by diffusion models, which have revolutionized the field. These models produce realistic, detailed images from textual descriptions, a capability used in applications ranging from personalized content creation to art. Precise control over the style of generated images is paramount, especially in domains where the visual presentation must closely match specific stylistic preferences. This level of control improves the user experience and helps generated content meet the exacting standards of creative industries.

A critical challenge in the field has been effectively blending the content of one image with the style of another. The resulting image must retain the semantic integrity of the original content while adopting the desired stylistic attributes. Achieving this balance is difficult, largely because few comprehensive datasets are tailored specifically to style transfer. Without such datasets, it is hard to train models that perform end-to-end style transfer, and results often fall short of the desired quality. This shortage forces researchers to rely on training-free approaches, which, although useful, are limited in their ability to deliver high-quality, consistent results.

Existing methods for style transfer have typically relied on approaches like DDIM (Denoising Diffusion Implicit Models) inversion and carefully tuned feature injection layers in pre-trained models. These methods, while innovative, come with significant drawbacks. For instance, DDIM inversion can lead to content loss, as it struggles to maintain the fine details of the original image during the transfer process. Similarly, the feature injection methods often increase the time required for inference, making them less practical for real-time applications. Moreover, LoRA (Low-Rank Adaptation) methods require extensive fine-tuning for each image, undermining their stability and generalizability. These limitations highlight the need for more robust and efficient approaches to handle the complexities of style transfer without compromising speed or quality.
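
To see why DDIM inversion can lose content, here is a minimal NumPy sketch of deterministic DDIM inversion (η = 0) followed by reconstruction. The linear noise schedule and the `toy_eps` predictor are hypothetical stand-ins for a trained diffusion U-Net. The point it illustrates is that each inversion step must reuse the noise estimate from the current, less noisy latent, because the next latent is not yet known, so the round trip is exact only when that estimate does not depend on the latent.

```python
import numpy as np

def make_alpha_bars(num_steps: int = 50) -> np.ndarray:
    """Cumulative products of (1 - beta); index 0 is the clean image (alpha_bar = 1).
    The linear beta schedule is a hypothetical choice for illustration."""
    betas = np.linspace(1e-4, 0.02, num_steps)
    return np.concatenate([[1.0], np.cumprod(1.0 - betas)])

def ddim_move(x, t_from, t_to, ab, eps):
    """Deterministic DDIM update (eta = 0) from t_from to t_to given a noise estimate."""
    x0_hat = (x - np.sqrt(1.0 - ab[t_from]) * eps) / np.sqrt(ab[t_from])
    return np.sqrt(ab[t_to]) * x0_hat + np.sqrt(1.0 - ab[t_to]) * eps

def invert(x0, ab, eps_fn):
    """Map a clean latent to noise, reusing eps predicted at the current timestep."""
    x = x0
    for t in range(len(ab) - 1):
        x = ddim_move(x, t, t + 1, ab, eps_fn(x, t))
    return x

def reconstruct(xT, ab, eps_fn):
    """Standard DDIM sampling from the inverted noise back to timestep 0."""
    x = xT
    for t in range(len(ab) - 1, 0, -1):
        x = ddim_move(x, t, t - 1, ab, eps_fn(x, t))
    return x

def toy_eps(x, t):
    # Hypothetical stand-in for a trained noise-prediction network (depends on x).
    return np.tanh(x)

def constant_eps(x, t):
    # A noise estimate that ignores the latent, so inversion is exactly reversible.
    return np.full_like(x, 0.3)

rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 8, 8))  # pretend latent of a content image
ab = make_alpha_bars()
for name, fn in [("constant eps", constant_eps), ("latent-dependent eps", toy_eps)]:
    err = np.abs(reconstruct(invert(x0, ab, fn), ab, fn) - x0).max()
    print(f"{name}: max reconstruction error = {err:.2e}")
```

With the constant estimate the round trip recovers the latent up to floating-point error; with the latent-dependent estimate a residual remains. In a real model, residuals of this kind can show up as lost fine detail in the content image.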

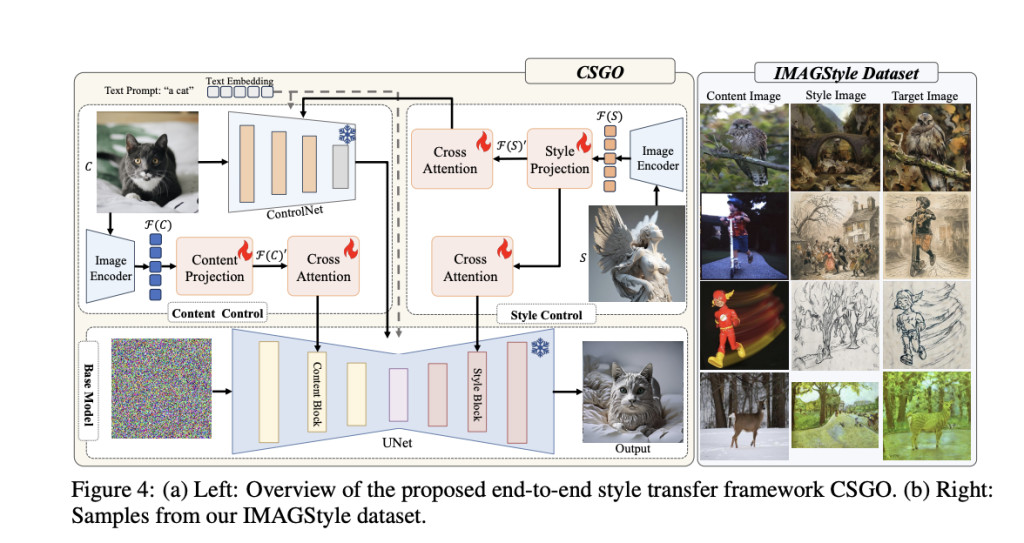

Researchers from Nanjing University of Science and Technology and the InstantX team have developed a new model called CSGO. It takes a novel approach to style transfer built on a newly created dataset, IMAGStyle, which contains 210,000 content-style-stylized image triplets. This large-scale dataset is designed to address the shortcomings of previous approaches by providing a comprehensive resource for training models end to end. CSGO itself is built on explicitly decoupling content and style features, a departure from previous methods that relied on implicit separation. This explicit decoupling allows the model to apply style more accurately and efficiently.
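
What a triplet dataset adds over collections of unpaired images is an explicit target for every (content, style) input, which is what makes end-to-end supervision possible. The Python sketch below shows that structure; the class name, field names, and file paths are hypothetical and do not reflect IMAGStyle's actual layout.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple

@dataclass(frozen=True)
class StyleTriplet:
    """One IMAGStyle-like sample; field names are hypothetical."""
    content: str   # path to the content image (semantics and layout to keep)
    style: str     # path to the style reference image
    stylized: str  # path to the target: the content rendered in that style

def supervised_pairs(
    triplets: Iterable[StyleTriplet],
) -> Iterator[Tuple[Tuple[str, str], str]]:
    """Yield (inputs, target) pairs: the model sees (content, style)
    and is supervised with the stylized image."""
    for t in triplets:
        yield (t.content, t.style), t.stylized

samples = [
    StyleTriplet("content/0001.png", "style/0042.png", "stylized/0001_0042.png"),
    StyleTriplet("content/0002.png", "style/0042.png", "stylized/0002_0042.png"),
]
for (content, style), target in supervised_pairs(samples):
    print(content, "+", style, "->", target)
```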

The CSGO framework uses independent feature injection modules for content and style, which are then integrated into a unified model. This design lets the framework preserve the semantics, layout, and other critical features of the content image while the style is controlled separately. The model supports several kinds of style transfer, including image-driven, text-driven, and text editing-driven synthesis. This versatility is one of CSGO's key strengths, making it applicable across a wide range of scenarios, from artistic creation to commercial applications where stylistic precision is crucial.
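
The idea of independent injection modules can be illustrated with a decoupled cross-attention layer: text, content, and style tokens each get their own key/value projections and their own scale, and the branch outputs are added to the latent features. This NumPy sketch is loosely modeled on decoupled cross-attention designs such as IP-Adapter; it is an assumption for illustration, not CSGO's actual architecture, and all shapes, names, and scale values are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(q, k, v):
    """Scaled dot-product attention. q: (n, d); k and v: (m, d)."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def decoupled_injection(h, w_q, conditions, scales):
    """Add one independent cross-attention branch per condition.

    h:          (n, d) latent features from the denoiser
    w_q:        (d, d) shared query projection
    conditions: name -> (tokens (m, d), w_k (d, d), w_v (d, d)); each branch
                has its own key/value projections, i.e. its own module
    scales:     name -> weight controlling that branch's influence
    """
    q = h @ w_q
    out = h.copy()  # residual path keeps the original features
    for name, (tokens, w_k, w_v) in conditions.items():
        out += scales[name] * attention(q, tokens @ w_k, tokens @ w_v)
    return out

rng = np.random.default_rng(0)
d = 8

def branch(m):
    # Random tokens and projections for one condition (hypothetical sizes).
    return rng.normal(size=(m, d)), rng.normal(size=(d, d)), rng.normal(size=(d, d))

h, w_q = rng.normal(size=(16, d)), rng.normal(size=(d, d))
conditions = {"text": branch(4), "content": branch(16), "style": branch(4)}
out = decoupled_injection(h, w_q, conditions, {"text": 1.0, "content": 1.0, "style": 0.7})
print(out.shape)
```

Because each condition has its own branch and weight, setting a branch's scale to zero removes its influence entirely: with `scales["style"] = 0.0`, the output no longer depends on the style tokens at all.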

Extensive experiments by the research team show that CSGO outperforms existing methods. The model achieved a CSD score of 0.5146, the highest among the methods tested; CSD, based on Contrastive Style Descriptors, measures how closely the style of the output matches the reference style. Its Content Alignment Score (CAS), a metric of how well the content of the original image is retained, was 0.8386, further supporting its ability to maintain content integrity. These results are particularly noteworthy given the range of demanding tasks involved, such as style transfer for natural landscapes, face images, and artistic scenes.
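
Both kinds of score reduce to simple vector arithmetic once image features are available. The NumPy sketch below assumes the CSD score is a cosine similarity between style embeddings of the reference and the output, and uses a hypothetical CAS-like proxy: remove the per-channel mean and standard deviation (the statistics AdaIN treats as style) from two feature maps, then take their mean squared distance. Neither function is the paper's evaluation code, and the feature extractors are not shown.

```python
import numpy as np

def style_similarity(ref_emb: np.ndarray, out_emb: np.ndarray) -> float:
    """Cosine similarity between style embeddings (higher = closer style).
    Embeddings are assumed to come from a style encoder such as CSD (not included)."""
    a = ref_emb / np.linalg.norm(ref_emb)
    b = out_emb / np.linalg.norm(out_emb)
    return float(a @ b)

def strip_style_stats(feat: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel of a (C, H, W) feature map to zero mean, unit std,
    removing the per-channel statistics that AdaIN treats as style."""
    mean = feat.mean(axis=(1, 2), keepdims=True)
    std = feat.std(axis=(1, 2), keepdims=True)
    return (feat - mean) / (std + eps)

def content_distance(content_feat: np.ndarray, out_feat: np.ndarray) -> float:
    """Hypothetical CAS-like proxy: mean squared distance between
    style-normalized feature maps (lower = content better preserved)."""
    diff = strip_style_stats(content_feat) - strip_style_stats(out_feat)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
ref, out = rng.normal(size=512), rng.normal(size=512)
print("style similarity:", style_similarity(ref, out))
feat = rng.normal(size=(64, 16, 16))
print("same content, new channel stats:", content_distance(feat, 2.0 * feat + 3.0))
print("unrelated content:", content_distance(feat, rng.normal(size=(64, 16, 16))))
```

Under this proxy, an output that differs from the content image only in channel-wise statistics scores near zero, which captures the intuition of measuring content retention after style has been factored out.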

In conclusion, the research on the CSGO model and the IMAGStyle dataset represents a significant milestone in style transfer. The explicit decoupling of content and style features in the CSGO framework addresses many of the challenges of the earlier methods, offering a robust solution that enhances both the quality and efficiency of style transfers. The large-scale IMAGStyle dataset enables the model to perform these tasks effectively, providing a valuable resource for future research. These innovations have led to a model that achieves state-of-the-art results and sets a new benchmark for style transfer technology.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post CSGO: A Breakthrough in Image Style Transfer Using the IMAGStyle Dataset for Enhanced Content Preservation and Precise Style Application Across Diverse Scenarios appeared first on MarkTechPost.
