Fully Quantized Training (FQT) speeds up deep neural network training by converting activations, weights, and gradients into lower-precision formats. Quantization makes training more efficient because low-precision arithmetic is faster to compute and uses less memory. The goal of FQT is to reduce numerical precision as far as possible while preserving the effectiveness of training. To explore these limits, researchers have studied whether 1-bit FQT is viable.
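
To make 1-bit quantization concrete, here is a minimal sketch of one common scheme. Every value in a tensor is stochastically rounded to either the tensor's minimum or its maximum, so each element is stored as a single bit plus two shared floats. This is an illustrative assumption, not necessarily the quantizer used in the paper. The function names `quantize_1bit` and `dequantize_1bit` are hypothetical.

```python
import random
from typing import List, Tuple

def quantize_1bit(x: List[float], rng: random.Random) -> Tuple[List[int], float, float]:
    """Stochastically round each value to the tensor's min or max (one bit per element).

    Returns the 1-bit codes plus the (lo, scale) pair needed to dequantize.
    """
    lo, hi = min(x), max(x)
    scale = hi - lo
    if scale == 0.0:
        return [0] * len(x), lo, 0.0  # constant tensor: every value equals lo
    codes = []
    for v in x:
        p = (v - lo) / scale                         # relative position in [0, 1]
        codes.append(1 if rng.random() < p else 0)   # P(code = 1) = p keeps the expectation equal to v
    return codes, lo, scale

def dequantize_1bit(codes: List[int], lo: float, scale: float) -> List[float]:
    """Map each bit back to lo (code 0) or lo + scale (code 1)."""
    return [lo + c * scale for c in codes]

if __name__ == "__main__":
    rng = random.Random(0)
    grads = [-1.0, 0.25, 0.5, 2.0]  # hypothetical gradient values
    codes, lo, scale = quantize_1bit(grads, rng)
    print(codes, dequantize_1bit(codes, lo, scale))
```

Stochastic rounding is chosen here because it keeps each quantized value unbiased: its expectation equals the original value. The cost is extra variance, and that variance is the quantity the convergence analysis focuses on.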

The study begins with a theoretical analysis of FQT, focusing on widely used optimizers such as Adam and Stochastic Gradient Descent (SGD). A key finding is that how well FQT converges depends heavily on the variance of the gradients. In other words, variation in gradient values can determine whether training succeeds, especially at low bitwidths. Understanding this link between gradient variance and convergence is essential for building more efficient low-precision training techniques.
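
A standard property of unbiased stochastic rounding shows why bitwidth and variance are linked. Stochastic rounding is assumed here as the quantizer; the paper's own analysis may use a different one. Rounding a value onto a uniform grid with 2^b - 1 intervals over a range R uses a spacing of Δ = R / (2^b - 1). The rounded value stays unbiased but gains variance f(1 - f)Δ², where f is the value's fractional position between neighboring grid points. That variance is at most Δ²/4. The hypothetical helpers below compute both quantities.

```python
import math

def stochastic_rounding_variance(v: float, lo: float, hi: float, bits: int) -> float:
    """Exact variance added by unbiased stochastic rounding of v onto a b-bit grid over [lo, hi]."""
    intervals = 2 ** bits - 1          # a b-bit grid has 2**bits levels
    delta = (hi - lo) / intervals      # grid spacing
    t = (v - lo) / delta               # position in units of delta
    frac = t - math.floor(t)           # probability of rounding up
    return delta ** 2 * frac * (1.0 - frac)

def variance_bound(lo: float, hi: float, bits: int) -> float:
    """Worst-case added variance (value halfway between two grid points): delta**2 / 4."""
    delta = (hi - lo) / (2 ** bits - 1)
    return delta ** 2 / 4.0
```

Over the same range, the worst-case added variance at 1 bit is 255² = 65,025 times larger than at 8 bits. This helps explain why 1-bit training is so sensitive to gradient variance.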

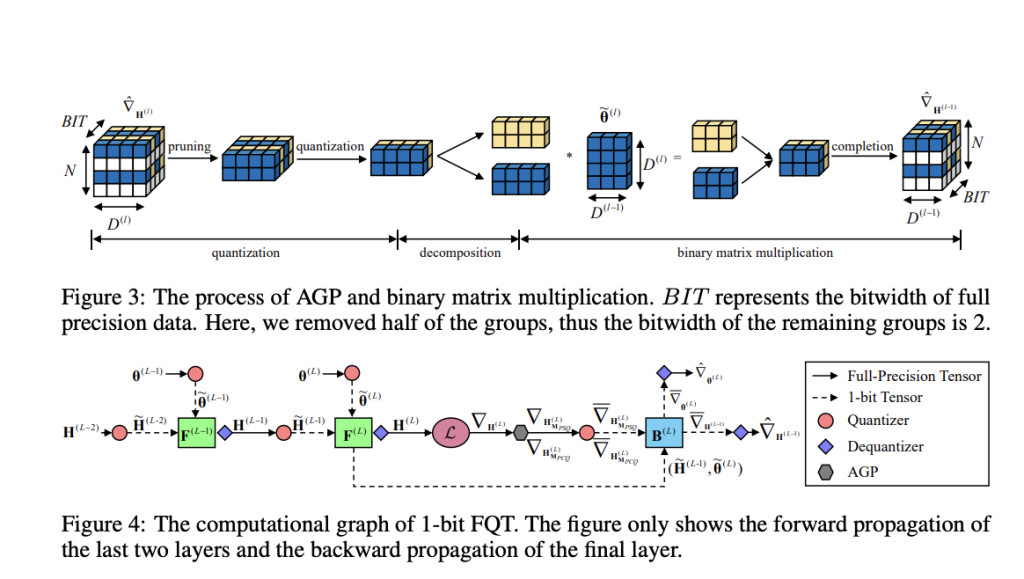

Building on this theory, the researchers introduced a new approach called Activation Gradient Pruning (AGP). AGP exploits the fact that not all gradients are equally important. It identifies and prunes the less informative gradients, those that contribute less to the model's learning, and reallocates the freed resources to increase the precision of the most important ones. This reduces the harmful effect of gradient variance and helps keep training stable even at very low precision.
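
The prune-and-reallocate idea can be sketched as follows. The details are assumptions for illustration, not the paper's algorithm:

* Gradients are split into equal-sized groups, for example one per sample.
* A group's range (max minus min) serves as a proxy for how informative it is.
* Pruned groups are simply zeroed.
* The bit budget freed by pruning goes to the surviving groups, which are then quantized with stochastic rounding at the higher bitwidth.

The names `prune_and_quantize` and `quantize_group` are hypothetical.

```python
import math
import random
from typing import List, Tuple

Group = List[float]

def quantize_group(g: Group, bits: int, rng: random.Random) -> Group:
    """Unbiased stochastic rounding of one group onto a uniform grid of 2**bits levels over its own range."""
    lo, hi = min(g), max(g)
    if hi == lo:
        return list(g)  # constant group: nothing to quantize
    intervals = 2 ** bits - 1
    delta = (hi - lo) / intervals
    out = []
    for v in g:
        t = (v - lo) / delta                         # position in grid units
        base = math.floor(t)
        step_up = 1 if rng.random() < t - base else 0
        out.append(lo + min(base + step_up, intervals) * delta)
    return out

def prune_and_quantize(groups: List[Group], keep: int, base_bits: int,
                       rng: random.Random, max_bits: int = 8) -> Tuple[List[Group], int]:
    """Hypothetical AGP-style step on equal-sized groups.

    Keeps the `keep` groups with the largest range, zeroes the rest, and spreads
    the total budget (base_bits per element across all groups) over the survivors.
    """
    if not 1 <= keep <= len(groups):
        raise ValueError("keep must be between 1 and the number of groups")
    ranges = [max(g) - min(g) for g in groups]
    ranked = sorted(range(len(groups)), key=lambda i: ranges[i], reverse=True)
    kept = set(ranked[:keep])
    bits = min(max_bits, (base_bits * len(groups)) // keep)
    result = [quantize_group(g, bits, rng) if i in kept else [0.0] * len(g)
              for i, g in enumerate(groups)]
    return result, bits
```

With four groups, a 1-bit average budget, and two groups kept, each surviving group gets 2 bits. The sketch ignores the small overhead of storing each group's range. Zeroing pruned groups also biases the gradient. An unbiased variant would prune stochastically and rescale the survivors, which this sketch omits.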

Alongside AGP, the researchers also proposed Sample Channel joint Quantization (SCQ). SCQ uses different quantization strategies for computing weight gradients and activation gradients. Tailoring the strategy to each kind of gradient ensures that both can be computed efficiently on low-bitwidth hardware, which significantly improves training efficiency.
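
One plausible reason SCQ treats the two gradients differently comes from where quantization scales can sit in a low-bit matrix multiply. This is our interpretation, not code from the paper. Take a linear layer whose output gradient dY has shape samples × output channels:

* The activation gradient dX = dY W sums over output channels. A per-sample scale on dY is therefore constant inside each dot product and can be applied after an integer matmul.
* The weight gradient dW = dY^T X sums over samples. Per-sample scales would vary inside that sum, so per-channel scales, which factor out, are used instead.

The sketch assumes symmetric round-to-nearest quantization with bits >= 2 and quantizes only dY. All function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain triple-loop matrix product (a is n x k, b is k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]

def quantize_rows(m: Matrix, bits: int) -> Tuple[List[List[int]], List[float]]:
    """Symmetric round-to-nearest quantization with one scale per row (requires bits >= 2)."""
    qmax = 2 ** (bits - 1) - 1
    codes, scales = [], []
    for row in m:
        peak = max(abs(v) for v in row)
        s = peak / qmax if peak > 0 else 1.0
        scales.append(s)
        codes.append([round(v / s) for v in row])
    return codes, scales

def grad_input(grad_out: Matrix, weight: Matrix, bits: int) -> Matrix:
    """dX = dY @ W with per-sample (row) scales on dY.

    The product sums over output channels, so each sample's scale is constant
    inside its own dot products and is applied after the low-bit product.
    """
    codes, scales = quantize_rows(grad_out, bits)
    raw = matmul(codes, weight)
    return [[scales[i] * v for v in row] for i, row in enumerate(raw)]

def grad_weight(grad_out: Matrix, x: Matrix, bits: int) -> Matrix:
    """dW = dY^T @ X with per-channel (column) scales on dY.

    This product sums over samples, so per-sample scales would change inside
    each dot product; per-channel scales stay constant and factor out instead.
    """
    codes_t, scales = quantize_rows(transpose(grad_out), bits)  # rows of dY^T are channels
    raw = matmul(codes_t, x)
    return [[scales[c] * v for v in row] for c, row in enumerate(raw)]
```

In a real FQT kernel, W and X would be quantized too, and the rescaling would be applied to the integer accumulator output. They are left in floating point here to keep the example short.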

To validate their approach, the team built a framework that allows their algorithm to be applied in real-world settings. They evaluated it by training popular models such as VGGNet-16 and ResNet-18 on several datasets. On average, the algorithm improved accuracy by about 6% over conventional per-sample quantization. Training was also about 5.13 times faster than full-precision training.

In conclusion, this study is a major step forward for fully quantized training. It pushes down the minimum numerical precision that training can tolerate without sacrificing performance. The work could eventually lead to even more efficient neural network training techniques, particularly if low-bitwidth hardware becomes more widely used.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Research from China Introduces 1-Bit FQT: Enhancing the Capabilities of Fully Quantized Training (FQT) to 1-bit appeared first on MarkTechPost.