The field of large language models (LLMs) has seen tremendous advancements, particularly in expanding their memory capacities to process increasingly extensive contexts. These models can now handle inputs with over 100,000 tokens, allowing them to perform highly complex tasks such as generating long-form text, translating large documents, and summarizing extensive data. However, despite these advancements in processing capability, there remains a critical limitation in generating outputs of equivalent length. Most current models struggle to produce coherent texts that exceed 2,000 words, which poses significant challenges for tasks that require comprehensive and detailed content generation.

One of the key problems these models face is their inability to maintain coherence and relevance in extended outputs. While LLMs have been fine-tuned on large datasets, these datasets often contain only short outputs. As a result, models are inherently limited by the examples they have encountered during training, capping their maximum output length at around 2,000 words. This limitation becomes particularly evident when users require detailed content, such as writing research papers, generating lengthy reports, or creating in-depth analyses. The inability to exceed this word limit without losing coherence or repeating information has been a significant hurdle in applying LLMs in fields requiring extensive written content.

Existing approaches to overcoming this limitation have yet to address the root cause of the problem. Although some methods, such as iterative fine-tuning and synthesized training data, have been employed, they have not extended output lengths significantly. These methods still rely on datasets whose outputs do not exceed roughly 2,000 words, thereby inheriting the same constraint. This means that even with advanced fine-tuning techniques, the models cannot generate longer texts without running into issues like content truncation or a lack of coherence in the generated text.

A research team from Tsinghua University and Zhipu AI introduced a solution known as AgentWrite. This agent-based pipeline is designed to decompose ultra-long writing tasks into smaller, more manageable subtasks, enabling existing LLMs to generate coherent outputs that exceed the 20,000-word mark. By breaking down the task, AgentWrite allows off-the-shelf models to manage and generate long-form content without compromising quality. This method represents a significant departure from traditional approaches that have attempted to stretch output length merely by fine-tuning on existing short-output datasets.

AgentWrite begins by crafting a detailed writing plan based on the user’s input. This plan outlines the structure of the text and specifies the target word count for each paragraph or section. Following this plan, the model generates content for each part sequentially, ensuring the output remains coherent and logically structured. The research team validated AgentWrite’s effectiveness through experiments, demonstrating its capability to produce high-quality outputs of up to 20,000 words. This approach leverages the inherent capabilities of existing LLMs, thus avoiding the need to develop entirely new models, which can be both time-consuming and resource-intensive.
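The plan-then-write flow described above can be sketched in a few lines of Python. This is only an illustrative outline, not the researchers' implementation: the `llm` callable, the prompt wording, and the `main point | word count` plan format are all assumptions made for the example.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt to generated text.
LLM = Callable[[str], str]


def make_plan(llm: LLM, instruction: str) -> List[Tuple[str, int]]:
    """Step 1: ask the model for an outline with a target word count per section."""
    prompt = (
        "Break the writing task below into sections. "
        "Write one line per section as '<main point> | <word count>'.\n\n"
        f"Task: {instruction}"
    )
    plan = []
    for line in llm(prompt).splitlines():
        # Keep only lines that follow the assumed 'main point | word count' format.
        match = re.match(r"^\s*(.+?)\s*\|\s*(\d+)\s*$", line)
        if match:
            plan.append((match.group(1), int(match.group(2))))
    return plan


def write_sections(llm: LLM, instruction: str, plan: List[Tuple[str, int]]) -> str:
    """Step 2: write the sections one after another, showing the model the text so far."""
    written: List[str] = []
    for point, target_words in plan:
        so_far = "\n\n".join(written)
        prompt = (
            f"Task: {instruction}\n\n"
            f"Text written so far:\n{so_far}\n\n"
            f"Write only the next section. Main point: {point}. "
            f"Target length: about {target_words} words."
        )
        written.append(llm(prompt).strip())
    return "\n\n".join(written)


def agent_write(llm: LLM, instruction: str) -> str:
    """Run both steps: plan first, then sequential section writing."""
    return write_sections(llm, instruction, make_plan(llm, instruction))
```

Passing the text written so far into each section prompt is one simple way to keep later sections consistent with earlier ones; a real pipeline would also need to handle malformed plans and context-length limits.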

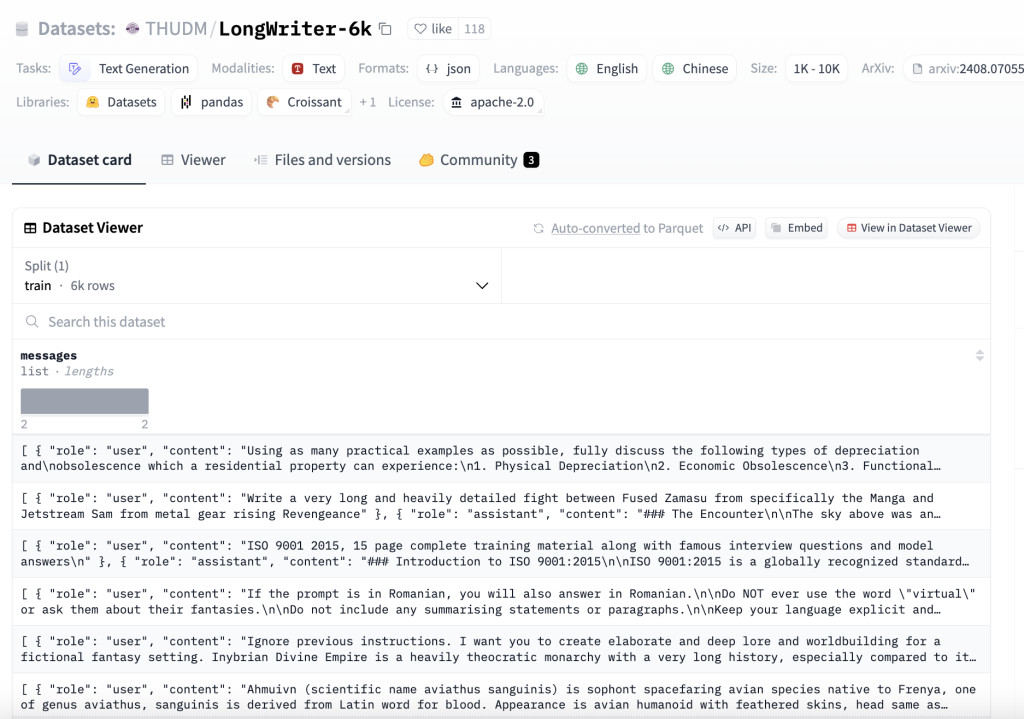

The researchers further enhanced this method by introducing the LongWriter-6k dataset, comprising 6,000 supervised fine-tuning (SFT) data entries with output lengths ranging from 2,000 to 32,000 words. Incorporating this dataset into the training of LLMs allows the models to generate well-structured outputs that exceed 10,000 words. The dataset addresses the scarcity of long-output examples in existing SFT datasets and scales the output length while maintaining the quality of the generated text. The team also developed a benchmark named LongBench-Write, specifically designed to evaluate the ultra-long generation capabilities of these models. The 9-billion parameter model trained using this approach achieved state-of-the-art performance on LongBench-Write, surpassing even larger proprietary models.
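To make the "scarcity of long-output examples" concrete, one could measure what share of an SFT dataset's responses reach a given length. The helper below is a hypothetical sketch: the function name, the whitespace-based word count, and the 2,000-word default threshold are assumptions for illustration, not part of the published tooling.

```python
from typing import Iterable


def long_output_share(outputs: Iterable[str], min_words: int = 2000) -> float:
    """Return the fraction of responses with at least `min_words` words.

    Words are counted by splitting on whitespace, a rough approximation.
    """
    outputs = list(outputs)
    if not outputs:
        return 0.0
    long_count = sum(1 for text in outputs if len(text.split()) >= min_words)
    return long_count / len(outputs)
```

Running this over the response column of a typical SFT dataset and then over a long-output dataset would show how differently the two are distributed around the 2,000-word mark.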

The impact of this research is significant, as it demonstrates that the primary factor limiting the output length of long-context LLMs is the constraint imposed by the SFT data. By introducing AgentWrite and LongWriter-6k, the researchers have effectively unlocked the potential of existing LLMs to generate ultra-long outputs. This method extends the output window size of these models to over 10,000 words and ensures that the output quality is not compromised. Direct Preference Optimization (DPO) further enhances the model’s ability to follow long writing instructions and generate higher-quality content.

In conclusion, the introduction of AgentWrite and LongWriter-6k offers a practical and scalable solution for generating ultra-long outputs, paving the way for the broader application of LLMs in areas that require extensive written content. By overcoming the 2,000-word barrier, this work opens up new possibilities for using LLMs in academic writing, detailed reporting, and other fields where long-form content is essential.

Check out the Dataset Card. All credit for this research goes to the researchers of this project.

The post LongWriter-6k Dataset Developed Leveraging AgentWrite: An Approach to Scaling Output Lengths in LLMs Beyond 10,000 Words While Ensuring Coherent and High-Quality Content Generation appeared first on MarkTechPost.
