The usage of multimedia (images and documents) data types has become common across various modern-day applications and is continuously increasing. This type of data requires considerable database storage space and cost. Moreover, this data is present in database backups and snapshots, further adding to the total storage space required. In this post, we demonstrate an architecture pattern in which we migrate BLOB column data from Amazon Relational Database Service (Amazon RDS) for Oracle tables to Amazon Simple Storage Service (Amazon S3). This solution allows you to choose the specific columns and rows containing BLOB data that you want to migrate to Amazon S3. It uses Amazon S3 integration, which enables you to copy data between an RDS for Oracle instance and Amazon S3 using SQL.

Benefits of using Amazon S3 integration with Amazon RDS for Oracle

The Amazon S3 integration with Amazon RDS for Oracle feature acts as a bridge to copy data between an RDS for Oracle instance and Amazon S3 and vice versa using built-in SQL functions. The following are some use cases that demonstrate the benefits of using the Amazon S3 integration with Amazon RDS for Oracle:

Database storage optimization

Amazon S3 is a service for storing object data that offers industry leading durability, availability, performance, security, and virtually unlimited scalability at very low costs. In cases where your BLOB data can be permanently deleted from a database and moved to Amazon S3, you can optimize the storage costs. This also has a cascading effect on other copies of this data, like read replicas and database backups, thereby reducing storage costs even further. You can further optimize storage costs in Amazon S3 by applying Amazon S3 lifecycle policies depending on data usage.

Secured data exchange

Your application at times may need to share certain files with different teams for auditing or other purposes. By copying this data to Amazon S3, you can give secured access to selected application users to the required object data in Amazon S3 without giving them permissions within the database. You might also need to receive data from other teams and applications, which they can upload to Amazon S3. With the Amazon S3 integration feature, you can copy that data from Amazon S3 into your RDS instance and upload it to the appropriate tables.

In this post, we focus on database storage optimization by migrating existing BLOB data in an RDS for Oracle database to Amazon S3. The following sections elaborate the solution details.

Solution overview

To free up database storage space, you can migrate existing BLOB data in your database to Amazon S3 and only store the corresponding object metadata and Amazon S3 URL in the database. The solution to do so consists of three high-level steps:

Copy existing BLOB data from the RDS for Oracle database to Amazon S3.

Modify your application so it can read and write from Amazon S3 instead of the database for BLOB data.

Delete the data that has been migrated to Amazon S3 from the database to free up space.

If a particular step is not feasible due to some dependencies, you can use a subset of these recommendations that serve your needs.

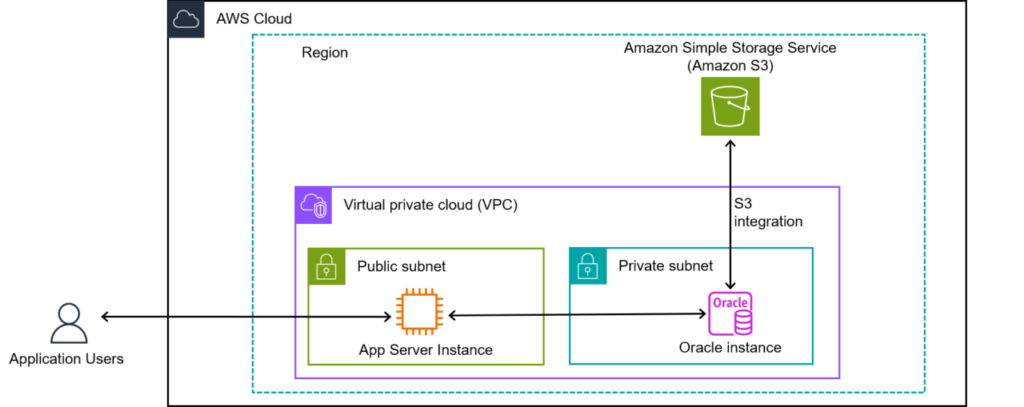

The following diagram illustrates our solution architecture, which consists of an RDS for Oracle instance, typically in a private subnet. The application users interact with the web server, which in turns interacts with the database. Direct user access to the database is restricted as a best practice. The database integrates with an S3 bucket in the same AWS Region, so that objects transfer between the two seamlessly using Amazon S3 integration.

The following sections provide the detailed steps to establish the integration between Amazon S3 and the RDS for Oracle instance to copy object files across these services.

Prerequisites

Before you start the data transfer, complete the following steps to create the necessary resources (if they don’t already exist) and configure them:

Create an S3 bucket in the same Region as the RDS for Oracle DB instance.

Connect to your DB instance with an SQL client.

Create an AWS Identity and Access Management (IAM) role for your RDS for Oracle instance to access Amazon S3, and attach the IAM role to the RDS for Oracle instance.

Associate the S3_INTEGRATION option to your DB instance to integrate Amazon RDS for Oracle with Amazon S3.

Copy existing BLOB data from the RDS for Oracle DB instance table to Amazon S3

In this section, we demonstrate how to extract BLOB data from your existing tables and upload it to Amazon S3 using the Amazon S3 integration. For this post, we use the tablesample_employee_uploadsin an existing database, which has three columns (File_ID INTEGER, File_name varchar2(150), and File_data_blob). The column File_data_blob contains BLOB data. Complete the following steps to extract this data from the table and upload it to Amazon S3:

Connect to your RDS for Oracle database using your preferred SQL client.

Create a directory on your RDS instance where you want extract the BLOB column data into files. The following command creates a directory in your RDS for Oracle instance:

You can verify the newly created directory path using the following statement:

You can view the files and contents of the directory with the following statement:

Next, you extract the BLOB data into its corresponding object files. Depending on the file type, it could be a PDF document, image, or some other object file. The following sample code extracts the BLOB data into JPEG images. For the sake of convenience and reusability, we define the BLOB-to-file conversion utility as a procedure. This uses the inputs like BLOB data, directory name on your instance, and file name, and produces an output in the form of an object file in the given location. We can call this later in a different script and get our files extracted from the respective tables.

This is a just one pattern of sample code for data extraction, but there could be other code patterns as well that you can use or modify for the same output.

Create a reusable procedure to extract the data from the BLOB column:

Now that the procedure is compiled, run the following script to extract the BLOB data from the sample table sample_employee_uploads. This script uses a cursor so that you can filter the records that meet the criteria for extracting selective BLOB data vs. the entire table. Next, you upload the extracted files (for example, SampleFilename.jpg) to your desired S3 bucket (YourBucketName) with the S3 prefix (ImagesExtractedFromTable) with the help of the function rdsadmin.rdsadmin_s3_tasks.upload_to_s3. As the objects are uploaded, you capture their corresponding Amazon S3 URL in a new column added to the table. See the following code:

In the preceding script, we updated the S3_URL for all the records that have processed. This gives an idea of how many records were successfully copied to Amazon S3. If you want to capture additional details like timestamp of processing, you can add additional status columns in the same table or a different table as required. This can help you keep track of records that are copied successfully when you choose to do this on a periodic basis.

You can tally the counts of S3_url in the database with the number of objects in your S3 bucket. You can also verify a few sample objects in Amazon S3 to confirm the files look good.

The following query gives you the count of S3_url populated in the table:

To get the count of objects in the S3 bucket, you need to sign in to the Amazon S3 console and navigate to your bucket. When you open your bucket to view objects, you get a count of objects displayed in the overview.

Alternatively, if you have the AWS Command Line Interface (AWS CLI) configured on your machine, you can use the following AWS CLI command to check the count of objects in your bucket:

This command lists all objects recursively and then counts the number of lines in the output, which corresponds to the number of objects.

You can delete the extracted object data file in your DB instance directory with the following scripts:

This completes your first step, in which you copied BLOB data to Amazon S3 and captured its metadata in the database so that the objects can be referenced from Amazon S3. The following section elaborates how you can modify the application to read, write, and store any new incoming BLOB data directly to Amazon S3 instead of the database.

Modify the application to read and write BLOB data from Amazon S3

As the second step of the solution, you modify your application UI layer architecture to read and write BLOB data directly from Amazon S3 and only its object URL and metadata from the database. This eliminates the need for storing the BLOB data in the database, making it suitable to delete. After you modify the UI layer, the database will not receive new BLOB data—it will be durably stored in Amazon S3. You need to change the application UI layer to implement the data flow as illustrated in the following diagrams for reads and writes.

The following diagram illustrates the data flow for reads.

The flow consists of the following steps:

A user sends a request to view a record.

The application pulls the metadata and its corresponding object URL from the database.

It fetches the object from Amazon S3 based on the URL retrieved.

It presents the entire record to the user for viewing.

The following diagram illustrates the data flow for writes.

The flow consists of the following steps:

A user enters information from the application.

The BLOB objects are stored in Amazon S3.

Their reference URL is collected.

The reference URL and other metadata are sent for writing to the database.

The database only contains the Amazon S3 reference URL of the object, thereby saving on database storage.

In this post, we focused on the architectural modification for the data flow in the UI layer to read and write BLOB data from Amazon S3. Because the UI layer technology may vary based on the application, the actual UI code change snippets are out of scope of this post.

So far, you have copied the existing BLOB data in the database to Amazon S3 using the Amazon S3 integration for Amazon RDS for Oracle and modified the application architecture to read and write from Amazon S3. Now you can free up data from the database, as demonstrated in following section.

Delete BLOB data from the RDS for Oracle database

Before you delete the data from the database, you can verify if there is still any data in the table that needs to be copied to Amazon S3:

If there are any records returned, you need to rerun the first step of this solution that copies over the data to Amazon S3 and populates its object URL in the database. Repeat the verification steps to validate if the newly copied data looks good.

After you verify the data copied to Amazon S3, you can delete the corresponding BLOB data from the table:

Use the MOVE command move the table to a different location within the same tablespace or to a different tablespace. This command also reorganizes the table’s storage, which can help reclaim space by rewriting data in a contiguous manner, reducing fragmentation and potentially improving I/O performance. After shrinking the table, you should rebuild the indexes to optimize performance. See the following code:

Run the output of the following queries to rebuild indexes and update statistics for the Oracle optimizer to have accurate information about the table and its indexes:

Find out where LOB segments are located by querying the DBA_SEGMENTS view:

Confirm if your tablespace is empty and no longer needed so you can drop it:

If the tablespace is empty, you can drop it:

Use the following command to check the table space usage and resize if necessary:

If there is free space in the tablespace, you can resize the data files to reclaim unused space:

You have now optimized the storage space required for your data on the instance. You can now downsize the instance storage attached to your RDS instance to achieve cost savings. To achieve this, take a snapshot of your existing instance. Restore the snapshot to a new DB instance, with a reduced storage volume size as required. Verify the new instance and the data, and make necessary changes in the application to point to the new database connection string. You can test it thoroughly end to end and then delete the old instance because it’s no longer required.

You have successfully freed up storage space from your RDS for Oracle database and achieved cost savings by reducing the instance storage size.

Conclusion

In this post, we showed you the use cases and benefits of migrating BLOB data from an RDS for Oracle database to Amazon S3. We demonstrated the steps to set up the required integration, prerequisites, and configuration to test the data flow between Amazon RDS for Oracle and Amazon S3. You can use this solution to migrate all or selective data based on your specific use case, share data securely with verified stakeholders, and optimize storage space and costs. You can optimize your application architecture to directly reference Amazon S3 for object storage to keep the database even leaner.

To learn more about RDS for Oracle DB instance management tasks, refer to Performing miscellaneous tasks for Oracle DB instances. For a deep dive into the Amazon S3 integration, see Amazon S3 integration.

Let us know if you have any comments or questions. We value your feedback!

About the Author

Rajul Savla is a Solutions Architect at AWS, and works closely with customers towards developing scalable solutions using AWS. He has experience in creating, debugging, and running relational database workloads using AWS services. He enjoys working on technical challenges and is passionate about learning from and sharing knowledge with his teammates and AWS customers.

Source: Read More