This post is co-written by Krishnan Narayan, Distinguished Engineer at Palo Alto Networks.

Palo Alto Networks’ Prisma Cloud is a leading cloud security platform protecting enterprise cloud adoption across code-to-cloud workflows. Palo Alto Networks has customers operating in the cloud at scales that generate tens of thousands of updates per second, and standard alerting systems would generate too much noise. Prisma Cloud must be able to analyze both near-real-time signals and signals generated by analyzing patterns across resources and time. Importantly, it must also correlate and intelligently stitch together those groups of signals to provide an actionable and manageable set of signals to its customers. This solution is offered to customers as the Infinity Graph on the Prisma Cloud platform, uncovering the power of combining best-in-class security modules. Palo Alto Networks chose Amazon Neptune Database and Amazon OpenSearch Service as the core services to power its Infinity Graph.

In this post, we discuss the scale Palo Alto Networks requires from these core services and how we were able to design a solution to meet these needs. We focus on the Neptune design decisions and benefits, and explain how OpenSearch Service fits into the design without diving into implementation details.

Amazon Neptune and Amazon OpenSearch Service overview

Neptune is a fast, reliable, fully managed graph database service that makes it straightforward to build and run applications that work with highly connected datasets. The core of Neptune is a purpose-built, high-performance graph database engine. This engine is optimized for storing billions of relationships and querying the graph with milliseconds latency. Neptune Database supports the popular property-graph query languages Apache TinkerPop Gremlin and openCypher, and the W3C’s RDF query language, SPARQL. Neptune powers graph use cases such as recommendation engines, fraud detection, knowledge graphs, drug discovery, and network security.

Amazon OpenSearch Service makes it easy for you to perform interactive log analytics, real-time application monitoring, website search, and more. OpenSearch is an open source, distributed search and analytics suite derived from Elasticsearch. Amazon OpenSearch Service offers the latest versions of OpenSearch, support for 19 versions of Elasticsearch (1.5 to 7.10 versions), as well as visualization capabilities powered by OpenSearch Dashboards and Kibana (1.5 to 7.10 versions). Amazon OpenSearch Service currently has tens of thousands of active customers with hundreds of thousands of clusters under management processing trillions of requests per month. For more information, see the OpenSearch documentation.

Amazon OpenSearch Service provisions all the resources for your OpenSearch cluster and launches it. It also automatically detects and replaces failed OpenSearch Service nodes, reducing the overhead associated with self-managed infrastructures. You can scale your cluster with a single API call or a few clicks on the OpenSearch Service console.

Solution overview

Prisma Cloud handles more than a billion assets, or workloads, that are continuously monitored across its customer base, available in at least 17 AWS Regions worldwide. The platform also deals with more than 500 million cloud audit events and over 10 billion flow log records per day to identify incidents and uncover potential risks in the cloud. On the code side, the code application security (CAS) modules cover hundreds of repositories and millions of events a day on code changes that invoke a scan on the pipeline. We also scan hundreds of millions of container images on a regular basis as part of the Cloud Workload Protection (CWP) module.

Prisma Cloud is optimized for risk visibility and remediation, so the ‘time to visibility’ is key. These events and signals power downstream applications that add security value, so the platform must handle several tens of thousands of updates per second on the assets and workloads being monitored for our customers, and we need to invest in technologies that can handle that scale. Further complicating this workload, many assets are ephemeral because of events like autoscaling and on-demand jobs, so the resources identified may not exist for long. Infinity Graph connects the dots between data points and resources, enabling security teams to visualize and analyze complex, interconnected security incidents more efficiently and to prioritize and act on them immediately.

We accomplish all this in the Prisma Cloud platform using a domain-driven architecture with prescriptive API integration patterns between modules and with third-party clients. We define a strong domain model with assets, findings, vulnerabilities, and relationships so that modules can define, update, report, and close the objects they discover that belong to those entities. The relationship domain is the key element, forming the connective tissue between the assets and their reported findings and security issues. With code-to-cloud security, we follow the path from code platforms generating raw code, through continuous integration and delivery (CI/CD) systems producing deployment artifacts from that code, all the way to workloads running in the cloud. The scope and accuracy of the relationships discovered become the key facets of the Infinity Graph.

The following use cases are very difficult, if not impossible, to deliver without our Infinity Graph approach:

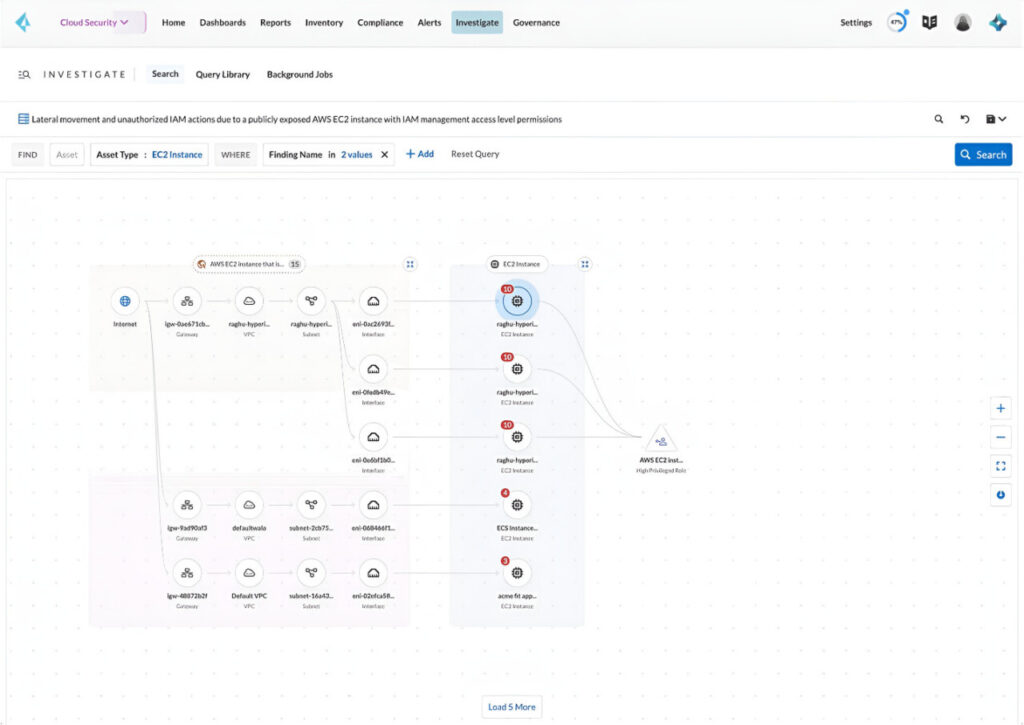

Free form search and investigate – We enable threat hunters to search their environments for new threats and risks and enable them to save those as policies for continuous monitoring. Without a system that can allow relationship traversal across assets, filtering, sorting and aggregation across numerous attributes of the domain objects, and full text search support for key attributes of the domains, we wouldn’t be able to deliver the power of the combined platform. This screenshot shows how our tool enables threat hunters to perform this analysis.

Code-to-cloud traceability – The platform graph engine can freely expand to support relationships across the code-to-cloud spectrum, for example, tracing a code repo all the way to the container in the cloud. Having a foundational and flexible Infinity Graph enables inferences like code-to-cloud tracing (see the following screenshot). This was a key use case as Prisma Cloud established the vision for its platform.

Fix in code compared to fix in cloud – Remediation in the cloud is generally best addressed by code where a repeatable infrastructure pattern is followed. For efficiency and security, codifying deployments using Terraform or AWS CloudFormation templates is key. Infinity Graph’s ability to trace to code enables innovative features like being able to fix the issue in code permanently as opposed to adding a one-off fix in the cloud (as shown in the following screenshot). The Prisma Cloud CAS module enables the automation of the fix given its deep visibility into the code base. This feature showcases the powerful nature of what a truly integrated code-to-cloud platform can offer. This image shows how Infinity Graph allows both fix in code and fix in cloud remediation.

The architecture to achieve such advanced capabilities focuses on combining the database capabilities of Neptune with the analytical and search capabilities of OpenSearch Service, but also uses other AWS services such as Amazon Elastic Kubernetes Service (Amazon EKS), AWS Glue, and Amazon Managed Workflows for Apache Airflow (Amazon MWAA). The following diagram demonstrates the full architecture of Infinity Graph. On the data ingestion side, the raw data flows through several extract, transform, and load (ETL) processes in AWS Glue and Kubernetes (described in detail in the next section). On the consumption side, the Graph API is the entry point for all APIs and clients. Requests flow into the query engine, which queries OpenSearch Service and Neptune, and returns the results to the caller. We discuss this process in more detail later in this post.

Data ingestion

The modules for code-to-cloud security collect data from the customer environment to produce deep security inputs for assets, findings and vulnerability information. Modules also exist to provide relationship information but we focus here on our graph entity model instead of topics like normalization and convergence between modules.

In building our Infinity Graph, we faced an important design decision: whether to use a single data store for everything or rely on multiple purpose-built databases. The following items factored into our decision:

Looking at our production data, we found that our graphs are much sparser than typical graph database use cases. For some customers, over 60% of the dataset isn’t connected, and a graph would provide minimal benefit.

Graph databases are optimized for relationships between data elements and not large-scale attribute storage within single elements. A distributed search and analytics suite like OpenSearch Service would be better suited to manage this data.

In addition to graph traversals, we also needed support for use cases like aggregations and full text search at an extremely large scale, which OpenSearch Service was well proven to handle.

As a result, our architecture uses Neptune to index attributes necessary for our high-performance traversals and stores those attributes and aggregations not important to the traversals within OpenSearch Service. The resulting architecture focuses on domain-centric platform objects, each serving several dedicated use cases and managing their own data to achieve those use cases. The data in each domain is brought together through a set of ETL pipelines that the platform manages at scale. This ETL process consists of three stages: hydration of platform objects to our delta lake, delta live table upkeep, and hydration of search and graph indexes.

Stage 1: Hydration of platform objects to the in-house delta lake

We use hydration pipelines powered by AWS Glue to connect to the domain objects’ data stores and extract data into delta format to Amazon Simple Storage Service (Amazon S3). Where required, a dedicated replica cluster was set up to offload the impact of this hydration from the mainline production traffic. The hydration jobs were split into a seed (a one-time/initial dump) model and a delta (incremental changes) model. The goal was to use the seed model only at customer onboarding and then only process deltas moving forward, either by listening to the datastores’ CDC output or querying the data store based on time deltas.

After the first stage is complete, resulting in the data being hydrated in Amazon S3, the Airflow managed job starts the second stage: delta live table upkeep.

Stage 2: Delta live table upkeep

In this stage, we use the delta file format and specifications to implement a custom delta live table management job. Like stage 1, this is implemented as a Spark job on AWS Glue. This live table flattens the entire set of attributes across the asset, finding, and vulnerability domains by correlating on the asset ID. The live table handles any mutation to the entities at scale.

Data is partitioned in Amazon S3 by customer using key prefixes, and then by account ID (generally the next partitioning candidate after the customer ID), which breaks each partition into smaller chunks. The delta metadata table uses this attribute to help with load balancing as mutations are made to specific segments of the files based on received updates.
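As an illustration only (this is not the production layout), the partitioning idea can be sketched as a function that maps each record to a key prefix built from the customer ID and then the account ID. The `AssetRecord` type, the `live-table` root, and the Hive-style `name=value` prefix segments are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssetRecord:
    # Hypothetical record shape; the real live table carries many more attributes.
    customer_id: str
    account_id: str
    asset_id: str


def partition_prefix(record: AssetRecord, root: str = "live-table") -> str:
    """Build the S3 key prefix of the partition that holds this record.

    Customer ID is the first partition level and account ID the second.
    The 'name=value' segment style is an assumption, not the actual layout.
    """
    return f"{root}/customer_id={record.customer_id}/account_id={record.account_id}/"


def group_by_partition(records):
    """Group incoming mutations so each partition is touched once per batch."""
    groups = {}
    for record in records:
        groups.setdefault(partition_prefix(record), []).append(record)
    return groups
```

Grouping mutations by prefix before applying them means an update only rewrites the file segments under the affected customer and account.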

The pipeline image in the following diagram illustrates how the various stages are linked together.

Stage 3: Hydration of search and graph indexes

At this stage, we have data in the delta live table and it’s mutating, so we optimize those mutations for index hydration.

First, we extract the data essential for relationship tracking and graph traversal from the live table. These relationships are stored with the assets, with each instance containing the first degree of relationships for each asset row. These records are extracted and formatted for the Neptune bulk loader.
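To make the formatting step concrete, here is a minimal sketch that turns asset rows carrying their first-degree relationships into the two CSV files the Neptune bulk loader accepts for property graphs. The `~id`, `~label`, `~from`, and `~to` column headers come from Neptune's Gremlin load format. The input field names (`asset_id`, `asset_type`, `relationships`, `type`, `target_id`) and the edge ID scheme are hypothetical.

```python
import csv
import io


def to_bulk_load_csv(asset_rows):
    """Return (vertices_csv, edges_csv) text in Neptune's Gremlin CSV load format."""
    vertices = io.StringIO()
    edges = io.StringIO()
    vertex_writer = csv.writer(vertices, lineterminator="\n")
    edge_writer = csv.writer(edges, lineterminator="\n")

    # System column headers required by the loader for vertex and edge files.
    vertex_writer.writerow(["~id", "~label"])
    edge_writer.writerow(["~id", "~from", "~to", "~label"])

    for row in asset_rows:
        vertex_writer.writerow([row["asset_id"], row["asset_type"]])
        # Each asset row carries only its first-degree relationships.
        for rel in row.get("relationships", []):
            # Assumed scheme: a deterministic edge ID derived from its endpoints and type.
            edge_id = f'{row["asset_id"]}-{rel["type"]}-{rel["target_id"]}'
            edge_writer.writerow([edge_id, row["asset_id"], rel["target_id"], rel["type"]])

    return vertices.getvalue(), edges.getvalue()
```

Only the ID and label are written for each vertex here, in keeping with the decision to hold a minimal attribute footprint in Neptune.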

Neptune’s bulk loading API has been a huge enabler for us. When new customer environments are onboarded, our pipeline logic uses the bulk loader API implementation to load a large snapshot of customer data. The bulk loader is asynchronous, so we launch the job and poll the status until it’s complete. When making incremental delta updates, we have a dedicated AWS Glue job running Java code that uses the Neptune open source Gremlin client for Java to invoke the update requests in batches. The batch size was made configurable to make sure we have control based on the stress the cluster would go through.
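The launch-and-poll pattern can be sketched as follows. This is a simplified illustration, not the pipeline's actual code. It assumes the cluster endpoint is reachable from the caller and that IAM database authentication is either disabled or handled elsewhere, because request signing is omitted. The function names, polling interval, and polling budget are hypothetical. The `/loader` paths, request fields, and status strings follow the Neptune loader API.

```python
import json
import time
import urllib.request

STILL_RUNNING = {"LOAD_NOT_STARTED", "LOAD_IN_QUEUE", "LOAD_IN_PROGRESS"}


def start_load(endpoint, s3_uri, iam_role_arn, region):
    """Submit an asynchronous bulk load job and return its loadId."""
    body = json.dumps({
        "source": s3_uri,
        "format": "csv",
        "iamRoleArn": iam_role_arn,
        "region": region,
        "failOnError": "TRUE",
    }).encode()
    request = urllib.request.Request(
        f"https://{endpoint}:8182/loader",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["payload"]["loadId"]


def fetch_status(endpoint, load_id):
    """Read the overall status string of a previously submitted load job."""
    with urllib.request.urlopen(f"https://{endpoint}:8182/loader/{load_id}") as response:
        return json.load(response)["payload"]["overallStatus"]["status"]


def wait_for_load(get_status, poll_seconds=30.0, max_polls=240, sleep=time.sleep):
    """Poll until the load completes; raise if it fails or the budget runs out."""
    for _ in range(max_polls):
        status = get_status()
        if status == "LOAD_COMPLETED":
            return status
        if status not in STILL_RUNNING:
            raise RuntimeError(f"bulk load ended with status {status}")
        sleep(poll_seconds)
    raise TimeoutError("bulk load did not finish within the polling budget")
```

A caller would combine these as `wait_for_load(lambda: fetch_status(endpoint, load_id))`. Passing the status reader in as a function keeps the polling loop testable without a cluster.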

One challenge we face is that asset node relationships mutate rapidly due to the ephemeral nature of assets in the cloud. Multiple threads loading data concurrently periodically cause race conditions, which surface as ConcurrentModificationException errors and unnecessary round trips to the database. To minimize these occurrences, we apply optimizations like removing only the inbound edges to a node on delete so we don’t waste hydration time cleaning up all the edges. We compensate for this with query-time safeguards to make sure we never query a node that was deleted. This led to over 30% reduction in our graph update times. We also ran into API rate limit throttling due to the cluster topology discovery done by the Java client to route traffic to read replicas. Our application uses a command and query responsibility segregation (CQRS) architecture, so we could disable this feature in the driver because we were only writing data and never accessing the read replicas. This led to significantly better reliability. In addition, this stage collects statistics to help the query engine make intelligent decisions for query plan generation.
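The inbound-edge optimization and its query-time safeguard can be illustrated with a small in-memory model. This is a conceptual sketch under stated assumptions, not Neptune or Gremlin code: the `TinyGraph` class is hypothetical, and it assumes a deleted node is marked rather than physically dropped, with its leftover outbound edges removed by a later cleanup pass.

```python
class TinyGraph:
    """Toy directed graph used only to illustrate the delete strategy."""

    def __init__(self):
        self.edges = set()    # (source, target) pairs
        self.deleted = set()  # nodes marked as deleted

    def add_edge(self, source, target):
        self.edges.add((source, target))

    def delete_node_fast(self, node):
        """Mark the node deleted and remove only its inbound edges.

        With no inbound edges left, no live node can reach it in a traversal,
        so the outbound edges can be cleaned up later.
        """
        self.deleted.add(node)
        self.edges = {(s, t) for (s, t) in self.edges if t != node}

    def neighbors(self, node):
        """Query-time safeguard: never start from, or return, a deleted node."""
        if node in self.deleted:
            return []
        return sorted(t for (s, t) in self.edges if s == node and t not in self.deleted)

    def sweep(self):
        """Deferred cleanup: drop edges still leaving deleted nodes."""
        before = len(self.edges)
        self.edges = {(s, t) for (s, t) in self.edges if s not in self.deleted}
        return before - len(self.edges)
```

The delete path does work proportional to the inbound edges only, and the cost of removing the remaining edges moves to a pass that can run when the cluster is less busy.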

In parallel, another set of jobs hydrate our Amazon OpenSearch Service index. Most of our filter, aggregation and full text search jobs use this index. For this post, we focus on how we use Neptune.

An architecture that forks hydration from a single ETL pipeline helps us handle mutations at scale, and parallelizing indexing gains performance, reliability, and scalability at the cost of consistency and atomic behavior across the two indexes. In the next section, we describe how our query engine mitigates and compensates for these trade-offs.

At the time of writing, this pipeline scales to hydrating over 400 million nodes and over 300 million edges daily into Neptune in our busiest Region. We’ve observed as many as a million node and edge mutations in a customer environment during a single 30-minute delta job interval at peak.

Query engine

The query engine for the Infinity Graph platform enables free movement between graph traversal, full text search, sorting and filtering, and aggregation use cases within a single API. This API is used by our web interface as well as by customer integrations with their own APIs and clients. The interface is defined by an opinionated grammar built with ANTLR. The following is a sample schema.

The query engine is implemented as a set of horizontally scaled Java services that decompose requests into a set of optimized steps calling Neptune and OpenSearch Service as appropriate. The query engine was built and is maintained in-house with a goal similar to a database query optimizer but optimizing execution across both the database and analytical services. It generates an intelligent query plan spanning both services and combines the results much like how an individual database service would use its own indexes. Furthermore, it’s aware of the features available in each service, such as the many caching features available in Neptune for workloads like pagination and range queries.

One complication we encountered was significant time spent serializing nodes in large datasets. Working with the Neptune team, we understood that at scale the cost of serializing a node and all of its properties was greater than serializing IDs alone. In many scenarios we needed only the node ID and not the full node, so returning IDs alone mitigated the performance impact. Our design benefitted from this pattern because we kept the attribute footprint minimal in Neptune while achieving our filtering and search use cases using OpenSearch Service.

The statistics generated during hydration in the ETL pipeline are used in the query planning stages to determine whether the query requires relationship traversal and whether the assets being sought have relationships. This allows us to route queries to Neptune when appropriate, or to OpenSearch Service if we’re just retrieving information without needing to consider relationships. This feature allows our query engine to achieve p95 latency under 1 second and p99 latency under 2 seconds end to end.
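A simplified sketch of this routing decision follows. It is not the query engine's real planner, which is a set of Java services. The `HydrationStats` and `Query` types, the route names, and the shape of the statistics (edge counts keyed by source type, relationship type, and target type) are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HydrationStats:
    # (source_type, relationship, target_type) -> number of edges seen during hydration
    relationship_counts: dict = field(default_factory=dict)


@dataclass
class Query:
    source_type: str
    relationship: Optional[str] = None
    target_type: Optional[str] = None


def route(query: Query, stats: HydrationStats) -> str:
    """Decide which backends a query needs, using hydration-time statistics."""
    if query.relationship is None:
        # Pure filter, sort, aggregate, or full text query: the search index is enough.
        return "search"
    key = (query.source_type, query.relationship, query.target_type)
    if stats.relationship_counts.get(key, 0) == 0:
        # No such relationship exists, so the result is empty without any backend call.
        return "empty"
    # A traversal is required: the graph resolves relationships, search fills in attributes.
    return "graph+search"
```

The `empty` route is the short circuit: when the statistics show no edges of the requested kind, the engine can answer without calling either service.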

Several implementation adjustments were involved in tuning the performance, such as the following:

We minimized the scale of index lookup patterns using techniques such as granular labels within Neptune when performance is important. Neptune simplifies index management, but that can result in very large indexes when the cardinality is high. At very large cardinality for a single index entry, lookup cost in Neptune approaches logarithmic scaling, which can affect workloads with very demanding performance needs.

High volume deletion of nodes and edges can impact latency due to database locks, resource consumption, and undo log management. We found querying OpenSearch Service for active nodes to be much more efficient and it allowed us to mark deleted Neptune records temporarily to be removed later (also known as tombstoning) instead of deleting them in real-time. We eventually clean those deleted nodes during times of lower usage, which is especially important for nodes with a large number of edges. This pattern improved performance significantly.

In tuning our Gremlin Java driver usage, we set the maxInProcessPerConnection attribute to a much lower value than maxConnectionPoolSize because most of the queries required heavy data exchange.

We also found that using TinkerPop Gremlin queries in bytecode mode performed much better than string mode.

We set timeouts on a per-query basis instead of a single universal value. For scenarios where our statistics showed we had to check a large number of assets on both the left and right side of a relationship, we set this timeout dynamically based on the cardinality of both sides.
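The per-query timeout idea can be sketched as a function of the two cardinalities. The formula below (a base value plus a fixed increment per order of magnitude of combined work, capped at a ceiling) and all of its constants are assumptions chosen for illustration; the source does not describe the actual calculation.

```python
import math


def traversal_timeout_ms(left_count, right_count,
                         base_ms=500, per_decade_ms=750, ceiling_ms=30_000):
    """Derive a timeout from the number of assets on each side of a relationship.

    Hypothetical policy: grow the timeout with the logarithm of the
    left-by-right candidate space, and never exceed the ceiling.
    """
    work = max(left_count, 1) * max(right_count, 1)
    timeout = base_ms + per_decade_ms * math.log10(work)
    return int(min(timeout, ceiling_ms))
```

With the Gremlin drivers, a computed value like this can be applied to a single traversal through the `evaluationTimeout` option, for example `g.with("evaluationTimeout", timeout_ms)` in the Java driver, instead of relying on one cluster-wide setting.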

Overall, we noticed the query engine scaled more meaningfully for our use case when scaling vertically instead of horizontally. We use horizontal scaling when warranted for increased concurrency, not performance. This is contrary to typical API design but given the purpose-built nature of the API to be an Infinity Graph federation API, it should be expected that it will behave more like a database than a lightweight API.

Infinity Graph on Prisma Cloud use cases

Prisma Cloud seeks to offer the most comprehensive code-to-cloud security for our customers. The best way to prevent security issues from happening again is to shift left in the development pipeline and fix issues at the source.

The technology vision is to build the platform in a way that can support and enable extension of the vision above. We broadly divide our technical challenge into implicit and explicit search and discovery.

Implicit search and discovery leverages the platform to follow known search paths to support use cases including:

Customer wants to trace a vulnerability found in a virtual machine (VM) image at run-time all the way to the code repository.

Customer needs to see the impact of a fix in code and its manifestation in alert reduction across build, deploy and run stages.

Customer wants to know which secrets are most shared across nodes and the impact across applications if they are compromised.

The CBDR (code-build-deploy-run) graph powered by the Infinity Graph, shown in the following screenshot, is an implicit use case where a customer can investigate a specific vulnerability across their development pipeline. This provides a clear impact graph of how fixing in code could have reduced the volume of alerts and signals generated at run time, and enables a permanent fix to the issue at the source.

Explicit search and discovery allows the customer to freely explore their ecosystem, including their workloads and Prisma Cloud discovered issues, to identify potential security issues. These use cases include:

Customer clones an existing security control “Publicly exposed virtual machines exposed to the internet and have access to sensitive data” and narrows it down to specific AWS accounts or tags, driving more accountability within the organization.

Customer starts with a high level search from query builder such as “Show me all assets with exploitable VMs” and then drills down into specific clauses like “and also contains secrets” to discover more dangerous posture issues.

Here is an example of such a query.

Internally, the search API computes a plan that determines which sets of queries must be delegated to Amazon OpenSearch Service compared to Amazon Neptune.

The query engine workflow operates as follows for the previous example query:

Analyze query and generate query plan – the plan involves setting up stages optimized to leverage the Search Index in Amazon OpenSearch Service and the Graph Index in Amazon Neptune.

Optimize the query plan – the engine leverages statistics on relationship counts as part of Amazon Neptune hydration to determine if there are options to circuit break quickly. For example, if there are no Kubernetes services identified with valid images in the system then querying OpenSearch Service is avoided at this point.

Collect statistics and aggregations – stats are gathered from OpenSearch Service to determine the number of results and compare that with the optimization stats gathered in the last step. This step utilizes every unique source, relationship type and target combination to get counts.

Sorting and ordering – the sort order is collected from OpenSearch Service results driven by several factors present in the asset property such as severity.

Parallelization and scatter gathering – the engine performs a scatter gather in parallel to farm out pages of data from OpenSearch Service to the graph (as needed) to fill the pages needed after additional filtering.

Search experience optimization – results are streamed back in real time from the scatter gather operation, providing cursor-based pagination and enabling infinite scrolling interfaces. This allows yielding of results before the completion of the entire scatter gather operation and enables low latency responses (sub-second for the p99 case).
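The last two steps of the workflow can be sketched as a generator that filters search result pages against the graph in parallel and yields full pages as soon as they are available. This is a conceptual illustration, not the engine's implementation. The `scatter_gather` function, the `graph_filter` callback (standing in for a Neptune lookup that returns the IDs satisfying the relationship condition), and the worker count are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def scatter_gather(search_pages, graph_filter, page_size, max_workers=4):
    """Yield pages of IDs that pass the graph filter, keeping the search sort order.

    search_pages: list of ID lists, already sorted by the search index.
    graph_filter: callable taking one page of IDs and returning the survivors.
    """
    buffer = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() runs the lookups concurrently but returns results in input
        # order, so the sort order chosen by the search index is preserved.
        for survivors in pool.map(graph_filter, search_pages):
            buffer.extend(survivors)
            # Emit complete pages right away instead of waiting for all lookups.
            while len(buffer) >= page_size:
                yield buffer[:page_size]
                buffer = buffer[page_size:]
    if buffer:
        yield buffer  # final partial page
```

Because the function is a generator, a caller can send the first page to the client while later graph lookups are still running, which is what makes cursor-style pagination and infinite scrolling feel responsive.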

In this example, OpenSearch Service is leveraged to perform full text search (FTS) for the name match, filtering on region and the finding name match with an opinionated sort order. Another sub-query to OpenSearch Service is created as part of analyzing the “Container images that have vulnerabilities” clause as a part of the relationship condition. The results for each page from the OpenSearch Service results are then queried against Amazon Neptune with the “containers that have vulnerabilities” nodes from the second query to Amazon OpenSearch Service.

Using the approach above, we get the best of both worlds: a high-speed inverted index supporting FTS, aggregation, and ordering requirements with OpenSearch Service, and the graph traversal flexibility of Amazon Neptune.

Observability and monitoring

With deployments and usage at this scale, observability is key. We rely on several Neptune metrics, including the following:

BufferCacheHitRatio – This metric confirms cache miss trends and informs us when degraded performance is expected due to a cold cache or cache swapping.

ClusterReplicaLag – This metric is key because our query engine uses replicas and, because the stats generated from hydration inform the query engine, we need to make sure the replica lag for eventual consistency is extremely low.

TotalRequestsPerSec – This metric helped us confirm that the topology validation calls made by the Java Gremlin client from our AWS Glue jobs were repeatedly tripping the rate limits.

TotalClientErrorsPerSec and TotalServerErrorsPerSec – These metrics monitor the total errors on either the client or the server, allowing us to catch emerging issues early.

Conclusion

In this post, Palo Alto Networks described how we built our Infinity Graph platform using a “better together” approach with two AWS purpose-built services, Neptune and OpenSearch Service. Developing a platform graph engine on top of AWS enabled us to make giant strides that otherwise would have taken us months to perfect and scale. With a small team, we were able to deliver a hugely differentiated offering to our customers at an industry-first scale that was reliable, performant and highly adaptable. This effort brought monumental benefits to our customers, delivering a significant reduction in overall alert noise from point solutions. In addition, this platform now enables us to provide several other value-added services like code-to-cloud tracing, the ability to fix in code, and other advanced graph analytics use cases.

Do you use Neptune or OpenSearch Service? Did any of the tricks and tips Palo Alto Networks learned improve your Neptune solutions? Give it a try and let us know your feedback in the comments section.

About the Authors

Brian O’Keefe is a Principal Specialist Solutions Architect at AWS focused on Neptune. He works with customers and partners to solve business problems using Amazon graph technologies. He has over two decades of experience in various software architecture and research roles, many of which involved graph-based applications.

Krishnan Narayan is a technical leader and Distinguished Engineer at Palo Alto Networks, leading the architecture, design, and execution of key cloud security solutions and platforms at scale, securing some of the most demanding public cloud environments for customers. He has over a decade of experience in leading and building market-leading cybersecurity solutions across data center, mobile, and cloud ecosystems.
