Deep learning models typically represent knowledge statically, which makes it hard to adapt them to evolving data and concepts. This rigidity necessitates frequent retraining or fine-tuning to incorporate new information, which is often impractical. The research paper “Towards Flexible Perception with Visual Memory” by Geirhos et al. presents a solution that combines the representational power of deep neural networks with the adaptability of a visual memory database. By decomposing image classification into image similarity and fast nearest neighbor retrieval, the authors introduce a flexible visual memory in which data can be added and removed seamlessly.

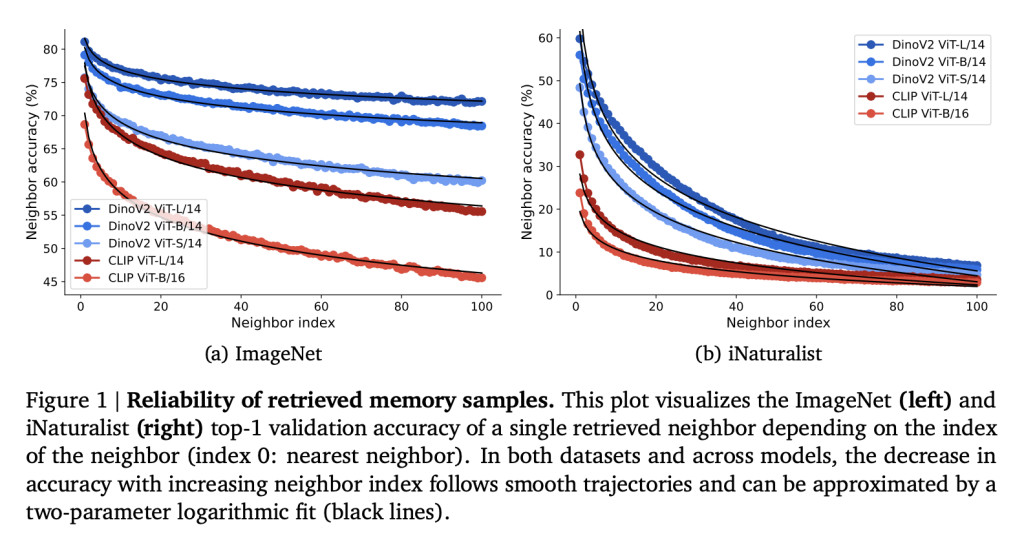

Current methods for image classification often rely on static models that require retraining to incorporate new classes or datasets. The authors instead propose a retrieval-based visual memory system that builds a database of feature-label pairs extracted from a pre-trained image encoder, such as DinoV2 or CLIP. This system classifies a query image by retrieving its k nearest neighbors based on cosine similarity, enabling the model to adapt to new data without retraining. How the neighbors' labels are aggregated matters: traditional techniques, such as plurality and softmax voting, can lead to overconfident predictions, particularly when distant neighbors are taken into account.
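The retrieval step can be illustrated with a minimal sketch. It assumes the features have already been produced by some encoder; the toy two-dimensional vectors below merely stand in for real embeddings, and the function names `build_memory` and `retrieve` are hypothetical, not taken from the paper. A brute-force scan is used here for clarity, whereas a real system would rely on a fast nearest neighbor index.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def build_memory(features, labels):
    """Store (unit-length feature, label) pairs; no training is involved."""
    return [(normalize(f), y) for f, y in zip(features, labels)]


def retrieve(memory, query, k):
    """Return the k (similarity, label) pairs most similar to the query."""
    q = normalize(query)
    scored = [
        (sum(a * b for a, b in zip(q, feature)), label)
        for feature, label in memory
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy vectors standing in for encoder embeddings (illustrative values only).
memory = build_memory(
    [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]],
    ["cat", "cat", "dog"],
)
neighbors = retrieve(memory, [1.0, 0.05], k=2)
print([label for _, label in neighbors])
```

Because the memory is just a list of pairs, supporting a new class amounts to appending more entries.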

The methodology consists of two main steps: constructing the visual memory and performing nearest neighbor-based inference. The visual memory is created by extracting features from a dataset and storing them, together with their labels, in a database. When a query image is presented, its features are compared to those in the visual memory to retrieve the nearest neighbors. The authors introduce a novel aggregation method called RankVoting, which weights each neighbor by its rank rather than by its raw similarity score and outperforms the traditional aggregation methods.
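To make the difference between aggregation schemes concrete, here is a small sketch comparing plurality voting with a rank-based vote. The article does not give the exact weighting, so the form used here, a weight of 1 / (offset + rank) with an offset of 2.0 and ranks starting at 1, is an assumption chosen for illustration, and the function names are hypothetical.

```python
from collections import defaultdict


def plurality_vote(ranked_labels):
    """Every retrieved neighbor counts equally, regardless of its rank."""
    counts = defaultdict(float)
    for label in ranked_labels:
        counts[label] += 1.0
    return max(counts, key=counts.get)


def rank_vote(ranked_labels, offset=2.0):
    """Weight each neighbor by its rank; the exact formula is an assumption."""
    scores = defaultdict(float)
    for rank, label in enumerate(ranked_labels, start=1):
        scores[label] += 1.0 / (offset + rank)
    return max(scores, key=scores.get)


# Labels of five retrieved neighbors, ordered from most to least similar.
ranked = ["cat", "cat", "dog", "dog", "dog"]
print(plurality_vote(ranked), rank_vote(ranked))
```

In this toy case the two closest neighbors agree on one label while three more distant neighbors agree on another. Plurality voting sides with the distant majority, whereas the rank-weighted vote favors the closest matches.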

The proposed visual memory system performs strongly. Existing aggregation techniques often suffer from performance decay as the number of neighbors increases. In contrast, accuracy with RankVoting improves as more neighbors are added and then stabilizes at higher counts. The authors report 88.5% top-1 ImageNet validation accuracy when Gemini’s vision-language model is used to re-rank the retrieved neighbors. This surpasses both the DinoV2 ViT-L14 kNN baseline (83.5%) and linear probing (86.3%).

The flexibility of the visual memory allows it to scale to billion-scale datasets without additional training, and outdated data can be removed through unlearning and memory pruning. This adaptability is crucial for applications that require continuous learning and updating in dynamic environments. The results indicate that the proposed visual memory not only enhances classification accuracy but also offers a robust framework for integrating new information and keeping the model relevant over time.
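The sketch below illustrates why adding and removing data needs no retraining when knowledge lives in a database rather than in model weights. The `VisualMemory` class and its method names are hypothetical and serve as a toy stand-in for a real vector database; the paper's actual unlearning and pruning procedures are not reproduced here.

```python
class VisualMemory:
    """Toy feature-label store keyed by entry id (hypothetical, for illustration)."""

    def __init__(self):
        self._entries = {}  # entry_id -> (feature, label)

    def add(self, entry_id, feature, label):
        """Insert or overwrite one entry; the encoder is never retrained."""
        self._entries[entry_id] = (list(feature), label)

    def remove(self, entry_id):
        """Delete a single entry, e.g. an outdated or mislabeled image."""
        self._entries.pop(entry_id, None)

    def remove_label(self, label):
        """Delete every entry of a class and return how many were removed."""
        doomed = [eid for eid, (_, lab) in self._entries.items() if lab == label]
        for eid in doomed:
            del self._entries[eid]
        return len(doomed)

    def labels(self):
        """Return the sorted set of classes currently represented."""
        return sorted({lab for _, lab in self._entries.values()})

    def __len__(self):
        return len(self._entries)


memory = VisualMemory()
memory.add("img-001", [1.0, 0.0], "cat")
memory.add("img-002", [0.0, 1.0], "dog")
memory.add("img-003", [0.7, 0.7], "fox")  # a new class, added without training
memory.remove("img-002")                  # a single entry is forgotten
removed = memory.remove_label("cat")      # a whole class is forgotten
print(len(memory), memory.labels())
```

After these operations only the newly added class remains, and any subsequent nearest neighbor lookup would reflect that change immediately.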

The research highlights the potential of a flexible visual memory system as a solution to the challenges posed by static deep learning models. By enabling the addition and removal of data without retraining, the proposed method addresses the need for adaptability in machine learning. The RankVoting technique and the integration of vision-language models both yield measurable accuracy gains, which could pave the way for wider adoption of visual memory systems in deep learning applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Google DeepMind Researchers Propose a Dynamic Visual Memory for Flexible Image Classification appeared first on MarkTechPost.
