Large Language Models (LLMs) have made remarkable progress in the ever-expanding field of artificial intelligence, transforming natural language processing and interaction. Yet even the most sophisticated LLMs, such as LLaMa 3, face substantial challenges in tasks that require multi-step reasoning and decision-making in dynamic, interactive environments. Traditional training methods, which rely heavily on static datasets, often fall short in preparing these models for real-world applications, particularly web navigation, where adaptability and complex reasoning are paramount. To address these challenges, researchers at MultiOn introduced Agent Q, an autonomous web agent built on LLaMa 3. Agent Q combines guided search, self-critique, and reinforcement learning to change how LLMs navigate and interact with the web. By pushing the boundaries of autonomous agents, Agent Q sets a new standard for real-world AI applications.

Traditional approaches to training LLMs for dynamic tasks typically rely on supervised fine-tuning over curated datasets. While effective in controlled scenarios, these methods often fall short in complex environments that demand multi-step reasoning and adaptive learning. The main issue is their tendency to produce suboptimal results: errors compound over long sequences of actions, and the model explores little beyond the behavior captured in its training data.
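To see why compounding errors matter, here is a toy calculation under a simplifying assumption of our own (not from the paper): every step of a task succeeds independently with the same probability, so an n-step trajectory succeeds with probability p to the power n. The function name `trajectory_success` and the 95% per-step figure are hypothetical.

```python
# Toy model (our assumption, not the paper's): each step succeeds
# independently with probability p_step, so a full n-step trajectory
# succeeds with probability p_step ** n_steps.


def trajectory_success(p_step: float, n_steps: int) -> float:
    """Probability that all n independent steps succeed."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be a probability")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return p_step ** n_steps


if __name__ == "__main__":
    # Even a fairly reliable 95%-per-step agent degrades quickly on long tasks.
    for n in (1, 5, 10, 20):
        print(f"{n:>2} steps at 95% per step -> {trajectory_success(0.95, n):.3f}")
```

Under this model, a 20-step booking flow at 95% per-step accuracy succeeds only about a third of the time, which is why long-horizon web tasks punish small per-step mistakes.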

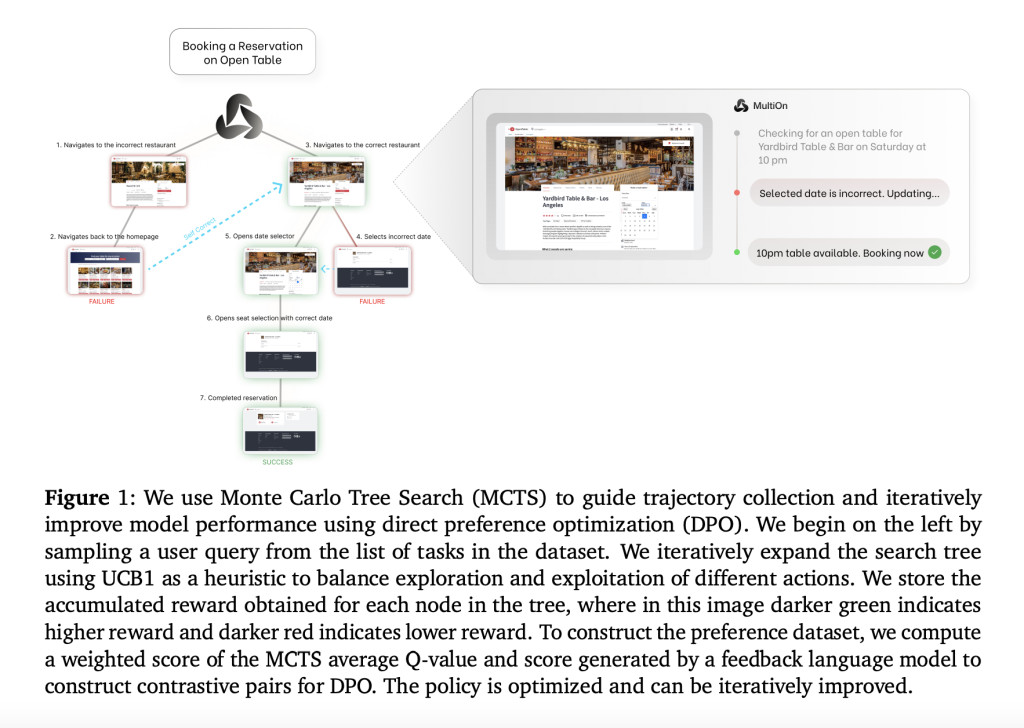

Agent Q is a cutting-edge framework designed to overcome these challenges by integrating advanced search techniques, self-critique mechanisms, and reinforcement learning. Unlike conventional methods that rely heavily on supervised fine-tuning, Agent Q employs a combination of guided Monte Carlo Tree Search (MCTS) and an off-policy variant of the Direct Preference Optimization (DPO) algorithm. This approach allows LLM agents to learn from successful and unsuccessful trajectories, significantly improving their generalization capabilities in complex, multi-step reasoning tasks. By leveraging these methodologies, Agent Q addresses the shortcomings of existing models and sets a new benchmark for autonomous web agents.
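For readers unfamiliar with DPO, the sketch below computes the standard DPO loss introduced by Rafailov et al. (2023) for (chosen, rejected) pairs. It is not Agent Q's training code: the function names, the `beta = 0.1` default, and the example log-probabilities are illustrative assumptions. "Off-policy" here only means the pairs come from previously collected data, such as search trajectories, rather than fresh samples from the model being trained.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a response (here, an
    agent action) under the trainable policy or the frozen reference model.
    beta = 0.1 is an illustrative default, not a value from the Agent Q paper.
    """
    # How much more the policy favors each response than the reference does.
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(margin)) == log(1 + exp(-margin)) == softplus(-margin)
    return softplus(-margin)


def batch_dpo_loss(pairs, beta: float = 0.1) -> float:
    """Mean DPO loss over tuples of the four log-probabilities above.

    The pairs can come from stored (off-policy) data; only the policy terms
    would need recomputing with a real model during training.
    """
    losses = [dpo_loss(*pair, beta=beta) for pair in pairs]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Hypothetical log-probabilities for two preference pairs.
    pairs = [(-1.0, -5.0, -3.0, -3.0), (-2.0, -2.0, -2.0, -2.0)]
    print(f"mean DPO loss: {batch_dpo_loss(pairs):.4f}")
```

When the policy and reference agree exactly, the loss is log 2. It falls below that as the policy shifts probability toward the preferred action relative to the reference.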

The architecture of Agent Q consists of several key components that improve its performance in interactive environments. Guided MCTS plays a crucial role by autonomously exploring different actions and web pages, balancing exploration of untried options against exploitation of actions that already look promising. This search produces the diverse, high-quality trajectories needed to train robust agents. The self-critique mechanism adds feedback at each decision step, allowing the agent to refine its reasoning. This step-level feedback is especially important for long-horizon tasks, where sparse rewards that arrive only at the end of a task can hinder learning. Finally, the DPO algorithm fine-tunes the model on preference pairs constructed from the data generated during MCTS, so the agent learns from both successful and sub-optimal actions.
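To make the search side more concrete, here is a toy sketch of two ingredients from the paragraph above: UCB1, the textbook MCTS selection rule for balancing exploration and exploitation, and turning ranked sibling actions into (chosen, rejected) preference pairs. Everything specific here is an assumption: the action strings, success probabilities, and critic scores are made up, and blending the MCTS value with a critic score through `alpha`, plus the `min_gap` threshold, are our illustration. Agent Q's guided MCTS uses an LLM to propose actions and an AI critic to rank them, and its exact scoring may differ.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ActionStats:
    """Visit statistics for one candidate action at a search node."""
    action: str              # hypothetical web action, e.g. "click(#search)"
    visits: int = 0
    total_value: float = 0.0

    @property
    def q(self) -> float:
        """Mean backed-up reward (0.0 if the action was never tried)."""
        return self.total_value / self.visits if self.visits else 0.0


def ucb1_select(children, c: float = math.sqrt(2)) -> ActionStats:
    """Textbook UCB1: mean value plus an exploration bonus for rarely tried actions."""
    for child in children:
        if child.visits == 0:          # try every action at least once
            return child
    parent_visits = sum(child.visits for child in children)
    return max(children,
               key=lambda ch: ch.q + c * math.sqrt(math.log(parent_visits) / ch.visits))


def backup(child: ActionStats, reward: float) -> None:
    """Record one rollout outcome for the chosen action."""
    child.visits += 1
    child.total_value += reward


def preference_pairs(children, critic_scores, alpha: float = 0.5, min_gap: float = 0.1):
    """Rank sibling actions and pair better ones with clearly worse ones.

    Blending the MCTS mean value with a critic score via `alpha`, and the
    `min_gap` threshold, are illustrative assumptions, not the paper's values.
    """
    blended = {
        ch.action: alpha * ch.q + (1 - alpha) * critic_scores.get(ch.action, 0.0)
        for ch in children
    }
    ranked = sorted(blended.items(), key=lambda kv: kv[1], reverse=True)
    pairs = []
    for i, (winner, v_win) in enumerate(ranked):
        for loser, v_lose in ranked[i + 1:]:
            if v_win - v_lose >= min_gap:
                pairs.append((winner, loser))   # (chosen, rejected) for DPO
    return pairs


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical per-action success probabilities for a toy one-step search.
    true_success = {"search('sushi')": 0.8, "click(#ad)": 0.2, "go_back()": 0.4}
    children = [ActionStats(a) for a in true_success]
    for _ in range(200):
        chosen = ucb1_select(children)
        backup(chosen, 1.0 if rng.random() < true_success[chosen.action] else 0.0)
    # Hypothetical critic scores standing in for the LLM self-critique.
    critic = {"search('sushi')": 0.9, "click(#ad)": 0.1, "go_back()": 0.3}
    for winner, loser in preference_pairs(children, critic):
        print(f"prefer {winner!r} over {loser!r}")
```

Keeping only pairs whose blended values differ by at least `min_gap` is one simple way to avoid training on near-ties, where the "preferred" action may not actually be better.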

The results of Agent Q’s application in real-world scenarios are striking. In booking experiments on OpenTable, Agent Q raised LLaMa 3’s zero-shot success rate from 18.6% to 81.7%, a roughly 340% relative increase, after just one day of autonomous data collection. With further online search, the success rate climbed to 95.4%. These results highlight Agent Q’s ability to autonomously improve and adapt, setting a new standard for autonomous web agents.
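The headline percentage is a relative increase over the zero-shot baseline. A quick calculation with the success rates reported above makes the arithmetic explicit. The helper name `relative_improvement` is ours, for illustration only.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Relative change in percent: (new - baseline) / baseline * 100."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0


if __name__ == "__main__":
    baseline = 18.6  # LLaMa 3 zero-shot success rate on OpenTable (%)
    for label, rate in [("after one day of data collection", 81.7),
                        ("with further online search", 95.4)]:
        print(f"{label}: {rate}% -> {relative_improvement(baseline, rate):.1f}% relative improvement")
```

The first figure works out to about 339%, which is the "340%" in the headline. The 95.4% success rate with online search corresponds to roughly a 413% relative increase over the same baseline.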

In conclusion, Agent Q represents a monumental leap forward in developing autonomous web agents. By addressing the limitations of traditional LLM training methodologies, Agent Q introduces a novel framework that combines advanced search techniques, AI self-critique, and reinforcement learning. This approach enhances the agent’s decision-making capabilities and allows it to improve continuously in real-world, dynamic environments. With its impressive performance and potential for further development, Agent Q sets a new benchmark for what is possible in autonomous web navigation, paving the way for more intelligent and adaptable AI agents.

Check out the Paper and Details. All credit for this research goes to the researchers of this project.


The post Agent Q: A New AI Framework for Autonomous Improvement of Web-Agents with Limited Human Supervision- with a 340% Improvement over LLama 3’s Baseline Zero-Shot Performance appeared first on MarkTechPost.
