Log parsing is a critical component of software performance analysis and reliability engineering. It transforms vast amounts of unstructured log data, often hundreds of gigabytes to terabytes per day, into structured formats. This transformation is essential for understanding system execution, detecting anomalies, and conducting root-cause analyses. Traditional log parsers, which rely on syntax-based methods, have served this purpose for years. However, these methods often fall short when logs deviate from their predefined rules, which reduces accuracy and efficiency. Recent advances in large language models (LLMs) have opened new avenues for improving log parsing accuracy, particularly in handling the semi-structured nature of logs.

The primary challenge in log parsing is the sheer volume and complexity of the data generated by real-world software systems. These logs, which contain a mix of static text and dynamically generated variables, are vital for developers to understand and debug their systems. However, directly analyzing these logs is difficult due to their semi-structured nature. Traditional log parsers like Drain and AEL attempt to transform these logs into structured templates using predefined rules or heuristics. While effective in some cases, these parsers often struggle with logs that do not fit neatly into these rules, resulting in lower accuracy. Using commercial LLMs like ChatGPT for log parsing introduces privacy risks, as logs often contain sensitive information. The cost of using these models, especially when dealing with large volumes of data, also poses a significant barrier to their widespread adoption.

Syntax-based parsers, like AEL and Drain, use heuristics and predefined rules to extract log templates, identifying common components in the logs. However, these methods are limited by their dependence on the structure of the input logs, often leading to reduced accuracy when logs have complex structures. Semantic-based parsers, which leverage the capabilities of LLMs, focus on the textual content within logs to distinguish between static and dynamic segments. These parsers, such as LILAC and LLMParserT5Base, typically require manual labeling of log templates for fine-tuning, which adds significant labor and cost. Using commercial LLMs for these tasks raises concerns about data privacy and the high operational costs of processing large datasets.
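To make the idea of rule-based template extraction concrete, here is a minimal sketch in Python. It is not the algorithm used by Drain or AEL; the masking rules, the `<*>` placeholder convention, and the sample log lines are all assumptions chosen for illustration. It shows how predefined rules recover a template when the variables look like what the rules expect, and how they miss variables that do not.

```python
import re

# Hypothetical masking rules, applied in order. These are illustrative only
# and are NOT the rule sets shipped with Drain or AEL.
MASKING_RULES = [
    re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}(?::\d+)?\b"),  # IPv4 address, optional port
    re.compile(r"\b0x[0-9a-fA-F]+\b"),                     # hexadecimal literal
    re.compile(r"\b\d+\b"),                                # plain integer
]


def mask_variables(log_line: str) -> str:
    """Replace every substring matched by a rule with the placeholder <*>."""
    template = log_line
    for rule in MASKING_RULES:
        template = rule.sub("<*>", template)
    return template


# Made-up log lines for demonstration.
logs = [
    "Connection from 10.0.0.5:5432 closed after 120 ms",
    "Connection from 10.0.0.17:5432 closed after 98 ms",
    "User alice logged in",
    "User bob logged in",
]
templates = [mask_variables(line) for line in logs]

# The first two lines collapse into one template because their variables
# (address and duration) match the rules.
print(templates[0])  # Connection from <*> closed after <*> ms

# The user name is also a variable, but no rule covers it, so the last two
# lines remain distinct "templates".
print(templates[2] == templates[3])  # False
```

The last two lines illustrate the limitation described above: a variable that does not match any predefined pattern is treated as static text.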

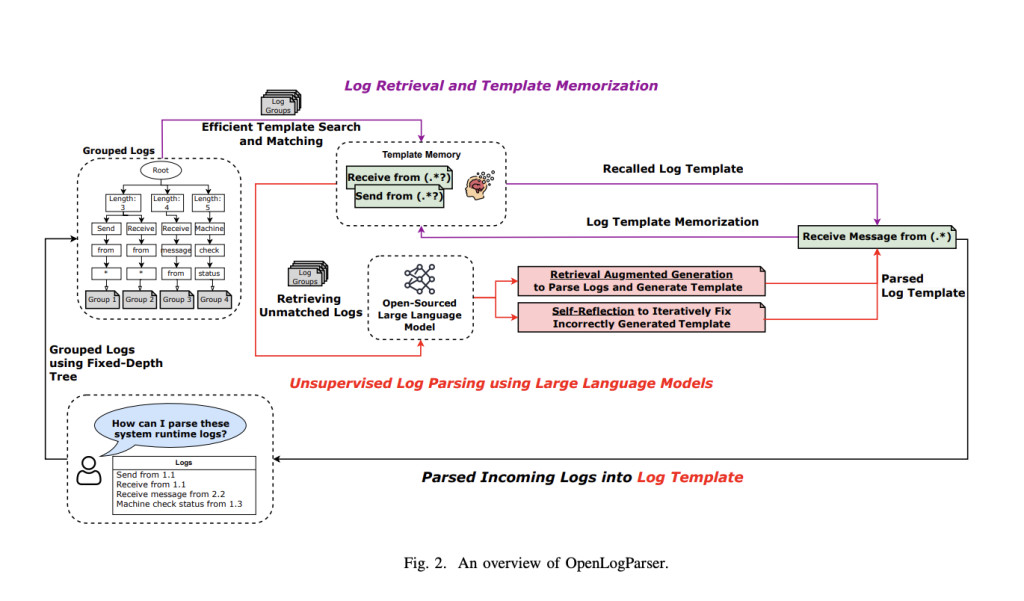

Researchers from Concordia University and DePaul University have introduced OpenLogParser, an unsupervised log parsing approach that uses open-source LLMs, specifically the Llama3-8B model. Because the model is open source and can be run locally, the approach addresses the privacy concerns associated with commercial LLMs and also reduces operational costs. OpenLogParser employs a fixed-depth grouping tree to cluster logs that share similar static text but differ in dynamic variables. This method enhances both accuracy and efficiency in parsing logs. The parser’s design includes several innovative components: a retrieval-augmented generation technique that selects diverse logs within each group based on Jaccard similarity, helping the LLM distinguish between static and dynamic content; a self-reflection mechanism that iteratively refines log templates to improve parsing accuracy; and a log template memory that stores parsed templates to reduce the need for repeated LLM queries. This combination of techniques allows OpenLogParser to achieve state-of-the-art performance while maintaining the privacy and cost-efficiency of open-source solutions.
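The Jaccard-based selection of diverse logs can be sketched as follows. This is only an illustration of the general idea, not OpenLogParser's actual selection procedure: whitespace tokenization, the greedy strategy, the function names `jaccard` and `select_diverse`, and the sample logs are all assumptions. Jaccard similarity between two token sets A and B is |A ∩ B| / |A ∪ B|, so a low score means the two logs share few tokens.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def select_diverse(logs: list, k: int) -> list:
    """Greedily pick up to k logs that are mutually dissimilar.

    Starts from the first log, then repeatedly adds the log whose highest
    similarity to any already-selected log is the lowest.
    """
    if not logs or k <= 0:
        return []
    token_sets = [set(log.split()) for log in logs]
    selected = [0]
    while len(selected) < min(k, len(logs)):
        best_idx, best_score = None, None
        for i in range(len(logs)):
            if i in selected:
                continue
            # How close is this candidate to the nearest selected log?
            score = max(jaccard(token_sets[i], token_sets[j]) for j in selected)
            if best_score is None or score < best_score:
                best_idx, best_score = i, score
        selected.append(best_idx)
    return [logs[i] for i in selected]


# Made-up logs that share static text but differ in their variables.
group = [
    "Received block blk_1 of size 100 from 10.0.0.1",
    "Received block blk_1 of size 100 from 10.0.0.2",
    "Received block blk_9 of size 512 from 10.0.0.7",
]
examples = select_diverse(group, 2)
# The third log is chosen over the second because it differs from the first
# in three positions rather than one.
print(examples)
```

Showing the LLM examples that differ in as many positions as possible makes it easier to see which tokens vary (dynamic) and which stay fixed (static).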

OpenLogParser’s technology is built on three core components: log grouping, unsupervised LLM-based parsing, and log template memory. The log grouping process clusters logs based on shared syntactic features, significantly reducing the complexity of subsequent parsing steps. The unsupervised LLM-based parsing technique then uses a retrieval-augmented approach to separate static and dynamic components within the logs accurately. Finally, the log template memory stores the generated log templates, which can be reused for future parsing tasks, thereby minimizing the number of LLM queries and enhancing overall efficiency. This architecture allows OpenLogParser to process logs 2.7 times faster than other LLM-based parsers, with an average parsing accuracy improvement of 25% over the best-performing existing parsers. The parser’s ability to handle over 50 million logs from the LogHub-2.0 dataset showcases its robustness and scalability.
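The interplay between grouping and the template memory can be sketched in a few lines of Python. Everything here is a simplified stand-in under stated assumptions: the grouping key (token count plus first token) is a crude substitute for the fixed-depth grouping tree, `stub_llm_parse` is a hypothetical placeholder for the real LLM call, and the class and function names are invented for illustration.

```python
import re
from collections import defaultdict


def group_key(log: str) -> tuple:
    """Simplified grouping key: (number of tokens, first token)."""
    tokens = log.split()
    return (len(tokens), tokens[0] if tokens else "")


def template_to_regex(template: str):
    """Turn a template such as 'Deleted file <*>' into an anchored regex."""
    parts = [re.escape(part) for part in template.split("<*>")]
    return re.compile("^" + r"(\S+)".join(parts) + "$")


class TemplateMemory:
    """Stores parsed templates so that matching logs skip the LLM."""

    def __init__(self):
        self._entries = []  # list of (template, compiled regex)

    def lookup(self, log: str):
        for template, pattern in self._entries:
            if pattern.match(log):
                return template
        return None

    def store(self, template: str) -> None:
        self._entries.append((template, template_to_regex(template)))


def stub_llm_parse(group_logs: list) -> str:
    """Placeholder for an LLM query: tokens that differ across the group
    become <*>. All logs in a group have the same token count."""
    columns = zip(*(log.split() for log in group_logs))
    return " ".join(col[0] if len(set(col)) == 1 else "<*>" for col in columns)


def parse_logs(logs: list, llm_parse):
    """Group logs, consult the memory first, and query the LLM only on a miss."""
    groups = defaultdict(list)
    for log in logs:
        groups[group_key(log)].append(log)

    memory, results, llm_calls = TemplateMemory(), {}, 0
    for members in groups.values():
        for log in members:
            template = memory.lookup(log)
            if template is None:
                template = llm_parse(members)
                llm_calls += 1
                memory.store(template)
            results[log] = template
    return results, llm_calls


# Made-up logs: three share a template, one stands alone.
sample_logs = [
    "Deleted file a.txt",
    "Deleted file b.txt",
    "Deleted file c.txt",
    "Disk usage at 91 percent",
]
results, llm_calls = parse_logs(sample_logs, stub_llm_parse)
print(results["Deleted file c.txt"])  # Deleted file <*>
print(llm_calls)                      # 2
```

Four logs require only two parser calls in this toy run, because the second and third logs match a template already in memory. The same effect, at scale, is what reduces the number of LLM queries.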

OpenLogParser consistently outperformed other state-of-the-art parsers, such as LILAC and LLMParserT5Base, across various metrics. The parser achieved a grouping accuracy (GA) of 87.2% and a parsing accuracy (PA) of 85.4%, significantly higher than the 67.8% PA of LILAC and the 75.1% PA of LLMParserT5Base. Additionally, OpenLogParser processed the entire LogHub-2.0 dataset in just 5.94 hours, compared with 16 hours for LILAC and 258 hours for LLMParserT5Base. This efficiency is primarily due to OpenLogParser’s innovative grouping and memory mechanisms, which reduce the frequency of LLM queries while maintaining high accuracy. These results highlight OpenLogParser’s potential to revolutionize log parsing by combining the accuracy of LLMs with the cost-efficiency and privacy benefits of open-source tools.
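For readers unfamiliar with the two metrics, the sketch below uses the definitions common in the log parsing literature, which are assumed here rather than taken from the article: PA is the fraction of logs whose predicted template exactly equals the ground-truth template, and GA is the fraction of logs whose predicted group contains exactly the same logs as their ground-truth group. The sample templates are made up. The final lines check the reported runtimes with simple arithmetic.

```python
from collections import defaultdict


def parsing_accuracy(predicted: list, truth: list) -> float:
    """Fraction of logs whose predicted template equals the true template."""
    correct = sum(p == t for p, t in zip(predicted, truth))
    return correct / len(truth)


def grouping_accuracy(predicted: list, truth: list) -> float:
    """Fraction of logs whose predicted group has exactly the same members
    as their ground-truth group, regardless of the template text."""
    pred_groups, true_groups = defaultdict(set), defaultdict(set)
    for i, (p, t) in enumerate(zip(predicted, truth)):
        pred_groups[p].add(i)
        true_groups[t].add(i)
    correct = sum(
        1
        for p, t in zip(predicted, truth)
        if pred_groups[p] == true_groups[t]
    )
    return correct / len(truth)


# Made-up example: the parser groups every log correctly but over-masks
# one template ("in" was wrongly treated as a variable).
truth = ["Deleted file <*>", "Deleted file <*>",
         "User <*> logged in", "User <*> logged in"]
predicted = ["Deleted file <*>", "Deleted file <*>",
             "User <*> logged <*>", "User <*> logged <*>"]

print(grouping_accuracy(predicted, truth))  # 1.0
print(parsing_accuracy(predicted, truth))   # 0.5

# Speed ratios implied by the reported runtimes (hours on LogHub-2.0).
openlogparser_h, lilac_h, llmparser_h = 5.94, 16, 258
speedup_vs_lilac = lilac_h / openlogparser_h          # about 2.69
speedup_vs_llmparser = llmparser_h / openlogparser_h  # about 43.4
print(round(speedup_vs_lilac, 2), round(speedup_vs_llmparser, 1))
```

The example shows why the two numbers are reported separately: a parser can group logs perfectly while still producing the wrong template text. The runtime ratio against LILAC, roughly 2.69, is consistent with the "2.7 times faster" figure, assuming that figure is measured against LILAC.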

In conclusion, OpenLogParser leverages open-source LLMs to address the critical challenges of privacy, cost, and accuracy that have plagued previous approaches. Its innovative combination of log grouping, unsupervised LLM-based parsing, and log template memory enhances efficiency and sets a new standard for accuracy in log parsing. The parser’s impressive performance on large-scale datasets like LogHub-2.0 underscores its scalability and practical applicability.

The post OpenLogParser: A Breakthrough Unsupervised Log Parsing Approach Utilizing Open-Source LLMs for Enhanced Accuracy, Privacy, and Cost Efficiency in Large-Scale Data Processing appeared first on MarkTechPost.
