Optical Character Recognition (OCR) converts text from images into editable data, but it often produces errors due to issues like poor image quality or complex layouts. While OCR technology is valuable for digitizing text, achieving high accuracy can be challenging and typically requires ongoing refinement.

Large Language Models (LLMs), such as ByT5, are promising candidates for OCR post-correction. Trained on extensive text data, they can understand and generate human-like language, a capability that can be used to correct OCR errors and improve the overall accuracy of text extraction. LLMs fine-tuned on OCR-specific tasks have been shown to outperform traditional methods at error correction, suggesting that they could significantly refine OCR outputs and improve text coherence.

In this context, a researcher from the University of Twente recently explored the potential of LLMs for OCR post-correction. The study investigates a technique that uses the language understanding of modern LLMs to detect and correct mistakes in OCR output. Applying this approach to modern customer documents processed with the Tesseract OCR engine and to historical documents from the ICDAR dataset, the research evaluates fine-tuned character-level LLMs such as ByT5 alongside generative models such as Llama 7B.

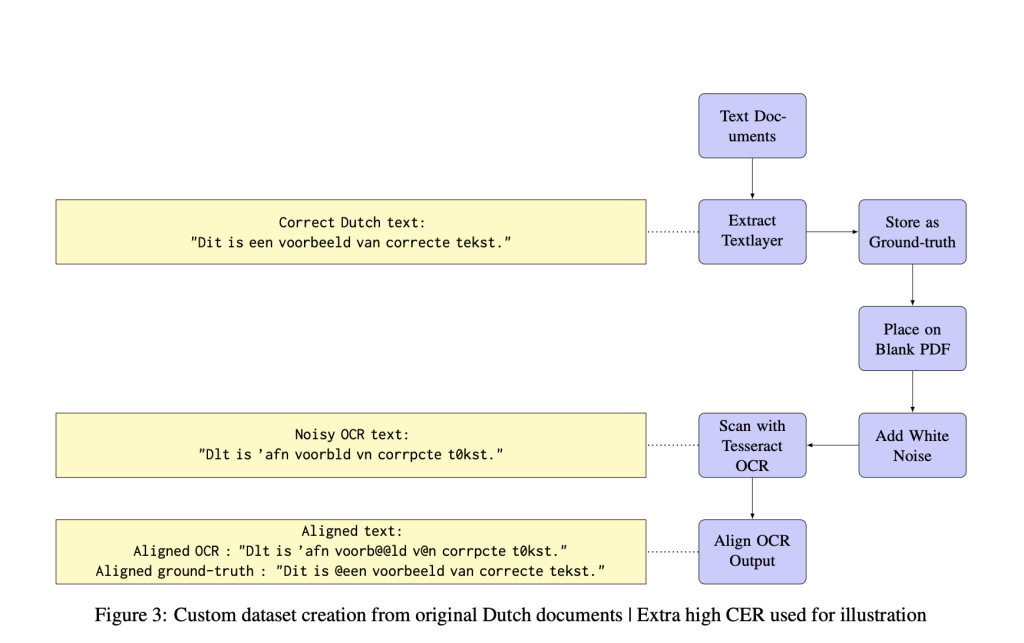

The proposed approach involves fine-tuning LLMs specifically for OCR post-correction. The methodology starts with selecting models suited for this task: ByT5, a character-level LLM, is fine-tuned on a dataset of OCR outputs paired with ground-truth text to enhance its ability to correct character-level errors. Additionally, Llama 7B, a general-purpose generative LLM, is included for comparison due to its large parameter size and advanced language understanding.
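To make "character-level" concrete, here is a minimal sketch of how a byte-level model such as ByT5 sees text: as a sequence of UTF-8 bytes rather than word pieces. The helper names `to_byte_ids` and `from_byte_ids` are hypothetical, and the offset of 3 (reserving the lowest ids for special tokens) is an assumption based on the Hugging Face ByT5 tokenizer convention, not something stated in the study.

```python
from typing import List

def to_byte_ids(text: str, offset: int = 3) -> List[int]:
    """Map text to one id per UTF-8 byte, shifted by `offset` (assumed special-token slots)."""
    return [b + offset for b in text.encode("utf-8")]

def from_byte_ids(ids: List[int], offset: int = 3) -> str:
    """Invert to_byte_ids; bytes that do not form valid UTF-8 are dropped."""
    return bytes(i - offset for i in ids).decode("utf-8", errors="ignore")

# A typical OCR confusion ("rn" read as "m") changes only a couple of ids,
# so the model can learn corrections at the granularity where errors occur.
clean = to_byte_ids("modern")
noisy = to_byte_ids("modem")
```

Because every character maps to one or more bytes, no OCR-garbled string is ever out of vocabulary, which is one plausible reason a byte-level model suits this task.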

Fine-tuning adapts ByT5 to the specific patterns of OCR errors by training it on this specialized dataset, in both its small and base versions. Llama 7B, by contrast, is used in its pre-trained state to provide a comparative baseline. Various pre-processing techniques, such as lowercasing text and removing special characters, are applied to standardize the input and potentially improve the models’ performance. Together, the character-level and generative LLMs are used to improve OCR accuracy and text coherence.
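The pre-processing steps mentioned above could look roughly like the following sketch. The function name `normalize_ocr_text` is hypothetical, and the exact rule for what counts as a "special character" is an assumption here (anything that is neither a word character nor whitespace); the study may define it differently.

```python
import re

def normalize_ocr_text(text: str) -> str:
    """Lowercase text and strip special characters (illustrative rule set)."""
    lowered = text.lower()
    # Assumption: "special characters" = anything that is not a word
    # character (letters, digits, underscore) or whitespace.
    cleaned = re.sub(r"[^\w\s]", "", lowered)
    # Collapse whitespace runs left behind by the removed characters.
    return re.sub(r"\s+", " ", cleaned).strip()

print(normalize_ocr_text("Invoice #123:  TOTAL = $45.00!"))
```

Note the trade-off this example exposes: stripping punctuation also removes the decimal point in the amount, so this kind of normalization may not suit documents where such characters carry meaning.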

The evaluation compared the proposed method against non-LLM post-OCR error correction techniques, using an ensemble of sequence-to-sequence models as the baseline. Performance was measured by Character Error Rate (CER) reduction, along with precision, recall, and F1. The fine-tuned ByT5 base model with a context length of 50 characters achieved the best results on the custom dataset, reducing the CER by 56%. This is a clear improvement over the baseline method, which achieved a maximum CER reduction of 48% under its best conditions. The higher F1 scores of the ByT5 model were primarily due to increased recall, showing its effectiveness in correcting OCR errors in modern documents.
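For readers unfamiliar with the metric, CER is commonly computed as the character-level edit distance between the OCR output and the ground truth, divided by the ground-truth length. The sketch below assumes that definition and assumes "CER reduction" means the relative drop in CER after correction; the function names and the sample strings and values are illustrative, not taken from the study.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) between two strings."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]

def cer(hypothesis: str, reference: str) -> float:
    """Character Error Rate of `hypothesis` against the ground-truth `reference`."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(hypothesis, reference) / len(reference)

def cer_reduction(cer_before: float, cer_after: float) -> float:
    """Relative CER reduction (assumed definition): 0.5 means errors were halved."""
    if cer_before <= 0:
        raise ValueError("cer_before must be positive")
    return (cer_before - cer_after) / cer_before

# Hypothetical values: raw OCR output vs. a post-corrected version.
raw = cer("he1lo w0rld", "hello world")        # 2 errors over 11 characters
corrected = cer("hello w0rld", "hello world")  # 1 error over 11 characters
print(cer_reduction(raw, corrected))
```

Under this definition, a 56% reduction means the corrected text contains a little under half as many character errors as the raw OCR output, relative to the same ground truth.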

In conclusion, this work presents an approach to OCR post-correction built on LLMs, specifically a fine-tuned ByT5 model. The method achieves a 56% reduction in CER on modern documents, surpassing the ensemble of traditional sequence-to-sequence models used as a baseline. This demonstrates the potential of LLMs to improve text recognition systems, particularly where text quality is critical, and paves the way for further work on post-OCR error correction.

All credit for this research goes to the researchers of this project.

The post Large Language Models LLMs for OCR Post-Correction appeared first on MarkTechPost.
