Artificial intelligence, particularly natural language processing (NLP), has become central to modern technology, and large language models (LLMs) are at the forefront. These models, used for tasks such as text summarization, automated customer support, and content creation, are designed to interpret and generate human-like text. However, getting the most out of LLMs depends on effective prompt engineering: crafting precise instructions that guide the model toward the desired output. This critical and complex task requires a deep understanding of the model’s capabilities and the nuances of human language.

One of the main challenges in prompt engineering is the significant expertise and time required to design effective prompts. When the desired outputs are creative or varied, crafting prompts that accurately convey the task and capture the user’s expectations is especially difficult. The task is further complicated because many current methods refine prompts using labeled datasets, which are often hard to obtain. Users also typically need to write seed prompts by hand, which is cumbersome and does not always lead to the best prompt for a specific task. Together, these issues form a substantial barrier to using LLMs effectively, particularly for people without extensive prompt engineering experience.

Traditionally, prompt engineering tools have not adequately addressed these challenges. Most existing methods assume access to labeled data, a significant limitation for many users. These tools often operate in a zero-shot mode, relying on a single initial prompt without iterative refinement based on user feedback. This approach lacks flexibility and serves poorly the tasks that require more detailed and specific outputs. Some platforms offer marketplaces where users can purchase pre-designed prompts, but these prompts also tend to require expertise that many users do not have. As a result, there is a clear need for accessible, user-friendly tools that help users craft effective prompts without deep technical knowledge or extensive manual effort.

Researchers from IBM Research have introduced an approach called Conversational Prompt Engineering (CPE). CPE aims to simplify prompt engineering by removing the need for labeled data and seed prompts. Instead, it uses a chat-based interface in which users interact directly with an advanced chat model. The model helps users articulate their needs clearly and guides them through creating and refining prompts. The system is particularly suited to tasks that involve repetitive processing of large volumes of text, such as summarizing email threads or generating personalized advertising content.

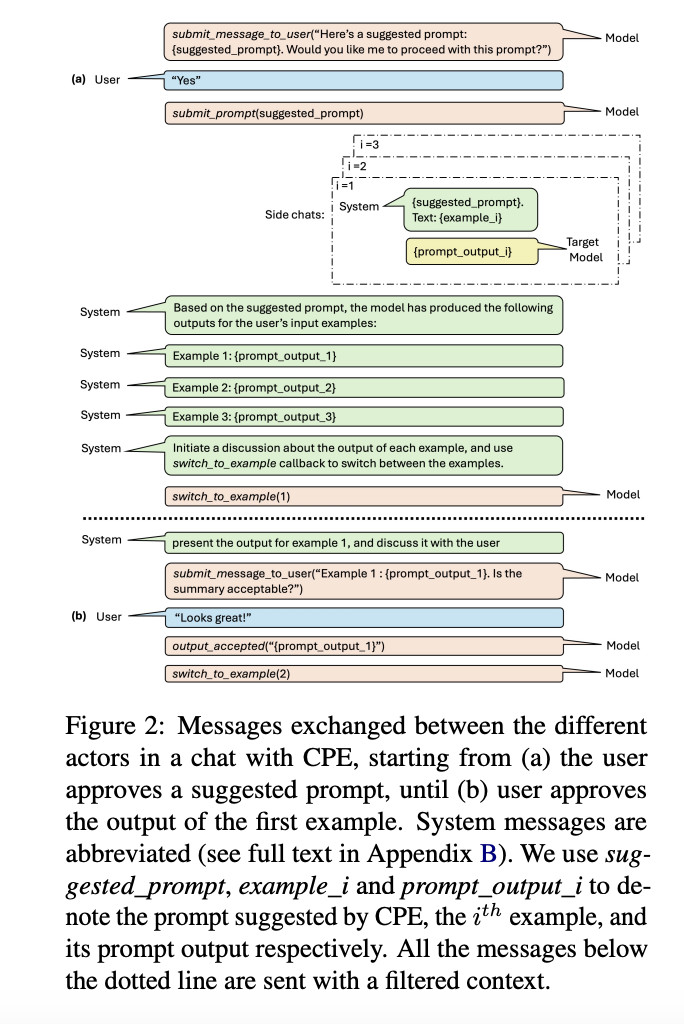

CPE operates through a structured workflow that involves several key stages. Initially, the system analyzes the user’s small set of unlabeled examples. This analysis generates questions that help clarify the task’s requirements and the user’s expectations. As the user responds to these questions, the model uses the information to draft an initial prompt. This prompt is then used to generate outputs, which the user can further refine through additional interactions with the model. The process continues iteratively until the user is satisfied with the prompt, resulting in a few-shot prompt that is highly personalized and tailored to the specific task at hand. The process is designed to be intuitive and user-friendly, making it accessible even to those without extensive experience in prompt engineering.
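To make the workflow above concrete, here is a minimal sketch of such a loop in Python. It is not IBM's implementation: the names `conversational_prompt_engineering` and `PromptState`, the prompt wording, and the stopping rule are all hypothetical. The chat model and the user are passed in as plain callables (an assumption) so the control flow can run without a real LLM. The final few-shot prompt is assembled from the refined instruction plus the user's examples paired with the outputs they approved.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical interfaces: a real system would wrap a chat LLM and a chat UI here.
Model = Callable[[str], str]                           # prompt text -> model reply
FeedbackFn = Callable[[List[str]], Optional[str]]      # outputs -> feedback, or None if satisfied


@dataclass
class PromptState:
    instruction: str
    examples: List[str]                                # the user's small set of unlabeled texts
    outputs: List[str] = field(default_factory=list)   # outputs the user reviewed last

    def few_shot_prompt(self, new_input: str) -> str:
        """Build a few-shot prompt: instruction, then (example, approved output) pairs."""
        parts = [self.instruction]
        for text, out in zip(self.examples, self.outputs):
            parts.append(f"Input:\n{text}\nOutput:\n{out}")
        parts.append(f"Input:\n{new_input}\nOutput:")
        return "\n\n".join(parts)


def conversational_prompt_engineering(
    examples: List[str],
    model: Model,
    answer_questions: Callable[[str], str],
    give_feedback: FeedbackFn,
    max_rounds: int = 10,
) -> PromptState:
    # Stage 1: the model inspects the unlabeled examples and asks clarifying questions.
    questions = model(
        "Ask questions to clarify the task for these texts:\n" + "\n---\n".join(examples)
    )
    answers = answer_questions(questions)

    # Stage 2: draft an initial instruction from the user's answers.
    instruction = model(f"Write a task instruction based on these answers:\n{answers}")
    state = PromptState(instruction=instruction, examples=examples)

    for round_idx in range(max_rounds):
        # Stage 3: apply the current instruction to every example.
        state.outputs = [
            model(f"{state.instruction}\n\nInput:\n{text}\nOutput:") for text in examples
        ]
        # Stage 4: the user reviews the outputs; None means they are satisfied.
        feedback = give_feedback(state.outputs)
        # Stop when satisfied or out of rounds, so outputs always match the final instruction.
        if feedback is None or round_idx == max_rounds - 1:
            break
        state.instruction = model(
            f"Revise this instruction:\n{state.instruction}\nUser feedback:\n{feedback}"
        )
    return state
```

In use, `model` would send its argument to a chat LLM and return the reply, while `answer_questions` and `give_feedback` would collect the user's chat responses. Calling `state.few_shot_prompt(new_text)` on the returned state then yields a prompt that can be reused on new inputs, which matches the repetitive-processing use case the article describes.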

The effectiveness of CPE was demonstrated through a user study involving 12 participants who engaged with the system to develop prompts for summarization tasks. The study revealed that, on average, it took 32 turns of interaction to reach a final prompt, with some participants achieving satisfactory prompts in as few as four turns. In 67% of cases, the initial prompt was refined through multiple iterations, indicating the value of the iterative process in improving the quality of the final prompt. The final prompts created through CPE were used to generate summaries that the participants then evaluated. These summaries were consistently rated as high quality, with participants expressing satisfaction with the prompts’ ability to meet their specific needs.

In conclusion, Conversational Prompt Engineering (CPE) simplifies prompt engineering and makes it more accessible, addressing significant challenges of traditional methods. The system generates high-quality, personalized prompts through an intuitive, chat-based interface, making it a valuable tool for a range of applications. The user study’s results underscore CPE’s effectiveness in reducing the time and effort required to create prompts while maintaining or improving output quality.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post IBM Research Introduced Conversational Prompt Engineering (CPE): A GroundBreaking Tool that Simplifies Prompt Creation with 67% Improved Iterative Refinements in Just 32 Interaction Turns appeared first on MarkTechPost.