Protein structure and sequence analysis is critical to understanding how proteins function at the molecular level. Proteins are essential molecules composed of sequences of amino acids that fold into specific 3D structures, which determine their functions in biological systems. Understanding the precise relationship between these sequences and their resulting structures is vital for numerous applications, including drug discovery, disease research, and synthetic biology. Advances in computational techniques have enabled researchers to predict protein structures from sequences alone, offering insights into the vast diversity of proteins and allowing for the design of proteins for specific tasks. This predictive capability is increasingly important because it supports exploring and manipulating the protein universe, which is essential for advancing biological research and biotechnology.

However, a significant challenge in this area is the imbalance between available protein sequence data and structural data. While databases like UniRef50 contain over 63 million protein sequences, structural databases like the Protein Data Bank (PDB) hold just over 218,000 structures, a ratio of roughly 290 sequences per structure. This disparity makes it difficult to develop accurate models that predict the structure of a protein based solely on its sequence. Although current machine-learning models can predict protein structures, they often struggle to bridge this gap effectively. Accurate and efficient predictions are crucial, as errors or inefficiencies in these models can lead to incorrect conclusions about protein function, potentially hampering progress in related fields.

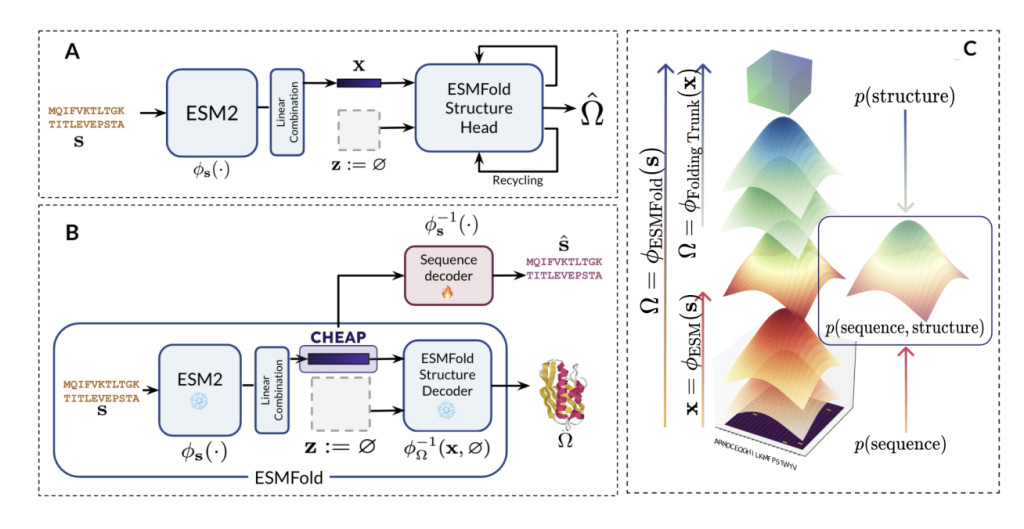

Existing methods for predicting protein structures typically involve complex machine learning models trained on data that links sequences to their corresponding structures. Models like ESMFold have made significant strides in this area by leveraging large-scale protein language models to predict structures from sequences. However, these models have substantial limitations, including the issue of massive activations: certain channels of the model's latent embeddings dominate, producing values up to 3000 times higher than others, regardless of the input sequence. This problem increases the computational resources required and reduces the efficiency and applicability of these models in downstream tasks such as protein generation, similarity search, and property prediction. The high-dimensional nature of these embeddings also makes them unwieldy, limiting their generalizability and utility in diverse biological applications.
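To make the idea of massive activations concrete, the sketch below flags channels whose average magnitude dwarfs that of a typical channel. It is an illustration only: the function name `find_massive_channels`, the `ratio` threshold, and the toy values are assumptions, not something taken from ESMFold or the CHEAP paper.

```python
from statistics import median


def find_massive_channels(embedding, ratio=100.0):
    """Return indices of channels whose mean |value| exceeds `ratio` times
    the median channel magnitude.

    `embedding` is a list of rows (one per residue), each a list of
    channel values. The threshold `ratio` is an arbitrary illustrative choice.
    """
    n_channels = len(embedding[0])
    # Mean absolute activation per channel, averaged over sequence positions.
    magnitudes = [
        sum(abs(row[c]) for row in embedding) / len(embedding)
        for c in range(n_channels)
    ]
    typical = median(magnitudes)
    return [c for c, m in enumerate(magnitudes) if m > ratio * typical]


# Toy embedding (made-up numbers): 4 residues x 5 channels,
# where channel 2 is on the order of 3000x larger than the rest.
toy = [
    [0.5, -1.0, 3000.0, 0.8, -0.6],
    [1.2, 0.7, 2900.0, -0.9, 1.1],
    [-0.8, 1.1, 3100.0, 1.0, 0.9],
    [0.9, -0.6, 3050.0, -1.2, -1.0],
]
massive = find_massive_channels(toy)
```

Using the median rather than the mean as the reference keeps the outlier channel from inflating the baseline it is compared against.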

A research team from UC Berkeley, Prescient Design & Genentech, Microsoft Research, and New York University introduced a new method called CHEAP (Compressed Hourglass Embedding Adaptations of Proteins). CHEAP addresses the limitations of existing models by compressing the latent space of sequence-to-structure models like ESMFold. This compression reduces the dimensionality of the embeddings significantly, by as much as 128 times in the channel dimension and eight times in the length dimension, while still retaining critical structural information. By doing so, CHEAP makes these embeddings more efficient to work with and applicable to a broader range of tasks, overcoming the drawbacks associated with massive activations and high-dimensional embeddings.
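As a rough worked example of what these factors mean, the sketch below computes the shape of a compressed embedding. The 1024-channel width, the 256-residue length, and the choice to round the shortened length up (as if the sequence were padded) are assumptions made for illustration; only the 128x and 8x factors come from the text above.

```python
import math


def compressed_shape(seq_len, channels, length_factor=8, channel_factor=128):
    """Shape of an embedding after shortening the length by `length_factor`
    and narrowing the channels by `channel_factor`."""
    if channels % channel_factor != 0:
        raise ValueError("channels must be divisible by channel_factor")
    # Assumption: the sequence is padded up to a multiple of length_factor.
    short_len = math.ceil(seq_len / length_factor)
    return short_len, channels // channel_factor


original = (256, 1024)  # assumed: 256 residues, 1024-channel latent
compressed = compressed_shape(*original)

# Overall reduction in the number of stored values.
reduction = (original[0] * original[1]) / (compressed[0] * compressed[1])
```

Under these assumptions a 256 x 1024 embedding shrinks to 32 x 8, so the two factors multiply: 8 x 128 = 1024 times fewer values per protein.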

The CHEAP method is built upon the Hourglass Protein Compression Transformer (HPCT) architecture, which compresses protein embeddings in both the channel dimension and the sequence length. This architecture applies a per-channel normalization scheme to combat the problem of massive activations, ensuring that the compressed embeddings maintain the structural information needed for accurate structure prediction. Continuous CHEAP embeddings retain structural details at Angstrom-scale accuracy (<2 Å) and achieve near-perfect accuracy in sequence information, even after extensive data reduction. Discrete CHEAP tokens also provide a high level of structural accuracy. CHEAP differs from other concurrent methods in that its embeddings can be obtained directly from sequence data, thereby broadening the range of usable training data for tasks requiring structural information.
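The per-channel normalization idea can be sketched as follows. The text does not say which statistics the authors use, so the z-score (mean and standard deviation per channel) below is an assumed stand-in, and `channel_stats` and `normalize` are hypothetical helper names.

```python
from statistics import mean, pstdev


def channel_stats(embeddings):
    """Per-channel mean and standard deviation, pooled over every residue
    of every embedding in the collection."""
    rows = [row for emb in embeddings for row in emb]
    n_channels = len(rows[0])
    means = [mean([row[c] for row in rows]) for c in range(n_channels)]
    stds = [pstdev([row[c] for row in rows]) for c in range(n_channels)]
    return means, stds


def normalize(embedding, means, stds, eps=1e-8):
    """Rescale each channel independently so no single channel dominates."""
    return [
        [(v - m) / (s + eps) for v, m, s in zip(row, means, stds)]
        for row in embedding
    ]


# Toy batch (made-up numbers): two proteins, two residues each, two channels.
# Channel 1 plays the role of a massive-activation channel.
batch = [
    [[1.0, 3000.0], [3.0, 3100.0]],
    [[2.0, 2900.0], [2.0, 3000.0]],
]
means, stds = channel_stats(batch)
normalized = [normalize(emb, means, stds) for emb in batch]
```

After this step both channels span a comparable range, which is the property a downstream compression model needs so that its capacity is not spent on a handful of outsized channels.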

In tests, CHEAP embeddings retained structural accuracy to within 1.34 Å root-mean-square deviation (RMSD) from the original structure, even when the channel dimension was reduced to 32. This high level of accuracy was achieved while compressing the embedding space to only eight channels for sequence retention and four channels for structure retention, far exceeding the performance of existing models. Furthermore, despite the compression, CHEAP performs competitively on functional prediction tasks, particularly for properties related to protein structure, such as solubility and β-lactamase activity prediction.
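For readers unfamiliar with the metric, RMSD is the square root of the mean squared distance between corresponding atoms. The minimal sketch below assumes the two structures are already superimposed and have matching atom order; real evaluations typically align the structures first, which is omitted here. The coordinates are made-up toy values.

```python
import math


def rmsd(coords_a, coords_b):
    """Root-mean-square deviation between two equally sized lists of
    (x, y, z) coordinates, without any superposition step."""
    if len(coords_a) != len(coords_b) or not coords_a:
        raise ValueError("coordinate lists must be non-empty and equal in length")
    squared = sum(
        (xa - xb) ** 2 + (ya - yb) ** 2 + (za - zb) ** 2
        for (xa, ya, za), (xb, yb, zb) in zip(coords_a, coords_b)
    )
    return math.sqrt(squared / len(coords_a))


# Toy example: three atoms, each displaced by exactly 1 Å along y.
reference = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
predicted = [(0.0, 1.0, 0.0), (3.8, 1.0, 0.0), (7.6, 1.0, 0.0)]
deviation = rmsd(reference, predicted)
```

Because every atom in the toy example is off by the same 1 Å, the RMSD is also 1 Å; a reported value of 1.34 Å means deviations of roughly that size averaged over the whole structure.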

In conclusion, the CHEAP method is a milestone in protein structure prediction, as it addresses the challenges posed by the high dimensionality and inefficiencies of current models. CHEAP enables more efficient and accurate predictions through its innovative compression techniques, paving the way for broader biological research and biotechnology applications. This method democratizes access to large-scale protein models and raises important questions about the intrinsic dimensionality of protein embeddings and the potential over-parameterization of existing models.

All credit for this research goes to the researchers of this project.

The post CHEAP Embeddings and Hourglass Protein Compression Transformer (HPCT): Transforming Protein Structure Prediction with Advanced Compression Techniques for Enhanced Efficiency and Accuracy appeared first on MarkTechPost.