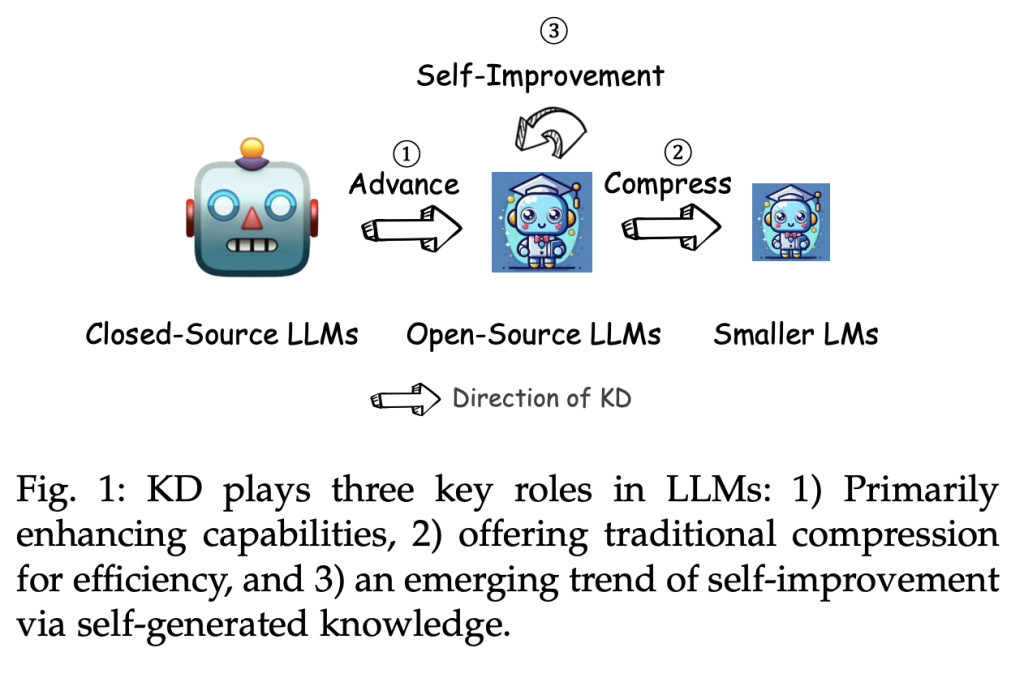

Knowledge Distillation (KD) has become a key technique for Large Language Models (LLMs), used to transfer the capabilities of proprietary models such as GPT-4 to open-source alternatives such as LLaMA and Mistral. Beyond improving the performance of open-source models, KD is used to compress them and make them more efficient without significantly sacrificing functionality. It also enables self-improvement, in which an open-source model acts as its own teacher.

Recent research presents a thorough analysis of KD’s role in LLMs, highlighting its importance for transferring advanced knowledge to smaller, less resource-intensive models. The study is structured around three pillars: algorithm, skill, and verticalisation. Each pillar covers a distinct facet of knowledge distillation: the fundamental workings of the algorithms employed, the enhancement of particular cognitive abilities within the models, and the practical application of these methods across different domains.

A Twitter user has elaborated on the study in a recent tweet. For language models, distillation is a process that condenses a large, complex model, referred to as the teacher, into a smaller and more efficient model, referred to as the student. The main objective is to transfer the teacher’s knowledge to the student so that the student performs at a level comparable to the teacher’s while using far less processing power.

This is accomplished by training the student model to mimic the teacher, either by matching the teacher’s output distributions or by matching its internal representations. Logit-based distillation and hidden states-based distillation are two techniques frequently used for this.
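To make the two objectives concrete, here is a minimal sketch in plain Python. It assumes the common formulation of logit-based distillation as a KL divergence between temperature-softened teacher and student distributions, scaled by the squared temperature, and it assumes a simple mean squared error for the hidden-state variant. The function names, the temperature value, and the toy inputs are illustrative assumptions, not taken from the study or from any particular library.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Return log-probabilities for logits softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_sum for x in scaled]


def logit_distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The temperature default is an assumed, illustrative value.
    """
    log_p = log_softmax(teacher_logits, temperature)
    log_q = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return kl * temperature ** 2


def hidden_state_loss(teacher_hidden, student_hidden):
    """Mean squared error between two hidden-state vectors of equal length."""
    if len(teacher_hidden) != len(student_hidden):
        raise ValueError("hidden states must have the same length")
    squared = [(t - s) ** 2 for t, s in zip(teacher_hidden, student_hidden)]
    return sum(squared) / len(squared)


# Toy example: one token position with a three-word vocabulary.
teacher_logits = [4.0, 1.0, 0.5]
student_logits = [2.5, 1.5, 0.2]
print("logit loss:", logit_distillation_loss(teacher_logits, student_logits))
print("hidden loss:", hidden_state_loss([0.1, 0.4], [0.0, 0.5]))
```

In practice the teacher and student often have different hidden sizes, so hidden-state matching usually needs a learned projection between the two spaces. That step is omitted here to keep the sketch short.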

The principal advantage of distillation is a substantial reduction in both model size and computational requirements, which makes it possible to deploy models in resource-constrained environments. Despite its smaller size, the student model often retains a high level of performance, closely approaching the capabilities of the larger teacher model. This efficiency is critical where memory and processing power are limited, as in embedded systems and mobile devices.

Distillation allows flexibility in the choice of the student model’s architecture. A considerably smaller model, such as StableLM-2-1.6B, can be trained using the knowledge of a bigger model, such as Llama-3.1-70B, making the larger model’s capabilities available in situations where running it directly would not be feasible. Compared with conventional training methods, distillation techniques such as those offered by Arcee-AI’s DistillKit can result in significant performance gains, frequently without the need for extra training data.
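As a rough illustration of the size gap between these two models, the sketch below estimates the memory needed just to hold the weights. It assumes the nominal parameter counts implied by the model names (70 billion and 1.6 billion) and 16-bit weights at 2 bytes per parameter. It ignores activations, the KV cache, and runtime overhead, so real requirements would be higher. The helper name is hypothetical.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Nominal parameter counts read off the model names (an assumption).
teacher_gb = weight_memory_gb(70e9)   # Llama-3.1-70B
student_gb = weight_memory_gb(1.6e9)  # StableLM-2-1.6B

print(f"teacher weights: ~{teacher_gb:.1f} GB")
print(f"student weights: ~{student_gb:.1f} GB")
print(f"reduction factor: ~{teacher_gb / student_gb:.1f}x")
```

Under these assumptions the teacher needs about 140 GB for its weights and the student about 3.2 GB, which is the kind of difference that decides whether a model fits on a single consumer device.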

In conclusion, this study is a useful resource for researchers, providing a thorough summary of state-of-the-art approaches in knowledge distillation and recommending possible directions for further investigation. By bridging the gap between proprietary and open-source LLMs, this work highlights the potential for creating AI systems that are more powerful, accessible, and efficient.

Check out the Related Paper. All credit for this research goes to the researchers of this project.

The post Understanding Language Model Distillation appeared first on MarkTechPost.
