Calling several Large Language Model (LLM) providers from one application is complex: each provider has its own request and response format, rate limits, and pricing. Without a consistent interface across providers, operations are hard to streamline, particularly in enterprise environments where efficiency and cost management are critical.

Existing solutions for managing LLM API calls typically involve manual integration of different APIs, each with its own format and response structure. Some platforms offer limited support for fallback mechanisms or unified logging, but these tools often lack the flexibility or scalability to manage multiple providers efficiently.

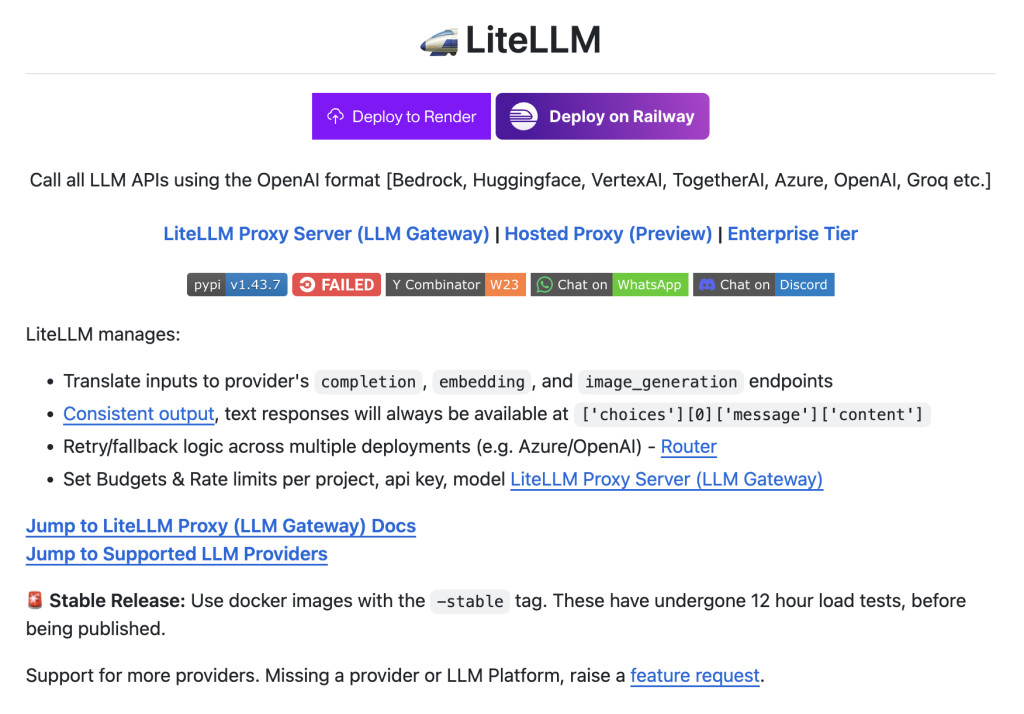

Introducing LiteLLM

The LiteLLM Proxy Server addresses these challenges by providing a unified interface for calling LLM APIs in one consistent format, regardless of the provider. It supports a wide range of LLM providers, including OpenAI, Hugging Face, Azure, and Google Vertex AI, and translates inputs and outputs to a standard format. Developers can therefore switch between providers without changing the core logic of their applications. LiteLLM also offers retry and fallback mechanisms, budget and rate limit management, and logging and observability through integrations with tools like Lunary and Helicone.
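To make the fallback idea concrete, here is a minimal Python sketch. It is not LiteLLM's implementation, only an illustration of the pattern. It assumes a hypothetical `call(model, messages)` function that accepts OpenAI-style chat messages and returns text; `complete_with_fallback`, `fake_call`, and the model names are illustrative names introduced here, not part of any library.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# OpenAI-style chat message, e.g. {"role": "user", "content": "hi"}
Message = Dict[str, str]
# Hypothetical signature: (model name, messages) -> response text
CompletionFn = Callable[[str, List[Message]], str]


def complete_with_fallback(
    call: CompletionFn,
    models: Sequence[str],
    messages: List[Message],
) -> Tuple[str, str]:
    """Try each model in order; return (model_used, text) from the first success."""
    errors = []
    for model in models:
        try:
            return model, call(model, messages)
        except Exception as exc:  # a real client would catch narrower error types
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def fake_call(model: str, messages: List[Message]) -> str:
    """Stand-in provider call (assumption): 'azure/' models simulate an outage."""
    if model.startswith("azure/"):
        raise TimeoutError("simulated outage")
    return f"[{model}] echo: {messages[-1]['content']}"


if __name__ == "__main__":
    used, text = complete_with_fallback(
        fake_call,
        ["azure/my-deployment", "gpt-4o-mini"],
        [{"role": "user", "content": "hi"}],
    )
    print(used, text)
```

Because the request format is the same for every model, the caller only changes the model string. With LiteLLM installed, a thin wrapper around `litellm.completion` that returns the message text could plausibly be passed in place of `fake_call`.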

LiteLLM supports both synchronous and asynchronous API calls, including streaming responses. It can also load-balance requests across multiple deployments and provides tools for tracking spending and managing API keys securely. These features make it suitable for enterprise-level applications that depend on multiple LLM providers. Its integrations with logging and monitoring tools give developers visibility into, and control over, their API usage.
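As a rough illustration of asynchronous calls combined with load balancing, the sketch below spreads concurrent requests across deployments in round-robin order. It uses only the Python standard library and does not show how LiteLLM itself routes requests. `RoundRobinBalancer`, `fake_acompletion`, `run_batch`, and the deployment names are hypothetical names introduced for this example.

```python
import asyncio
import itertools
from typing import List, Sequence


class RoundRobinBalancer:
    """Hands out deployment names in a fixed rotating order."""

    def __init__(self, deployments: Sequence[str]) -> None:
        if not deployments:
            raise ValueError("at least one deployment is required")
        self._cycle = itertools.cycle(deployments)

    def next_deployment(self) -> str:
        return next(self._cycle)


async def fake_acompletion(deployment: str, prompt: str) -> str:
    """Stand-in for an async provider call (assumption, no network access)."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"{deployment} -> {prompt}"


async def run_batch(balancer: RoundRobinBalancer, prompts: Sequence[str]) -> List[str]:
    """Assign each prompt a deployment, then run all calls concurrently."""
    tasks = [fake_acompletion(balancer.next_deployment(), p) for p in prompts]
    # gather returns results in the same order as the submitted tasks
    return await asyncio.gather(*tasks)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["deployment-a", "deployment-b"])
    for line in asyncio.run(run_batch(balancer, ["q1", "q2", "q3"])):
        print(line)
```

Round-robin is the simplest possible policy; a production router would typically also weigh rate limits, latency, and failures when choosing a deployment.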

In conclusion, LiteLLM offers a comprehensive solution for managing API calls across various LLM providers, simplifying the development process, and providing essential tools for cost control and observability. By offering a unified interface and supporting a wide range of features, it helps developers streamline their workflows, reduce complexity, and ensure that their applications are both efficient and scalable.

The post LiteLLM: Call 100+ LLMs Using the Same Input/Output Format appeared first on MarkTechPost.
