Natural Language Processing (NLP), despite its progress, faces the persistent challenge of hallucination, where models generate incorrect or nonsensical information. Researchers have introduced Retrieval-Augmented Generation (RAG) systems to mitigate this issue by incorporating external information retrieval to enhance the accuracy of generated responses.

The problem, however, lies in the reliability and effectiveness of RAG systems in providing accurate responses across different domains. Existing benchmarks focus primarily on general knowledge and fall short in evaluating RAG models in specialized fields such as finance, healthcare, and law. This limitation stems from the difficulty of curating high-quality datasets that comprehensively test a model's ability to handle domain-specific information.

Current methods for evaluating RAG systems include established NLP metrics such as F1, BLEU, ROUGE-L, and exact match (EM) for answer generation, and Hit Rate, mean reciprocal rank (MRR), and normalized discounted cumulative gain (NDCG) for retrieval. More recent approaches use LLM-generated data to evaluate contextual relevance, faithfulness, and informativeness. However, these metrics often lack the nuance needed to assess the generative capabilities of RAG systems in vertical domains. A more robust evaluation framework is therefore needed to address these shortcomings and provide a detailed assessment of RAG performance in specialized areas.
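
To make the traditional metrics concrete, here is a minimal Python sketch of four of them: exact match and token-level F1 for answers, and Hit Rate@k and MRR for retrieval. It assumes simple lowercase whitespace tokenization (standard implementations such as the SQuAD evaluation script also strip punctuation and articles), and the function names are illustrative rather than taken from any library.

```python
from collections import Counter


def normalize(text: str) -> list[str]:
    # Simplified normalization (assumption): lowercase, then split on whitespace.
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    # 1.0 only if the normalized token sequences are identical.
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    # Harmonic mean of token precision and recall, counting shared tokens as a multiset.
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def hit_rate(ranked: list[list[str]], relevant: list[set[str]], k: int) -> float:
    # Share of queries with at least one relevant document in the top k results.
    hits = sum(1 for docs, rel in zip(ranked, relevant) if rel & set(docs[:k]))
    return hits / len(ranked)


def mrr(ranked: list[list[str]], relevant: list[set[str]]) -> float:
    # Mean of 1/rank of the first relevant document (0 when none is retrieved).
    total = 0.0
    for docs, rel in zip(ranked, relevant):
        for rank, doc in enumerate(docs, start=1):
            if doc in rel:
                total += 1.0 / rank
                break
    return total / len(ranked)


ranked = [["d3", "d1", "d7"], ["d2", "d9", "d4"]]
relevant = [{"d1"}, {"d5"}]
print(token_f1("the capital is Paris", "Paris"))  # 0.4
print(hit_rate(ranked, relevant, k=3), mrr(ranked, relevant))  # 0.5 0.25
```

Overlap-based scores like these reward surface agreement, which is part of why they can miss domain-specific factual errors.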

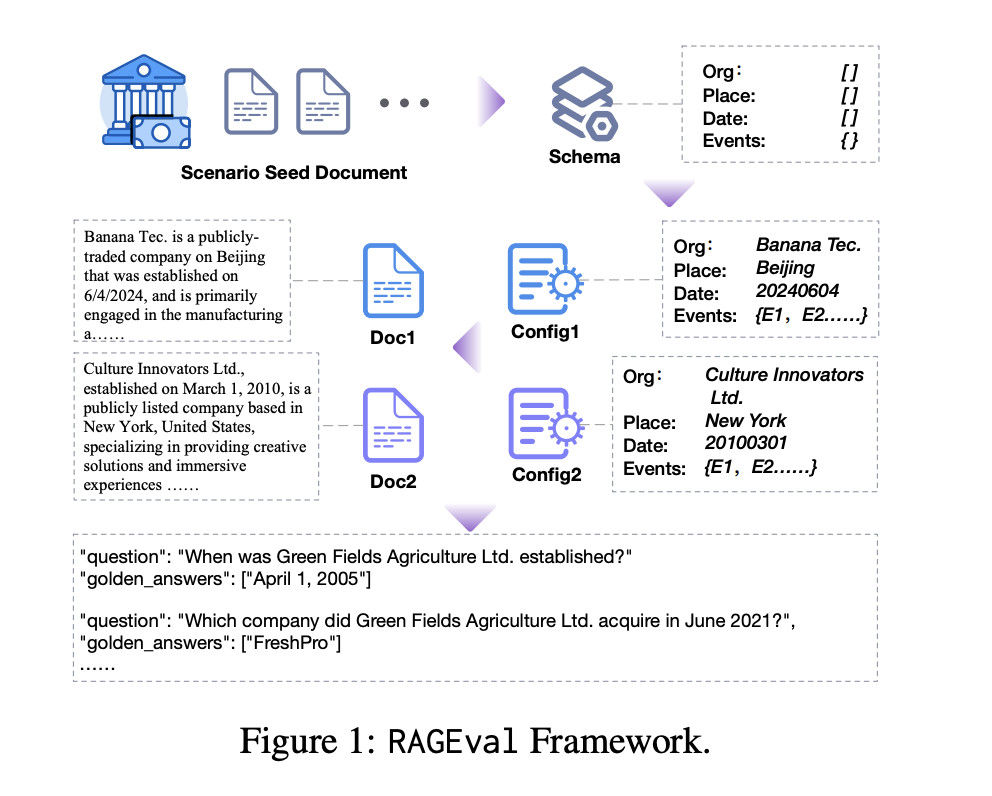

Researchers from Tsinghua University, Beijing Normal University, University of Chinese Academy of Sciences, and Northeastern University introduced the RAGEval framework to address these challenges. The framework automatically generates evaluation datasets tailored to specific scenarios in various vertical domains. The process begins by summarizing a schema from seed documents, then derives configurations from that schema, generates diverse documents from those configurations, and constructs question-answer pairs based on them. The framework then evaluates model responses using new metrics focused on factual accuracy.
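
The data flow from seed documents to question-answer pairs can be sketched as follows. This is a toy illustration, not RAGEval's implementation: in the framework each stage is driven largely by an LLM, whereas here seed documents are assumed to be field-to-value dictionaries, and `summarize_schema`, `render_document`, and `make_qars` are hypothetical stand-ins that use simple rules and templates.

```python
from dataclasses import dataclass


@dataclass
class Schema:
    # Domain-specific elements every generated document must cover.
    fields: list[str]


@dataclass
class QAR:
    question: str
    answer: str
    references: list[str]


def summarize_schema(seed_docs: list[dict[str, str]]) -> Schema:
    # Stand-in for LLM summarization: keep only the fields shared by all seed documents.
    common = set(seed_docs[0])
    for doc in seed_docs[1:]:
        common &= set(doc)
    return Schema(fields=sorted(common))


def make_configuration(schema: Schema, values: dict[str, str]) -> dict[str, str]:
    # Assign one concrete value to every schema field (supplied by the caller here).
    return {name: values[name] for name in schema.fields}


def render_document(config: dict[str, str]) -> str:
    # Stand-in for LLM document generation: one templated sentence per configured fact.
    return " ".join(f"The {name} is {value}." for name, value in config.items())


def make_qars(config: dict[str, str], document: str) -> list[QAR]:
    # One question per configured fact; the supporting sentence serves as the reference.
    qars = []
    for name, value in config.items():
        sentence = f"The {name} is {value}."
        if sentence not in document:
            raise ValueError(f"document does not state the {name}")
        qars.append(QAR(question=f"What is the {name}?", answer=value, references=[sentence]))
    return qars


seeds = [
    {"company": "Alpha Ltd", "event": "a merger", "date": "2021-03-02"},
    {"company": "Beta Inc", "event": "an IPO"},
]
schema = summarize_schema(seeds)
config = make_configuration(schema, {"company": "Acme Corp", "event": "an acquisition"})
document = render_document(config)
for qar in make_qars(config, document):
    print(qar)
```

Because every question is generated from a known configuration value, the reference answer is correct by construction, which is what lets the generated dataset serve as ground truth.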

The proposed method, RAGEval, employs a “schema-configuration-document-QAR-keypoint” pipeline to ensure the robustness and reliability of the evaluation process. This involves generating a schema that encapsulates essential domain-specific knowledge, creating configurations from this schema, and producing diverse documents from those configurations. These documents are then used to generate questions and reference answers, forming QAR triples. Key points are extracted from the reference answers, and model responses are scored against them for completeness, hallucination, and irrelevance. This approach ensures that the evaluation datasets are rich in factual information and logically coherent.
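
The key-point metrics can be illustrated with a short Python sketch. It assumes that each key point extracted from a reference answer has already been labeled by a judge (RAGEval uses an LLM for this step) as covered, contradicted, or missing in the model's response, and that each metric is the share of key points in one category. The label names, the function names, and the macro-averaging across questions are assumptions made for illustration.

```python
from collections import Counter
from statistics import mean
from typing import Literal

# Hypothetical label set a judge assigns to each key point of a reference answer.
Label = Literal["covered", "contradicted", "missing"]


def keypoint_scores(labels: list[Label]) -> dict[str, float]:
    if not labels:
        raise ValueError("at least one key point is required")
    counts = Counter(labels)
    n = len(labels)
    return {
        "completeness": counts["covered"] / n,        # stated correctly in the response
        "hallucination": counts["contradicted"] / n,  # contradicted by the response
        "irrelevance": counts["missing"] / n,         # never addressed by the response
    }


def dataset_scores(per_question: list[list[Label]]) -> dict[str, float]:
    # Macro-average (assumption): score each question, then average over questions.
    if not per_question:
        raise ValueError("at least one question is required")
    scores = [keypoint_scores(labels) for labels in per_question]
    return {metric: mean(s[metric] for s in scores) for metric in scores[0]}


labels = ["covered", "covered", "contradicted", "missing"]
print(keypoint_scores(labels))
# {'completeness': 0.5, 'hallucination': 0.25, 'irrelevance': 0.25}
```

Under this formulation the three scores for a question always sum to 1, so a response can only raise its Completeness by covering key points it previously contradicted or omitted.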

A hybrid approach is used to generate these configurations, combining rule-based and LLM-based methods to assign values to the schema elements. Rule-based methods ensure high accuracy and consistency, particularly for structured data, while LLMs are used to generate more complex or diverse content. This method produces a wide range of high-quality, diverse configurations, ensuring the generated documents are accurate and contextually relevant.
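
A hybrid configuration step might look like the sketch below. The split is an assumption for illustration: fields typed as dates or monetary amounts are filled by seeded random rules, while all other fields are delegated to an LLM through a caller-supplied `llm_generate` function, replaced here by a stub. The schema format and every name in the code are hypothetical.

```python
import random
from datetime import date, timedelta
from typing import Callable

# Field types filled by deterministic rules; everything else goes to the LLM (assumption).
RULE_BASED_TYPES = {"date", "amount"}


def rule_based_value(field_type: str, rng: random.Random) -> str:
    if field_type == "date":
        # Uniformly sample a day between 2020-01-01 and 2023-12-31.
        span = (date(2024, 1, 1) - date(2020, 1, 1)).days
        return (date(2020, 1, 1) + timedelta(days=rng.randrange(span))).isoformat()
    if field_type == "amount":
        return f"{rng.randint(1, 999)} million USD"
    raise ValueError(f"no rule for field type {field_type!r}")


def build_configuration(
    schema: dict[str, str], llm_generate: Callable[[str], str], seed: int = 0
) -> dict[str, str]:
    rng = random.Random(seed)  # seeded so rule-based values are reproducible
    config: dict[str, str] = {}
    for name, field_type in schema.items():
        if field_type in RULE_BASED_TYPES:
            config[name] = rule_based_value(field_type, rng)
        else:
            # Pass the values fixed so far so the free text stays consistent with them.
            prompt = f"Write a plausible {name} consistent with: {config}"
            config[name] = llm_generate(prompt)
    return config


def fake_llm(prompt: str) -> str:
    # Stub standing in for a real LLM call.
    return "Acme Corp agreed to acquire a rival logistics firm."


schema = {"event_date": "date", "deal_size": "amount", "event_summary": "text"}
config = build_configuration(schema, fake_llm, seed=42)
print(config)
```

Filling structured fields by rule keeps them valid and consistent, while generating free-text fields only after those values are fixed lets the LLM build on them rather than contradict them.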

Experimental results demonstrated that the RAGEval framework is highly effective in generating accurate, safe, and rich content across various domains. The human evaluation results highlighted the robustness of this method, showing that the generated documents were clear, specific, and closely resembled real-world documents. Moreover, the validation of automated evaluation metrics showed a high degree of alignment with human judgment, confirming the reliability of these metrics in reflecting model performance.

GPT-4o performed best overall, achieving the highest Completeness scores: 0.5187 in Chinese and 0.6845 in English. However, the gap with top-performing open-source models, such as Qwen1.5-14B-chat and Llama3-8B-Instruct, was relatively small. Qwen1.5-14B-chat achieved a Completeness score of 0.4926 in Chinese, while Llama3-8B-Instruct scored 0.6524 in English. These results suggest that, with further advances, open-source models could close the performance gap with proprietary models.
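
The size of that gap can be checked with a few lines of Python using only the scores reported above. The relative gap, defined here as the absolute gap divided by GPT-4o's score, is our own framing. Note that each language is compared against a different open-source model.

```python
# Completeness scores as reported above: (language, proprietary, score, open-source, score).
results = [
    ("Chinese", "GPT-4o", 0.5187, "Qwen1.5-14B-chat", 0.4926),
    ("English", "GPT-4o", 0.6845, "Llama3-8B-Instruct", 0.6524),
]


def completeness_gap(proprietary: float, open_source: float) -> tuple[float, float]:
    # Absolute gap, and the gap as a fraction of the proprietary model's score.
    absolute = proprietary - open_source
    return absolute, absolute / proprietary


for language, p_name, p_score, o_name, o_score in results:
    abs_gap, rel_gap = completeness_gap(p_score, o_score)
    print(f"{language}: {p_name} leads {o_name} by {abs_gap:.4f} ({rel_gap:.1%})")
# Chinese: GPT-4o leads Qwen1.5-14B-chat by 0.0261 (5.0%)
# English: GPT-4o leads Llama3-8B-Instruct by 0.0321 (4.7%)
```

In both languages the leading open-source model trails GPT-4o by only about 5% of GPT-4o's Completeness score.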

In conclusion, the RAGEval framework offers a robust solution for evaluating RAG systems, addressing the limitations of existing benchmarks by focusing on domain-specific factual accuracy. This approach can help improve the reliability of RAG models across industries and paves the way for future improvements in both proprietary and open-source models. Researchers and developers can use frameworks like RAGEval to verify that their models meet the specific needs of their application domains.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post RAGEval: An AI Framework for Automatically Generating Evaluation Datasets to Evaluate the Knowledge Usage Ability of Different LLMs in Different Scenarios appeared first on MarkTechPost.
