Large Language Models (LLMs) are advancing rapidly, and their architectures are growing more complex. The high cost of running them has been a major barrier to adoption across industries, and businesses and developers have been hesitant to invest because of the substantial operational expenses. A significant portion of these costs comes from repeatedly processing the same input data, or “context”: many user inputs, particularly in applications like customer support chatbots, reuse similar patterns or prefixes. LLM services typically process this repeated data every time it appears, which adds unnecessary computation and cost and limits the accessibility and scalability of these models.

Current LLM APIs typically process every user input afresh, even when it repeats or is identical to an earlier one. This wastes both compute and money. DeepSeek addresses the issue with its Context Caching on Disk technology, which caches frequently used input context on a distributed disk array rather than in more expensive memory. The cached content is then reused for subsequent inputs that share an identical prefix, so it does not have to be recomputed. This reduces service latency and cuts overall usage costs, with potential savings of up to 90% for users.
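
The prefix-reuse idea can be illustrated with a toy sketch. This is not DeepSeek's implementation: the `PrefixCache` class, the `BLOCK` size, and the in-memory dictionary standing in for a distributed disk array are all assumptions made for illustration. It only shows how a request that shares a leading run of tokens with an earlier request can skip recomputing that run.

```python
from typing import Dict, List, Tuple

BLOCK = 4  # hypothetical storage unit in tokens, chosen small for the demo


class PrefixCache:
    """Toy prefix cache: maps block-aligned token prefixes to a saved state."""

    def __init__(self) -> None:
        # A dict stands in for the disk array described in the article.
        self._store: Dict[Tuple[str, ...], str] = {}

    def insert(self, tokens: List[str]) -> None:
        # Save every block-aligned prefix so later requests can reuse any of them.
        for end in range(BLOCK, len(tokens) + 1, BLOCK):
            self._store.setdefault(tuple(tokens[:end]), f"state-for-{end}-tokens")

    def longest_hit(self, tokens: List[str]) -> int:
        # Return how many leading tokens are already cached (0 if none).
        usable = len(tokens) - len(tokens) % BLOCK
        for end in range(usable, 0, -BLOCK):
            if tuple(tokens[:end]) in self._store:
                return end
        return 0


system = "You are a helpful support agent for Acme".split()  # 8 tokens
first = system + "Where is my order ?".split()
second = system + "How do I reset my password ?".split()

cache = PrefixCache()
hit_before = cache.longest_hit(first)   # 0: nothing cached yet
cache.insert(first)
hit_after = cache.longest_hit(second)   # 8: the shared system prompt is reused
print(hit_before, hit_after)
```

Only the shared prefix counts as a hit here. The two user questions differ, so everything after the system prompt in the second request would still be computed fresh.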

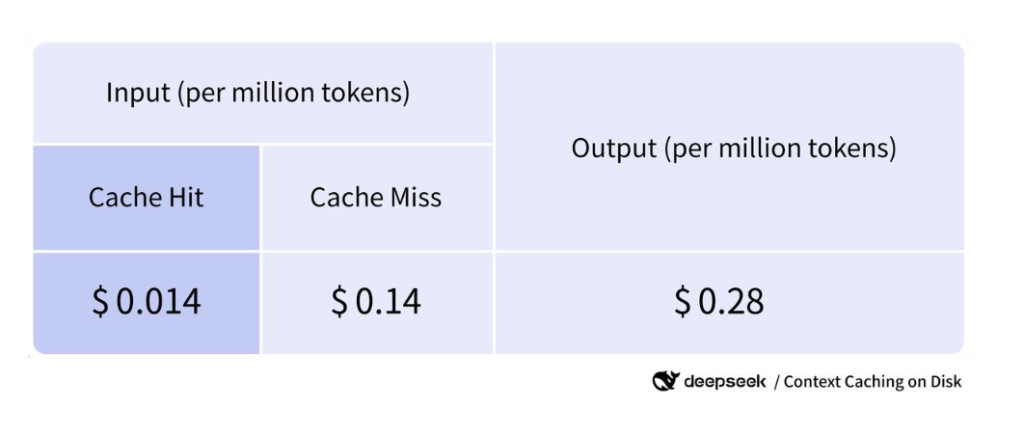

DeepSeek’s Context Caching on Disk technology works by first analyzing incoming requests to identify patterns and recurring contexts. Frequently used context is stored on a distributed disk array, and when a new request arrives, the system checks the cache for a matching context. If a match is found, the cached data is retrieved and reused instead of being recomputed. The process is managed dynamically to balance performance and storage efficiency. This approach accelerates response times, cutting first-token latency from 13 seconds to just 500 milliseconds in some cases, and it also increases the system’s throughput, so it can handle a higher volume of requests simultaneously. Costs drop sharply as well: the service charges only $0.014 per million tokens for cache hits, compared to $0.14 per million for tokens that miss the cache.
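
To make the pricing concrete, here is a minimal sketch of the arithmetic using the two per-million-token prices quoted above. The function names and the idea of a single blended `hit_ratio` are assumptions for illustration; actual billing may be computed differently.

```python
PRICE_HIT = 0.014   # USD per million input tokens on a cache hit (from the article)
PRICE_MISS = 0.14   # USD per million input tokens on a cache miss (from the article)


def input_cost(total_tokens: int, hit_ratio: float) -> float:
    """Input cost in USD when a fraction `hit_ratio` of tokens hits the cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    hit_tokens = total_tokens * hit_ratio
    miss_tokens = total_tokens - hit_tokens
    return (hit_tokens * PRICE_HIT + miss_tokens * PRICE_MISS) / 1_000_000


def saving(hit_ratio: float) -> float:
    """Fraction of input cost saved relative to no caching at all."""
    baseline = input_cost(1_000_000, 0.0)
    return 1.0 - input_cost(1_000_000, hit_ratio) / baseline


for ratio in (0.0, 0.5, 1.0):
    print(f"hit ratio {ratio:.0%}: saving {saving(ratio):.0%}")
```

Under these assumptions the saving scales linearly with the hit ratio: a 50% hit ratio saves 45% of input cost, and the 90% figure is reached only when every input token is served from the cache.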

In conclusion, DeepSeek’s Context Caching on Disk represents a significant advancement in the field of LLMs, addressing the critical issue of high operational costs through disk-based caching. It reduces computational expenses and improves system performance, making LLM technology more accessible and scalable. By reducing latency and increasing throughput, it could broaden access to LLMs and stimulate new applications across various industries.

The post DeepSeek API Introduces Context Caching on Disk: Reducing Input Token Price to 1/10 appeared first on MarkTechPost.