Migrating your on-premises MySQL database to Amazon Aurora with MySQL compatibility can significantly enhance your database’s performance, scalability, and manageability. AWS Database Migration Service makes this migration process straightforward and efficient.

Homogeneous data migrations in AWS Database Migration Service (AWS DMS) simplify the migration of on-premises databases to their Amazon Relational Database Service (Amazon RDS) equivalents. For the list of supported source databases, see Sources for DMS homogeneous data migrations. For the list of supported target databases, see Targets for DMS homogeneous data migrations.

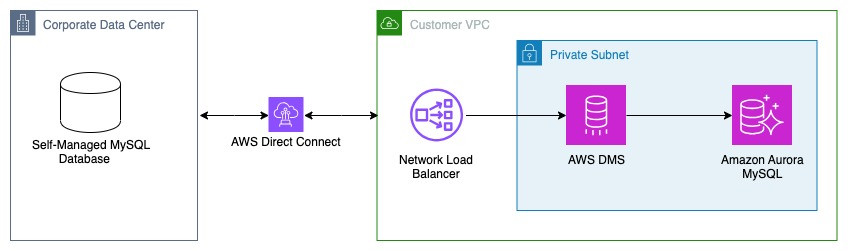

In this post, we guide you through the steps of performing a homogeneous migration from an on-premises MySQL database to Amazon Aurora MySQL using AWS DMS homogeneous data migrations over a private network using network load balancer.

Solution overview

Homogeneous data migrations are serverless and allow you to migrate data between the same database engines, such as moving from a MySQL instance to an Aurora MySQL instance. With homogeneous data migrations, you can migrate data, table partitions, data types, and secondary objects such as functions, stored procedures, and so on.

For homogeneous data migrations of the full load and change data capture (CDC) type, AWS DMS uses mydumper to read data from your source database and store it on the disk attached to the serverless environment. After DMS reads all your source data, it uses myloader in the target database to restore your data. For more details, refer to the MySQL Data Dumper project page.

After AWS DMS completes the full load, it sets up binary log replication with the binary log position set to the start of the full load. For more information, see Migrating data from MySQL databases with homogeneous data migrations in AWS DMS. We recommend that you review the Limitations for homogeneous data migrations before migrating your on-premises MySQL database to Aurora MySQL.

For homogeneous data migrations, AWS DMS connects to your source database within the public network. For more details, see Using an On-Premises Source Data Provider. However, connectivity to a source database within a public network is not always possible. In this blog we will explain how to overcome this limitation by utilization network load balancer in AWS.

The following diagram shows the architectural overview of migrating a on-premises MySQL database to Aurora MySQL using AWS DMS homogeneous data migrations and with a network load balancer:

You can use the same process to migrate an Amazon RDS for MySQL or self-managed MySQL to Amazon Aurora hosted in a different Amazon Virtual Private Cloud (Amazon VPC).

To implement this solution, use the following steps (explained in detail below):

Prepare the source database.

Prepare the target database.

Create secrets for the source and target databases in AWS Secrets Manager.

Create an AWS DMS subnet group.

Create a network load balancer.

Create an instance profile.

Create data providers.

Create a migration project.

Initiate data migrations.

Monitor replication.

Perform cutover.

Clean up.

Prerequisites

Make sure you meet the following prerequisites:

An active AWS account.

The on-premises and the AWS account must have connectivity using either AWS Direct Connector AWS Site-to-Site VPN. For more information, see Network-to-Amazon VPC connectivity options.

The on-premises security firewall should allow incoming and outgoing traffic between the on-premises network and the AWS VPC CIDR range.

An AWS Identity and Access Management (IAM) policy and role for homogeneous data migrations.

An Aurora MySQL database instance in the target AWS account.

We recommend that you don’t use homogeneous data migrations to migrate data from a higher database version to a lower database version.

For the example in this post, we use the following configuration:

Target Aurora MySQL DB cluster: aurora-mysql-target-01

VPC security group associated with the Aurora MySQL DB cluster:Â data-migration-sg

Binary log format: ROW

Amazon VPC: target-vpc

VPC CIDR range of AWS Account: 16.0.0.0/16

IAM role for AWS DMS homogeneous data migrations:Â HomogeneousDataMigrationRole

Prepare the source database

In this section, you prepare the source database for homogeneous data migrations.

Create a database user

To run homogeneous data migrations, you must use a database user with the required privileges for replication. Use the following script to create a database user with the required permissions in your MySQL database.

For more information on database user permissions, see Using a MySQL compatible database as a source for homogeneous data migrations in AWS DMS.

Enable binary Logging and other required parameters

To use CDC, make sure to enable binary logging on the source database. For more information on enabling the binary logging, see Using a self-managed MySQL compatible database as a source for homogeneous data migrations.

Modify the MySQL configuration

In this post, you use network load balancer to connect to the source on-premises database. A network load balancer’s repeated health checks could cause connection issues while connecting to a source on-premises database during homogeneous data migrations in AWS DMS and you can see an error message like “Host 10.x.x.x is blocked because of many connection errors unblock with mysqladmin flush-hosts.†To resolve this error, you can do one of the following:

Enable the skip_name_resolve parameter in the source on-premises MySQL database. Setting skip_name_resolve =1 completely avoids the DNS host name lookup and so it never blocks the NLB hosts.

Note: skip_name_resolve is a static parameter so modifying this value requires a reboot. You should be aware that the server uses only IP addresses and not host names to match connecting hosts to rows in the MySQL grant tables. For more information, see DNS Lookups and the Host Cache.

Increase the value for parameter max_connect_errors and run flush hosts by creating a MySQL event in the source on-premises MySQL database. For more information, see MySQL Documentation.

Network configuration

The on-premises security firewall should allow incoming and outgoing traffic between the on-premises network and the AWS VPC CIDR range.

Prepare the target database

Create a database user

AWS DMS requires a database user with certain permissions to migrate data into your target Aurora MySQL database. For more information on creating a database user and permissions, see Using a MySQL compatible database as a target for homogeneous data migrations in AWS DMS. In this post, you use the following script to create a database user with the required permissions in your MySQL target database.

AWS DMS will assign this user as DEFINER of MySQL database objects. For limitations on username and password when using a MySQL compatible database as a target for homogeneous data migrations, see Limitations for using a MySQL compatible database as a target for homogeneous data migrations.

Network configuration

Update the inbound rule of the VPC security group data-migration-sg attached to the target Aurora MySQL cluster by adding the CIDR range of the Amazon VPC where the Aurora MySQL cluster is located. However, this rule isn’t needed if you use AWS DMS only to perform full load. AWS DMS also adds an inbound rule in the security group attached to Aurora MySQL and the instance profile. Make sure to not delete or modify that rule. The following shows the sample Inbound Rules added in the VPC security group data-migration-sg attached to the target Aurora MySQL cluster:

Modify the DB cluster parameter group

Modify log_bin_trust_function_creators to 1 in the custom DB cluster parameter group associated with your Aurora MySQL DB cluster. For more information, see Modifying parameters in a DB cluster parameter group. Changing this parameter allows AWS DMS to create Functions and Triggers in Aurora MySQL database as part of the data migration.

Create an AWS DMS subnet group

Create a subnet group for your AWS DMS instance profile. For more information, see Creating a subnet group for an AWS DMS migration project. In this post, you create a subnet group using private subnets on AWS VPC.

Create a Network load balancer

In homogeneous data migrations, AWS DMS connects to your source data provider within the public network. For more information, see Setting up a network for homogeneous data migrations in AWS DMS. However, you can Create a Network Load Balancer and allow DMS to connect to the source database within the private network. In this post, the network load balancer registers the IP address of the source on-premises database host, if the source database host changes (for example due to host replacement or failover) you must modify the network load balancer configuration.

Configure a target group

Open the AWS Management Console and go to Amazon Elastic Compute Cloud (Amazon EC2).

In the navigation pane, choose Target Groups.

Choose Create target group.

For the Basic configuration pane, do the following:

For Choose a target type, select IP addresses.

For Target group name, enter mysql-migration-tg.

For Protocol, choose TCP.

For Port, enter 3306.

For IP address type, select IPv4.

For VPC, select target-vpc.

For Health check protocol, select TCP.

Proceed with default configuration for the rest of the settings.

Choose Next.

Register targets

You must register your targets to ensure that your load balancer can route traffic to them.

For Network, select other private IP address.

For Availability Zone, select All.

For Enter a private IP address, enter the private IP address of source database host.

For Port, enter 3306.

Choose Include as pending below.

Configure a load balancer and a listener

Open the Amazon EC2 console

In the navigation pane, choose Load Balancers.

Choose Create load balancer.

Under Network Load Balancer, choose Create.

Basic configuration

For Load balancer name, enter mysql-migration-nlb.

For Scheme, select Internal.

For IP address type, select IPv4.

Network mapping

For VPC, select the VPC target-vpc that you used for your target Aurora MySQL DB cluster.

Mappings

Select Availability Zones and the corresponding Private subnets that you used for the Aurora MySQL DB cluster and AWS DMS subnet group.

For Private IPv4 address, select Assigned from CIDR.

For Security groups, select the security group data-migration-sg that you used for your target Aurora MySQL DB cluster.

Listeners and routing

For Protocol, choose TCP.

For Port, enter 3306.

For Default action, choose target group mysql-migration-tg.

Proceed with default configuration for rest of settings.

Test the load balancer

Test the load balancer by connecting to the on-premises source MySQL database using the DNS name of the network load balancer. You can use the MySQL client installed on any Amazon EC2 hosted in the same VPC that you used for the network load balancer and connect to the source database.

Create secrets for the source and target databases in Secrets Manager

You create the secret for source database user app_user in Secrets Manager. Use the DNS name of the network load balancer(mysql-migration-nlb)for the source database host.

Create the secret for the target database user in Secrets Manager.

Create an instance profile

Create an AWS DMS instance profile to specify network and security settings for the database migration. You use this instance profile in a later step.

Open the AWS DMS console.

In the navigation pane, choose Instance profiles.

Choose Create instance profile.

For Name, enter dms-instance-profile-01.

For Description, enter AWS DMS Instance Profile.

For Network type, choose IPv4.

For Virtual private cloud (VPC) for IPv4, choose VPC target-vpc from the drop-down.

For Subnet group, select dms-subnet-group.

For VPC security groups, select data-migration-sg.

ForAssign public IP, select No.

For AWS KMS Key, select the required KMS key.

Create data providers

Create AWS DMS data providers for the source on-premises MySQL database and the target Aurora MySQL database. The data providers describe your source and target databases. You use these data providers at a later step to run the data migrations.

Source data provider

Open the AWS DMS console.

In the navigation pane, choose Data providers.

Choose Create data provider.

For Name, enter source-mysql.

For Engine type, select MySQL.

For Engine configuration, select Enter manually.

For Server name, enter the DNS name of the network load balancer that you created previously to connect to the source database using a private IP address.

For Port, enter 3306.

For Secure Socket Layer (SSL) mode, choose none for simplicity in this example. However, we recommend that you use the verify-ca to encrypt the connection in production.

Target data provider

Open the AWS DMS console.

In the navigation pane, choose Data providers.

Choose Create data provider.

For Name, enter target-aurora-mysql.

For Engine type, select Amazon Aurora MySQL.

For Engine configuration, select RDS database instance.

For Database from RDS, select aurora-mysql-target-01.

For Secure Socket Layer (SSL) mode, select none for simplicity in this example. However, we recommend that you use the verify-ca to encrypt the connection in production.

Create the migration project

Create the AWS DMS migration project with the data providers created in previous steps.

Open the AWS DMS console.

In the navigation pane, choose Migration projects.

Choose Create migration project.

For Name, enter mysql-migration-project.

For Instance profile, choose dms-instance-profile-01.

Source data provider:

For Source, select source-mysql.

For Secret ID, select mysql-db-secret-source.

For IAM role, select HomogeneousDataMigrationRole.

Select Use the same IAM role for target data provider.

Target data provider:

For Source, select target-aurora-mysql.

For Secret ID, select mysql-db-secret-target.

The project is ready to use for homogeneous data migrations.

Create a Data migration

To start using homogeneous data migrations, create a new data migration. You can create several homogeneous data migrations of different types in a single migration project.

Open the AWS DMS console

In the navigation pane, choose Migration projects.

On the Migration projects page, select project mysql-migration-project.

Choose the Data migrations

Choose Create data migration.

For Name, enter mysql-data-migration.

For Replication type, select Full load and change data capture (CDC).

Select Turn on CloudWatch logs.

In Advanced Settings, for Number of jobs, enter a number of parallel jobs.

For IAM service role, select HomogeneousDataMigrationRole.

Stop mode settings

For Stop mode, select Don’t stop CDC.

Use the following steps to verify the configuration and start the data migration:

Select the data migration mysql-data-migration.

Select Actions and Start the data migration.

Monitor replication

To monitor how far the target DB is behind the source DB, use the OverallCDClatency Amazon CloudWatch metrics. To view the metrics, use the following steps:

Open the AWS DMS console.

In the navigation pane, choose Migration projects.

On the Migration projects page, select project mysql-migration-project.

Navigate to Data migrations

Choose Data migration, mysql-data-migration.

Choose the Monitoring

For more information on monitoring the data migration’s status and progress, see Monitoring data migrations in AWS DMS. You can also use AuroraBinlogReplicaLag Amazon CloudWatch metric associated with the WRITER instance to check the lag between source and Aurora MySQL. For more information, see Instance-level metrics for Amazon Aurora.

Perform cutover

When the lag between the source and target databases is a near zero, you’re ready to point the application to the Aurora MySQL DB cluster in the target account. We recommend planning your cutover during a low-traffic window and following your in-house business cutover checklist and processes.

Stop accepting connections on the source database.

Make sure CDC latency from the source to the target DB instance is 0.

To get the binary log position on the source, execute SHOW MASTER STATUS and compare the output with the target’s binary log coordinates, such as Exec_Source_Log_Pos and Read_Source_Log_Pos. On the target Aurora MySQL instance, run SHOW REPLICA STATUS to obtain the binary log coordinates. Additionally, check the Seconds_Behind_Source field to determine how far the target database instance is behind the source.

Stop data migration

Select the data migration mysql-data-migration.

Select Actions and Stop the data migration.

Update the application configuration or DNS CNAME record with the target database endpoints.

You can also set up replication from the target Aurora MySQL cluster to the source on-premises database using binary log replication to address fallback requirements before starting the application with Aurora MySQL database.

Start your application with the Aurora MySQL database.

Clean up

As part of this migration, you have deployed several resources for AWS DMS, network load balancer, AWS Secrets Manager etc. in your AWS account. These resources will incur costs as long as they are in use. Be sure to remove any resources you no longer need.

Conclusion

In this post, we discussed the steps involved in migrating a on-premises MySQL database to Aurora MySQL using homogenous data migrations in AWS DMS. We highly recommend testing the migration steps in non-production environments prior to making changes in production.

We welcome your feedback. If you have any questions or suggestions, leave them in the comments section.

About the Authors

Alok Srivastava is a Senior Database Consultant and Data Architect at AWS, specializing in database migration and modernization programs. Leveraging his expertise in both traditional and cutting-edge technologies, he guides AWS customers and partners through their journey to the AWS Cloud. Alok’s role encompasses not only database solutions but also the integration of GenAI to enhance data-driven insights and innovation.

A. Mosaad is a Senior Database Engineer in Database Migration Service at Amazon Web Services. He works with our customers to provide guidance and technical assistance on database migration projects, helping them improve the value of their solutions when using AWS.

Source: Read More