Medical image segmentation plays a central role in modern healthcare: it precisely identifies and delineates anatomical structures within medical scans. This process is fundamental to accurate diagnosis, treatment planning, and disease monitoring. Advances in deep learning have improved both the accuracy and the efficiency of medical image segmentation, making it an indispensable tool in clinical practice. Deep learning models have largely supplanted traditional techniques such as thresholding, clustering, and active contour models.

Despite these advances, segmenting medical images with low contrast, faint boundaries, and intricate morphologies remains challenging. These challenges limit the effectiveness of segmentation models and call for specialized adaptations to improve their performance in the medical imaging domain. Accurate and reliable segmentation is critical, because errors can lead to incorrect diagnoses and treatment plans that harm patient outcomes. Improving how segmentation models handle the distinctive characteristics of medical images is therefore a key research focus.

Existing approaches to medical image segmentation include deep learning models such as U-Net and its extensions, which have shown promise on medical images. Foundation models such as the Segment Anything Model (SAM) have also been adapted for medical use. However, these models often require task-specific fine-tuning and modifications to cope with the unique challenges of medical images. SAM has gained attention for its versatility in segmenting a wide range of objects with minimal user input, but its performance degrades in the medical domain. This is due to its need for comprehensive clinical annotations and to the intrinsic differences between natural and medical images.

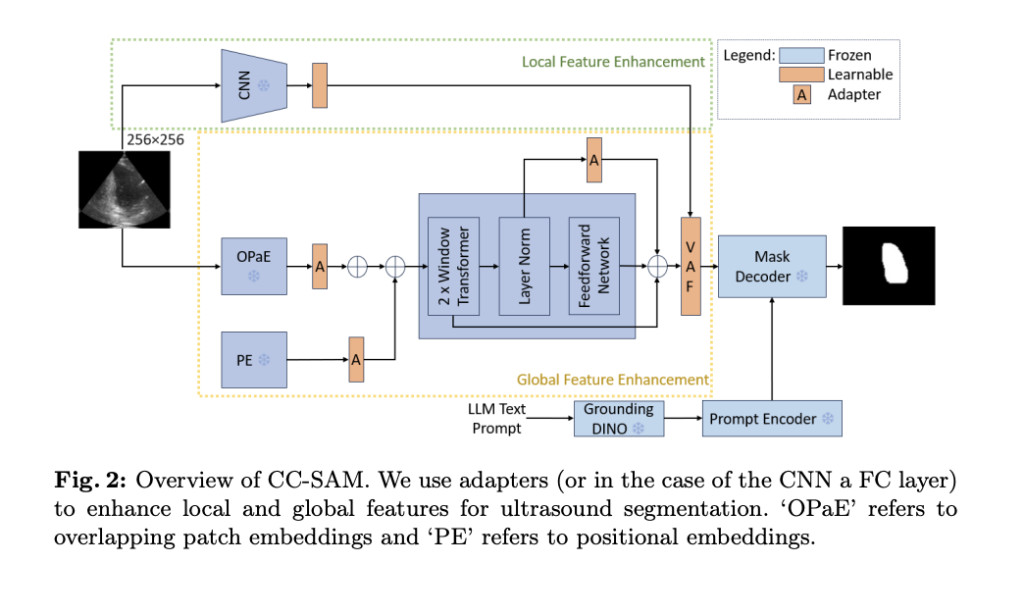

Researchers from the University of Oxford introduced CC-SAM, a model that builds on SAMUS to improve medical image segmentation. CC-SAM adds a static Convolutional Neural Network (CNN) branch and uses a variational attention fusion module to combine it with the transformer branch. By pairing a CNN with SAM's Vision Transformer (ViT) encoder, the researchers aim to capture the local spatial information that is crucial in medical images, improving the model's accuracy and efficiency.

CC-SAM combines a pre-trained ResNet50 CNN with SAM's ViT encoder. A novel variational attention fusion mechanism merges the features from the two branches, capturing the local spatial information that matters in medical images. Adapters refine the positional and feature representations within the ViT branch, tailoring the model to medical imaging tasks. The result is a hybrid framework that combines the strengths of CNNs, which excel at local feature extraction, with those of transformers, which excel at capturing global context.
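The article does not give the exact formulation of the variational attention fusion. As a rough illustration only, the sketch below shows a *generic* attention-weighted fusion of two feature branches in plain Python. At each spatial position, each branch's feature vector is scored against a query vector, the scores are softmax-normalised, and the fused feature is the weighted sum. The learned `query` vector, the function names, and the assumption that both branches have already been resampled to the same grid and channel width are all hypothetical. The sketch also omits the variational component entirely, so it is not CC-SAM's implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fuse_position(cnn_feat: Vector, vit_feat: Vector, query: Vector) -> Vector:
    """Fuse one spatial position's CNN and ViT features (generic sketch).

    Each branch is scored against a hypothetical learned query vector
    (scaled dot product), the two scores are softmax-normalised, and the
    output is the attention-weighted sum of the two feature vectors.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(cnn_feat, query) / scale, dot(vit_feat, query) / scale])
    return [weights[0] * c + weights[1] * v for c, v in zip(cnn_feat, vit_feat)]


def fuse_maps(cnn_map: List[Vector], vit_map: List[Vector], query: Vector) -> List[Vector]:
    """Apply fuse_position independently at every flattened spatial position."""
    if len(cnn_map) != len(vit_map):
        raise ValueError("branches must be resampled to the same spatial grid")
    return [fuse_position(c, v, query) for c, v in zip(cnn_map, vit_map)]


# Usage: two positions, two channels, query favouring the first channel.
fused = fuse_maps([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]], [1.0, 0.0])
```

Because the weights are computed per position, the fused map can lean on the CNN branch in some regions and on the ViT branch in others. This is the intuition behind letting attention, rather than a fixed sum, decide how much local versus global information to keep.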

The model achieves higher segmentation accuracy than competing methods across several medical imaging datasets, including TN3K, BUSI, CAMUS-LV, CAMUS-MYO, and CAMUS-LA. Notably, CC-SAM achieves higher Dice scores (higher is better) and lower Hausdorff distances (lower is better), indicating that it segments medical images with complex structures accurately. For instance, CC-SAM achieved a Dice score of 85.20 and a Hausdorff distance of 27.10 on the TN3K dataset, and a Dice score of 87.01 and a Hausdorff distance of 24.22 on the BUSI dataset. These results highlight the model's robustness and reliability across different medical imaging tasks.
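To make the two metrics concrete, here is a minimal sketch that computes them for binary masks represented as sets of foreground pixel coordinates. The Dice score is 2|A ∩ B| / (|A| + |B|), reported here as a fraction. Figures such as 85.20 appear to be the same quantity expressed as a percentage. The Hausdorff distance is the largest distance from any point in one mask to the nearest point in the other, taken in both directions. For simplicity this sketch uses all foreground pixels and plain pixel units. Real evaluation pipelines often use boundary points, physical pixel spacing, or a 95th-percentile variant (HD95), and the article does not say which variant the reported figures use.

```python
import math
from typing import Set, Tuple

Pixel = Tuple[int, int]


def dice_score(pred: Set[Pixel], target: Set[Pixel]) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|); 1.0 means perfect overlap."""
    if not pred and not target:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2 * len(pred & target) / (len(pred) + len(target))


def directed_hausdorff(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Largest distance from a point in `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Symmetric Hausdorff distance: max of the two directed distances."""
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for an empty mask")
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Usage: two 4-pixel masks that share 3 pixels.
pred = {(0, 0), (0, 1), (1, 0), (1, 1)}
target = {(0, 1), (1, 0), (1, 1), (1, 2)}
print(dice_score(pred, target))          # 0.75
print(hausdorff_distance(pred, target))  # 1.0
```

The two metrics are complementary. Dice rewards overall overlap, while the Hausdorff distance is driven by the single worst boundary error, which is why a model can score well on one and poorly on the other.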

The researchers' approach addresses a critical issue: adapting universal segmentation models to medical imaging. By integrating a CNN with SAM's ViT encoder and employing new fusion techniques, they substantially improve the model's adaptability and accuracy. Feature and position adapters within the ViT branch refine the encoder's representations, further tailoring the model to medical imaging. In addition, text prompts generated by ChatGPT help the model capture the nuances of ultrasound images, further improving segmentation accuracy.
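The article does not describe the internal design of CC-SAM's feature and position adapters. In the parameter-efficient fine-tuning literature, adapters are commonly small residual bottleneck layers inserted into a frozen pre-trained network. The sketch below shows that generic pattern as an assumption: down-project, apply a nonlinearity, up-project, and add the result back to the input. The function names, the ReLU choice, and the dimensions are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    # Each row of w produces one output element.
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def adapter(x: Vector, w_down: Matrix, w_up: Matrix) -> Vector:
    """Generic residual bottleneck adapter: x + W_up · ReLU(W_down · x).

    w_down maps d -> r (r < d) and w_up maps r -> d. In adapter-based
    fine-tuning only these small matrices are trained, while the large
    pre-trained encoder weights stay frozen.
    """
    hidden = relu(matvec(w_down, x))
    delta = matvec(w_up, hidden)
    return [xi + di for xi, di in zip(x, delta)]


# Usage: a 4-dimensional token refined through a 2-dimensional bottleneck.
x = [1.0, 2.0, 3.0, 4.0]
w_down = [[0.5, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, -1.0]]
w_up = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
print(adapter(x, w_down, w_up))  # [1.5, 2.0, 3.0, 4.0]
```

Because of the residual connection, initialising `w_up` to zeros makes the adapter start as an exact identity map. Training can therefore begin from the pre-trained encoder's behaviour and learn only a small, domain-specific correction.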

In conclusion, CC-SAM addresses the limitations of existing models and introduces new techniques that improve both segmentation accuracy and efficiency. Its integration of CNN and ViT encoders, together with variational attention fusion and text prompts, marks a significant step toward more adaptable and effective segmentation models in the medical field.

The post CC-SAM: Achieving Superior Medical Image Segmentation with 85.20 Dice Score and 27.10 Hausdorff Distance Using Convolutional Neural Network CNN and ViT Integration appeared first on MarkTechPost.
