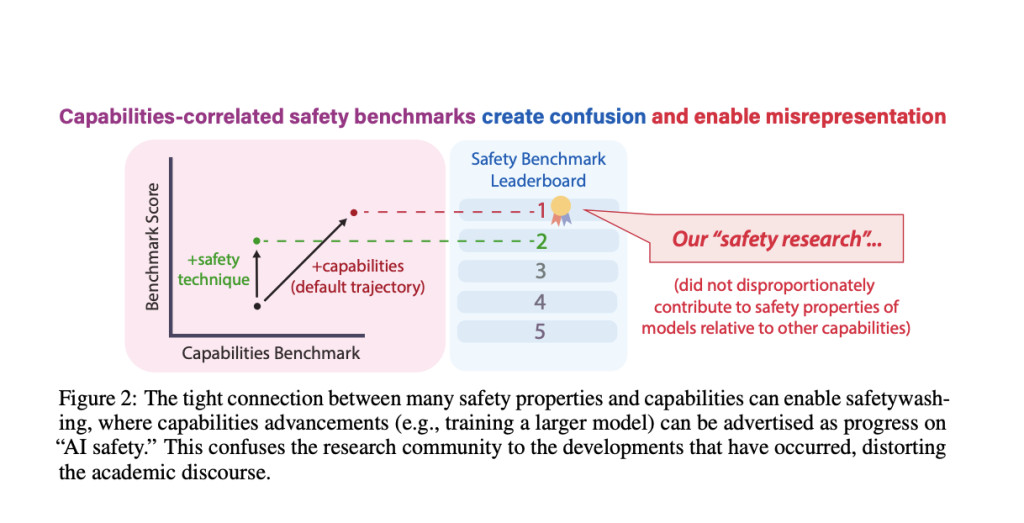

Ensuring the safety of increasingly powerful AI systems is a critical concern. Current AI safety research aims to address emerging and future risks by developing benchmarks that measure various safety properties, such as fairness, reliability, and robustness. However, the field remains poorly defined, with benchmarks often reflecting general AI capabilities rather than genuine safety improvements. This ambiguity can lead to “safetywashing,†where capability advancements are misrepresented as safety progress, thus failing to ensure that AI systems are genuinely safer. Addressing this challenge is essential for advancing AI research and ensuring that safety measures are both meaningful and effective.

Existing methods to ensure AI safety involve benchmarks designed to assess attributes like fairness, reliability, and adversarial robustness. Common benchmarks include tests for model alignment with human preferences, bias evaluations, and calibration metrics. These benchmarks, however, have significant limitations. Many are highly correlated with general AI capabilities, meaning improvements in these benchmarks often result from general performance enhancements rather than targeted safety improvements. This entanglement leads to capability improvements being misrepresented as safety advancements, thus failing to ensure that AI systems are genuinely safer.

A team of researchers from the Center for AI Safety, University of Pennsylvania, UC Berkeley, Stanford University, Yale University, and Keio University introduces a novel empirical approach to distinguish true safety progress from general capability improvements. Researchers conduct a meta-analysis of various AI safety benchmarks and measure their correlation with general capabilities across numerous models. This analysis reveals that many safety benchmarks are indeed correlated with general capabilities, leading to potential safetywashing. The innovation lies in the empirical foundation for developing more meaningful safety metrics that are distinct from generic capability advancements. By defining AI safety in a machine learning context as a set of clearly separable research goals, the researchers aim to create a rigorous framework that genuinely measures safety progress, thereby advancing the science of safety evaluations.

The methodology involves collecting performance scores from various models across numerous safety and capability benchmarks. The scores are normalized and analyzed using Principal Component Analysis (PCA) to derive a general capabilities score. The correlation between this capabilities score and the safety benchmark scores is then computed using Spearman’s correlation. This approach allows the identification of which benchmarks measure safety properties independently of general capabilities and which do not. The researchers use a diverse set of models and benchmarks to ensure robust results, including models fine-tuned for specific tasks and general models, as well as benchmarks for alignment, bias, adversarial robustness, and calibration.

Findings from this study reveal that many AI safety benchmarks are highly correlated with general capabilities, indicating that improvements in these benchmarks often stem from overall performance enhancements rather than targeted safety advancements. For instance, the alignment benchmark MT-Bench shows a capabilities correlation of 78.7%, suggesting that higher alignment scores are primarily driven by general model capabilities. In contrast, the MACHIAVELLI benchmark for ethical propensities exhibits a low correlation with general capabilities, demonstrating its effectiveness in measuring distinct safety attributes. This distinction is crucial as it highlights the risk of safetywashing, where improvements in AI safety benchmarks may be misconstrued as genuine safety progress when they are merely reflections of general capability enhancements. Emphasizing the need for benchmarks that independently measure safety properties ensures that AI safety advancements are meaningful and not merely superficial improvements.

In conclusion, the researchers provide empirical clarity on the measurement of AI safety. By demonstrating that many current benchmarks are highly correlated with general capabilities, the need for developing benchmarks that genuinely measure safety improvements is highlighted. The proposed solution involves creating a set of empirically separable safety research goals, ensuring that advancements in AI safety are not merely reflections of general capability enhancements but are genuine improvements in AI reliability and trustworthiness. This work has the potential to significantly impact AI safety research by providing a more rigorous framework for evaluating safety progress.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter..

Don’t Forget to join our 47k+ ML SubReddit

Find Upcoming AI Webinars here

The post AI Safety Benchmarks May Not Ensure True Safety: This AI Paper Reveals the Hidden Risks of Safetywashing appeared first on MarkTechPost.

Source: Read MoreÂ