It’s common to want to enable Amazon Athena to join data from multiple data sources for analytics. Accordingly, Athena released the Federated Query feature, which allows you to connect to multiple data sources, including Amazon Neptune, a managed graph database service from AWS.

To query a Neptune database in Athena, you can use the Amazon Athena Neptune connector, an AWS Lambda function that connects to the Neptune cluster and queries the graph on behalf of Athena. In the post Build interactive graph data analytics and visualizations using Amazon Neptune, Amazon Athena Federated Query, and Amazon QuickSight, we announced the connector. In this post, we discuss two new features of the connector:

Support for RDF – Neptune supports two common graph representations: labeled property graph and Resource Description Framework (RDF). Since its initial release, the connector has enabled queries of property graph data in Neptune using the Apache TinkerPop Gremlin query language. Now the connector also supports queries of RDF data in Neptune using the SPARQL query language.

Custom views for property graphs – Previously, the connector could perform preconfigured Gremlin queries on vertices and edges. Now the connector provides the flexibility to specify custom Gremlin queries.

In this post, we provide a step-by-step implementation guide to integrate the new version of the Athena Neptune connector and query a Neptune cluster using Gremlin and SPARQL queries.

Solution overview

To implement this solution, you create a Neptune cluster, load sample datasets, and then integrate the Athena Neptune connector to retrieve data using Gremlin and SPARQL queries. Please note, you will incur charges for the AWS services integrated in this solution for the duration of the time they are deployed.

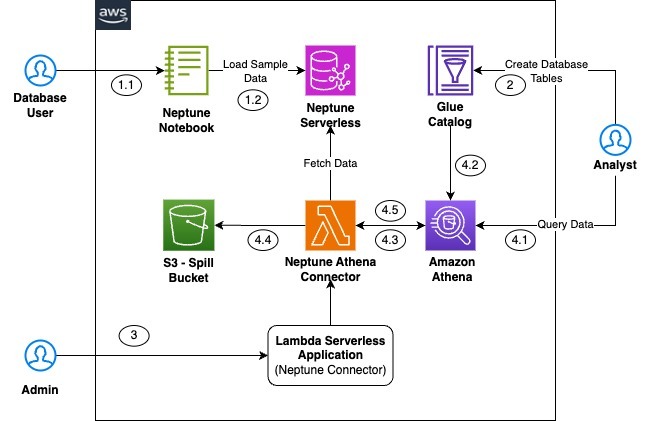

The following diagram represents the high-level architecture of the solution.

The workflow includes the following steps:

The end-user uses a Neptune notebook to load sample datasets into the graph database available on a Neptune cluster.

Configure a table in the AWS Glue Data Catalog, which stores the schema metadata for the query result to be displayed as a table in Athena.

Deploy the Athena Neptune connector, which is an one-time activity as part of the setup.

The end-user queries the newly created table in the Data Catalog using Athena, which invokes the Lambda function, which queries the Neptune cluster.

You can repeat Steps 2–4 for both property graphs and RDF to validate the query functionality with Gremlin and RDF, respectively.

Query Neptune property graph data

The following steps demonstrate how to use the new capability of the Athena Neptune connector to query the property graph data using Athena. You can deploy it in any AWS Region where the Athena Neptune connector is available.

Create a Neptune database cluster and load sample datasets

First, set up a Neptune database cluster. If you already have a cluster, you can skip this step.

On the Neptune console, choose Launch Amazon Neptune.

Choose an engine type (for this post, we select Serverless).

For DB Cluster name, enter a name (for example, neptune-serverless-db).

Choose a virtual private cloud (VPC) and private subnets for the cluster.

Keep the remaining configurations as default.

The instructions in the GitHub repo provide guidance on loading the sample air routes datasets for our use case.

For details on how to create a cluster manually using the AWS Management Console, see Launching a Neptune DB cluster using the AWS Management Console. Make sure the cluster has network connectivity to both Amazon Simple Storage Service (Amazon S3) and AWS Glue. One way to achieve this is to create an AWS Glue VPC endpoint. Refer to Gateway endpoints for Amazon S3 and AWS Glue and interface VPC endpoints (AWS PrivateLink) to set this up.

Configure a Data Catalog table

Before you deploy the Athena Neptune connector, you need to create the Data Catalog databases and tables, which will be mapped to the Neptune cluster datasets. You can do this using AWS CloudFormation.

Complete the following steps to deploy the Data Catalog databases and tables for the air routes datasets using the provided CloudFormation template:

On the AWS CloudFormation console, choose Create stack and With new resources (standard).

Select Choose an existing template and Amazon S3 URL.

Choose Next, leave everything else as default, and proceed to create the stack.

It may take few minutes to complete the deployment. When it’s complete, navigate to the AWS Glue console to validate that the database and its respective tables are created. The following screenshot shows the two databases created by the CloudFormation template.

The following screenshot shows the tables created under graph-database.

Let’s examine the table customairport. This table uses a custom view, defined by the following Gremlin query:

This query finds nodes with the label airport. It refers to these nodes as source. From these nodes, it follows the edge labeled route. It refers to the node that is the target of the edge as destination. The query uses select to return the source and destination nodes as the query output. More specifically, it returns the code property of these nodes.

In the AWS Glue table, source and destination are columns. These are mapped from the query.

In addition to custom views, the connector also supports vertex and edge tables. You can configure a vertex table by specifying a vertex label. The columns of the table are the vertex ID plus properties of the vertex having that label. The connector runs a Gremlin query that finds vertices with the label. It maps the vertex ID and properties to the column structure. airport is an example of a vertex table.

Similarly, you can configure an edge table by specifying an edge label. The columns of the table are the edge ID, source and target vertex IDs, and edge properties. The connector runs a Gremlin query that finds edges with the label. It maps results back to the column structure. route is an example of an edge table.

Deploy the Athena Neptune connector for the property graph

You can deploy the Neptune connector using the AWS Serverless Application Repository. It requires several parameters. Follow the step-by-step instructions in the GitHub repo to deploy the Athena Neptune connector.

The following screenshot shows the input parameters you specify while deploying the NeptuneAthenaConnector stack for the property graph.

The purpose of each setting is described on the page. The following are a few additional considerations:

SpillBucket – The spill bucket is used internally by the connector to spill the results to storage that can’t fit in memory. Provide the name of an S3 bucket in the same account and Region.

LambdaMemory and LambdaTimeout – Set these to maximum values. The connector is memory-intensive because it brings back potentially large results from the Neptune database. Such queries can take time to run, so allow a generous timeout in the connector.

SubnetIds – We recommend deploying the connector to one of the private subnets used by the Neptune cluster.

SecurityGroupIds – Create a new security group or use the same security group used by the Neptune cluster. See Deploy Amazon Athena Neptune Connector for more details.

Query the Neptune property graph database using Athena

To query the property graph database, complete the following steps:

On the Athena console, choose Data sources in the navigation pane.

Choose Create data source.

Select Amazon Neptune.

Choose Next.

For Data source name, enter a name (for example, neptune-source-connector).

For Lambda function, enter the ARN for the athena-catalog-neptune Lambda function.

Choose Next.

Review the configurations and choose Create data source.

Navigate to the Athena query editor.

Choose neptune-source-connector as the data source and graph-database as the database name.

Enter the following query:

SELECT * FROM “graph-database”.”sourcetodestinationairport” limit 10;

The query should produce the following output. The Athena query is a SQL query on the AWS Glue table. The connector maps this query to a Gremlin query based on the custom view. The result is data from the Neptune database in the column structure of the AWS Glue table.

Query RDF graph data

Similar to property graphs, the Athena Neptune connector also helps you query RDF graphs using SPARQL queries. In this section, we detail the steps to query RDF graph data. The setup is similar to that described in the previous section.

Load sample datasets to an RDF graph

Refer to the following instructions to seed the air routes datasets for RDF from the notebook instance.

Configure the AWS Glue table

The CloudFormation template we deployed for the property graph already created the graph-database-rdf database and respective tables.

Navigate to the AWS Glue console to confirm that the graph-database-rdf database and its respective tables are created, as shown in the following screenshot.

Three tables are created. Each table demonstrates an RDF query pattern supported by the connector. Let’s discuss these patterns and how the tables demonstrate them.

Find resources of a class

One pattern is to find resources of a given class. For each resource, you can find the values of its data type properties.

The airport_rdf table is configured to return details of airport resources from Neptune. You can configure this using the table properties shown in the following screenshot.

The following is the SPARQL query the connector runs:

The connector uses the following properties to build this query:

componenttype is set to rdf, indicating this table is for RDF, not property graph.

querymode is set to class, indicating that the connector will look for resources with a specific type. It instructs the connector to build the SPARQL query in the form of the preceding query.

classuri specifies that type. The value is class:Airport. In RDF, resources have URIs, which are web-like URLs. They are somewhat long, so you can use a short form known as a curie. In class:Airport, class is a prefix, which is a placeholder for a longer string.

prefix_class gives you the long form of the class. It is http://kelvinlawrence.net/air-routes/class/. The classuri, then, resolves to http://kelvinlawrence.net/air-routes/class/Airport.

subject is id. In the column structure, the id column is the URI of the resource itself.

strip_uri is true, indicating that if any values in the result are a URI, the connector is to strip all but the local name of the URI. In our result, the subject is a URI, but we want only the local name.

To get the remaining columns, we need to find data type properties of the subject. preds_prefix gives the predicate of the data type property. Here we set it to prop. To get the type column, for example, we query for triple ?id prop:type ?type. We return ?type and map to the type.

prefix_prop is set to http://kelvinlawrence.net/air-routes/datatypeProperty/. prop is a prefix for this longer string.

Property names match the column structure of the table. The following screenshot shows the columns.

Create a custom view

Another pattern is to map a custom SPARQL query to a resultset defined by a tabular column structure. The route_rdf AWS Glue table returns airport routes from Neptune.

The following screenshot shows the table properties.

The properties are as follows:

querymode is sparql, indicating that the connector runs a custom SPARQL query.

The sparql property gives that query. It is a fairly long SELECT query. It references two prefixes: op and prop. It also returns ?incode, ?outcode, and ?dist.

prefix_prop gives the long form of the prop.

prefix_op gives the long form of the op.

The following screenshot shows the column structure. It aligns with the returned values of the custom SPARQL query.

When the connector runs the query, it maps its result to this structure.

Create a custom view with prefixes inline

The route_rdf_nopfx table is a variation of route_rdf. It has the same column structure and the same query logic. The only difference is that the prefixes are part of the query string defined by the sparql property. There is no need for separate prefix properties.

Deploy the Athena Neptune connector for an RDF graph

Create a connector for the RDF query following the same approach used for the labeled property graph connector. You may use the same settings as you did the property graph connector, except for the following:

Use a different application name (for example, AthenaNeptuneConnectorRDF).

Use a different Athena catalog name. This value sets the name of the Lambda function. For example, use athena-catalog-rdf.

For the AWS Glue database name, use graph-database-rdf.

For the Neptune graph type, choose RDF.

Query the Neptune RDF graph database using Athena

As you did with the property graph connector, create a new data source in Athena that points to the newly created Neptune connector Lambda function for RDF. Then navigate to the Athena query editor. Choose graph-database-rdf as the database. Run the following query on the route_rdf table:

SELECT * FROM “graph-database-rdf”.”route_rdf” limit 10;

The query produces output similar to the following screenshot.

Clean up

To avoid incurring ongoing charges, clean up the resources created for this solution.

On the Neptune console, delete the cluster you created for this solution.

To remove the connectors, open the AWS Serverless Application Repository console and delete the published applications you created.

If you created a VPC, subnets, VPC endpoints, or a NAT or internet gateway for the Neptune cluster, delete the VPC to delete it and its components.

To delete the Data Catalog database and tables, delete the CloudFormation stack.

On the Amazon S3 console, delete the spill bucket.

Conclusion

In this post, we showed you how to deploy the Neptune Athena connector and configure Data Catalog tables to query data from the Neptune cluster. You can now pull Neptune graph database data to Athena with direct Gremlin and SPARQL queries, which enables you to join these datasets with other data sources such as data lakes, relational databases, and other purpose-built databases to generate aggregate output for faster decision-making.

If you’re looking for more graph analytical capabilities on top of the graph database itself, check out AWS Neptune Analytics, which was announced as generally available during AWS re:Invent 2023.

Appendix: Running federated passthrough queries in Athena

Athena supports running passthrough queries on a federated data source. A passthrough query pushes the full query down to the data source for the query run. Refer to Available data source connectors to find the complete list of data sources Athena supports.

The Athena Neptune connector supports passthrough queries, which means you can pass the Gremlin or SPARQL query through Athena SQL. The result of the query becomes available in Athena in tabular format, which you can use to join with other data sources such as data in a data lake. The following is an example of a passthrough query:

The following is a passthrough query using SPARQL that returns airports in Germany (country is “DEâ€):

To learn more about the connector, see Amazon Athena Neptune connector.

About the Authors

Sakti Mishra is a Principal Solution Architect at AWS, where he helps customers modernize their data architecture and define end-to end-data strategies, including data security, accessibility, governance, and more. He is also the author of the book Simplify Big Data Analytics with Amazon EMR. Outside of work, Sakti enjoys learning new technologies, watching movies, and visiting places with family.

Mike Havey is a Senior Solutions Architect for AWS with over 25 years of experience building enterprise applications. Mike is the author of two books and numerous articles. His Amazon author page is https://www.amazon.com/Michael-Havey/e/B001IO9JBI.

Source: Read More