Software vulnerability detection has advanced substantially with the integration of deep learning models, which have shown high accuracy in identifying potential vulnerabilities in software. These models analyze code to detect patterns and anomalies that indicate weaknesses. Despite their effectiveness, they are not immune to attack. Adversarial attacks, which manipulate input data to deceive a model, pose a significant threat to these systems. Such attacks exploit weaknesses in the deep learning models themselves, which calls for continuous improvement in detection and defense mechanisms.

A significant problem in this domain is that adversarial attacks can effectively bypass deep learning-based vulnerability detection systems. These attacks manipulate the input so that the model makes an incorrect prediction, such as classifying a vulnerable piece of software as non-vulnerable. This undermines the reliability of the models and poses a serious risk, because it lets attackers exploit vulnerabilities undetected. The problem is compounded by the growing sophistication of attackers and the increasing complexity of software systems, which make it hard to build models that are both highly accurate and resilient to such attacks.

Existing methods for detecting software vulnerabilities rely heavily on deep learning techniques. Some models use abstract syntax trees (ASTs) to extract high-level representations of code functions, while others employ tree-based models or Transformer-based networks such as LineVul, which predicts vulnerabilities at the line level. Despite these capabilities, such models can be deceived by adversarial attacks. Studies have shown that attacks can exploit weaknesses in the models’ prediction processes, leading to incorrect classifications. For instance, the Metropolis-Hastings Modifier algorithm has been used to generate adversarial samples that attack machine learning-based detection systems, revealing significant weaknesses in these models.
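
To make the AST idea concrete, here is a minimal sketch of turning source code into a structural representation. It is an illustration only: it uses Python's built-in `ast` module on a Python snippet, whereas the detectors discussed here typically target C/C++ and use their own parsers and learned embeddings. The helper name `ast_node_counts` and the sample function are hypothetical.

```python
import ast
from collections import Counter


def ast_node_counts(source: str) -> Counter:
    """Parse source code and count how often each AST node type occurs."""
    tree = ast.parse(source)
    return Counter(type(node).__name__ for node in ast.walk(tree))


# Hypothetical sample: a copy loop with no bounds checking.
snippet = """
def copy(dst, src, n):
    for i in range(n):
        dst[i] = src[i]
"""

counts = ast_node_counts(snippet)
# A bag of node types is a very crude "high-level representation";
# real models feed the tree structure itself into a neural network.
print(counts["FunctionDef"], counts["For"], counts["Subscript"])
```

The point of the sketch is that the representation depends on code structure rather than raw text, which is also why structurally plausible but semantically inert insertions can shift a model's prediction.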

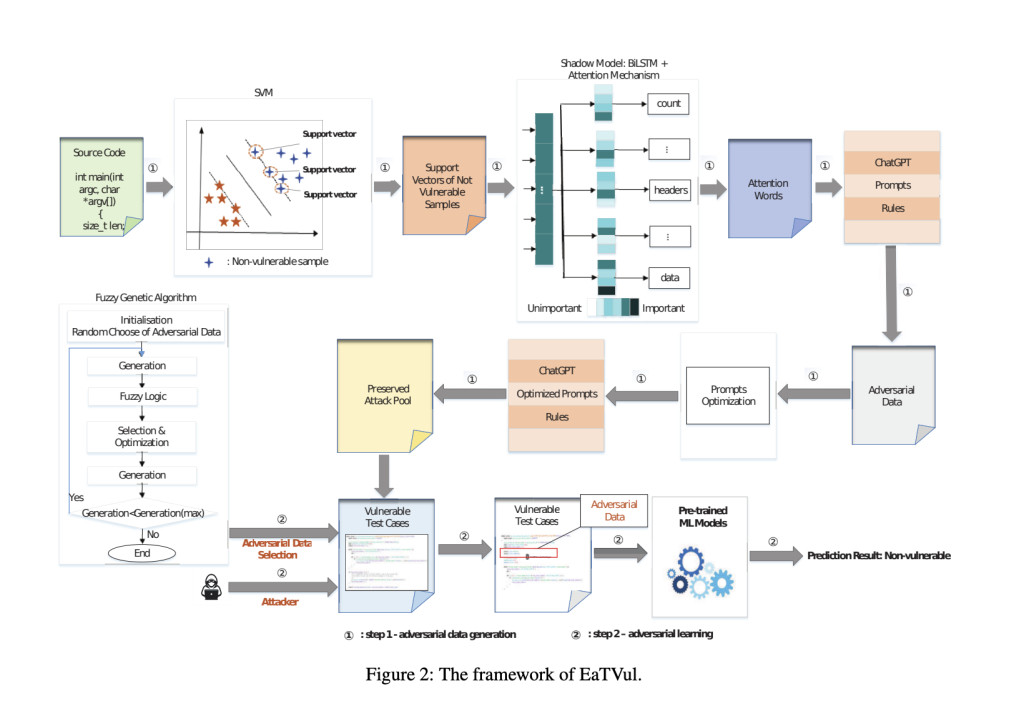

Researchers from CSIRO’s Data61, Swinburne University of Technology, and DST Group Australia introduced EaTVul, an innovative evasion attack strategy. EaTVul is designed to demonstrate the vulnerability of deep learning-based detection systems to adversarial attacks. The method involves a comprehensive approach to exploiting these vulnerabilities, aiming to highlight the need for more robust defenses in software vulnerability detection. The development of EaTVul underscores the ongoing risks associated with current detection methods and the necessity of continuous advancements in this field.

EaTVul works in multiple stages. First, it uses support vector machines (SVMs) to identify critical non-vulnerable samples, which help pinpoint the features that most strongly influence the model’s predictions. Next, an attention mechanism identifies those features, which are then used to generate adversarial data via ChatGPT. Finally, a fuzzy genetic algorithm optimizes this data, selecting the most effective adversarial candidates for the evasion attack. The goal is to alter the input so that the detection model classifies vulnerable code as non-vulnerable, bypassing security measures.
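
The selection stage can be sketched roughly as follows. This is not the authors' implementation: it uses a plain genetic algorithm (no fuzzy component), a toy keyword-weighted scorer standing in for a trained detector, and a hand-written pool of dead-code snippets standing in for ChatGPT output. All names, weights, and snippets below are assumptions made for illustration.

```python
import random
import re

# Hypothetical token weights for a toy linear "detector"; a real target
# would be a trained neural model such as LineVul.
TOKEN_WEIGHTS = {
    "strcpy": 2.0, "gets": 2.0,
    "strncpy": -0.9, "sizeof": -0.6, "assert": -0.7, "NULL": -0.5,
    "printf": 0.1,
}

# Hypothetical candidate pool: dead code that does not change behavior.
CANDIDATES = [
    "if (0) { strncpy(tmp, tmp, sizeof(tmp)); }",
    "assert(sizeof(int) > 0);",
    "if (0) { tmp = NULL; }",
    'if (0) { printf("debug"); }',
    "int unused = 0;",
]


def vulnerability_score(code: str) -> float:
    """Sum the weights of known identifiers; above 0 means 'vulnerable'."""
    return sum(TOKEN_WEIGHTS.get(tok, 0.0)
               for tok in re.findall(r"[A-Za-z_]\w*", code))


def apply_mask(original: str, mask: list) -> str:
    """Prepend the candidate snippets selected by a 0/1 mask."""
    chosen = [c for c, bit in zip(CANDIDATES, mask) if bit]
    return "\n".join(chosen + [original])


def fitness(original: str, mask: list) -> float:
    """Reward a low detector score, with a small penalty per inserted snippet."""
    return -vulnerability_score(apply_mask(original, mask)) - 0.05 * sum(mask)


def evolve(original: str, generations: int = 30, pop_size: int = 12, seed: int = 0) -> list:
    rng = random.Random(seed)
    n = len(CANDIDATES)
    # Start from the "insert everything" mask plus random masks.
    population = [[1] * n] + [[rng.randint(0, 1) for _ in range(n)]
                              for _ in range(pop_size - 1)]
    for _ in range(generations):
        population.sort(key=lambda m: fitness(original, m), reverse=True)
        parents = population[: pop_size // 2]      # truncation selection
        children = [parents[0][:]]                 # elitism: keep the best mask
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randint(1, n - 1)            # one-point crossover
            child = a[:cut] + b[cut:]
            if rng.random() < 0.2:                 # occasional bit-flip mutation
                i = rng.randrange(n)
                child[i] = 1 - child[i]
            children.append(child)
        population = children
    return max(population, key=lambda m: fitness(original, m))


original = "strcpy(buf, input);"
best = evolve(original)
adversarial = apply_mask(original, best)
print("original flagged:   ", vulnerability_score(original) > 0)
print("adversarial flagged:", vulnerability_score(adversarial) > 0)
```

The vulnerable `strcpy` call is left untouched; only the surrounding context changes. That is the essence of an evasion attack: the code stays exploitable while the model's prediction flips.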

The researchers evaluated EaTVul across a range of experiments, and the results are striking. The method achieved an attack success rate of more than 83% for snippets larger than two lines and up to 100% for snippets of four lines. These rates show how effectively the method evades detection models. Across experiments, EaTVul consistently manipulated the models’ predictions, revealing significant weaknesses in current detection systems. In one case, for example, the attack success rate reached 93.2% when modifying vulnerable samples, illustrating the method’s potential impact on software security.
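
For readers unfamiliar with the metric, attack success rate is commonly computed as the share of samples the detector originally flagged as vulnerable that it no longer flags after the attack. The sketch below assumes that definition; the paper's exact formulation may differ, and the prediction lists are made-up data for illustration.

```python
def attack_success_rate(original_preds, adversarial_preds):
    """Percentage of samples predicted vulnerable (1) before the attack
    that are predicted non-vulnerable (0) after it."""
    flagged = [(o, a) for o, a in zip(original_preds, adversarial_preds) if o == 1]
    if not flagged:
        raise ValueError("no flagged samples to attack")
    evaded = sum(1 for _, a in flagged if a == 0)
    return 100.0 * evaded / len(flagged)


# Made-up predictions: 1 = vulnerable, 0 = non-vulnerable.
before = [1, 1, 1, 1, 0, 1]
after = [0, 0, 1, 0, 0, 0]
rate = attack_success_rate(before, after)
print(f"attack success rate: {rate:.1f}%")
```

Samples the detector missed before the attack are excluded from the denominator, since there is nothing left to evade for them.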

The findings from the EaTVul research highlight a critical weakness in software vulnerability detection: deep learning models are susceptible to adversarial attacks. By demonstrating how effective such attacks can be, the study underscores the urgent need for robust defense mechanisms and for integrating advanced defensive strategies into existing models.

In conclusion, the EaTVul research provides valuable insight into the weaknesses of current deep learning-based software vulnerability detection systems. Its high evasion success rates are a reminder of the ongoing challenges in this field. Detection models need defenses that keep them resilient against adversarial manipulation while maintaining high accuracy in detecting vulnerabilities.

The post EaTVul: Demonstrating Over 83% Success Rate in Evasion Attacks on Deep Learning-Based Software Vulnerability Detection Systems appeared first on MarkTechPost.