The rapid development of Large Language Models (LLMs) has had a major impact on domains such as generative AI, natural language understanding, and natural language processing. However, hardware limitations have historically made it difficult to run these models locally on a laptop, desktop, or mobile device. To address this, the PyTorch team has introduced Torchchat, a flexible framework designed to maximize the performance of LLMs such as Llama 3 and 3.1 across a range of computing environments. It enables efficient local inference on a variety of devices, which could democratize access to powerful AI models.

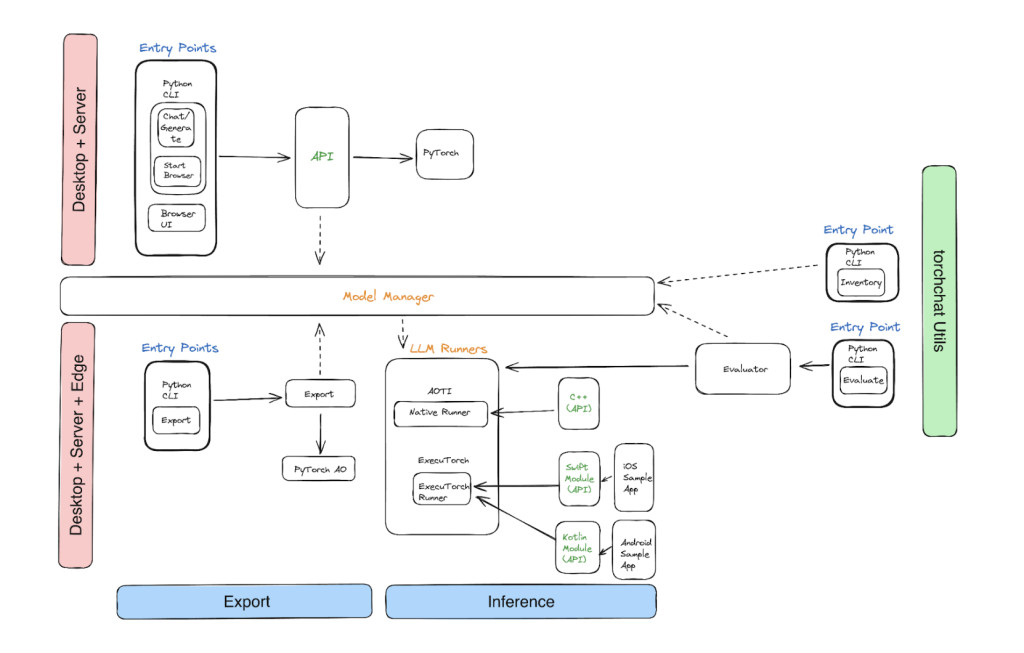

Torchchat builds on PyTorch 2, which already delivers strong performance for CUDA-based LLM execution. Torchchat goes a step further by extending that functionality to additional target environments, including Python, C++, and mobile platforms. For users who want to run LLMs locally, the library offers a complete end-to-end (E2E) solution with readily available features such as export, quantization, and evaluation.

Torchchat’s distinguishing feature is its ability to provide local inference on a variety of platforms:

Python: Torchchat exposes a REST API that can be called from a Python command-line interface (CLI) or accessed through a web browser. This makes it simple for researchers and developers to interact with LLMs.

C++: Using PyTorch’s AOTInductor backend, Torchchat produces a desktop-friendly binary. This lets LLMs run efficiently on x86-based platforms, which makes Torchchat a good fit for high-performance desktop environments.

Mobile Devices: In response to the growing demand for AI on mobile platforms, Torchchat uses ExecuTorch to export a ‘.pte’ binary file for on-device inference. This makes it possible to run capable LLMs on smartphones and tablets, creating new opportunities for mobile apps.
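To make the Python route concrete, here is a minimal sketch of how a client might talk to a locally running REST server using only the standard library. The article does not specify the API shape, so the base URL `http://127.0.0.1:5000`, the OpenAI-style `/v1/chat/completions` path, the payload fields, and the model name `llama3.1` are all assumptions to check against the Torchchat documentation. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumed values, not taken from the article: adjust them to match your local server.
BASE_URL = "http://127.0.0.1:5000"
ENDPOINT = "/v1/chat/completions"  # assumed OpenAI-style chat endpoint


def build_chat_request(prompt: str, model: str = "llama3.1",
                       max_tokens: int = 200) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying one user message."""
    payload = {
        "model": model,            # assumed model alias
        "max_tokens": max_tokens,  # assumed field name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        BASE_URL + ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON body; requires a running server."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_chat_request("Explain local LLM inference in one sentence.")
    print(req.full_url)
    print(req.data.decode("utf-8"))
    # Uncomment once a server is listening on BASE_URL:
    # print(send(req))
```

Building the request separately from sending it lets you inspect the payload without a server running, which is useful while confirming the actual endpoint and field names.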

Adoption of any AI tool depends heavily on its performance, and Torchchat has performed well across platforms. The PyTorch team has released benchmarks of Llama 3 running on several systems that demonstrate Torchchat’s flexibility and efficiency.

On an Apple MacBook Pro M1 Max with 64GB of RAM, Llama 3 8B Instruct achieves the following:

5.84 tokens/sec with Arm Compile in float16 mode, and 16.9 tokens/sec with MPS Eager in int8 mode.

These findings show how well the library uses Apple hardware, enabling quick and effective inference even on laptops.

Performance is even higher on a Linux x86 system with an Intel Xeon Platinum 8339HC CPU and an A100 GPU. With CUDA Compile, Torchchat reached 83.23 tokens/sec in bfloat16 mode and 135.16 tokens/sec in int4 mode. These numbers demonstrate Torchchat’s potential in high-performance computing environments, making it an effective tool for developers who work with LLMs on desktop and server setups.
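The reported throughput figures are easier to compare as ratios and wall-clock times. The sketch below uses only the four numbers quoted in this article. The helper names and the 256-token response length are illustrative assumptions. Note that the two MacBook figures differ in both backend and data type, so their ratio is not a pure quantization speedup.

```python
# Throughput figures (tokens/sec) quoted in the article for Llama 3 8B Instruct.
REPORTED = {
    ("MacBook Pro M1 Max", "Arm Compile", "float16"): 5.84,
    ("MacBook Pro M1 Max", "MPS Eager", "int8"): 16.9,
    ("Linux x86 + A100", "CUDA Compile", "bfloat16"): 83.23,
    ("Linux x86 + A100", "CUDA Compile", "int4"): 135.16,
}


def speedup(fast_tps: float, slow_tps: float) -> float:
    """Ratio of two throughputs; a value above 1 means the first is faster."""
    return fast_tps / slow_tps


def seconds_for(tokens: int, tokens_per_sec: float) -> float:
    """Time to generate a fixed number of tokens at a steady throughput."""
    return tokens / tokens_per_sec


if __name__ == "__main__":
    a100_bf16 = REPORTED[("Linux x86 + A100", "CUDA Compile", "bfloat16")]
    a100_int4 = REPORTED[("Linux x86 + A100", "CUDA Compile", "int4")]
    print(f"A100 int4 vs bfloat16: {speedup(a100_int4, a100_bf16):.2f}x")

    # 256 tokens is an arbitrary, illustrative response length.
    for (system, backend, dtype), tps in REPORTED.items():
        print(f"{system} / {backend} / {dtype}: "
              f"{seconds_for(256, tps):.1f} s for 256 tokens")
```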

Torchchat’s mobile performance is also notable: 4-bit GPTQ quantization via ExecuTorch enables more than 8 tokens/sec on the Samsung Galaxy S23 and iPhone. This brings LLMs to mobile devices and enables sophisticated AI applications on the go.
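A rough back-of-the-envelope calculation shows why 4-bit quantization matters on phones. The sketch below estimates weight storage only, assuming roughly 8 billion parameters for an 8B model. It ignores quantization metadata such as scales and zero points, as well as activations and the KV cache, so real footprints will be somewhat larger. The function name `weight_gib` is hypothetical.

```python
def weight_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GiB, ignoring quantization metadata."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    params = 8e9  # assumption: about 8 billion parameters for an "8B" model
    for label, bits in [("float16/bfloat16", 16), ("int8", 8), ("int4", 4)]:
        print(f"{label:>17}: ~{weight_gib(params, bits):.1f} GiB of weights")
```

Under these assumptions, dropping from 16-bit to 4-bit weights cuts storage by a factor of four, which is the difference between a model that cannot fit in a phone's memory and one that plausibly can.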

In conclusion, Torchchat offers a flexible and effective way to run powerful AI models on a variety of devices, marking a substantial advance in local LLM inference. With Torchchat, developers and researchers can more easily deploy and optimize LLMs locally, opening up new avenues for AI exploration ranging from desktop applications to mobile use cases.

The post Meet Torchchat: A Flexible Framework for Accelerating Llama 3, 3.1, and Other Large Language Models Across Laptop, Desktop, and Mobile appeared first on MarkTechPost.