Amazon Neptune Analytics is an analytics database engine for quickly analyzing large volumes of graph data to get insights and find trends from data stored in Amazon S3 buckets or a Neptune Database cluster. Neptune Analytics uses built-in algorithms, vector search, and in-memory computing to run queries on data with tens of billions of relationships in seconds.

Neptune Analytics now supports smaller graph capacities of 32 and 64 m-NCU, making it up to 75% cheaper to get started with graph analytics for use cases like targeted content recommendations, financial network analysis, supply chain risk analysis, software bill of materials vulnerability analysis, and more.

The compute and storage capacity of a Neptune Analytics graph is determined by its memory-optimized Neptune Capacity Units (m-NCU) configuration. The m-NCU is the unit of measurement for resource capacity in Neptune Analytics: 1 m-NCU is equivalent to 1 GB of memory plus corresponding compute and networking capacity. The m-NCU setting therefore determines both how much data a graph can hold and how much computational power is available to process it.

Whether you’re experimenting with test datasets or running analytics on subgraphs from a larger graph, it’s common to get started with a smaller graph. In this post, we show how you can reduce your cost by up to 75% when getting started with graph analytics workloads using the new 32 and 64 m-NCU capacities for Neptune Analytics. Many commonly used sample datasets (such as air routes) fit within 32 or 64 m-NCU, so you can work with the same data at a lower cost. We also discuss how to monitor your graph’s storage usage and change its m-NCU capacity without downtime.

Working with smaller capacity units

Whatever your use case, you’re likely to work with small graphs at some point. This includes situations such as:

Getting started with graph analytics. You’re likely to work with small-scale test datasets as you experiment with graphs. For example, learning how the graph algorithms work with test datasets such as air routes.

Designing a new graph data model. When designing a graph data model for a new use case, you typically use a small-scale representation of your data. This allows you to quickly test your queries over different iterations of the graph model, and makes it straightforward to validate the query results.

Testing batch loads. When using the batch load process, we recommend working with a small subset of your data first to validate that it’s correctly formatted before running a large ingestion.

Running analytics over subgraphs. In some situations, you may only want to run graph analytics over a subset of your graph data. For example, consider a social network that has already been segmented into communities using clustering algorithms. You may want to use centrality algorithms to find the most influential entities within a given community instead of across the entire social network.

Because working with small graph datasets is so common, Neptune Analytics now supports smaller capacities to help you minimize costs at every stage of building your graph analytics application.

You can now launch Neptune Analytics graphs with 32 and 64 m-NCU capacity. Previously, the smallest capacity was 128 m-NCU. The new 32 and 64 m-NCU sizes cost one quarter and one half as much as the previous smallest size, respectively. For comprehensive pricing by AWS Region, refer to Amazon Neptune Pricing.
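The savings figures follow from simple proportions. The following minimal Python sketch assumes that price scales linearly with provisioned m-NCU, which matches the quarter and half ratios stated above; actual per-Region rates are on the Amazon Neptune Pricing page, and the function names are illustrative only.

```python
PREVIOUS_MIN_MNCU = 128  # smallest capacity before 32 and 64 m-NCU were introduced


def relative_cost(mncu: int, baseline_mncu: int = PREVIOUS_MIN_MNCU) -> float:
    """Cost of `mncu` as a fraction of the baseline, assuming price is linear in m-NCU."""
    return mncu / baseline_mncu


def savings_percent(mncu: int, baseline_mncu: int = PREVIOUS_MIN_MNCU) -> float:
    """Percentage saved versus the baseline capacity under the same linear assumption."""
    return (1 - relative_cost(mncu, baseline_mncu)) * 100


if __name__ == "__main__":
    for size in (32, 64, 128):
        print(f"{size} m-NCU: {relative_cost(size):.2f}x the 128 m-NCU cost, "
              f"{savings_percent(size):.0f}% savings")
```

Under this assumption, 32 m-NCU comes out at 0.25x the cost (75% savings) and 64 m-NCU at 0.50x (50% savings), which is where the "up to 75% cheaper" figure comes from.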

Getting started

To get started, you can create a Neptune Analytics graph in one of two ways:

Create an empty graph – You can follow the instructions in Creating a new Neptune Analytics graph using the AWS Management Console to create a graph with the desired capacity. This is a good option if you intend to work with multiple different test datasets, are experimenting with graph queries, or want to make sure you stick to an explicit cost budget.

Create a graph using an import task – The CreateGraphUsingImportTask (CGIT) API lets you create a Neptune Analytics graph directly from bulk load files in Amazon Simple Storage Service (Amazon S3), a Neptune Database cluster, or a Neptune Database cluster snapshot. The default capacity for CGIT is currently 128 m-NCU, so if you want to use a different capacity, you must specify it explicitly through the minProvisionedMemory or maxProvisionedMemory parameter. For more details on this configuration, refer to Use CreateGraphUsingImportTask API to import from Amazon S3.
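Both creation paths can also be scripted. The following sketch builds request parameters for the `create_graph` and `create_graph_using_import_task` operations of the AWS SDK for Python (boto3) `neptune-graph` client; the graph names, S3 path, and IAM role ARN are hypothetical placeholders, and the SDK calls are left as comments so the snippet runs without AWS credentials. For CGIT, the sketch sets minProvisionedMemory and maxProvisionedMemory to the same value as a conservative way to request one explicit capacity; check the CGIT documentation for how the service chooses a capacity when the bounds differ.

```python
def build_create_graph_request(name: str, mncu: int = 32, public: bool = True) -> dict:
    """Keyword arguments for boto3's neptune-graph `create_graph` call (sketch)."""
    if mncu <= 0:
        raise ValueError("provisionedMemory must be a positive number of m-NCU")
    return {
        "graphName": name,
        # Capacity in m-NCU; 1 m-NCU is roughly 1 GB of memory plus compute and networking.
        "provisionedMemory": mncu,
        # True: reachable without extra networking setup (requests are still IAM-authenticated).
        # False: connect through a private graph endpoint in your VPC instead.
        "publicConnectivity": public,
    }


def build_import_task_request(
    name: str, source: str, role_arn: str, mncu: int = 32, public: bool = True
) -> dict:
    """Keyword arguments for boto3's neptune-graph `create_graph_using_import_task` call (sketch)."""
    if mncu <= 0:
        raise ValueError("capacity must be a positive number of m-NCU")
    return {
        "graphName": name,
        "source": source,      # e.g. an Amazon S3 path holding the bulk load files
        "roleArn": role_arn,   # IAM role that Neptune Analytics assumes to read the source
        # CGIT defaults to 128 m-NCU; pinning both bounds requests one explicit capacity.
        "minProvisionedMemory": mncu,
        "maxProvisionedMemory": mncu,
        "publicConnectivity": public,
    }


if __name__ == "__main__":
    empty = build_create_graph_request("air-routes-sandbox")  # hypothetical graph name
    imported = build_import_task_request(
        "air-routes",                                              # hypothetical graph name
        "s3://example-bucket/air-routes/",                         # hypothetical S3 path
        "arn:aws:iam::123456789012:role/ExampleNeptuneImportRole", # hypothetical role
    )
    print(empty)
    print(imported)
    # With AWS credentials configured, the requests could be sent like this:
    #   import boto3
    #   client = boto3.client("neptune-graph")
    #   graph = client.create_graph(**empty)
    #   task = client.create_graph_using_import_task(**imported)
```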

When creating the graph, make sure that you have at least one connectivity method established (public, private, or both). For ease of experimentation, you can configure your Neptune Analytics graph to be publicly accessible, which allows connections from any source without additional networking configuration. Private graph endpoints restrict connectivity to the graph to private networking only. These endpoints are accessible only from within the VPC they are deployed into, unless you have additional networking configuration that allows cross-VPC access, such as VPC peering or AWS Transit Gateway. Regardless of which method you use, requests are always secured with IAM Database Authentication.

You can connect to your Neptune Analytics graph through HTTPS requests or the AWS SDK. To make exploration straightforward, we recommend deploying a Neptune notebook. If you already have a Neptune notebook deployed, simply set %graph_notebook_host to the endpoint of your new graph like so:

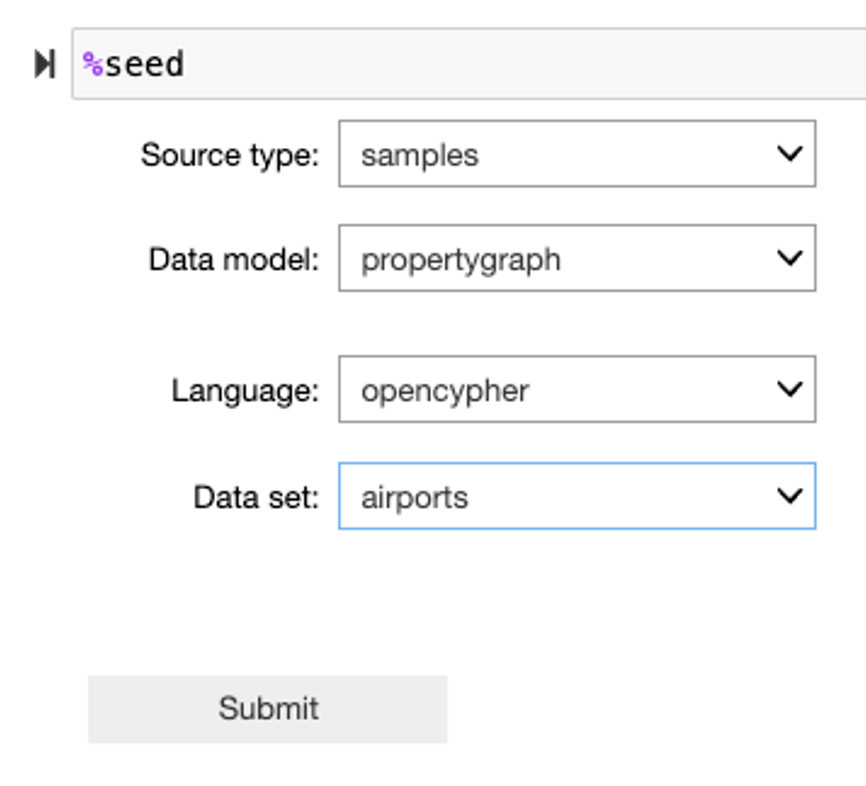
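Outside a notebook, you can send openCypher queries through the SDK. The sketch below assumes boto3’s `neptune-graph` `execute_query` operation, which returns the result as a JSON document in a streaming `payload` field, and assumes the openCypher rows arrive under a `results` key; the graph identifier is a placeholder, and only the request building and response parsing run locally.

```python
import json


def build_query_request(graph_id: str, query: str) -> dict:
    """Keyword arguments for boto3's neptune-graph `execute_query` call (sketch)."""
    return {
        "graphIdentifier": graph_id,
        "queryString": query,
        "language": "OPEN_CYPHER",
    }


def read_results(response: dict) -> list:
    """Decode the JSON document in the streaming `payload` of an execute_query response."""
    body = json.loads(response["payload"].read())
    return body.get("results", [])


if __name__ == "__main__":
    request = build_query_request("g-XXXXXXXXXX", "MATCH (n) RETURN count(n) AS nodes")
    print(request)
    # With AWS credentials configured:
    #   import boto3
    #   response = boto3.client("neptune-graph").execute_query(**request)
    #   print(read_results(response))
```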

To add a sample dataset, use the %seed command and select a dataset of interest.

Customers are responsible for the costs of AWS services. For pricing information, see the public pricing for Neptune Analytics, Amazon SageMaker Notebook Instances (if using Neptune notebooks), and AWS PrivateLink Interface Endpoints (if using private graph endpoints). Delete the deployed resources when you’re finished to stop incurring charges.
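Cleanup can also be scripted. This sketch only assembles an ordered list of boto3 calls as (service, operation, arguments) tuples; the notebook instance name is hypothetical, and it assumes the `neptune-graph` `delete_graph` operation (with `skipSnapshot=True` to skip a final snapshot) and the SageMaker `stop_notebook_instance` and `delete_notebook_instance` operations. Private graph endpoints, if you created any, are not covered here.

```python
def build_cleanup_plan(graph_id, notebook_name=None, keep_final_snapshot=False):
    """Ordered (service, operation, kwargs) tuples for tearing down the walkthrough resources."""
    plan = []
    if notebook_name is not None:
        # A notebook instance must be stopped before it can be deleted; a real script
        # would wait in between, e.g. with the SageMaker "notebook_instance_stopped" waiter.
        plan.append(("sagemaker", "stop_notebook_instance",
                     {"NotebookInstanceName": notebook_name}))
        plan.append(("sagemaker", "delete_notebook_instance",
                     {"NotebookInstanceName": notebook_name}))
    # skipSnapshot=True deletes the graph without creating a final snapshot.
    plan.append(("neptune-graph", "delete_graph",
                 {"graphIdentifier": graph_id, "skipSnapshot": not keep_final_snapshot}))
    return plan


if __name__ == "__main__":
    for service, operation, kwargs in build_cleanup_plan(
        "g-XXXXXXXXXX", notebook_name="my-neptune-notebook"  # hypothetical notebook name
    ):
        print(service, operation, kwargs)
        # To execute with credentials: getattr(boto3.client(service), operation)(**kwargs)
```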

Resizing existing graphs

As you add or remove data from your graph, you may need to increase or decrease the graph’s m-NCU capacity to keep it right-sized and cost optimized. Monitor the graph’s storage usage through the Amazon CloudWatch metric GraphStorageUsagePercent. When choosing a new capacity, you can treat this metric as scaling linearly: the same data occupies the same amount of memory at any capacity, so the percentage scales in inverse proportion to the m-NCU value.

For example, the following screenshot shows the GraphStorageUsagePercent of a 64 m-NCU graph. The value of this metric is 14%.

Since our graph data only uses a small portion of the overall storage, we can downsize to save costs. The following screenshot depicts the GraphStorageUsagePercent after downsizing from 64 m-NCUs to 32 m-NCUs.

Because the m-NCU sizing is linear, the same graph data that consumed 14% of storage in 64 m-NCUs correspondingly consumes 28% of the storage in 32 m-NCUs.
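Because the relationship is linear, you can estimate the metric at a candidate capacity before resizing. The following sketch does that arithmetic; the 80% headroom threshold and the candidate list are assumptions for illustration, not Neptune Analytics recommendations, and reading the current metric value from CloudWatch is left out.

```python
from typing import Iterable, Optional


def projected_usage_percent(current_percent: float, current_mncu: int, target_mncu: int) -> float:
    """Estimate GraphStorageUsagePercent after resizing, assuming the metric scales linearly.

    The data footprint stays the same, so the percentage scales by current/target capacity.
    """
    return current_percent * current_mncu / target_mncu


def smallest_fitting_capacity(
    current_percent: float,
    current_mncu: int,
    candidates: Iterable[int],
    max_percent: float = 80.0,  # assumed headroom for growth; tune for your workload
) -> Optional[int]:
    """Return the smallest candidate whose projected usage stays at or below max_percent."""
    for size in sorted(candidates):
        if projected_usage_percent(current_percent, current_mncu, size) <= max_percent:
            return size
    return None  # nothing fits; keep the current capacity or scale up
```

With the numbers from the example, `projected_usage_percent(14, 64, 32)` gives 28.0, matching the screenshot, and `smallest_fitting_capacity(14, 64, [32, 64, 128])` returns 32.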

Changing the m-NCU capacity is seamless and doesn’t cause any read or write downtime.

To initiate a resize of the graph, use the UpdateGraph API, setting the provisionedMemory parameter to the new desired value of m-NCU.

Once the UpdateGraph command has been issued, you can continue to read and write to the graph even as the change is being processed. Once the change has been processed, you will see the new value reflected in the AWS console and through the ListGraphs API. Since the change happens on the underlying host, the same endpoints are used and therefore no changes to the client are required.
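The resize flow can be sketched as follows, assuming boto3’s `neptune-graph` `update_graph` and `get_graph` operations. The sketch polls `get_graph` until the graph reports an `AVAILABLE` status together with the new `provisionedMemory` value; the polling interval and timeout are arbitrary choices, and `get_graph` is passed in as a function so the logic can be exercised without AWS access.

```python
import time
from typing import Callable


def build_update_graph_request(graph_id: str, target_mncu: int) -> dict:
    """Keyword arguments for boto3's neptune-graph `update_graph` call (sketch)."""
    return {"graphIdentifier": graph_id, "provisionedMemory": target_mncu}


def wait_for_capacity(
    get_graph: Callable[..., dict],
    graph_id: str,
    target_mncu: int,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the graph is AVAILABLE and reports the target provisionedMemory."""
    waited = 0.0
    while True:
        graph = get_graph(graphIdentifier=graph_id)
        if graph.get("status") == "AVAILABLE" and graph.get("provisionedMemory") == target_mncu:
            return graph
        if waited >= timeout_seconds:
            raise TimeoutError(
                f"{graph_id} did not reach {target_mncu} m-NCU within {timeout_seconds} s"
            )
        # Reads and writes keep working meanwhile; this loop only tracks completion.
        sleep(poll_seconds)
        waited += poll_seconds
```

With a real client, you would call `client.update_graph(**build_update_graph_request(graph_id, 32))` and then `wait_for_capacity(client.get_graph, graph_id, 32)`. Because reads and writes continue during the resize, waiting is only needed when a later step depends on the new capacity.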

If the current storage footprint of your graph won’t fit in a smaller graph, then the scale-down fails with the following error:

An error occurred (ValidationException) when calling the UpdateGraph operation (reached max retries: 0): Graph [g-XXXXXXXXXX] cannot be scaled down to [32] m-NCUs due to storage memory constraints
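Because an oversized scale-down is rejected up front with a ValidationException, a script can treat that case as an expected outcome rather than a crash. This sketch assumes botocore’s `ClientError`, which exposes the error code and message in its `response` dictionary; it reads that attribute defensively so it runs without botocore installed, and re-raises any other error.

```python
from typing import Callable, Optional, Tuple


def try_resize(
    update_graph: Callable[..., dict], graph_id: str, target_mncu: int
) -> Tuple[bool, Optional[str]]:
    """Attempt a resize; return (True, None) on success or (False, message) if rejected.

    `update_graph` is any callable with the shape of boto3's neptune-graph `update_graph`.
    """
    try:
        update_graph(graphIdentifier=graph_id, provisionedMemory=target_mncu)
    except Exception as exc:
        response = getattr(exc, "response", None)
        error = response.get("Error", {}) if isinstance(response, dict) else {}
        if error.get("Code") == "ValidationException":
            # For example, the graph's data does not fit in the smaller capacity.
            return False, error.get("Message", str(exc))
        raise  # throttling, permissions, and other errors are not handled here
    return True, None
```

For example, `ok, reason = try_resize(client.update_graph, graph_id, 32)` leaves the graph at its current capacity and returns the service’s message when the data doesn’t fit.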

Conclusion

In this post, we showed how you can minimize your cost during development for graph analytics workloads by using the new, smaller capacity units (32 and 64 m-NCU) for Neptune Analytics. To get started, simply create a new graph and specify the capacity units. We also discussed how to monitor your graph’s storage footprint with the GraphStorageUsagePercent CloudWatch metric, and how to resize m-NCUs through the UpdateGraph API as your graph size fluctuates. Changing the capacity units to resize the graph is a seamless operation, without read or write downtime.

Get started with Neptune Analytics today by launching a graph and deploying a Neptune notebook. Sample queries and sample data can be found within the notebook or the Graph Notebook GitHub repo.

About the authors

Melissa Kwok is a Senior Neptune Specialist Solutions Architect at AWS, where she helps customers of all sizes and verticals build cloud solutions according to best practices. When she’s not at her desk you can find her in the kitchen experimenting with new recipes or reading a cookbook.

Utkarsh Raj is a Senior Software Engineer with the Amazon Neptune team. He has worked on different storage and platform aspects of Neptune Database and Neptune Analytics and likes working on problems related to storage engine and distributed systems.
