The number of academic papers released daily is increasing, making it difficult for researchers to track all the latest innovations. Automating the data extraction process, especially from tables and figures, can allow researchers to focus on data analysis and interpretation rather than manual data extraction. With quicker access to relevant data, researchers can accelerate the pace of their work and contribute to advancements in their fields.

Traditionally, researchers extract information from tables and figures manually, which is time-consuming and prone to human error. Some general-purpose object detection models, such as YOLO and Faster R-CNN, have been adapted for this task, but they may not be specialized enough to handle academic paper layouts. Document layout analysis models capture the overall structure of a document but may lack the precision needed to locate tables and figures accurately.

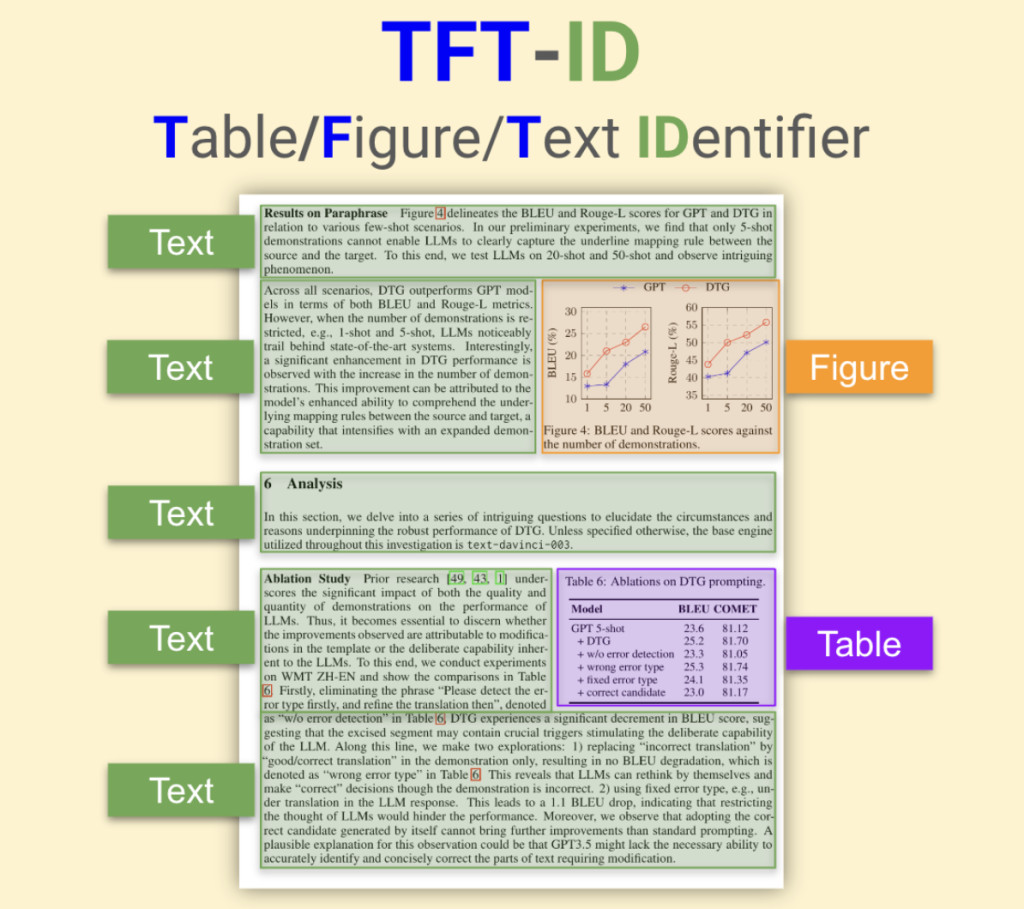

Researchers propose TF-ID (Table/Figure Identifier), a family of object detection models, to address the challenge of automatically locating and extracting tables and figures from academic papers. The models are trained on a large dataset of academic papers with manually annotated table and figure regions, which allows them to recognize the visual patterns associated with these elements.

TF-ID operates on images of academic paper pages. During training, the models learn to recognize visual patterns such as grid structures, captions, and image formats. Once trained, a model processes new papers and outputs bounding boxes that indicate the locations of detected tables and figures. These bounding boxes can then be used for further processing, such as image cropping, optical character recognition (OCR), or data extraction. In this way, TF-ID makes information that is often locked inside visual elements available for analysis. Automating this step may also reduce the transcription errors that manual extraction is prone to.
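To make the cropping step concrete, here is a minimal sketch of how bounding boxes might be turned into cropped regions. It does not use the TF-ID models or their actual output format: the `Detection` class, the `crop_detections` function, the `(x1, y1, x2, y2)` pixel convention, and the `min_score` threshold are all assumptions made for illustration, and the page is a plain list of pixel rows standing in for a rendered page image.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection record: (x1, y1) is top-left, (x2, y2) is bottom-right."""
    label: str
    score: float
    x1: int
    y1: int
    x2: int
    y2: int


def crop_detections(page, detections, min_score=0.5):
    """Return (label, crop) pairs for detections scoring at least min_score.

    `page` is a list of pixel rows. Boxes are clamped to the page bounds
    before slicing, and boxes that end up empty are skipped.
    """
    height = len(page)
    width = len(page[0]) if height else 0
    crops = []
    for det in detections:
        if det.score < min_score:
            continue
        x1, x2 = max(0, det.x1), min(width, det.x2)
        y1, y2 = max(0, det.y1), min(height, det.y2)
        if x1 >= x2 or y1 >= y2:
            continue  # box is empty or lies entirely outside the page
        crops.append((det.label, [row[x1:x2] for row in page[y1:y2]]))
    return crops


# Toy 6x4 "page" in which every pixel stores its own (x, y) position.
page = [[(x, y) for x in range(6)] for y in range(4)]
detections = [
    Detection("table", 0.92, 1, 0, 4, 2),
    Detection("figure", 0.30, 0, 2, 6, 4),  # below the threshold, skipped
    Detection("figure", 0.88, 3, 2, 9, 5),  # overflows the page, clamped
]
crops = crop_detections(page, detections)
```

With a real image library, the list slicing would be replaced by that library's crop call, and each crop could then be passed on to OCR or another extraction step.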

The performance of TF-ID models can vary with the size and quality of the training dataset, the complexity of the paper layouts, and the specific object detection architecture used. No quantitative results are reported here, but the models' design suggests that they should outperform manual extraction in speed and accuracy. However, complex layouts with overlapping figures or tables still pose challenges.
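Since no metric is given, it may help to recall how overlap between boxes is usually measured in object detection. The sketch below computes intersection over union (IoU), a standard measure both for comparing a predicted box with an annotated one and for spotting detections that overlap each other. It is a generic illustration, not the evaluation procedure used for TF-ID; the function name `iou` and the `(x1, y1, x2, y2)` box convention are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap rectangle, zero if the boxes are disjoint.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)
annotated = (5, 0, 15, 10)
overlap = iou(predicted, annotated)  # 50 / 150, i.e. one third
```

A detection is commonly counted as correct when its IoU with an annotated region exceeds a chosen threshold, and a high IoU between two predictions on the same page can flag the overlapping-layout cases mentioned above.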

In conclusion, TF-ID uses object detection to automate the extraction of tables and figures from academic papers, a task that is otherwise done by hand. The approach relies on a large annotated dataset to locate tables and figures, and it is expected to be faster and less error-prone than manual extraction. While challenges remain in handling complex layouts and recognizing table structures, TF-ID represents a meaningful step toward automating data extraction from academic literature.

Check out the Model and GitHub. All credit for this research goes to the researchers of this project.

The post TFT-ID (Table/Figure/Text IDentifier): An Object Detection AI Model Finetuned to Extract Tables, Figures, and Text Sections in Academic Papers appeared first on MarkTechPost.
