Deep learning has demonstrated remarkable success across many scientific fields and applications. These models often have very large numbers of parameters, which demand extensive computational power for training and inference. Researchers have therefore been exploring ways to reduce model size without compromising performance. Sparsity, where a large fraction of a network's weights are zero, is one of the most actively investigated approaches, because it promises neural networks that are both powerful and resource-efficient.

One of the main challenges with neural networks is the computational power and memory required by their large number of parameters. Traditional compression techniques such as pruning remove a portion of the weights according to predetermined criteria. However, these methods often fall short of the efficiency they could achieve because the zeroed weights are still kept in memory, which limits the benefits of sparsity. This gap highlights the need for genuinely sparse implementations that store and compute with only the remaining non-zero weights, fully exploiting the memory and computational savings that traditional compression leaves on the table.
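To make this concrete, here is a minimal Python sketch of magnitude pruning, one common choice of "predetermined criterion" (an assumption: the article does not say which criterion it means). The helper name `magnitude_prune` is hypothetical. The point it illustrates is that the pruned matrix keeps its full dense shape, zeros included.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of a dense matrix.

    Hypothetical helper for illustration. `weights` is a list of rows.
    Returns (pruned, mask); both keep the original dense shape.
    """
    rows, cols = len(weights), len(weights[0])
    # Rank every (i, j) position by absolute weight, smallest first.
    order = sorted(
        ((i, j) for i in range(rows) for j in range(cols)),
        key=lambda ij: abs(weights[ij[0]][ij[1]]),
    )
    k = int(rows * cols * sparsity)  # number of weights to remove
    mask = [[1] * cols for _ in range(rows)]
    for i, j in order[:k]:
        mask[i][j] = 0
    # Masked weights: zeros are stored explicitly, so memory use is unchanged.
    pruned = [[weights[i][j] * mask[i][j] for j in range(cols)] for i in range(rows)]
    return pruned, mask


W = [[0.5, -0.1, 0.03], [-0.8, 0.02, 0.4]]
pruned, mask = magnitude_prune(W, 0.5)
print(pruned)
```

At 50% sparsity, three of the six weights become zero, yet `pruned` still holds six numbers.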

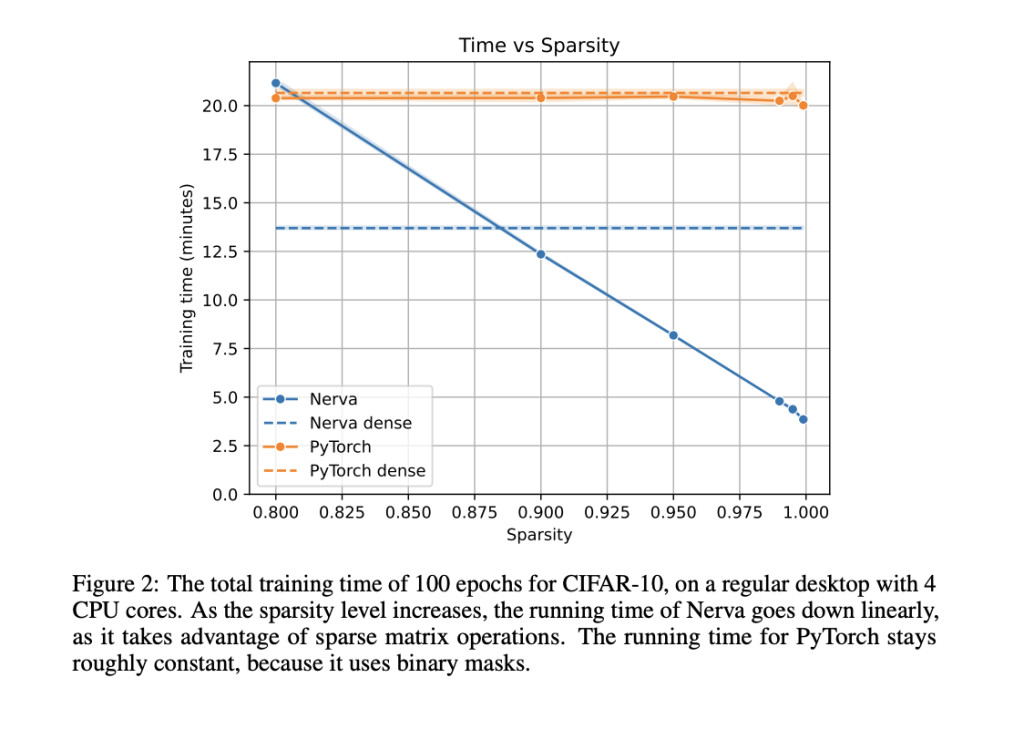

Most existing methods for implementing sparse neural networks rely on binary masks to enforce sparsity. These masks only partially exploit the advantages of sparse computation, because the zeroed weights are still stored in memory and still passed through computations. Even techniques like Dynamic Sparse Training, which adjusts the network topology during training, depend on dense matrix operations. Libraries such as PyTorch and Keras offer some support for sparse models, but because they rely on binary masks, their implementations do not deliver genuine reductions in memory use or computation time. As a result, the full potential of sparse neural networks remains largely untapped.
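The following minimal sketch shows why mask-based sparsity saves little work. It uses plain Python, and `masked_dense_matvec` is a hypothetical helper, not a PyTorch or Keras API. The product y = (W ⊙ M) x still visits every weight, so the number of multiply-accumulate operations stays at rows × cols however many entries the mask zeroes out.

```python
def masked_dense_matvec(weights, mask, x):
    """Compute y = (W * M) @ x densely, as mask-based sparsity does.

    Hypothetical helper. Returns (y, macs), where macs counts the
    multiply-accumulate steps performed, including those on zeroed weights.
    """
    y = []
    macs = 0
    for w_row, m_row in zip(weights, mask):
        acc = 0.0
        for w, m, xj in zip(w_row, m_row, x):
            acc += (w * m) * xj  # masked-out weights are still visited
            macs += 1
        y.append(acc)
    return y, macs


W = [[0.5, -0.1, 0.03], [-0.8, 0.02, 0.4]]
M = [[1, 0, 0], [1, 0, 1]]
x = [1.0, 2.0, 3.0]
y, macs = masked_dense_matvec(W, M, x)
nnz = sum(sum(row) for row in M)  # weights that actually matter
print(y, macs, nnz)
```

Only three weights survive the mask, but six multiply-accumulates are performed; the gap grows with sparsity.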

Researchers at Eindhoven University of Technology have introduced Nerva, a neural network library written in C++ and designed to provide a truly sparse implementation. Nerva uses Intel’s Math Kernel Library (MKL) for sparse matrix operations, which removes the need for binary masks and reduces both training time and memory usage. The library also provides a Python interface, making it accessible to researchers familiar with popular frameworks like PyTorch and Keras. Nerva’s design focuses on runtime efficiency, memory efficiency, energy efficiency, and accessibility, with the aim of meeting the research community’s needs.

Nerva uses sparse matrix operations to significantly reduce the computational burden of neural networks. Unlike mask-based methods that store zeroed weights, Nerva stores only the non-zero entries, which leads to substantial memory savings. The library is currently optimized for CPU performance, with GPU support planned for the future. Essential operations on sparse matrices are implemented efficiently, allowing Nerva to handle large-scale models while maintaining high performance. For example, in sparse matrix multiplications Nerva computes only the values for the non-zero entries, which avoids storing entire dense products in memory.
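As an illustration of storing only non-zero entries, here is a minimal sketch assuming a compressed sparse row (CSR) layout, a standard sparse format supported by MKL's sparse routines. This is not Nerva's code, and all function names are hypothetical. `csr_matvec` touches only stored entries, while `sampled_outer` computes the product a bᵀ only at the matrix's non-zero positions (the kind of product needed, for instance, for a weight gradient restricted to existing connections), returning nnz values instead of a full dense matrix.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) to CSR arrays (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # end of this row's entries
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """y = A @ x, touching only the stored non-zero entries of A."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


def sampled_outer(col_idx, row_ptr, a, b):
    """Entries of a b^T at A's non-zero positions only, in CSR order.

    A dense outer product would need len(a) * len(b) entries; this returns nnz.
    """
    out = []
    for i in range(len(row_ptr) - 1):
        for k in range(row_ptr[i], row_ptr[i + 1]):
            out.append(a[i] * b[col_idx[k]])
    return out


A = [[0.5, 0.0, 0.0], [-0.8, 0.0, 0.4]]
vals, cols, ptr = to_csr(A)
y = csr_matvec(vals, cols, ptr, [1.0, 2.0, 3.0])
g = sampled_outer(cols, ptr, [1.0, 2.0], [3.0, 4.0, 5.0])
print(vals, cols, ptr, y, g)
```

Here `sampled_outer` produces three values rather than the six a dense 2 × 3 outer product would require.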

The performance of Nerva was evaluated against PyTorch on the CIFAR-10 dataset. Nerva’s runtime decreased linearly as sparsity increased, and it outperformed PyTorch in high-sparsity regimes: at 99% sparsity, Nerva reduced runtime by a factor of four compared to a PyTorch model using masks. Nerva achieved accuracy comparable to PyTorch while significantly reducing training and inference times. Memory usage also dropped sharply, with a 49-fold reduction for models at 99% sparsity compared to fully dense models. These results highlight Nerva’s ability to train sparse neural networks efficiently without sacrificing accuracy.
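A memory reduction of this size is plausible from simple arithmetic. The sketch below is a back-of-envelope estimate, not the paper's measurement: it assumes CSR storage with 32-bit float values, 32-bit column indices and 32-bit row pointers, and uses hypothetical square layer shapes. Each stored non-zero then costs 8 bytes instead of 4, so at 1% density the dense/CSR ratio approaches 1 / (0.01 × 2) = 50 once the row-pointer overhead becomes negligible.

```python
def dense_bytes(rows, cols, value_bytes=4):
    """Memory for a dense float32 matrix (assumed 4-byte values)."""
    return rows * cols * value_bytes


def csr_bytes(rows, cols, density, value_bytes=4, index_bytes=4):
    """Memory for a CSR matrix under the stated 32-bit assumptions."""
    nnz = round(rows * cols * density)
    # One value and one column index per non-zero, plus rows + 1 row pointers.
    return nnz * (value_bytes + index_bytes) + (rows + 1) * index_bytes


# Hypothetical layer shapes at 99% sparsity (1% density).
for rows, cols in [(1000, 1000), (4096, 4096)]:
    ratio = dense_bytes(rows, cols) / csr_bytes(rows, cols, density=0.01)
    print(f"{rows}x{cols}: dense/CSR = {ratio:.1f}")
```

This prints ratios of about 47.6 and 49.4; real figures depend on the model's actual layer shapes and on Nerva's storage format.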

In conclusion, Nerva provides a truly sparse implementation that addresses the inefficiencies of mask-based methods and substantially improves runtime and memory usage. The research shows that Nerva can match the accuracy of frameworks like PyTorch while running more efficiently, particularly at high sparsity. With ongoing development, including planned support for dynamic sparse training and GPU operations, Nerva is poised to become a valuable tool for researchers seeking to optimize neural network models.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This Deep Learning Paper from Eindhoven University of Technology Releases Nerva: A Groundbreaking Sparse Neural Network Library Enhancing Efficiency and Performance appeared first on MarkTechPost.