Large Language Models (LLMs) can improve their final answers by spending additional compute on generating intermediate thoughts during inference. Such System 2 strategies mimic deliberate, conscious reasoning. Since the introduction of the Chain-of-Thought method, many more System 2 strategies have been proposed, including Rephrase and Respond, System 2 Attention, and Branch-Solve-Merge. These methods use intermediate reasoning steps to improve both the quality and the accuracy of the final responses produced by LLMs.

System 1 can be understood as the direct application of the Transformer model to generate a response straight from the input, without producing intermediate tokens. System 2 methods, on the other hand, generate intermediate tokens or steps and use more elaborate strategies, such as search and repeated prompting, before arriving at a final response.
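The contrast can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: `LLM` is a hypothetical stand-in for any text-in, text-out model call, the prompt wording is invented, and the System 2 function assumes a two-step Rephrase and Respond style pipeline.

```python
from typing import Callable

# Hypothetical stand-in for any text-in, text-out model call (an assumption).
LLM = Callable[[str], str]


def system1_answer(llm: LLM, question: str) -> str:
    """System 1: a single call that maps the input directly to a response."""
    return llm(question)


def system2_rephrase_and_respond(llm: LLM, question: str) -> str:
    """System 2, two-step style: generate an intermediate rephrasing first,
    then answer the rephrased question. Prompt wording is illustrative only."""
    rephrased = llm(f"Rephrase and expand this question: {question}")
    return llm(f"Answer this question: {rephrased}")


class CountingLLM:
    """Toy model that echoes its prompt and counts how often it is called."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        self.calls += 1
        return f"<output for: {prompt}>"
```

Running both functions against `CountingLLM` shows where the extra cost comes from: the System 1 path makes one model call, while this System 2 path makes two and also has to generate the intermediate text.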

Because System 2 methods include explicit reasoning, they frequently produce more accurate outcomes. However, their higher compute cost and latency make them less suitable for production systems, which mostly rely on the faster System 1 generation.

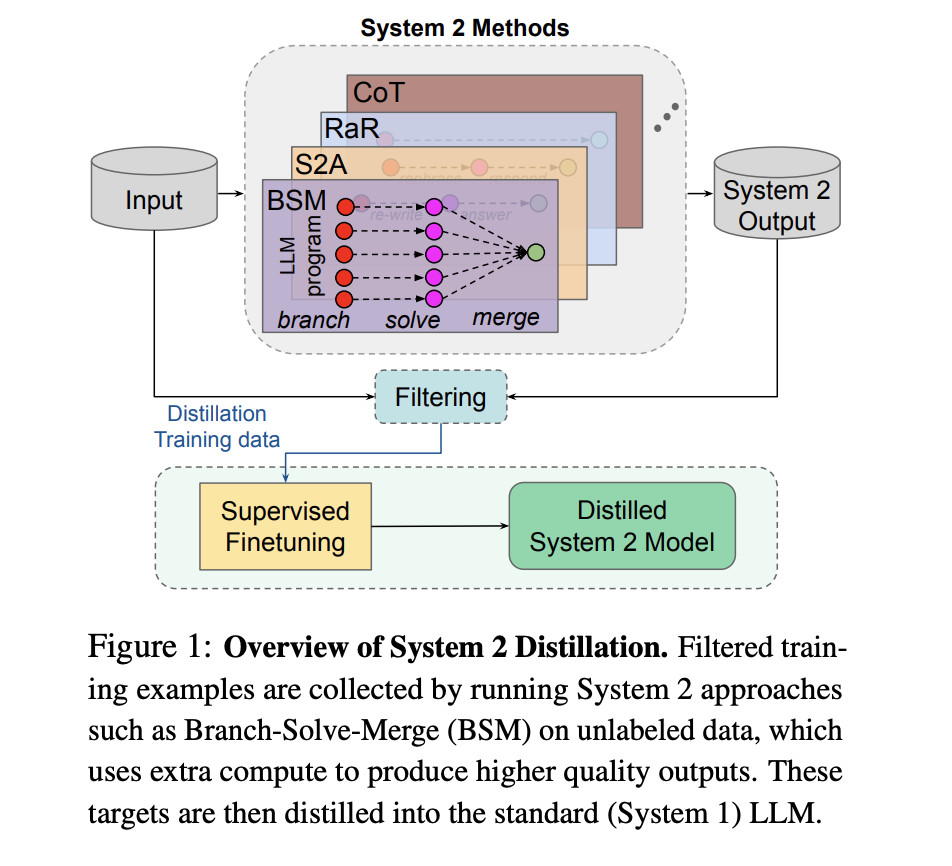

In this study, a team of researchers from Meta FAIR investigates self-supervised ways to compile, or distill, these high-quality System 2 outputs back into the LLM’s direct generations. By removing the need to generate intermediate reasoning tokens at inference time, the procedure aims to build the reasoning directly into the model’s more instinctive System 1 responses. This avoids the higher compute cost of System 2 methods while still improving on the original System 1 outputs.
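One way such a self-supervised pipeline could collect training targets is sketched below. The article does not say how output quality is judged without labels, so the majority-vote agreement filter here is an assumption, and `system2_sample`, `n_samples`, and `min_agreement` are hypothetical names. The fine-tuning step that would consume the resulting pairs is omitted.

```python
from collections import Counter
from typing import Callable, Iterable, List, Tuple


def build_distillation_set(
    system2_sample: Callable[[str], str],
    inputs: Iterable[str],
    n_samples: int = 8,
    min_agreement: float = 0.75,
) -> List[Tuple[str, str]]:
    """Collect (input, final answer) pairs from a System 2 pipeline.

    Assumption: with no labels available, an answer is trusted only if
    enough of the sampled System 2 runs agree on it. The intermediate
    reasoning is discarded; only the final answer is kept as the target.
    """
    dataset: List[Tuple[str, str]] = []
    for x in inputs:
        # Each call is expected to return only the final answer of one run.
        answers = [system2_sample(x) for _ in range(n_samples)]
        answer, count = Counter(answers).most_common(1)[0]
        if count / n_samples >= min_agreement:
            dataset.append((x, answer))
    return dataset
```

Fine-tuning the base model on these pairs, with the plain input as the prompt and the System 2 final answer as the target, is what would let it produce the answer directly without generating the intermediate tokens.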

According to the team, the results suggest that a number of System 2 methods can be distilled into System 1 effectively. The distilled model is cheaper at inference while retaining the quality gains of System 2 reasoning. Rephrase and Respond, System 2 Attention, and Branch-Solve-Merge, for instance, can be distilled into System 1, producing better results than the original System 1 outputs at a lower computational cost than running the System 2 method directly.

The team suggests that System 2 distillation will be essential to future AI systems that learn continually. Such systems could focus their System 2 resources on the reasoning tasks they still find difficult and use distilled System 1 responses for tasks they can already complete quickly. This would let them make the most of their processing capacity while sustaining strong performance across a variety of tasks.

In conclusion, incorporating System 2 reasoning methods into LLM inference represents significant progress in AI capabilities. By distilling these deliberate, higher-quality reasoning procedures into more efficient System 1 generation, better performance can be obtained without paying the significant computational cost of System 2 approaches. This makes distillation a practical option for real-world applications, since it improves the model’s output quality and accuracy while using available resources efficiently.

The post Optimizing Artificial Intelligence Performance by Distilling System 2 Reasoning into Efficient System 1 Responses appeared first on MarkTechPost.
