In September 2022, Ethereum transitioned to a Proof of Stake (PoS) consensus model. This change allows anyone with a minimum of 32 ether to stake their holdings and operate a validator node, thereby participating in network validation and earning staking rewards. The PoS system is engineered so that individuals and companies with standard hardware can run a validator node. Validator downtime results in penalties, but these are relatively minor, and downtime can largely be avoided with a well-designed system. Institutional stakers, however, typically operate many validators and therefore face more demanding technical and uptime requirements.

In this post, we explore the technical challenges and requirements of operating an institutional-grade Ethereum staking service. Additionally, we outline a solution for deploying an Ethereum staking service on AWS. For more comprehensive information on installation and deployment within your AWS account, please visit the accompanying GitHub repository.

How staking software works

The Proof of Stake network, also known as the Beacon Chain, comprises thousands of individually operated nodes around the world. A beacon node, also referred to as a consensus client, communicates with other beacon nodes via peer-to-peer network protocols. These nodes primarily exchange information about newly proposed blocks and the attestations that validators make to them. There are several consensus clients to choose from, written in a variety of programming languages. The most popular are Lighthouse (Rust), Lodestar (TypeScript), Prysm (Go), and Teku (Java). A comprehensive list of these clients is available on ethereum.org.

An execution client runs alongside the consensus client. Its primary responsibilities are accepting and broadcasting new transactions submitted to it, and processing new blocks by executing the transactions they contain and updating its local data store. The most widely used execution clients are Geth and Erigon (Go), Nethermind (C#/.NET), and Besu (Java). The full list and the distribution of execution clients across the network are available on ethernodes.org.

By running both a consensus client and an execution client, you maintain a real-time copy of the blockchain state. This capability is invaluable for indexing blockchain data and receiving notifications about specific events, such as the execution of a particular smart contract or activity in a designated wallet. However, operating these two clients alone is insufficient for network validation, which is necessary to earn rewards.

A third type of software, known as a validator client, is required for network validation. A validator client has two primary responsibilities: 1) monitoring new blocks as they are created and attesting to their validity, and 2) proposing new blocks. To promote honest behavior, validators must first stake 32 ether into the beacon chain. This stake is at risk in two ways. If the validator fails to perform its duties due to downtime, it incurs a minor penalty. If the validator acts maliciously, such as by signing two conflicting blocks or attestations, it is slashed and incurs a significant penalty. Therefore, securing validator private keys is crucial to prevent compromises by malicious actors.
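To make the malicious-behavior case concrete, the following is a minimal sketch of the two attestation conditions that the Ethereum consensus specification treats as slashable: a double vote (two different attestations for the same target epoch) and a surround vote (one attestation's source and target span encloses another's). The class and field names are simplified for illustration and are not taken from any client's codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationData:
    # Simplified, hypothetical view of an attestation: real attestations
    # carry checkpoints (epoch + root), a slot, and a committee index.
    source_epoch: int
    target_epoch: int
    beacon_block_root: str


def is_double_vote(a: AttestationData, b: AttestationData) -> bool:
    """Two distinct attestations that vote for the same target epoch."""
    return a != b and a.target_epoch == b.target_epoch


def is_surround_vote(outer: AttestationData, inner: AttestationData) -> bool:
    """`outer` strictly encloses `inner` (earlier source, later target)."""
    return (
        outer.source_epoch < inner.source_epoch
        and inner.target_epoch < outer.target_epoch
    )


def is_slashable(a: AttestationData, b: AttestationData) -> bool:
    """True if signing both attestations with one key would be slashable."""
    return is_double_vote(a, b) or is_surround_vote(a, b) or is_surround_vote(b, a)
```

This is why running the same validator key in two places at once is dangerous: two clients signing independently can easily produce a double vote, even with no malicious intent.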

Many consensus clients include a validator client, as it is typically a relatively lightweight component.

Challenges and requirements of running a staking service

Operating a staking service involves navigating various risks and challenges. One primary risk is incurring penalties due to downtime, or because a bug in a consensus client leads to incorrect behavior. For instance, if a bug causes a client to miss validations, the staking service must promptly switch to an alternative client until the issue is resolved in the original one. A notable occurrence of this was in May 2023, when a bug in the Prysm client temporarily hindered its block validation capabilities (see Post-Mortem Report). To mitigate the risk of penalties due to client downtime, it is crucial to ensure that the clients are highly available and capable of rapid recovery in the event of a failure. This is particularly challenging with execution clients, as a fresh synchronization can take upwards of 24 hours. Therefore, architecting the storage so that it can be reused across client restarts is vital.
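The client-switching idea can be sketched as a simple ordered failover: probe each beacon node and use the first one that reports itself ready. The sketch below assumes the standard Beacon API health endpoint (`/eth/v1/node/health`, which returns HTTP 200 when the node is synced and ready). The function names and the endpoint URLs in the usage comment are hypothetical, and a production setup would also need slashing protection when moving validator keys between clients.

```python
import urllib.request
from typing import Callable, Iterable, Optional


def http_health_probe(base_url: str, timeout: float = 2.0) -> bool:
    """Return True only if the beacon node answers HTTP 200 (ready).

    A syncing node answers 206 and an unhealthy node answers 503;
    both are treated as "not ready" here.
    """
    try:
        url = f"{base_url}/eth/v1/node/health"
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection errors, timeouts, and HTTP error responses.
        return False


def pick_endpoint(
    endpoints: Iterable[str],
    probe: Callable[[str], bool] = http_health_probe,
) -> Optional[str]:
    """Return the first endpoint whose probe succeeds, in priority order."""
    for url in endpoints:
        if probe(url):
            return url
    return None


# Example (hypothetical in-cluster service names):
# pick_endpoint(["http://lighthouse:5052", "http://prysm:3500"])
```

Passing the probe in as a parameter keeps the selection logic testable without a live node.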

Another critical aspect is the security and access control of validator keys, as these keys provide access to potentially significant financial assets. Implementing stringent access control policies, while maintaining the resilience and availability of the keys, is essential for operational excellence.

Finally, a streamlined method for monitoring the service and receiving alerts about significant operational and security events is indispensable.

Solution overview

For those interested in a deeper dive into the solution or looking to deploy it in their own AWS account, refer to the associated GitHub repository.

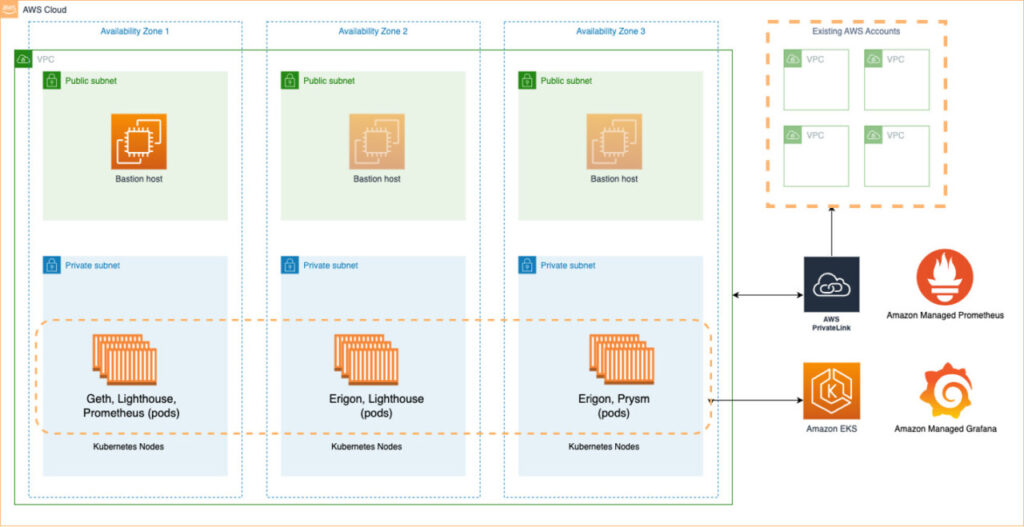

The diagram below shows the primary components involved in the solution.

This solution uses Kubernetes on Amazon Elastic Kubernetes Service (EKS). EKS manages the availability and scalability of the Kubernetes control plane nodes, which are responsible for scheduling containers, ensuring application availability, managing cluster data storage, and performing other essential functions. To bolster service reliability, the EKS cluster operates across multiple Availability Zones (AZs) within a single Region, safeguarding against service disruptions in the unlikely event of an AZ outage.

In this deployment, we utilize two types of clients on the EKS cluster: execution clients (Geth and Erigon) and consensus clients (Lighthouse and Prysm). These are deployed using Helm charts provided by Stakewise. We selected these particular clients for their popularity and illustrative value, although this solution is easily adaptable to other clients.

To monitor the deployment, we use the Prometheus Operator with kube-prometheus-stack. This setup runs a Prometheus instance within the cluster that collects metrics from both the execution and consensus clients. The Prometheus server is configured to send its data to Amazon Managed Service for Prometheus (AMP), which is set as the remote_write target. The gathered data is then visualized using Grafana dashboards hosted on Amazon Managed Grafana. Examples of these dashboards appear later in this post.
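As an illustration of the remote_write wiring, the following sketch builds a values override for kube-prometheus-stack that points Prometheus at an AMP workspace using SigV4 request signing. The function name, Region, and workspace ID are placeholders, the values layout reflects the chart's `prometheus.prometheusSpec.remoteWrite` setting as we understand it, and the repository's actual configuration may differ. The Prometheus pod also needs IAM permissions to write to the workspace, which are not shown here.

```python
import json


def amp_remote_write_values(region: str, workspace_id: str) -> dict:
    """Build a Helm values override for remote_write to an AMP workspace."""
    url = (
        f"https://aps-workspaces.{region}.amazonaws.com"
        f"/workspaces/{workspace_id}/api/v1/remote_write"
    )
    return {
        "prometheus": {
            "prometheusSpec": {
                "remoteWrite": [
                    {
                        "url": url,
                        # SigV4 signing with the pod's AWS credentials.
                        "sigv4": {"region": region},
                    }
                ]
            }
        }
    }


if __name__ == "__main__":
    # Placeholder Region and workspace ID; substitute your own.
    values = amp_remote_write_values("us-east-1", "ws-EXAMPLE")
    print(json.dumps(values, indent=2))
```

Because JSON is a subset of YAML, the printed output can be saved to a file and passed to Helm as a values file.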

The remainder of this blog post will delve into the three primary facets of the solution:

Ensuring high availability of clients in case of failure.

Securing the network perimeters of the EKS cluster.

Comprehensive monitoring of both the cluster and the clients.

Additionally, we will briefly discuss the management of validator keys and validator clients.

Running highly available clients

We use Amazon Elastic Block Store (Amazon EBS) gp3 storage and create a Kubernetes storage class for it. Notably, the storage class sets its reclaimPolicy to Retain.

The reclaimPolicy of Retain ensures that the EBS volume persists beyond the lifecycle of the pod it is attached to. This enables us to quickly launch a new execution client pod after one has been stopped by attaching the fully synchronized EBS volume of the stopped pod to the new pod. Without this reclaimPolicy, the new pod would require at least 1 day (Geth) or 1 week (Erigon) to fully synchronize.
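A storage class along these lines can be sketched as follows. This is not the repository's manifest: the class name is hypothetical, and the provisioner (the Amazon EBS CSI driver), the volume binding mode, and volume expansion are assumptions layered on top of the two settings the post names, gp3 and Retain.

```python
import json


def gp3_retain_storage_class(name: str = "ethereum-gp3-retain") -> dict:
    """Return a Kubernetes StorageClass manifest as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},  # hypothetical name
        # Assumption: volumes are provisioned by the Amazon EBS CSI driver.
        "provisioner": "ebs.csi.aws.com",
        "parameters": {"type": "gp3"},
        # Keep the EBS volume (and the synced chain data) when the claim
        # that requested it is deleted.
        "reclaimPolicy": "Retain",
        # Assumption: create the volume in the AZ where the pod is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    print(json.dumps(gp3_retain_storage_class(), indent=2))
```

The printed JSON can be applied with `kubectl apply -f`, which accepts JSON as well as YAML. Note that an EBS volume lives in a single AZ, so a replacement pod must be scheduled in the same AZ to reattach it.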

For optimal price performance, the cluster nodes run on r7g instances, powered by AWS Graviton3 processors, which are well suited to memory-intensive workloads.

Securing the network boundaries of the EKS cluster

In light of the significant financial risks associated with security breaches, ensuring the solution is not accessible via the public internet is critical. To this end, the EKS cluster is set up within a newly created Virtual Private Cloud (VPC), although it can also be integrated into an existing VPC. The nodes within the cluster are situated in private subnets, making them inaccessible from the public internet.

Administrative access to the EKS cluster is strictly limited to a bastion host. This host is located within a public subnet of the VPC and provides a secure entry point for operational tasks, such as deploying Helm charts and configuring the execution and consensus clients. To connect the EKS VPC securely to any existing platform infrastructure, you can use AWS PrivateLink, which is outside the scope of this post.

Monitoring the EKS cluster and the clients

The solution collects Prometheus metrics from both the EKS cluster and the execution and consensus clients. These metrics are scraped by the Prometheus instance operating within the cluster and are then stored in Amazon Managed Service for Prometheus for persistence. We have created multiple dashboards within Amazon Managed Grafana to track the status of the cluster and clients. The setup includes two dashboards for the Geth execution client, one for the Lighthouse consensus client, and several others dedicated to the EKS cluster.

Client Dashboards

The client dashboards included in the GitHub repository provide extensive insights. Here are some examples of the data that can be gleaned from these dashboards:

Execution Client Dashboard: The ‘Chain head’ feature on this dashboard indicates the latest block that has been processed by the client.

Lighthouse Consensus Client Dashboard: This dashboard offers a quick visual representation of the current number of validators active on the network.

Geth Dashboard: This dashboard provides an at-a-glance view of various metrics related to the client, offering a comprehensive snapshot.

EKS Node Dashboard: This dashboard displays all the pods running on a node, including detailed information on the CPU and memory usage of each pod, both individually and in aggregate.

EKS Cluster Dashboard: This dashboard focuses on the system resource consumption across the nodes of the EKS cluster, providing a holistic view of the cluster’s performance.
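The chain head value shown on the execution client dashboard can also drive a simple stall check. The sketch below reads a gauge from Prometheus text-format output and flags a client whose head has not advanced between two scrapes. The metric name `chain_head_block` is an assumption based on Geth's metric naming, and the sample payload is made up for illustration.

```python
from typing import Optional


def read_gauge(exposition: str, metric: str) -> Optional[float]:
    """Return the first sample value for `metric` in Prometheus text format."""
    for raw in exposition.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        if "{" in line:
            # Sample with labels: name{label="value"} 123
            name = line[: line.index("{")]
            value_part = line[line.rindex("}") + 1 :]
        else:
            name, _, value_part = line.partition(" ")
        if name == metric:
            return float(value_part.split()[0])
    return None


def head_is_stalled(previous: float, current: float) -> bool:
    """True if the chain head did not advance between two scrapes."""
    return current <= previous


# Made-up sample payload for illustration.
SAMPLE = """\
# TYPE chain_head_block gauge
chain_head_block 19000000
# TYPE chain_head_header gauge
chain_head_header 19000005
"""
```

In practice the same condition would more likely be expressed as a Prometheus alerting rule, but the logic is the same: compare the head across a time window and alert when it stops moving.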

A Note on Validator Client Signing Keys

When a validator proposes a new block or attests to a proposed block, it must sign specific data values to signify this action. By default, validator clients use a locally available private key to sign these messages. Signing can instead be delegated to a remote signer through Web3Signer's HTTPS interface. For instance, the blog post AWS Nitro Enclaves for running Ethereum validators demonstrates how to implement this using AWS Nitro Enclaves. There are also third-party products designed to switch seamlessly to alternative consensus clients if some are temporarily unavailable or become unusable due to software bugs.

Conclusion

In this post, we covered how Ethereum's shift from Proof of Work to Proof of Stake changed node operations from maintaining a single, all-encompassing client such as Geth or Erigon to managing three distinct clients. This transition naturally increases the potential for component failures or unexpected operational issues. However, with properly configured alerting and system setup, the impact of such issues can be significantly mitigated.

For those interested in operating an Ethereum staking client, deploying the GitHub repository is a great place to start. If you are seeking a more in-depth understanding of running a validator client, the Ethereum.org FAQs offer valuable insights and information.

About the authors

Emile Baizel is a Senior Blockchain Architect at AWS. He has been working with blockchain technology since 2018 when he participated in his first Ethereum hackathon. He didn’t win but he got hooked. He specializes in blockchain node infrastructure, digital custody and wallets, and smart contract security.

Karan Thanvi is a Startup Solutions Architect working with Fintech Startups helping them design and run their workloads on AWS. He specializes in container-based solutions, databases and machine learning. He is passionate about developing solutions and playing chess!
