This post is co-authored with Ashesh Nishant, VP Technology at Ola, Saeed Meethal, principal database engineer at Ola, and Karan Tripathi, principal engineer at Ola.

Ola Money is a financial service provided by Ola Financial Services (OFS), which is part of the Ola group of companies. Its products include Ola Money Credit Card, Ola Money Wallet, and Ola Money Postpaid, the world’s first digital credit payment from a ride-hailing platform. Ola Money is integrated with a growing network of more than 300 partners across India, including Ola Cabs and Ola Electric, among others.

Ola Money workloads previously hosted on another cloud service provider were migrated to AWS using a lift-and-shift approach. Since then, AWS has been a partner in helping the company achieve its business objectives and expansion goals by providing reliable and scalable infrastructure.

In this post, we share the modernization journey of Ola Money’s MySQL workloads using Amazon Aurora, a relational database management system built for the cloud with MySQL and PostgreSQL compatibility that gives the performance and availability of commercial-grade databases at one-tenth the cost. We cover the architectural and operational challenges that we overcame by migrating to Amazon Aurora MySQL-Compatible Edition, how we migrated the data to support our availability and scalability requirements, and the outcomes of the migration.

Previous architecture at Ola Money

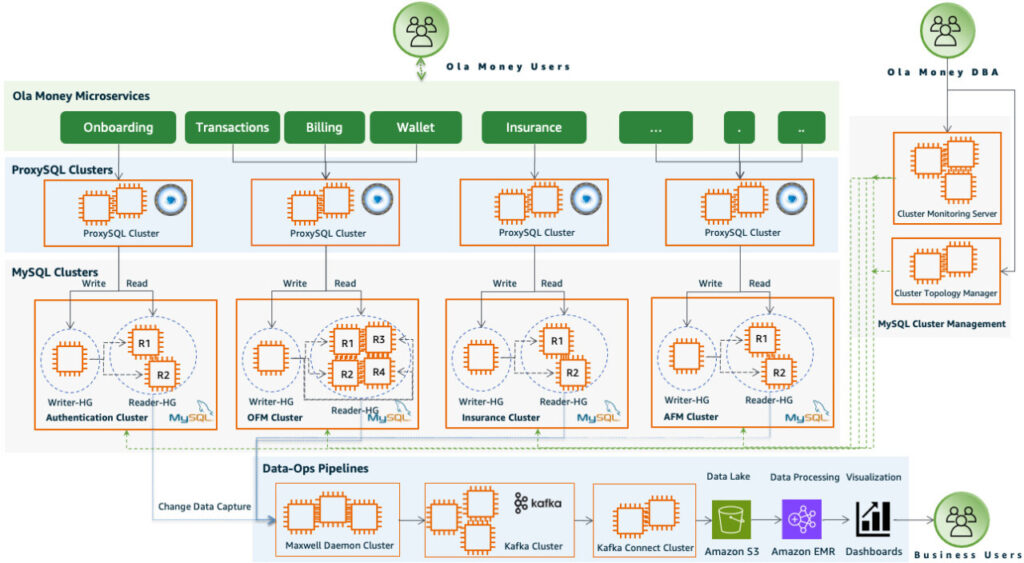

In this section, we look at the architectural patterns previously adopted at Ola Money to support our business requirements. Our database administrators (DBAs) were responsible for managing four self-managed MySQL clusters on Amazon Elastic Compute Cloud (Amazon EC2) instances and Amazon Elastic Block Store (Amazon EBS) volumes. We used licensed third-party cluster management and monitoring tools for managing the clusters’ topology and scaling database reader instances.

Ola Money had a stringent requirement to serve subsecond-latency, write-heavy requests from microservices as end users initiate transactions, alongside read-heavy requests from business intelligence (BI) dashboards. To address this, we adopted the Command and Query Responsibility Segregation (CQRS) architectural pattern, which cleanly separates write operations from read operations, and implemented that separation with ProxySQL, an open source layer 7 database proxy.
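To make the read/write split concrete, here is a minimal Python sketch of the routing decision that a ProxySQL query-rule setup typically makes. The hostgroup numbers (10 for the writer, 20 for readers) and the two regex rules are illustrative assumptions, not Ola Money's actual ProxySQL configuration; in ProxySQL itself these would be rows in the `mysql_query_rules` table rather than Python code.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical hostgroup IDs; ProxySQL lets you choose any integers.
WRITER_HOSTGROUP = 10
READER_HOSTGROUP = 20


@dataclass(frozen=True)
class QueryRule:
    rule_id: int
    match_pattern: str          # regex tested against the normalized query text
    destination_hostgroup: int


# Evaluated in rule_id order and the first match wins, similar to
# ProxySQL rules with apply=1. SELECT ... FOR UPDATE takes row locks,
# so it must go to the writer even though it starts with SELECT.
RULES: List[QueryRule] = [
    QueryRule(1, r"^SELECT.*\bFOR UPDATE\b", WRITER_HOSTGROUP),
    QueryRule(2, r"^SELECT\b", READER_HOSTGROUP),
]


def route(query: str, default_hostgroup: int = WRITER_HOSTGROUP) -> int:
    """Return the hostgroup that should receive this query."""
    # Collapse whitespace and uppercase, mimicking case-insensitive matching.
    normalized = " ".join(query.split()).upper()
    for rule in sorted(RULES, key=lambda r: r.rule_id):
        if re.search(rule.match_pattern, normalized):
            return rule.destination_hostgroup
    # INSERT, UPDATE, DELETE, DDL, and anything unrecognized go to the writer.
    return default_hostgroup
```

Rule order matters here: the locking `SELECT ... FOR UPDATE` rule is checked before the generic `SELECT` rule. Unrecognized statements fall back to the writer, which trades some read offloading for correctness.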

Ola data platform engineers manage the data engineering platform components, including data pipelines that use transaction log-based change data capture (CDC) to record changes made to MySQL tables. These pipelines power the business intelligence dashboards that give Ola business stakeholders updates in near real time. The key components of the data pipelines are as follows:

Maxwell, an open source CDC tool, reads the MySQL transaction logs for all tables and streams the change events to self-managed Apache Kafka clusters

Kafka Connect plugins are used to deliver the data to the central data lake on Amazon Simple Storage Service (Amazon S3), an object storage service built to store and retrieve data from anywhere

The data gets further processed by hundreds of jobs deployed in Amazon EMR, a petabyte-scale platform for data processing, interactive analytics, and machine learning (ML) using open source frameworks, to update the data stores that power Ola business intelligence dashboards
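At its core, a log-based CDC pipeline replays change events in commit order to rebuild table state downstream. The following Python sketch replays Maxwell-style JSON events (with `database`, `table`, `type`, and `data` fields) into an in-memory snapshot. It assumes each table has a single-column primary key named `id` and skips every event type other than insert, update, and delete; the real pipeline lands events in Amazon S3 and processes them with Amazon EMR jobs instead.

```python
import json
from collections import defaultdict
from typing import Any, Dict, Iterable, Tuple

# (database, table) -> {primary key value -> latest row image}
Snapshot = Dict[Tuple[str, str], Dict[Any, Dict[str, Any]]]


def apply_events(lines: Iterable[str], pk_column: str = "id") -> Snapshot:
    """Replay Maxwell-style JSON change events, in order, into a snapshot."""
    snapshot: Snapshot = defaultdict(dict)
    for line in lines:
        event = json.loads(line)
        kind = event.get("type")
        if kind not in ("insert", "update", "delete"):
            continue  # this sketch ignores DDL, bootstrap, and other event types
        rows = snapshot[(event["database"], event["table"])]
        row = event["data"]
        if kind == "delete":
            rows.pop(row[pk_column], None)
        else:
            # For inserts and updates, "data" holds the full row after the change.
            rows[row[pk_column]] = row
    return snapshot
```

Because each event carries the full row image, replay is idempotent per key: applying the same update twice leaves the same row, which simplifies recovery when a consumer restarts.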

The following diagram is the architectural representation of various components of the legacy solution.

Key challenges

Our DBA team faced multiple challenges in supporting our business and compliance requirements. In this section, we share the most critical challenges in detail.

Operational overhead

With the self-managed MySQL database architecture, over 40% of our DBA team’s productive time was regularly being consumed by undifferentiated heavy lifting tasks.

Firstly, we were in charge of repetitive administrative tasks, such as patching and updating MySQL database libraries and plugins, taking regular backups, managing cluster topology and replication, setting up high availability, and more.

Secondly, we were responsible for security and compliance-focused activities:

We implemented additional security measures, including a table-level encryption technique, and managed the encryption keys ourselves to meet compliance requirements. As a result, whenever encryption had to be enabled, or whenever keys had to be rotated (every 6 months), we had to recreate the complete database with the new keys on every node in every cluster.

We used HashiCorp Vault, open source software for secrets management, running on self-managed EC2 instances, to create, distribute, and manage the encryption keys.

Finally, we had to regularly optimize the MySQL clusters, which included releasing space from the EBS volumes connected to the cluster instances. In order to achieve the cost benefits resulting from this optimization effort, a multi-step manual procedure of shrinking the underlying EBS volumes had to be performed for each of the four clusters and all of the reader nodes within each cluster.

Increased cost

We also had additional charges on top of the infrastructure charges of the self-managed MySQL clusters:

To manage the peak traffic and mitigate potential performance problems, we overprovisioned compute and storage resources. For example, maintaining separate reader instances 24×7 for performing backup jobs incurred significant additional charges.

Infrastructure for cluster management utilities, HashiCorp Vault, and other supporting tools incurred additional costs.

MySQL clusters were set up across multiple AWS Availability Zones and AWS Regions, which incurred charges for both inter-zone and inter-Region data transfer.

The use of third-party cluster management software cost us thousands of dollars a year for support and licensing.

Migration to Aurora MySQL

In this section, we discuss the migration journey of our self-managed Ola Money MySQL clusters to Aurora MySQL.

The AWS team recommended that we evaluate Aurora MySQL for our self-managed MySQL workloads. To help us make an informed decision, they calculated the total cost of ownership (TCO) of Aurora MySQL based on the configuration and utilization metrics of our self-managed MySQL clusters. The results showed that the TCO of Aurora MySQL was more than 50% lower than what Ola Money was paying for the self-managed MySQL clusters on Amazon EC2.

We received immediate approval to start a proof of concept on Aurora MySQL. We selected one self-managed MySQL cluster for the proof of concept, used the mysqldump utility to create a database dump file, bootstrapped an Aurora MySQL instance in the Asia Pacific (Mumbai) Region, and imported the data from the dump file; see Logical migration from MySQL to Amazon Aurora MySQL by using mysqldump for more details. As we gained confidence in the service, we moved forward with the proof of concept.
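The dump step of a logical migration like this one can be scripted. This Python sketch only assembles the `mysqldump` argument list and runs it; the host, user, database name, and output file are hypothetical placeholders, and the flag selection is one reasonable choice rather than the exact command Ola Money ran.

```python
import shlex
import subprocess
from typing import List


def build_dump_command(host: str, user: str, databases: List[str], outfile: str) -> List[str]:
    """Assemble a mysqldump invocation for a consistent logical backup."""
    return [
        "mysqldump",
        f"--host={host}",
        f"--user={user}",
        "--password",            # prompts for the password instead of embedding it
        "--single-transaction",  # consistent InnoDB snapshot without locking tables
        "--routines",            # include stored procedures and functions
        "--triggers",
        "--events",              # include Event Scheduler events
        f"--result-file={outfile}",
        "--databases",
        *databases,
    ]


def run_dump(cmd: List[str]) -> None:
    """Print the command for auditing, then run it, raising on failure."""
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

For example, `build_dump_command("mysql-writer.internal", "admin", ["olamoney"], "olamoney.sql")` produces a dump file that can then be imported into the Aurora MySQL instance with the `mysql` client.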

Disaster recovery and architectural considerations

We chose a pilot light disaster recovery (DR) strategy for Ola Money workloads. In this section, we share the architectural options we explored while migrating to Aurora MySQL and the current architecture with Aurora MySQL, including the DR site setup.

We evaluated the following DR strategies:

Amazon Aurora Global Database – Aurora global databases are designed for globally distributed applications, allowing a single Aurora database to span multiple AWS Regions. Data is replicated physically to secondary clusters over dedicated AWS infrastructure, which leaves the databases fully available to serve application traffic.

Aurora cross-Region replication – This option also improves DR capabilities and helps you scale read operations across Regions. However, it relies on logical replication through MySQL binary logs, so replication lag depends on the rate at which changes are applied, and replicating to multiple destinations can increase load on the source database cluster.

Although there are many advantages with Aurora global databases, including lower Recovery Time Objective (RTO) and Recovery Point Objective (RPO) during recovery from Region-wide outages, we chose cross-Region replication for disaster recovery primarily due to our data pipeline requirements that are based on MySQL binary logs.

With the AWS team’s guidance, we architected the applications targeted for the proof of concept with an Aurora MySQL cluster, implementing high availability using reader replicas (Reader#1 and Reader#2) spanning across Availability Zones in the Asia Pacific (Mumbai) Region, and a DR site with a read replica (Reader#3) in the Asia Pacific (Hyderabad) Region using cross-Region replication. The following diagram illustrates this architecture.

This architecture consists of the following key services:

Amazon Route 53 is a highly available and scalable Domain Name System (DNS) web service. It routes application traffic to the associated resources using an active-passive failover configuration, so requests are routed primarily to the resources in the Asia Pacific (Mumbai) Region while the resources in the Asia Pacific (Hyderabad) Region remain on standby.

The first line of defense is provided by AWS WAF, which guards the web applications against common threats, and AWS Shield, a managed DDoS protection solution that keeps apps secure.

Elastic Load Balancing automatically distributes incoming traffic across EC2 instances, containers, and IP addresses, in one or more Availability Zones. It is configured in both Regions and associated with Route 53 records.

The applications are deployed in both Regions on EC2 instances in Auto Scaling groups. In the Asia Pacific (Mumbai) Region, the groups are sized to serve application traffic; in the Asia Pacific (Hyderabad) Region, they are configured with a desired capacity of 0, in keeping with the pilot light strategy. We use the same configuration for the ProxySQL instances.

The always-on critical database layer includes an Aurora MySQL writer instance with two read replicas (Reader#1 and Reader#2) in the Asia Pacific (Mumbai) Region and a single read replica (Reader#3) in the Asia Pacific (Hyderabad) Region. Reader#3 is kept nearly in sync with the primary Aurora MySQL writer instance through asynchronous cross-Region replication.

Aurora provides endpoints that let applications connect to these database instances without tracking individual hosts; see Amazon Aurora connection management for details. These reader and writer endpoints are configured in ProxySQL.
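Configuring ProxySQL for Aurora amounts to registering the cluster's writer endpoint and reader endpoint as backends in the appropriate hostgroups. The Python sketch below generates the ProxySQL admin statements for that; the hostgroup IDs 10 and 20 and the endpoint names used with it are assumptions for illustration, not Ola Money's actual values.

```python
from typing import List


def proxysql_backend_statements(
    writer_endpoint: str,
    reader_endpoint: str,
    writer_hostgroup: int = 10,   # hypothetical hostgroup IDs
    reader_hostgroup: int = 20,
    port: int = 3306,
) -> List[str]:
    """Build ProxySQL admin SQL that maps hostgroups to Aurora endpoints.

    Endpoint strings are interpolated directly, so they must come from
    trusted configuration, never from user input.
    """
    insert = "INSERT INTO mysql_servers (hostgroup_id, hostname, port) VALUES ({}, '{}', {});"
    return [
        # Clear old backends from both hostgroups before adding the new ones.
        f"DELETE FROM mysql_servers WHERE hostgroup_id IN ({writer_hostgroup}, {reader_hostgroup});",
        insert.format(writer_hostgroup, writer_endpoint, port),
        insert.format(reader_hostgroup, reader_endpoint, port),
        "LOAD MYSQL SERVERS TO RUNTIME;",  # apply to the running proxy
        "SAVE MYSQL SERVERS TO DISK;",     # persist across ProxySQL restarts
    ]
```

Running these statements against the ProxySQL admin interface (port 6032 by default) swaps backends without restarting the proxy, which is also what makes a hostgroup switch at cutover time quick.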

Experiments on OFS Aurora deployment

We conducted performance testing on the Aurora MySQL database at five times production load, using various payload sizes for insert, delete, and update operations, and validated throughput and response time. The results showed that response times were well within Ola’s SLA requirements.

We conducted chaos experiments with AWS Fault Injection Simulator (FIS), a fully managed service for running fault injection experiments on AWS, to stress test the Aurora MySQL deployment and improve application performance, observability, and resiliency. We ran the aws:rds:failover-db-cluster action on the Aurora MySQL cluster and observed that Aurora MySQL automatically failed over to a new primary instance within 30 seconds. We also conducted DR drills and observed minimal data loss during failover, owing to the asynchronous nature of cross-Region replication; the loss stayed within our RPO SLA.
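A response-time check like this one usually comes down to comparing a high percentile of observed latencies against the SLA. This Python sketch uses the nearest-rank percentile method; the 99th-percentile default and any SLA threshold you pass in are assumptions, since the post does not state Ola's SLA values.

```python
import math
from typing import Sequence


def percentile(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of latency samples, for 0 < pct <= 100."""
    if not samples_ms:
        raise ValueError("at least one sample is required")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_sla(samples_ms: Sequence[float], sla_ms: float, pct: float = 99.0) -> bool:
    """True if the chosen percentile latency is within the SLA threshold."""
    return percentile(samples_ms, pct) <= sla_ms
```

Checking a tail percentile rather than the mean matters for load tests: a handful of slow writes can breach a transaction SLA while barely moving the average.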
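One way to quantify failover time during an FIS experiment is to run a probe that attempts a small write at a fixed interval and records whether it succeeded. This Python sketch computes the longest outage from such a probe log; the probe itself (for example, a write to a hypothetical heartbeat table) is an assumption and is not shown.

```python
from typing import List, Optional, Tuple

# (seconds since the experiment started, did the probe write succeed?)
Probe = Tuple[float, bool]


def longest_outage(probes: List[Probe]) -> float:
    """Longest span from the first failed probe to the next successful one."""
    ordered = sorted(probes)
    worst = 0.0
    outage_start: Optional[float] = None
    for ts, ok in ordered:
        if not ok and outage_start is None:
            outage_start = ts  # an outage begins
        elif ok and outage_start is not None:
            worst = max(worst, ts - outage_start)  # the outage ends
            outage_start = None
    if outage_start is not None:
        # Still failing at the end of the log: count up to the last probe.
        worst = max(worst, ordered[-1][0] - outage_start)
    return worst
```

The result is accurate only to within about one probe interval at each end of the outage, so a short interval (a second or two) is needed to compare meaningfully against a 30-second target.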

The aforementioned experiments assisted us in validating the proposed architecture using Aurora MySQL, and we started devising the data migration strategy.

Data migration and cutover to Aurora

Database migration is a time-consuming and critical task that requires precise planning and implementation—for Ola Money, we had to migrate around 10 TB of database storage. In this section, we discuss our data migration plan and how we achieved a near-zero downtime switchover from self-managed MySQL database on Amazon EC2 to Aurora MySQL.

For data migration from a self-managed MySQL cluster, we performed the following tasks sequentially:

Use Percona XtraBackup, an open source hot backup utility for MySQL servers that doesn’t lock the database during the backup, to create a full backup of databases of a self-managed MySQL cluster in .xbstream format, which supports simultaneous compression and streaming.

Upload the full backup files to an S3 bucket.

Restore an Aurora MySQL database cluster from the Percona XtraBackup backup files stored in Amazon S3. We configured the cluster to use AWS Identity and Access Management (IAM), which helps administrators securely control access to AWS resources, for database authentication.

Recreate the database users and configure permissions in an IAM identity policy; see Identity-based policy examples for Amazon Aurora for policy examples.

Set up binary log-based replication from the self-managed MySQL instance to the Aurora MySQL database instance to enable the Aurora MySQL instance to catch up with the transactions that occurred on the self-managed MySQL instance after the backup was taken.

Add Aurora MySQL readers across Availability Zones and Regions, based on the cluster requirement.
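The binary log replication step above needs the binlog coordinates captured at backup time. XtraBackup records them in a file named `xtrabackup_binlog_info` (binlog file name, position, and optionally a GTID set). This Python sketch parses that file and builds the Amazon RDS stored-procedure calls that start replication into Aurora. The host, replication user, and password are placeholders; the sketch uses `mysql.rds_set_external_master`, and newer Aurora MySQL versions provide `mysql.rds_set_external_source` instead, so check the stored-procedure reference for your engine version.

```python
from typing import List, Tuple


def parse_binlog_info(text: str) -> Tuple[str, int]:
    """Parse XtraBackup's xtrabackup_binlog_info: '<file> <position> [<GTID set>]'."""
    fields = text.split()
    if len(fields) < 2:
        raise ValueError("expected a binlog file name and position")
    return fields[0], int(fields[1])


def start_replication_calls(
    source_host: str,
    source_port: int,
    repl_user: str,
    repl_password: str,   # placeholder: load from a secrets store in practice
    binlog_file: str,
    binlog_pos: int,
    ssl_encryption: int = 0,
) -> List[str]:
    """Build the RDS stored-procedure calls that start binlog replication into Aurora."""
    return [
        "CALL mysql.rds_set_external_master("
        f"'{source_host}', {source_port}, '{repl_user}', '{repl_password}', "
        f"'{binlog_file}', {binlog_pos}, {ssl_encryption});",
        "CALL mysql.rds_start_replication;",
    ]
```

Starting replication from the exact position recorded in the backup is what lets Aurora replay every transaction committed after the backup, with no gap and no duplicates.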

For cutover to Aurora MySQL with near-zero downtime, we performed the following tasks:

Drain the connections from the self-managed MySQL writer instance to allow in-flight requests to complete gracefully.

Make sure all the writes are replicated with zero replication lag between the self-managed MySQL and Aurora MySQL database.

Stop the self-managed MySQL writer instance and promote the Aurora MySQL instance as the writer.

Update the host group configurations in ProxySQL to point to the Aurora MySQL writer and reader endpoints, switching application traffic and data pipeline requests to the Aurora MySQL database cluster.

We closely monitored production traffic and the Aurora MySQL cluster’s performance in Performance Insights for a couple of weeks before deleting the self-managed MySQL clusters.
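The zero-replication-lag check in the cutover is safest when it compares exact binlog coordinates rather than relying on `Seconds_Behind_Master` alone, which can report 0 while the replica has not yet fetched the latest events from the source. This Python sketch assumes you have already fetched `SHOW MASTER STATUS` from the drained self-managed writer and `SHOW SLAVE STATUS` from Aurora; the dataclasses mirror a few of those columns, and the promotion calls use the Amazon RDS stored procedures for stopping and detaching external replication.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SourceStatus:
    """Columns from SHOW MASTER STATUS on the drained self-managed writer."""
    file: str
    position: int


@dataclass(frozen=True)
class ReplicaStatus:
    """Columns from SHOW SLAVE STATUS on the Aurora MySQL writer."""
    relay_master_log_file: str
    exec_master_log_pos: int
    seconds_behind_master: Optional[int]  # None when replication is not running


def caught_up(source: SourceStatus, replica: ReplicaStatus) -> bool:
    """True once Aurora has executed every event the source has written."""
    return (
        replica.seconds_behind_master == 0
        and replica.relay_master_log_file == source.file
        and replica.exec_master_log_pos == source.position
    )


def promotion_calls() -> List[str]:
    """RDS procedures that stop replication and detach Aurora from the old source."""
    return [
        "CALL mysql.rds_stop_replication;",
        "CALL mysql.rds_reset_external_master;",
    ]
```

The coordinate comparison is meaningful only because connections were drained first: once the source stops accepting writes, its binlog position is fixed, so an exact match proves Aurora has applied everything.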

Outcomes

We discuss the key advantages experienced from this modernization endeavor in this section.

Operational excellence

We can now spend more time and effort on innovative product features and less on undifferentiated heavy lifting in infrastructure and database administration.

For example, we no longer need to upgrade software libraries and apply patches manually, because Aurora provides automated patching and version upgrades. We also no longer need to manage EBS volumes or run storage-reclaiming operations, because Aurora MySQL cluster volumes automatically grow and shrink with the data on a high-performance, highly distributed storage subsystem. When Aurora MySQL data is removed, for example by optimizing table indexes or by dropping or truncating a table, the space allocated for that data is freed. This not only frees us from mundane storage management activities, but also lowers our charges, because storage usage shrinks automatically.

Aurora helps us encrypt databases, automated backups, snapshots, and readers using the keys we create and control through AWS Key Management Service (AWS KMS). This way, we no longer need to manage encryption keys and the vault infrastructure separately. With Aurora’s disk-level encryption capability, we avoid the time-consuming process of table-level encryption. This not only makes encryption in the primary Region simple, but also makes the encryption with cross-Region replication simpler; see Multi-Region keys in AWS KMS for more details.

Cost optimization

We realized cost optimizations in various ways, as described in this section.

Firstly, we saved on compute. The compute instances that were used in the self-managed MySQL setup had to be overprovisioned to support peak scaling requirements. With the current architecture on Aurora MySQL, there is no need to overprovision because it only takes a few clicks to provision a read replica to support our scaling requirements.

Secondly, we saved on storage. With the self-managed MySQL setup, we used to overprovision the EBS volumes for future data growth and as a safety margin for additional processes such as always-on backup jobs. With the current architecture on Aurora MySQL, we don’t need to provision excess storage for future growth, because the storage volume expands in increments of 10 GB up to a maximum of 128 TiB, and we pay only for the storage we consume.

With Aurora, we aren’t charged for backup storage of up to 100% of the size of the database cluster. Moreover, Aurora automatically makes data durable across three Availability Zones in a Region, but we pay for only one copy of the data, which improves the fault tolerance of our clusters’ storage at reduced cost.
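The backup-storage allowance above reduces to a simple formula: only backup storage beyond 100% of the cluster volume size is billable. This Python sketch encodes that rule alone; it ignores prices and any other billing rules, and the sizes in the usage example are illustrative, not Ola Money's figures.

```python
def billable_backup_gb(total_backup_gb: float, cluster_volume_gb: float) -> float:
    """Backup storage up to 100% of the cluster volume is not charged; only the excess is."""
    if total_backup_gb < 0 or cluster_volume_gb < 0:
        raise ValueError("sizes must be non-negative")
    return max(0.0, float(total_backup_gb - cluster_volume_gb))
```

For example, `billable_backup_gb(12_000, 10_000)` returns `2000.0`, while `billable_backup_gb(8_000, 10_000)` returns `0.0` because the backups fit within the free allowance.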

Thirdly, we saved on data transfer costs. With our self-managed MySQL setup, we paid inter-zone data transfer charges for data transferred between a writer on an EC2 instance and a reader instance in a different Availability Zone in the same Region. With Aurora MySQL, data transferred between Availability Zones for cluster replication is free of charge.

Lastly, we saved on cluster management tooling and support. Our previous third-party cluster management and monitoring software was no longer necessary because Aurora is a fully managed AWS service that offers the features needed for managing MySQL database clusters. As a result, we saved money on licensing and support fees for the third-party cluster management and monitoring software after adopting Aurora.

Improved performance

With the previous self-managed MySQL database architecture, new MySQL readers in the primary Region took hours to become available, because each new reader relied on MySQL native binary log replication to build its own copy of the data. Therefore, we had to scale in advance of application demand. With the current Aurora architecture, we can create a reader in a few minutes, which is comparable to spinning up a compute instance, because no full data restore is required. We also reduced replica lag to single-digit milliseconds, because Aurora MySQL reader instances share the same underlying storage as the primary instance.

Additionally, enabling encryption on Aurora clusters doesn’t take table-level locks, because Aurora encrypts the cluster database storage at the disk level. Database tables therefore remain available to serve application requests, unlike with the keyring-based encryption we used previously. Annual key rotation is also transparent and compatible with AWS services.

Conclusion

In this post, we discussed Ola Money’s prior architecture with self-managed MySQL clusters, and the multifaceted difficulties we faced. We also discussed how we began evaluating Aurora MySQL, how we migrated to Aurora MySQL with a DR site in the Asia Pacific (Hyderabad) Region, and the outcomes achieved with cost and performance benefits as well as operational excellence.

The new architecture provided Ola Money lower TCO and improved performance, and resulted in up to 60% savings on the database workloads. As next steps, we plan to explore other Aurora features such as Amazon Aurora Machine Learning, which enables us to add ML-based predictions to our applications using SQL for use cases like fraud detection and customer churn prediction.

Share your feedback in the comments section. If you would like to explore common Aurora use cases, check out Amazon Aurora tutorials and sample code to get started.

About the Authors

Ashesh Nishant is the VP Technology at Ola, bringing experience from his previous roles at Warner Bros. Discovery, Mobile Premier League (MPL), Reliance Jio, and Hotstar. He holds a master’s degree from the Institute of Management Technology, Ghaziabad. With a robust skill set that includes open source, Java Enterprise Edition, application architecture, solution architecture, and more, he contributes valuable insights to the industry.

Kayalvizhi Kandasamy works with digital-centered companies to support their innovation. As a Senior Solutions Architect (APAC) at Amazon Web Services, she uses her experience to help people bring their ideas to life, focusing primarily on microservice and serverless architectures and cloud-centered solutions using AWS services. Outside of work, she is a FIDE-rated chess player and coaches her daughters in the art of chess.

Saeed Meethal is a principal database engineer at Ola, where he designs and implements web-scale SQL and NoSQL databases for online, nearline, and offline systems. He has over 11 years of experience in database management, performance optimization, and cost-effective solutions across various domains and industries.

Karan Tripathi is a principal engineer at Ola, and he specializes in leading critical technological transitions and innovative projects across various domains, including financial services, developer productivity, commerce, and mobility.
