Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API. To equip FMs with up-to-date and proprietary information, organizations use Retrieval Augmented Generation (RAG), a technique that fetches data from company data sources and enriches the prompt to provide more relevant and accurate responses. Knowledge Bases for Amazon Bedrock is a fully managed capability that helps you implement the entire RAG workflow, from ingestion to retrieval and prompt augmentation. However, information about one dataset is often held in a second dataset, known as metadata. If retrieval ignores that metadata, it can return unrelated results, which lowers FM accuracy and raises cost because more prompt tokens are spent on irrelevant context.

On March 27, 2024, Amazon Bedrock announced a key new feature called metadata filtering and also changed the default engine. This change allows you to use metadata fields during the retrieval process. However, the metadata fields need to be configured during the knowledge base ingestion process. Often, you might have tabular data where details about one field are available in another field. Also, you could have a requirement to cite the exact text document or text field to prevent hallucination. In this post, we show you how to use the new metadata filtering feature with Knowledge Bases for Amazon Bedrock for such tabular data.

Solution overview

The solution consists of the following high-level steps:

Prepare data for metadata filtering.

Create and ingest data and metadata into the knowledge base.

Retrieve data from the knowledge base using metadata filtering.

Prepare data for metadata filtering

As of this writing, Knowledge Bases for Amazon Bedrock supports Amazon OpenSearch Serverless, Amazon Aurora, Pinecone, Redis Enterprise, and MongoDB Atlas as underlying vector store providers. In this post, we create and access an OpenSearch Serverless vector store using the Amazon Bedrock Boto3 SDK. For more details, see Set up a vector index for your knowledge base in a supported vector store.

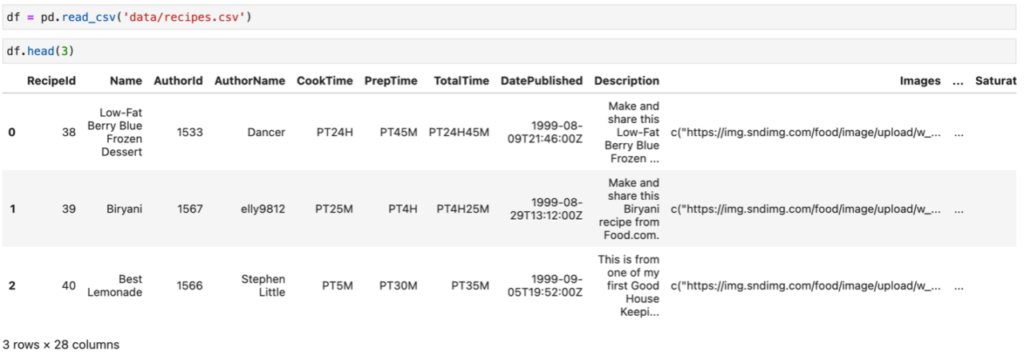

For this post, we create a knowledge base using the public dataset Food.com – Recipes and Reviews. The following screenshot shows an example of the dataset.

The TotalTime is in ISO 8601 format. You can convert that to minutes using the following logic:
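The conversion can be sketched as follows. This is a minimal illustration, not the original post's code: the function name `iso8601_to_minutes` is hypothetical, and it assumes the durations only use days, hours, minutes, and seconds (for example `PT30M` or `PT1H15M`), with leftover seconds truncated.

```python
import re

# Matches ISO 8601 durations limited to days, hours, minutes, and seconds,
# for example "PT30M", "PT1H15M", or "P1DT2H".
_DURATION_RE = re.compile(
    r"^P(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+)S)?)?$"
)


def iso8601_to_minutes(duration: str) -> int:
    """Convert an ISO 8601 duration such as 'PT1H30M' to whole minutes."""
    text = duration.strip()
    match = _DURATION_RE.match(text)
    # "P" and "PT" match the pattern but carry no components, so reject them.
    if match is None or text in ("P", "PT"):
        raise ValueError(f"Unsupported ISO 8601 duration: {duration!r}")
    parts = {name: int(value or 0) for name, value in match.groupdict().items()}
    total_seconds = (
        parts["days"] * 86400
        + parts["hours"] * 3600
        + parts["minutes"] * 60
        + parts["seconds"]
    )
    # Partial minutes are truncated.
    return total_seconds // 60
```

If the data is in a pandas DataFrame named `df` (an assumed name), the new column could be derived with `df["TotalTimeInMinutes"] = df["TotalTime"].apply(iso8601_to_minutes)`.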

After converting some of the features like CholesterolContent, SugarContent, and RecipeInstructions, the data frame looks like the following screenshot.

To enable the FM to point to a specific menu with a link (cite the document), we split the tabular data so that each row is written to its own text file, with each file containing RecipeInstructions as the data field and TotalTimeInMinutes, CholesterolContent, and SugarContent as metadata. The metadata should be kept in a separate JSON file with the same name as the data file and .metadata.json added to its name. For example, if the data file name is 100.txt, the metadata file name should be 100.txt.metadata.json. For more details, see Add metadata to your files to allow for filtering. Also, the content in the metadata file should be in the following format:
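As a sketch of that format, the metadata file holds a single `metadataAttributes` object whose keys are the filterable fields. The helper name `build_metadata` and the sample values are illustrative assumptions, not taken from the dataset.

```python
import json


def build_metadata(total_time_in_minutes, cholesterol_content, sugar_content):
    """Return the sidecar metadata document for one recipe file."""
    return {
        "metadataAttributes": {
            "TotalTimeInMinutes": total_time_in_minutes,
            "CholesterolContent": cholesterol_content,
            "SugarContent": sugar_content,
        }
    }


# Illustrative values only, not taken from the dataset.
example = build_metadata(25, 0.0, 4.2)
print(json.dumps(example, indent=2))
```

Keeping the values numeric rather than strings matters here, because the filters used later in this post compare them with a less-than operator.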

For the sake of simplicity, we only process the top 2,000 rows to create the knowledge base.

After you import the necessary libraries, create a local directory using the following Python code:
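A minimal sketch of this step, assuming a folder named `data` in the current working directory (the folder name is an assumption; any local path works):

```python
from pathlib import Path

# "data" is an assumed folder name; use any local path you prefer.
data_dir = Path("data")

# exist_ok=True makes the call safe to repeat if the folder already exists.
data_dir.mkdir(parents=True, exist_ok=True)
```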

Iterate over the top 2,000 rows to create data and metadata files to store in the local folder:
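One way to sketch this loop is shown below. The function name `write_recipe_files` is hypothetical, and `rows` is assumed to be any iterable of dict-like records whose values are plain Python types that `json` can serialize; with a pandas DataFrame named `df` (an assumed name), `df.to_dict("records")` is one way to produce such records.

```python
import json
from itertools import islice
from pathlib import Path

# Columns that become filterable metadata attributes.
METADATA_FIELDS = ("TotalTimeInMinutes", "CholesterolContent", "SugarContent")


def write_recipe_files(rows, out_dir, limit=2000):
    """Write one text file and one metadata file per row; return the count."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)
    written = 0
    for index, row in enumerate(islice(rows, limit)):
        # The data file contains only the recipe instructions.
        data_file = out_path / f"{index}.txt"
        data_file.write_text(str(row["RecipeInstructions"]), encoding="utf-8")

        # The metadata file shares the data file name plus ".metadata.json".
        metadata = {"metadataAttributes": {key: row[key] for key in METADATA_FIELDS}}
        metadata_file = out_path / f"{index}.txt.metadata.json"
        metadata_file.write_text(json.dumps(metadata), encoding="utf-8")
        written += 1
    return written
```

File names here are derived from the row position; using a stable identifier from the dataset instead would work equally well, as long as each metadata file name matches its data file.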

Create an Amazon Simple Storage Service (Amazon S3) bucket named food-kb and upload the files:
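The upload can be sketched with the Boto3 S3 client as follows. The helper name `upload_directory` is hypothetical, and the client is passed in as a parameter so the function itself has no AWS dependency. Because S3 bucket names are globally unique, `food-kb` may already be taken, in which case you would need a different name.

```python
from pathlib import Path


def upload_directory(s3_client, local_dir, bucket, prefix=""):
    """Upload every file in local_dir to the bucket; return the object keys."""
    keys = []
    for path in sorted(Path(local_dir).iterdir()):
        if path.is_file():
            key = f"{prefix}{path.name}"
            # upload_file(Filename, Bucket, Key) is the Boto3 S3 client method.
            s3_client.upload_file(str(path), bucket, key)
            keys.append(key)
    return keys


# Typical usage (requires boto3 and AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   s3.create_bucket(Bucket="food-kb")
#   # Outside us-east-1, create_bucket also needs
#   # CreateBucketConfiguration={"LocationConstraint": "<your-region>"}.
#   upload_directory(s3, "data", "food-kb")
```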

Create and ingest data and metadata into the knowledge base

When the S3 folder is ready, you can create the knowledge base in Amazon Bedrock using the SDK, following this example notebook.

Retrieve data from the knowledge base using metadata filtering

Now let’s retrieve some data from the knowledge base. For this post, we use Anthropic Claude Sonnet on Amazon Bedrock for our FM, but you can choose from a variety of Amazon Bedrock models. First, you need to set the following variables, where kb_id is the ID of your knowledge base. The knowledge base ID can be found programmatically, as shown in the example notebook, or from the Amazon Bedrock console by navigating to the individual knowledge base, as shown in the following screenshot.

Set the required Amazon Bedrock parameters using the following code:
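A sketch of these settings is shown below. Every value is a placeholder or an assumption: the Region, the knowledge base ID, and the model ID (assumed here to be Claude 3 Sonnet) should be replaced with your own, and `get_runtime_client` is a hypothetical helper name.

```python
# All values below are placeholders or assumptions; replace them with your own.
region = "us-east-1"
kb_id = "<your-knowledge-base-id>"
model_id = "anthropic.claude-3-sonnet-20240229-v1:0"  # assumed Claude 3 Sonnet ID
model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
number_of_results = 2  # how many chunks to retrieve per query


def get_runtime_client():
    """Create the Bedrock client used for Retrieve and RetrieveAndGenerate."""
    import boto3  # imported here so the settings above load without boto3

    return boto3.client("bedrock-agent-runtime", region_name=region)
```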

The following is the output of the retrieval from the knowledge base without metadata filtering for the query “Tell me a recipe that I can make under 30 minutes and has cholesterol less than 10.” As we can see, out of the two recipes, the preparation durations are 30 and 480 minutes, respectively, and the cholesterol contents are 86 and 112.4, respectively. Neither recipe satisfies both constraints in the query, so the retrieval isn’t following the query accurately.

The following code demonstrates how to use the Retrieve API with the metadata filters set to a cholesterol content less than 10 and minutes of preparation less than 30 for the same query:
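A hedged sketch of such a request follows. The helper `build_retrieve_request` is hypothetical and only assembles the keyword arguments; the metadata keys match the attribute names used during ingestion, and the knowledge base ID is a placeholder.

```python
def build_retrieve_request(kb_id, query, max_minutes, max_cholesterol, top_k=2):
    """Build keyword arguments for the Retrieve API with two numeric filters."""
    # andAll requires every listed condition to hold for a chunk to be returned.
    metadata_filter = {
        "andAll": [
            {"lessThan": {"key": "CholesterolContent", "value": max_cholesterol}},
            {"lessThan": {"key": "TotalTimeInMinutes", "value": max_minutes}},
        ]
    }
    return {
        "knowledgeBaseId": kb_id,
        "retrievalQuery": {"text": query},
        "retrievalConfiguration": {
            "vectorSearchConfiguration": {
                "numberOfResults": top_k,
                "filter": metadata_filter,
            }
        },
    }


request = build_retrieve_request(
    kb_id="<your-knowledge-base-id>",
    query=(
        "Tell me a recipe that I can make under 30 minutes "
        "and has cholesterol less than 10."
    ),
    max_minutes=30,
    max_cholesterol=10,
)

# With a boto3 "bedrock-agent-runtime" client named `client` (an assumed name):
#   response = client.retrieve(**request)
#   for result in response["retrievalResults"]:
#       print(result["content"]["text"], result.get("metadata"))
```

Dropping the `filter` key from `vectorSearchConfiguration` gives the unfiltered retrieval used for comparison.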

As we can see in the following results, out of the two recipes, the preparation times are 27 and 20 minutes, respectively, and the cholesterol contents are 0 and 0, respectively. Both recipes now satisfy the constraints in the query, so metadata filtering gives us more accurate results.

The following code shows how to get accurate output using the same metadata filtering with the retrieve_and_generate API. First, we set the prompt, then we set up the API with metadata filtering:
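The call can be sketched as follows. The helper `build_rag_request` is hypothetical, the prompt is assumed to be the same query used earlier, and the knowledge base ID and model ARN (assumed to point at Claude 3 Sonnet) are placeholders to replace with your own values.

```python
def build_rag_request(kb_id, model_arn, prompt, max_minutes, max_cholesterol, top_k=2):
    """Build keyword arguments for RetrieveAndGenerate with metadata filters."""
    metadata_filter = {
        "andAll": [
            {"lessThan": {"key": "CholesterolContent", "value": max_cholesterol}},
            {"lessThan": {"key": "TotalTimeInMinutes", "value": max_minutes}},
        ]
    }
    return {
        "input": {"text": prompt},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,
                "modelArn": model_arn,
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {
                        "numberOfResults": top_k,
                        "filter": metadata_filter,
                    }
                },
            },
        },
    }


rag_request = build_rag_request(
    kb_id="<your-knowledge-base-id>",
    model_arn=(
        "arn:aws:bedrock:us-east-1::foundation-model/"
        "anthropic.claude-3-sonnet-20240229-v1:0"  # assumed model
    ),
    prompt=(
        "Tell me a recipe that I can make under 30 minutes "
        "and has cholesterol less than 10."
    ),
    max_minutes=30,
    max_cholesterol=10,
)

# With a boto3 "bedrock-agent-runtime" client named `client` (an assumed name):
#   response = client.retrieve_and_generate(**rag_request)
#   print(response["output"]["text"])
```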

As we can see in the following output, the model returns a detailed recipe that follows the instructed metadata filtering of less than 30 minutes of preparation time and a cholesterol content less than 10.

Clean up

Make sure to comment out the following section if you’re planning to use the knowledge base that you created for building your RAG application. If you only wanted to try out creating the knowledge base using the SDK, make sure to delete all the resources that were created because you will incur costs for storing documents in the OpenSearch Serverless index. See the following code:
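A partial sketch of the cleanup is shown below. The function name `delete_kb_resources` and all IDs are assumptions, and the three clients are passed in as parameters. It covers only the data source, the knowledge base, the OpenSearch Serverless collection, and the S3 bucket; any IAM roles or OpenSearch Serverless policies created along the way would need to be removed separately.

```python
def delete_kb_resources(bedrock_agent, aoss, s3, kb_id, data_source_id,
                        collection_id, bucket):
    """Delete the knowledge base, its vector collection, and the S3 bucket."""
    # Remove the data source first, then the knowledge base that owns it.
    bedrock_agent.delete_data_source(knowledgeBaseId=kb_id, dataSourceId=data_source_id)
    bedrock_agent.delete_knowledge_base(knowledgeBaseId=kb_id)

    # Deleting the collection stops OpenSearch Serverless storage charges.
    aoss.delete_collection(id=collection_id)

    # A bucket must be empty before it can be deleted.
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        objects = [{"Key": item["Key"]} for item in page.get("Contents", [])]
        if objects:
            s3.delete_objects(Bucket=bucket, Delete={"Objects": objects})
    s3.delete_bucket(Bucket=bucket)


# Typical usage (requires boto3 and AWS credentials; IDs are placeholders):
#   import boto3
#   delete_kb_resources(
#       boto3.client("bedrock-agent"),
#       boto3.client("opensearchserverless"),
#       boto3.client("s3"),
#       kb_id="<your-knowledge-base-id>",
#       data_source_id="<your-data-source-id>",
#       collection_id="<your-collection-id>",
#       bucket="food-kb",
#   )
```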

Conclusion

In this post, we explained how to split a large tabular dataset into rows to set up a knowledge base with metadata for each of those records, and how to then retrieve outputs with metadata filtering. We also showed that retrieval with metadata filtering returns more accurate results than retrieval without it. Lastly, we showed how to pass the filtered results to an FM to get accurate responses.

To further explore the capabilities of Knowledge Bases for Amazon Bedrock, refer to the following resources:

Knowledge bases for Amazon Bedrock

Amazon Bedrock Knowledge Base – Samples for building RAG workflows

About the Author

Tanay Chowdhury is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services. He helps customers solve their business problems using Generative AI and Machine Learning.
