On January 4, 2024, CMU professor Andy Pavlo, known for his database lectures, published his 2023 database review, primarily focusing on the rise of vector databases. These innovative data storage solutions have taken center stage. As the popularity of generative artificial intelligence (AI) models continues to soar, the spotlight has shifted to include databases with vector storage and search capabilities, which provide a cost-effective mechanism for extending the capabilities of a foundation model (FM). With advancements in distributed computing, cloud-centered architectures, and specialized hardware accelerators, databases with vector search are likely to become even more powerful and scalable.

In this post, we explore the key factors to consider when selecting a database for your generative AI applications. We focus on high-level considerations and service characteristics that are relevant to fully managed databases with vector search capabilities currently available on AWS. We examine how these databases differ in terms of their behavior and performance, and provide guidance on how to make an informed decision based on your specific requirements. By understanding these essential aspects, you will be well-equipped to choose the most suitable database for your generative AI workloads, achieving optimal performance, scalability, and ease of implementation.

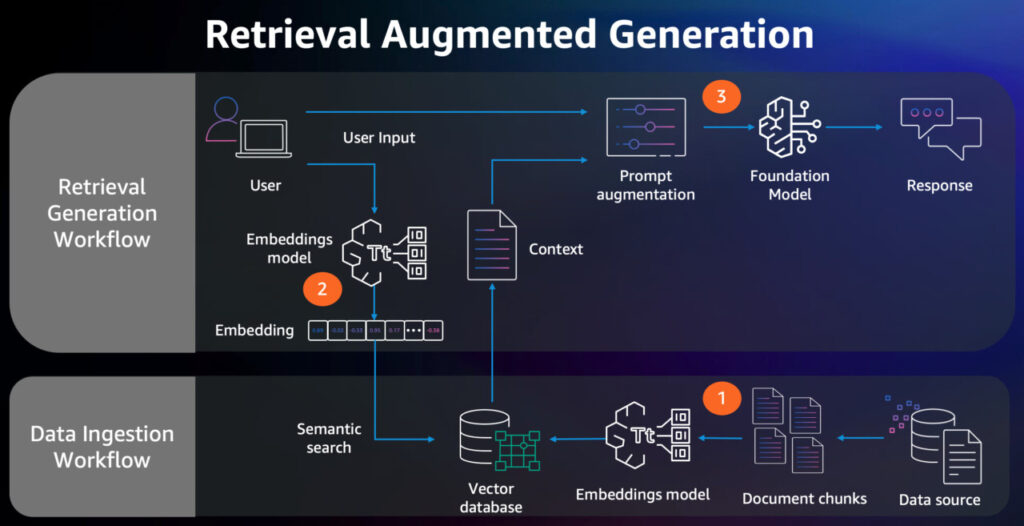

Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is the process of optimizing the output of a large language model (LLM), so it references an authoritative knowledge base outside of its training data sources before generating a response. Databases extend the already powerful capabilities of LLMs to specific domains or an organization’s internal knowledge base, all without the need to retrain the model. It’s a cost-effective approach to improving LLM output so it remains relevant, accurate, and useful in various contexts.

The following diagram illustrates the RAG workflow.

The key steps in the RAG workflow are as follows:

The data source is processed to create document chunks, which are then passed through an embeddings model (such as Amazon Titan Text Embeddings V2) to convert the text into numerical representations called embeddings. These embeddings capture the semantic meaning of the text and are stored in a database optimized for vector search, along with the original document chunks.

The user input is converted into an embedding using the same embeddings model. A semantic search is performed on the database using the user input embedding as the query vector, retrieving the top-k most relevant document chunks based on their proximity in the vector space. The retrieved chunks serve as context for the subsequent generation step.

The user input is used as a prompt, and the retrieved document chunks are used for prompt augmentation. The augmented prompt is fed into an FM (such as Anthropic Claude 3 on Amazon Bedrock), which generates a response based on its pre-trained knowledge and the provided context from the database. The generated response is more informed and contextually appropriate due to the retrieved information.
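The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `embed` function is a toy bag-of-words stand-in for a real embeddings model, the in-memory list stands in for a database with vector search, and all function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embeddings model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(chunks):
    # Step 1: store each document chunk alongside its embedding.
    return [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(index, user_input, k=2):
    # Step 2: embed the user input with the same model and return the top-k chunks.
    query = embed(user_input)
    ranked = sorted(index, key=lambda item: cosine_similarity(query, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def augment_prompt(user_input, context_chunks):
    # Step 3: add the retrieved chunks to the prompt that is sent to the FM.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Use the following context to answer.\n{context}\n\nQuestion: {user_input}"

chunks = [
    "Aurora PostgreSQL supports vector search through the pgvector extension.",
    "MemoryDB is an in-memory database with a Redis OSS-compatible interface.",
    "Neptune Analytics combines graph algorithms with vector search.",
]
question = "Which database uses the pgvector extension?"
index = build_index(chunks)
top_chunks = retrieve(index, question, k=1)
prompt = augment_prompt(question, top_chunks)
print(prompt)
```

In a real application, `embed` would call an embeddings model, `retrieve` would be a query against the database, and the augmented prompt would be passed to the FM.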

When selecting a database for RAG, one of the first considerations is deciding which database is right for your use case. The discussion around databases and generative AI has been vast and multifaceted, and this post seeks to simplify this discussion, focusing primarily on the decision-making process of which database with vector search capabilities is right for you. If you’re seeking guidance on LLMs, refer to Generative AI with LLMs.

Vector search on AWS

As of publishing this post, AWS offers the following databases with the full suite of vector capabilities, including vector storage, retrieval, index, and search. AWS also offers databases that have integration with Knowledge Bases for Amazon Bedrock.

Amazon Aurora PostgreSQL-Compatible Edition and Amazon Relational Database Service (Amazon RDS) for PostgreSQL are fully managed relational databases with the pgvector open source vector search extension. Aurora PostgreSQL also supports vector search with its Amazon Aurora Serverless v2 deployment option.

Amazon OpenSearch Service is a fully managed service for running OpenSearch, an open source search engine and analytics suite. OpenSearch Service supports vector search through its vector engine, both on managed clusters and on serverless collections with the Amazon OpenSearch Serverless deployment option.

Amazon Neptune Analytics is an analytics graph database engine with graph algorithms and vector search for combining graphs with RAG, such as GraphRAG.

Vector search for Amazon DocumentDB (with MongoDB compatibility) is available with Amazon DocumentDB 5.0 instance-based clusters.

Amazon MemoryDB is an in-memory database whose vector search provides the fastest vector search performance at the highest recall rates among popular vector databases on AWS. MemoryDB offers single-digit millisecond vector search and update latencies at even the highest levels of recall.

Amazon DynamoDB zero-ETL integration with OpenSearch Service provides advanced search capabilities, such as full-text search and vector search, on your Amazon DynamoDB data.

In the following sections, we explore key considerations that can help you choose the right database for your generative AI applications:

Familiarity

Ease of implementation

Scalability

Performance

Familiarity

Opting for familiar technology, when possible, will ultimately save developer hours and reduce complexity. When developer teams are already familiar with a particular database engine, using the same database engine for vector search leverages existing knowledge for a streamlined experience. Instead of learning a new skillset, developers can utilize their current skills, tools, frameworks, and processes to include a new feature of an existing database engine.

For example, a team of database engineers may already manage a set of 100 relational databases hosted on Aurora PostgreSQL. If they need to support a new vector search requirement for their applications, they should start by evaluating the pgvector extension on their existing Aurora PostgreSQL databases.

Similarly, if the team is working with graph data, they can consider using Neptune Analytics, which seamlessly integrates with their existing AWS infrastructure and provides powerful graph querying and visualization features.

In cases where the team deals with JSON documents and needs a scalable, fully managed document database, Amazon DocumentDB offers a compatible and familiar MongoDB experience, allowing them to use their existing skills and knowledge.

If the team is experienced with Redis OSS and needs a highly scalable, in-memory database for real-time applications, consider using MemoryDB. MemoryDB provides a familiar Redis OSS-compatible interface, allowing the team to use their existing Redis OSS knowledge and client libraries while benefiting from the fully managed, durable, and scalable capabilities of MemoryDB.

In these examples, the database engineering team doesn’t need to onboard a new database software, which would include adding more developer tooling and integrations. Instead, they can focus on enabling new capabilities within their domain of expertise. Development best practices, operations, and query languages will remain the same, reducing the number of new variables in the equation of successful outcomes. Another benefit of using your existing database is that it aligns with the application’s requirements for security, availability, scalability, reliability, and performance. Moreover, your database administrators can use familiar technology, skills, and programming tools.
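To make the Aurora PostgreSQL example concrete, the following sketch generates the SQL a team might run to enable pgvector on an existing database. The table name `documents`, its column names, and the helper functions are hypothetical. The `%s` placeholder assumes a Python driver such as psycopg, and the operator class assumes cosine distance is the desired metric.

```python
def pgvector_statements(table="documents", dimensions=1024):
    """Return SQL statements that add vector storage and an HNSW index to a table."""
    return [
        # Enable the pgvector extension in the current database.
        "CREATE EXTENSION IF NOT EXISTS vector;",
        # Store each text chunk next to its embedding.
        f"CREATE TABLE IF NOT EXISTS {table} ("
        f"id bigserial PRIMARY KEY, chunk text, embedding vector({dimensions}));",
        # Build an HNSW index that uses cosine distance.
        f"CREATE INDEX ON {table} USING hnsw (embedding vector_cosine_ops);",
    ]

def top_k_query(table="documents", k=5):
    """Return a parameterized top-k query; <=> is pgvector's cosine distance operator."""
    return f"SELECT chunk FROM {table} ORDER BY embedding <=> %s::vector LIMIT {k};"

for statement in pgvector_statements():
    print(statement)
print(top_k_query())
```

Everything here is ordinary SQL, so the team's existing migration tooling, access controls, and monitoring continue to apply.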

If your current tech stack lacks vector search support, you can take advantage of serverless offerings to help fill the gap in your vector search needs. For example, OpenSearch Serverless with a quick create experience on the Amazon Bedrock console lets you get started without having to create or manage a cluster. Although onboarding a new technology is inevitable in this case, opting for serverless minimizes the management overhead.

Ease of implementation

Beyond familiarity, the expected process to actually implement a given database is the next primary consideration in the evaluation process. A seamless integration process can minimize disruption and accelerate time to value for your database. In this section, we explore how to evaluate databases across some core implementation focus areas such as vectorization, management, access control, compliance, and interface.

Vectorization

The foremost consideration for implementation is the process of populating your database with vector embeddings. If your data isn’t represented as a vector, you’ll need to use an embedding model to convert it into vectors and store it into a database enabled for vectors. For example, when you use OpenSearch Service as a knowledge base in Amazon Bedrock, your generative AI application can take unstructured data stored in Amazon Simple Storage Service (Amazon S3), convert it to text chunks and vectors, and store it in OpenSearch Service automatically.
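If you handle this step yourself rather than relying on a managed ingestion workflow, the first task is splitting documents into chunks before embedding them. The following is a minimal sketch of word-based chunking with overlap. The function name, the default sizes, and the choice to split on whitespace are all assumptions for illustration; real pipelines often chunk by tokens, sentences, or document structure.

```python
def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_text(sample, chunk_size=5, overlap=2):
    print(chunk)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.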

Management

The day-to-day management needs to be a consideration for overall implementation. You need to select a database that won’t overburden your existing team’s database management workload. For example, instead of taking on the management overhead of a self-managed database on Amazon Elastic Compute Cloud (Amazon EC2), you can opt for a serverless database, such as Aurora Serverless v2 or OpenSearch Serverless, that supports vector search.

Access control

Access control is a critical consideration when integrating vector search into your existing infrastructure. In order to adhere to your current security standards, you should thoroughly evaluate the access control mechanisms offered by potential databases. For instance, if you have robust role-based access control (RBAC) for your non-vector Amazon DocumentDB implementation, choosing Amazon DocumentDB for vector search is ideal because it already aligns with your established access control requirements.

Compliance

Compliance certifications are key evaluation criteria for a chosen database. If a database doesn’t meet essential compliance needs for your application, it’s a non-starter. AWS is backed by a deep set of cloud security tools, with over 300 security, compliance, and governance services and features. In addition, AWS supports 143 security standards and compliance certifications, including PCI-DSS, HIPAA/HITECH, FedRAMP, GDPR, FIPS 140-2, and NIST 800-171, helping adhere to compliance requirements for virtually every regulatory agency around the globe, making sure your databases can meet your security and compliance needs.

Interface

How your generative AI application will interact with your database is another implementation consideration. This may have implications for the general usability of your database. You need to evaluate how you will connect to and interact with the database, choosing an option with a simple, intuitive interface that helps meet your needs. For instance, Neptune Analytics simplifies vector search through API calls and stored procedures, making it an attractive choice if you prioritize a streamlined, user-friendly interface. For more details, refer to working with vector similarity in Neptune Analytics.

Integrations

Integration with Knowledge Bases for Amazon Bedrock is important if you’re looking to automate the data ingestion and runtime orchestration workflows. Both Aurora PostgreSQL-Compatible and OpenSearch Service are integrated with Knowledge Bases for Amazon Bedrock, with more to come. Similarly, integration with Amazon SageMaker enables a seamless, scalable, and customizable solution for building applications that rely on vector similarity search, personalized recommendations, or other vector-based operations, while using the power of machine learning (ML) and the AWS environment. In addition to Aurora PostgreSQL and OpenSearch Service, Amazon DocumentDB and Neptune Analytics are integrated with SageMaker.

Additionally, open source frameworks like LangChain and LlamaIndex can be helpful for building LLM applications because they provide a powerful set of tools, abstractions, and utilities that simplify the development process, improve productivity, and enable developers to focus on creating value-added features. LangChain seamlessly integrates with various AWS databases, storage systems, and external APIs, in addition to being integrated with Amazon Bedrock. This integration allows developers to easily use AWS databases and Bedrock’s models within the LangChain framework. LlamaIndex supports Neptune Analytics as a vector store and graph databases for building GraphRAG applications. Similarly, Hugging Face is a popular platform that provides a wide range of pre-trained models, including BERT, GPT, and T5. Hugging Face is integrated with AWS services, allowing you to deploy models on AWS infrastructure and use them with databases like OpenSearch Service, Aurora PostgreSQL-Compatible, Neptune Analytics, or MemoryDB.

Scalability

Scalability is a key factor when evaluating databases, enabling production applications to run efficiently without disruption. The scalability of databases for vector search is tied to their ability to support high-dimensional vectors and vast numbers of embeddings. Different databases scale in different ways to support increased utilization. For example, the scaling mechanisms and engineering of Aurora PostgreSQL differ from those of OpenSearch Service or MemoryDB. Understanding the scaling mechanisms of a database is essential to planning for continued growth of your applications.

Consider a music company looking to build a rapidly growing music recommendation engine. OpenSearch Service makes it straightforward to operationalize the scalability of the recommendation engine by providing scale-out distributed infrastructure. Similarly, a global financial services company can use Amazon Aurora Global Database to build a scalable and resilient vector search solution for personalized investment recommendations. By deploying a primary database in one AWS Region and replicating it to multiple secondary Regions, the company can provide high availability, disaster recovery, and global application access with minimal latency, while delivering accurate and personalized recommendations to clients worldwide.

AWS databases offer an extensive set of database engines with diverse scaling mechanisms to help meet the scaling demands of nearly any generative AI requirement.
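A quick back-of-the-envelope calculation shows why vector count and dimensionality drive scaling decisions. The sketch below assumes each vector component is stored as a 4-byte float and ignores index structures, metadata, and replication, all of which add to the real footprint. The function name is hypothetical.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for the embeddings alone, excluding index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value

# Compare the raw footprint of one billion vectors at several dimension sizes.
for dims in (256, 512, 1024):
    gib = raw_vector_bytes(1_000_000_000, dims) / 2**30
    print(f"{dims:>5} dimensions: {gib:,.0f} GiB")
```

Under these assumptions, one billion 1,024-dimensional vectors need about 4.1 TB (4.096 × 10^12 bytes) before any index is built, which is why the choice of embedding dimension is also a capacity decision.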

Performance

Another critical consideration is database performance. As organizations strive to extract valuable insights from vast amounts of high-dimensional vector data, the ability to run complex vector searches and operations at scale becomes paramount. When evaluating the performance of databases with vector capabilities, it’s important to evaluate the following characteristics:

Throughput – Number of queries processed per second

Recall – Fraction of the true nearest neighbors that a search actually returns, which reflects the relevance and completeness of the retrieved vectors

Index build time – Duration required to build the vector index

Scale/cost – Ability to efficiently scale to billions of vectors while remaining cost-effective

p99 latency – Latency within which 99% of requests complete, used to check that response time expectations are met

Storage utilized – How efficiently vectors and their indexes are stored, which is particularly important for high-dimensional vectors
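Several of these characteristics can be computed directly from a benchmark run. The following sketch shows one common way to calculate recall, p99 latency, and throughput. The function names are hypothetical, and the p99 calculation uses the nearest-rank method, which is only one of several percentile definitions.

```python
import math

def recall_at_k(retrieved_ids, true_neighbor_ids):
    """Fraction of the true nearest neighbors that appear in the retrieved results."""
    truth = set(true_neighbor_ids)
    return len(truth & set(retrieved_ids)) / len(truth)

def p99_latency(latencies_ms):
    """Nearest-rank 99th percentile: 99% of requests completed within this latency."""
    if not latencies_ms:
        raise ValueError("at least one latency sample is required")
    ordered = sorted(latencies_ms)
    rank = math.ceil(99 * len(ordered) / 100)
    return ordered[rank - 1]

def throughput_qps(num_queries, elapsed_seconds):
    """Queries processed per second over the measurement window."""
    return num_queries / elapsed_seconds

print(recall_at_k([1, 2, 3, 9], [1, 2, 3, 4]))
print(p99_latency(list(range(1, 101))))
print(throughput_qps(5000, 2.0))
```

Measuring recall requires ground truth, which is typically obtained by running an exact (brute-force) search over the same data.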

For example, a global bank building a real-time recommendation engine for their financial instruments needs to stay below their established end-to-end latency budget, while delivering highly relevant vector search results at single-digit millisecond latencies for tens of thousands of concurrent users. In this scenario, MemoryDB is the right choice. The choice of indexing technique also significantly impacts query performance. Approximate nearest neighbor (ANN) techniques such as Hierarchical Navigable Small World (HNSW) and inverted file with flat compression (IVFFlat) trade a small amount of recall for much faster search than exact k-nearest neighbor (k-NN) search, which compares the query against every stored vector. Understanding these indexing methods is essential for choosing the best database for your specific use case and performance requirements. For example, Aurora Optimized Reads with NVMe caching using HNSW indexing can provide up to a nine-times increase in average query throughput compared to instances without Optimized Reads.
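The trade-off between exact and approximate search can be illustrated with a toy inverted-file index. This sketch is a simplified illustration of the idea behind IVFFlat, not the implementation used by any AWS database: it picks centroids by random sampling where real implementations learn them with k-means, and all names and sizes are hypothetical.

```python
import math
import random

def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def exact_knn(vectors, query, k):
    """Brute-force k-NN: compare the query with every vector."""
    return sorted(range(len(vectors)), key=lambda i: l2(vectors[i], query))[:k]

def build_ivf(vectors, centroids):
    """Assign each vector to the inverted list of its nearest centroid."""
    lists = [[] for _ in centroids]
    for i, vector in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: l2(centroids[c], vector))
        lists[nearest].append(i)
    return lists

def ivf_search(vectors, centroids, lists, query, k, nprobe):
    """Approximate k-NN: scan only the nprobe lists whose centroids are closest."""
    probe = sorted(range(len(centroids)), key=lambda c: l2(centroids[c], query))[:nprobe]
    candidates = [i for c in probe for i in lists[c]]
    return sorted(candidates, key=lambda i: l2(vectors[i], query))[:k]

random.seed(7)
vectors = [[random.random() for _ in range(8)] for _ in range(2000)]
centroids = random.sample(vectors, 16)  # assumption: sampled, not learned with k-means
lists = build_ivf(vectors, centroids)
query = [random.random() for _ in range(8)]

truth = exact_knn(vectors, query, k=10)
for nprobe in (1, 4, 16):
    approx = ivf_search(vectors, centroids, lists, query, k=10, nprobe=nprobe)
    recall = len(set(truth) & set(approx)) / len(truth)
    print(f"nprobe={nprobe:>2} recall={recall:.2f}")
```

Probing fewer lists means fewer distance computations but a higher chance of missing true neighbors. Probing all 16 lists scans every vector and therefore matches the exact result.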

AWS offers databases that can help fulfill the vector search performance needs of your application. These databases provide various performance optimization techniques and advanced monitoring tools, allowing organizations to fine-tune their vector data management solutions, address performance bottlenecks, and achieve consistent performance at scale. By using AWS databases, businesses can unlock the full potential of their vector search requirements, enabling real-time insights, personalized experiences, and innovative AI and ML applications that drive growth and innovation.

High-level service characteristics

Choosing a fully managed database on AWS to run your vector workloads, supported by the pillars of the Well-Architected Framework, offers significant advantages. It combines the scalability, security, and reliability of AWS infrastructure with the operational excellence and best practices around management, allowing businesses to use well-established database technologies while benefiting from streamlined operations, reduced overhead, and the ability to scale seamlessly as their needs evolve. The following are the characteristics that we have observed as pivotal in the database evaluation process:

Semantic search – Semantic search is an information retrieval technique that understands the meaning and context of search queries to deliver more relevant and accurate results. Semantic search is supported by many databases currently offered on AWS that support vector search capabilities.

Serverless – This is the ability for a database to elastically scale to efficiently meet demands with little-to-no user management. Serverless is currently available for OpenSearch Service and Aurora PostgreSQL, and both are integrated with Knowledge Bases for Amazon Bedrock, which provides fully managed RAG workflows.

Dimensionality – Many AWS customers employ open source or custom embedding models that span a diverse range of dimensions; some examples include models such as Cohere Embed English v3 on Amazon Bedrock with 1,024, or the Amazon Titan Text Embeddings V2 with a choice of 256, 512, or 1,024 dimensions. AWS databases that support vector search support these dimension sizes across all of these models and are continuously innovating to deliver new standards on scalability and functionality.

Indexing – The most widely adopted indexing algorithm amongst our customer base is HNSW, and all AWS database services with vector search support HNSW. The IVFFlat indexing method is also supported amongst a subset of these database services.

Billion-scale vector workloads – As your vector workloads grow to support enterprise applications throughout 2024 and into 2025, our database services are equipped to handle billion-scale vector workloads.

Relevancy – To optimize your applications by use cases, you must also confirm the relevancy of the vector search results, as measured by recall. All AWS database services with vector search support configurable recall in some capacity.

Hybrid search and pre-filtering – Many customers consider how to pre- and post-filter their vector search queries to focus on specific product categories, geographies, or other data subsections. AWS database services provide a layer of hybrid search or pre-filtering capabilities, with several like Aurora PostgreSQL, Amazon RDS for PostgreSQL, MemoryDB, and OpenSearch Service going a step beyond and offering full-text search and hybrid search capabilities.
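The difference between pre-filtering and post-filtering is easiest to see on a small example. The sketch below uses brute-force search over an in-memory list. The item fields, function names, and `is_music` predicate are hypothetical, and real databases apply filters inside their index structures rather than over Python lists.

```python
import math

def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def post_filter_search(items, query, k, predicate):
    """Rank everything, keep the top k, then drop results that fail the filter."""
    top = sorted(items, key=lambda item: l2(item["embedding"], query))[:k]
    return [item["id"] for item in top if predicate(item)]

def pre_filter_search(items, query, k, predicate):
    """Apply the metadata filter first, then rank only the matching items."""
    matching = [item for item in items if predicate(item)]
    top = sorted(matching, key=lambda item: l2(item["embedding"], query))[:k]
    return [item["id"] for item in top]

def is_music(item):
    return item["category"] == "music"

items = [
    {"id": "a", "category": "books", "embedding": [0.0, 0.05]},
    {"id": "b", "category": "music", "embedding": [0.1, 0.0]},
    {"id": "c", "category": "music", "embedding": [0.9, 0.9]},
    {"id": "d", "category": "books", "embedding": [0.2, 0.2]},
]
query = [0.0, 0.0]

print(post_filter_search(items, query, k=2, predicate=is_music))
print(pre_filter_search(items, query, k=2, predicate=is_music))
```

In this example, post-filtering returns only one result because one of the two nearest items is filtered out afterward, whereas pre-filtering returns two matching items. This is why pre-filtering matters when the filter is selective.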

Conclusion

Selecting the right database for your generative AI application is crucial for success. While factors like familiarity, ease of implementation, scalability, and performance are important, we recognize the need for more specific guidance in this rapidly evolving space. Based on our current understanding and available options within the AWS managed database portfolio, we recommend the following:

If you’re already using OpenSearch Service, Aurora PostgreSQL, Amazon RDS for PostgreSQL, Amazon DocumentDB, or MemoryDB, use their vector search capabilities for your existing data.

For graph-based RAG applications, consider Amazon Neptune Analytics.

If your data is stored in DynamoDB, OpenSearch Service can be an excellent choice for vector search using the zero-ETL integration.

If you are still unsure, use OpenSearch Service, which is the default database engine for Amazon Bedrock.

The generative AI landscape is dynamic and continues to evolve rapidly. We encourage testing different database services with your specific datasets and ML algorithms with consideration for how your data will grow over time so your solution can scale seamlessly with your workload.

AWS offers a diverse range of database options, each with unique strengths. By leveraging AWS’s powerful ecosystem, organizations can empower their applications with efficient and scalable databases featuring vector storage and search capabilities, driving innovation and competitive advantage. AWS is committed to helping you navigate this journey. If you have further questions or need assistance in designing your optimal path forward for generative AI, don’t hesitate to contact our team of experts.

About the Authors

Shayon Sanyal is a Principal Database Specialist Solutions Architect and a Subject Matter Expert for Amazon’s flagship relational database, Amazon Aurora. He has over 15 years of experience managing relational databases and analytics workloads. Shayon’s relentless dedication to customer success allows him to help customers design scalable, secure and robust cloud native architectures. Shayon also helps service teams with design and delivery of pioneering features, such as Generative AI.

Graham Kutchek is a Database Specialist Solutions Architect with expertise across all of Amazon’s database offerings. He is an industry specialist in media and entertainment, helping some of the largest media companies in the world run scalable, efficient, and reliable database deployments. Graham has a particular focus on graph databases, vector databases, and AI recommendation systems.
