A common administrative task for database administrators (DBAs) is to perform backups of the databases from production, and move to lower environments such as development, quality assurance, staging, and so on. As customers are migrating to the AWS Cloud to take advantage of managed database services such as Amazon Relational Database Service (Amazon RDS) for MySQL, Amazon RDS for MariaDB, and Amazon Aurora MySQL-Compatible Edition, they also look to automate these administrative tasks.

During our work with a customer for a data center migration, we encountered a precise set of requirements for their database backup strategy. This customer was transitioning from Microsoft SQL Server databases to Amazon Aurora MySQL, Amazon RDS for MySQL, and RDS for MariaDB as part of a migration from on-premises to the cloud, and their primary aim was to achieve minimal disruption to their operations team’s workflow during this migration.

Their operations teams had requirements to take database backups of individual databases from production environments to be restored in lower-end environments. They were new to cloud technology and aimed to maintain a consistent backup approach that could work similarly to their existing on-premises systems.

After a thorough analysis of their needs, the following requirements were established:

Preserve the existing Aurora MySQL endpoint names when restoring a backup to prevent the need for application redeployments in case of a restore activity.

Keep the capability to refresh lower environments, such as development and testing, with backups from the production database.

Avoid the necessity of using infrastructure as code (IaC) to restore a snapshot from a different AWS account.

Impose a minimal learning curve for the operations team, who were still getting familiar with Aurora MySQL.

Implement an API-friendly approach to reduce the need for connecting to an Amazon Elastic Compute Cloud (Amazon EC2) instance or similar services to invoke backup requests.

Use Amazon Simple Storage Service (Amazon S3) and pre-signed URLs to facilitate the movement of backups by the operations team.

Solution Overview

This post shows how a DBA or other user with access to a custom API can make MySQL and MariaDB backup requests. It uses Infrastructure as Code (IaC) with the AWS Cloud Development Kit (AWS CDK) to simplify the deployment.

Amazon API Gateway provides an API that can be called to start a backup process. Amazon Elastic Container Service (Amazon ECS) is used to run the backup process. Amazon S3 is used to store the backup. Amazon Simple Notification Service (Amazon SNS) is used to sending a notification about the finished process.

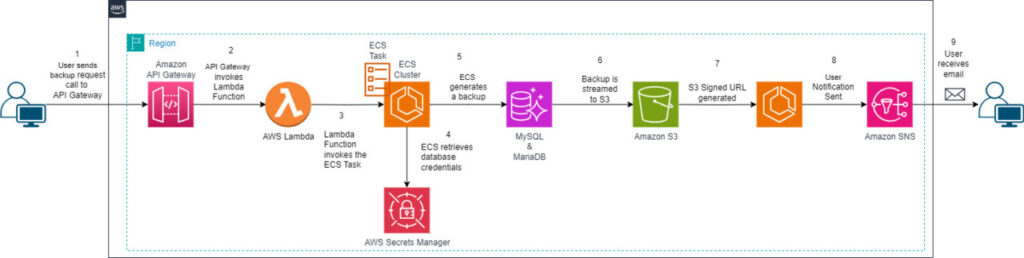

The following diagram shows the high-level user interaction with the solution and how the components work.

The process includes the following steps:

Using bash or Python, the user invokes the API Gateway endpoint, sending the hostname and database to be backed up.

API Gateway receives the hostname and database name payload, and sends it to an AWS Lambda

The function extracts the hostname and database name payload from the API parameters and runs an Amazon ECS run task command, sending those values as environment variables.

The ECS task starts and reads the environment variables to retrieve the hostname and database name.

The task uses AWS Secrets Manager to retrieve the database credentials for the given hostname. Then it retrieves the TCP port from the Amazon RDS API for the given MySQL or MariaDB hostname.

Using mysqldump, it connects to the given MySQL or MariaDB instance and starts running the backup. If you’re using this solution on a MariaDB environment, consider switching from mysqldump to the mariadb-dump

At runtime, the backup is generated.

At runtime, the backup is zipped and streamed to Amazon S3, and a new Amazon S3 pre-signed URL is generated for the file. The backup file is uploaded directly into Amazon S3 Standard-Infrequent access storage class, which is the recommended for storing backups.

The user email address specified in the SNS topic during the cdk deploy step is notified.

Prerequisites

To follow along, you must have the following prerequisites:

An active AWS account with a VPC and at least one of the following database platforms deployed:

Amazon Aurora MySQL-Compatible Edition

Amazon RDS for MySQL

Amazon RDS for MariaDB

The AWS Command Line Interface (AWS CLI) installed and configured.

AWS CDK v2 set up on your local machine. For instructions, see Getting Started with the AWS CDK. This project uses TypeScript as the language, so you should also have that set up on your laptop.

Docker installed on your laptop to build the container for the first time.

The GitHub repository downloaded to your local machine. A template to create an AWS Identity and Access Management (IAM) role is provided in this repository.

Limitations and items out of scope

A demonstration of the database restore process is not included in this post.

Additionally, this solution works best for databases in the same AWS Region as the rest of the backup infrastructure provisioned. If you have databases in different Regions, you can deploy the backup solution separately in each one.

Create database credentials

As a prerequisite, you need a database user that has permissions to take schema dumps. Before you deploy the solution into your AWS account, you need to create the same user in your environments.

To create a new database user in one of the database engines of your choice, run the following script:

Store database credentials in Secrets Manager

After you create the database credentials, you need to store them in your AWS account. For this post, we use Secrets Manager. Secrets Manager helps you manage and retrieve credentials safely. Later, the ECS task will retrieve during runtime these secrets to perform the database backups.

To create the secret, complete the following steps:

On the Secrets Manager console, choose Secrets in the navigation pane.

Choose Store a new secret.

For Secret type, enter the credentials for the RDS database.

For User name, enter NameOfUser.

For Password, enter PasswordOfUser.

For Database, choose the DB instance to which the credential belongs.

Choose Next.

For Secret name, enter backup/EndpointIdentifierName/user. For Aurora MySQL instances, the EndpointIdentifierName should be the writer or reader endpoint.

Choose Next.

Choose Next again.

Choose Store.

Your secret is now listed on the Secrets page.

Deploy the solution in the AWS account

After you complete the prerequisites and set up the IAM user and the AWS CDK, it’s time to deploy the solution to the AWS account.

Download the code from the GitHub repository and extract the contents of the .zip file.

Navigate to the project folder that you downloaded and open a terminal session from there.

In the terminal, make sure you are connected to the right AWS account where the project will be deployed into.

Run the following code:

After the initial bootstrap has completed successfully, it’s time to deploy the solution in the AWS account. Before you run cdk deploy, there are a few important values that need to be specified on the deployment:

EXISTING_VPC_ID – This specifies which VPC you want to deploy the solution on. It’s recommended to deploy in the same VPC where the database subnets are located.

BackupEmailReceivers – This is the email address that receives email messages from Amazon SNS when backups are complete. To specify multiple emails, you need to split them using commas. For example: someemail@company.com, someotheremail@company.com. At a later stage, if you need to add extra emails, you need to run cdk deploy with the extra emails included.

ECSTaskCPU – This specifies the number of vCPUs to use for the container that runs the backup tasks. To understand the values, refer to Task CPU and memory. For this post, we use 2048, which corresponds to 2 vCPUs.

ECSTaskMemory – This specifies the amount of memory in MB to use for the container that runs the backup tasks. To understand the values, see Task CPU and memory. For this post, we use 8192, which corresponds to 8 GB of memory.

Deploy the solution.

For Windows users, use the following code:

For Ubuntu and MacOs users, use the following code:

In this step, you provide an email address that will be used for sending backup email notifications.

The solution takes around 30–45 minutes to deploy. When it’s complete, you should see the following output.

The deployment time may vary from case to case.

Note the following information exported to use in a later step:

exportMySqlToS3.SecurityGroupId

exportMySqlToS3.apiURL

Grant Amazon ECS access to database security groups

After you deploy the solution, you need to grant permissions on the database security groups to allow the ECS task to access your database and perform the database backups. As shown in the preceding screenshot, you retrieved the value of the output named exportMyqlToS3.SecurityGroupId.

Complete the following steps:

On the Amazon RDS console, choose Databases in the navigation pane.

Choose any RDS for MySQL or MariaDB instance.

In the VPC security groups section, choose your security group.

If the instance has multiple security groups attached, you can choose one of them.

In the Inbound rules section, choose Edit inbound rules.

Choose Add rule.

For Type, select MYSQL/Aurora; this will prepopulate the TCP port needed.

If your instance uses a custom TCP port, select Custom TCP.

For Source, enter the security group ID retrieved from the stack output.

Choose Save rules.

Retrieve API Gateway information

To invoke a database backup task, you need the API URL. You can either retrieve that from the cdk deploy output shown earlier, or use the API Gateway console. In this section, we show how to retrieve the information using the API Gateway console.

On the API Gateway console, choose APIs in the navigation pane.

Choose the API exporttos3-rest-api.

Choose Stages.

From the list of stages, choose prod.

Note the Invoker URL to use in a later step to invoke the backup task.

We also need the API key, which is necessary to securely invoke the API that takes the backups.

On the Secrets Manager console, locate the secret backup/exportToS3/apiKey.

In the Secret value section, choose Retrieve secret value.

Note the API key.

Invoke a backup

Now you use the API URL and API key to invoke a backup task.

The API has been deployed into a private subnet. This means that it’s not possible to invoke from outside the VPC. Either your corporate network has access to the resources deployed into the private subnet, or you invoke from a bastion host deployed in the same VPC.

The following steps describe how to invoke a backup task using a bastion host deployed in the VPC:

In the bastion host, open the terminal.

Invoke a backup:

If you’re using curl, run the following script:

This produces the following result:

Container request started for /backup. ECS Task is: arn:aws:ecs:eu-west-1: AwsAccountId:task/exportMysqlToS3-ecs-cluster/1a5b8301cfc64f30b5b319518f2e317d with the following parameters: DB_NAME=world, HOST_NAME=database-1-instance-1.cl64px3dsqbn.eu-west-1.rds.amazonaws.com

If you’re using Python, you can run the following script:

This produces the following result:

Container request started for /backup. ECS Task is: arn:aws:ecs:eu-west-1:AwsAccountId:task/exportMysqlToS3-ecs-cluster/79f71052f6b240e8a23326777b1ecb2b with the following parameters: DB_NAME=world, HOST_NAME=database-1-instance-1.cl64px3dsqbn.eu-west-1.rds.amazonaws.com

After the backup is complete and uploaded to Amazon S3, you receive the following email.

The email contains the following information:

Instance identifier

Database name

S3 bucket name

S3 object name

Download URL

With this information, you can use the download backup file if required.

An S3 lifecycle rule is in place that deletes the files after 24 hours. For more details, see Managing your storage lifecycle.

Troubleshoot backup failures

In this section, we discuss two methods to troubleshoot backup failures: Amazon CloudWatch and ECS task logs.

CloudWatch logs

If something isn’t working, you may need to review the CloudWatch logs. Complete the following steps:

On the CloudWatch console, choose Log groups in the navigation pane.

This page displays a list of all log groups that exist in the Region in which you’re operating.

Search for exportMySqlToS3 to retrieve all the log groups for this project.

From the list, you have one log group per service deployed for the project. If you choose the Lambda log group, for example, you will see a log stream, in which each entry corresponds to one or more Lambda function runs that occurred.

You can explore the log streams in more detail to see the log events.

ECS task logs

Another source of information for logs is the ECS task, which contains the container running the code that takes the backup from the database. To view the container logs, complete the following steps:

On the Amazon ECS console, choose Clusters in the navigation pane.

Search for and choose the ECS cluster exportMysqlToS3-ecs-cluster.

Choose the Tasks

The default display will only show ECS tasks currently running. If the list is empty, switch the filter from Running tasks to All tasks. You should now be able to view your tasks.

Choose your desired task.

To view the logs generated by the task, choose the Logs

To view the environment variables passed to the container running inside of the ECS task, choose the Environment variables and files

Scale up an ECS task

When you ran cdk deploy for the first time, you passed two parameters, ECSTaskCPU and ECSTaskMemory, which specified the default compute capacity being provisioned for Amazon ECS.

If you want the ECS tasks to finish faster, or depending on the size of your databases, you might want to scale up the ECS task. If you want to perform that change, complete the following steps:

Identify the values that can be used for CPU and memory on the Amazon ECS console and check the Task CPU and memory

Open a terminal session on the local folder exporttos3 in your laptop and run the following code:

For Windows users:

For Ubuntu or MacOs users:

Amazon RDS backup strategies

You can take backups of an RDS instance through multiple options, such as automated RDS backups, manual RDS snapshots, AWS Backup, and exporting the data to Amazon Simple Storage Service (Amazon S3).

Amazon RDS offers an automated backup functionality, implementing the regular capture of snapshots for your MySQL and MariaDB instances. These snapshots are pivotal in preserving the entire database’s state at a specific moment, assuring data consistency. You have the flexibility to set a retention period for these backups, which can extend up to 35 days, accommodating various use cases. In the case of data loss, you can restore your database to any point within the retention period, providing data integrity and minimizing downtime.

Another option that is useful if you need to set up processes and guardrails around your backup and recovery procedures is to use AWS Backup for Amazon RDS for MySQL and Amazon RDS for MariaDB, as well as AWS Backup for Amazon Aurora MySQL. AWS Backup extends the data protection capabilities by allowing you to centralize and manage backup policies across multiple AWS services, including Amazon RDS, making it straightforward to implement consistent backup and retention strategies. One common practice while using AWS Backup is to have a central AWS account that handles the backups across your organization. Enterprises usually implement that as part of using AWS Control Tower. Refer to the following posts for more information:

Use AWS Backup and CI/CD tools to automate centralized backup across AWS services

Automate centralized backup at scale across AWS services using AWS Backup

Besides these options, Amazon RDS for MySQL, Amazon RDS for MariaDB, and Amazon Aurora MySQL offer the capability to export DB snapshot data to Amazon S3. In this strategy, you can export data in the background from the DB snapshot and store it securely in Amazon S3 the format of Apache Parquet files, which can later be analyzed using Amazon Athena or Amazon Redshift Spectrum. With this method of extraction, you can choose to export specific sets of databases, schemas, or tables.

Best practices

The following are best practices when using this solution:

Secure your backups – Apply security measures to protect your backup data. Implement IAM policies to control access to your backups and use encryption at rest to safeguard sensitive information. Following AWS security best practices will help prevent unauthorized access and maintain the confidentiality of your backup data.

Take advantage of read-only replicas – Although we have conducted tests on the solution for backing up extensive databases (over 50 GB), it is crucial to consider the additional strain this procedure may impose on your environment because of the single-threaded operations of mysqldump. Using read-only replicas to take backups of the databases can alleviate the performance challenges caused by the mysqldump

Test the restore process – A database backup is as good as the database restore. From time to time, it is recommended to restore the backups to lower environments, and make sure they can be restored in a case of emergency. To restore the backup to another MySQL instance, you need access to a host with the MySQL utility tools. The backup file can be retrieved from Amazon S3 (within the 24h period) by generating a pre-signed URL.

Lessons learned

In this section, we share lessons learned while building this solution.

Regularly review and update your backup strategy

While working on this solution, we had to make changes at various points to adjust this process to the customer needs. As data and applications grew, we had to reassess our solution to make sure they aligned with the customers’ changing needs. With fewer data in the beginning, we used Lambda to create the backups.

Over time, we realized that the 15-minute timeout for Lambda functions wouldn’t work with ever-growing amounts of data, and switched to an ECS Fargate task. This allowed us to start with a simpler solution and change it as needed.

Monitor storage costs

In our solution, we store the backups in Amazon S3, and costs can accumulate over time, especially with longer retention periods or large databases. The purpose of this solution was to have backups that could be moved to lower environments, and didn’t need to be archived for longer, so we stored all backups for only 24 hours with a retention policy. If your needs are different, you can change the AWS CDK code to a longer period.

You could also use the Amazon S3 Storage Lens to help you track and optimize your storage costs, and see where you are spending the most.

Use the Storage Class for your needs. In our case, we switched from Amazon S3 Standard to Amazon S3 Standard-Infrequent Access, which is best suited for storing backup files.

Backing up large databases

When dealing with backups for large databases, optimizing the backup process becomes crucial to prevent excessive resource utilization from the MySQL instance. Besides using parameters like –single-transaction and –quick, especially for large tables, consider using advanced tools such as MyDumper and MyLoader for more efficient operations.

MyDumper introduces parallelized backups that substantially minimize downtime. This multi-threaded tool can use multiple parallel threads, providing a secure and swift backup of databases.

MyLoader complements MyDumper by facilitating the restoration process. MyLoader efficiently loads data back into the MySQL database, offering seamless recovery after a backup operation.

Additionally, the MySQL Shell serves as a versatile and powerful interface for database administration, offering a comprehensive set of tools to enhance the management of large databases. It provides a flexible environment for various tasks, including backup and recovery. Its integration with MySQL utilities allows you to use efficient backup and restoration mechanisms, complementing tools like MyDumper and MyLoader.

Furthermore, the MySQL Shell supports parallel data transfer, making it well suited for handling large datasets during backup and restoration. Its extensibility through scripting languages empowers administrators to tailor solutions to specific requirements, providing adaptability to diverse database environments.

Clean up

To decommission this project from the AWS account, complete the following steps:

Remove the files generated in the S3 bucket.

Open a terminal session on the local folder exporttos3 in your laptop and run the following code:

For Windows users:

For Ubuntu and MacOs users:

Enter y to confirm the deletion.

Conclusion

In this post, we explained how our customer implemented Amazon RDS for MySQL and MariaDB database backups using AWS serverless solutions that achieved their migration outcomes when moving to AWS. We looked at how you can use API Gateway, Lambda, ECS clusters, Amazon SNS, and Amazon S3 to facilitate this procedure.

If you have any questions or suggestions, leave a comment.

About the Authors

Marcos Freccia is a Sr. Database Specialty Architect with the AWS Professional Services team. He has been supporting and enabling customers on their journey to migrate and modernize their database solutions from on-premises data centers to AWS.

Fabian Jahnke is a Senior Cloud Application Engineer with the AWS Professional Services team. He builds production-ready, cloud-centered solutions with customers. He specializes in the use of AWS services for software engineering challenges. He enjoys trying out new ideas and finding innovative solutions to problems.

Source: Read More