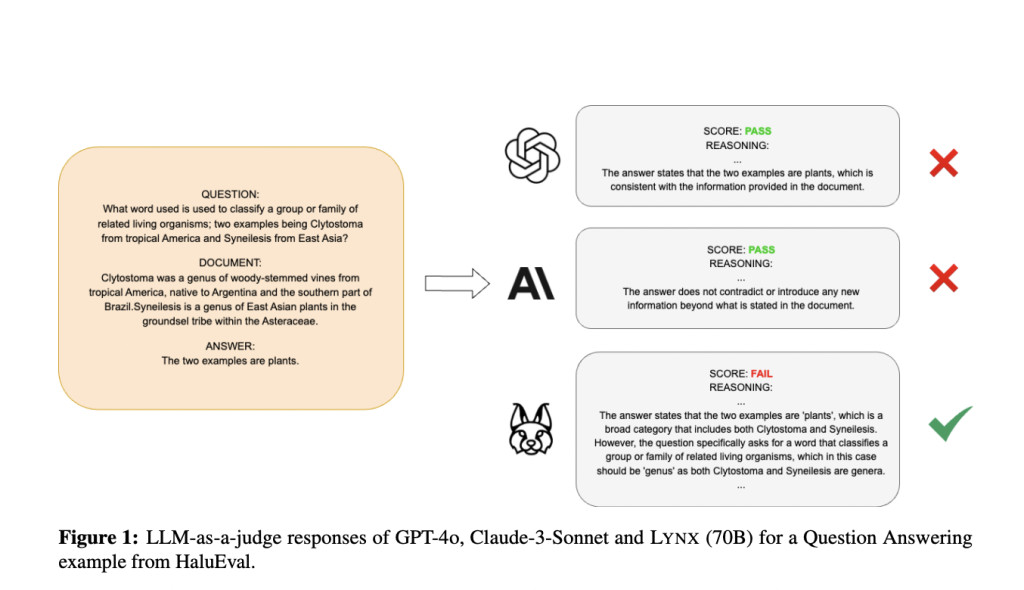

Patronus AI has announced the release of Lynx, a cutting-edge hallucination detection model that the company reports outperforms GPT-4, Claude-3-Sonnet, and other closed- and open-source models commonly used as LLM judges. The model was introduced with the support of key integration partners, including Nvidia, MongoDB, and Nomic.

Hallucination in large language models (LLMs) refers to generating information that is either unsupported by or contradictory to the provided context. This poses serious risks in applications where accuracy is paramount, such as medical diagnosis or financial advising. Techniques like Retrieval Augmented Generation (RAG) aim to reduce hallucinations by grounding answers in retrieved documents, but models can still produce answers that the retrieved context does not support. Lynx is designed to catch these failures, and Patronus AI reports that it does so more accurately than existing LLM judges.

One of Lynx’s key differentiators is its performance on HaluBench, a comprehensive hallucination evaluation benchmark of 15,000 samples drawn from various real-world domains. Lynx performs strongly at detecting hallucinations across diverse fields, including medicine and finance. For instance, on the PubMedQA dataset, the 70-billion-parameter version of Lynx was 8.3% more accurate than GPT-4 at identifying medical inaccuracies. This level of precision is critical for the reliability of AI-driven solutions in sensitive areas.

Comparisons with other leading models tell a similar story. The 8-billion-parameter version of Lynx outperformed GPT-3.5 by 24.5% on HaluBench, and outperformed Claude-3-Sonnet and Claude-3-Haiku by 8.6% and 18.4%, respectively. These results show that a smaller model can handle complex hallucination detection tasks, which makes Lynx more accessible and efficient to deploy across a range of applications.
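The article does not say whether figures such as "8.3% more accurate" are absolute percentage-point gaps or relative gains, and the two can differ substantially. The minimal sketch below shows both calculations for a binary hallucination-detection task. The `PASS`/`FAIL` verdict labels, the function names, and the toy predictions are illustrative assumptions, not HaluBench data or Patronus AI's evaluation code.

```python
from typing import Sequence, Tuple


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of examples where the predicted verdict matches the gold label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def compare(acc_a: float, acc_b: float) -> Tuple[float, float]:
    """Return (absolute gap in percentage points, relative gain in percent) of A over B."""
    if acc_b <= 0:
        raise ValueError("baseline accuracy must be positive for a relative gain")
    points = (acc_a - acc_b) * 100
    relative = (acc_a - acc_b) / acc_b * 100
    return points, relative


# Toy example (hypothetical data): "PASS" = answer supported by the context,
# "FAIL" = answer contains a hallucination.
gold = ["PASS", "FAIL", "FAIL", "PASS"]
acc_a = accuracy(["PASS", "FAIL", "FAIL", "FAIL"], gold)  # 3 of 4 correct
acc_b = accuracy(["PASS", "PASS", "FAIL", "FAIL"], gold)  # 2 of 4 correct
print(compare(acc_a, acc_b))  # (25.0, 50.0)
```

In this toy case, model A is 25 percentage points more accurate than model B, which is also a 50% relative improvement. The same comparison could therefore be reported as either number.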

Lynx was developed using several innovative approaches, including Chain-of-Thought reasoning, in which the model works through intermediate reasoning steps before reaching a verdict. According to Patronus AI, this significantly improved Lynx’s ability to catch hard-to-detect hallucinations and makes its outputs more explainable and interpretable, closer to how a human reviewer would reason. This matters because users can see why the model flagged an answer, which increases trust in its outputs.

Lynx was fine-tuned from the Llama-3-70B-Instruct model. It produces a score and can also explain the reasoning behind it, which provides a level of interpretability that is crucial for real-world applications. Its integration with Nvidia’s NeMo-Guardrails allows it to be deployed as a hallucination detector in chatbot applications, improving the reliability of AI interactions.
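An LLM judge that returns both reasoning and a score is typically called with the question, the retrieved context, and the answer under review, and its reply is then parsed into a verdict. The sketch below shows that pattern only. The prompt wording, the helper names `build_judge_prompt` and `parse_verdict`, and the JSON keys `REASONING` and `SCORE` with `PASS`/`FAIL` values are assumptions for illustration. Consult Patronus AI's released model card for Lynx's actual prompt template and output format.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    reasoning: str
    passed: bool  # True means the judge found the answer faithful to the context


def build_judge_prompt(question: str, context: str, answer: str) -> str:
    """Hypothetical prompt asking the judge to reason step by step, then score."""
    return (
        "Given the QUESTION, CONTEXT and ANSWER, decide whether the ANSWER is "
        "faithful to the CONTEXT. Think step by step, then respond in JSON as "
        '{"REASONING": <your reasoning>, "SCORE": <PASS or FAIL>}.\n\n'
        f"QUESTION:\n{question}\n\nCONTEXT:\n{context}\n\nANSWER:\n{answer}\n"
    )


def parse_verdict(raw: str) -> Verdict:
    """Extract the JSON object from the judge's reply and read REASONING/SCORE."""
    # Greedy match from the first "{" to the last "}" tolerates text around the JSON.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")
    data = json.loads(match.group(0))
    score = str(data.get("SCORE", "")).strip().upper()
    if score not in {"PASS", "FAIL"}:
        raise ValueError(f"unexpected SCORE value: {score!r}")
    reasoning = data.get("REASONING", "")
    if isinstance(reasoning, list):  # reasoning may come back as bullet points
        reasoning = "\n".join(str(item) for item in reasoning)
    return Verdict(reasoning=str(reasoning), passed=(score == "PASS"))
```

For example, `parse_verdict('{"REASONING": ["The context gives 2019.", "The answer says 2021."], "SCORE": "FAIL"}')` yields a `Verdict` with `passed=False`, and its reasoning lines explain why. Keeping the reasoning alongside the pass/fail flag is what lets a reviewer audit the judge's decision rather than trusting a bare score.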

Patronus AI has publicly released the HaluBench dataset and evaluation code, enabling researchers and developers to explore and contribute to this field. The dataset is available on Nomic Atlas, a visualization tool for finding patterns and insights in large-scale datasets, which makes it a valuable resource for further research and development.

In conclusion, Patronus AI has launched Lynx, a model built to detect hallucinations in LLM outputs so they can be caught and mitigated. With its strong benchmark performance, interpretable reasoning, and support from leading technology partners, Lynx is positioned to become an important building block for the next generation of AI applications. The release underscores Patronus AI’s commitment to advancing AI technology and to its effective deployment in critical domains.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

The post Patronus AI Introduces Lynx: A SOTA Hallucination Detection LLM that Outperforms GPT-4o and All State-of-the-Art LLMs on RAG Hallucination Tasks appeared first on MarkTechPost.