Existing open-source large multimodal models (LMMs) face several significant limitations. They often lack native integration and require adapters to align visual representations with pre-trained large language models (LLMs). Many LMMs are restricted to single-modal generation or rely on separate diffusion models for visual modeling and generation. These limitations add complexity and inefficiency at both training and inference time. There is a need for a truly open, autoregressive, native LMM capable of high-quality, coherent multimodal generation.

Researchers from the Generative AI Research Lab address the challenge of limited multimodal functionality in LMMs. Open-source LMMs, such as LLaVA, CogVLM, and DreamLLM, primarily focus on multimodal understanding without generation capabilities. Many of these models are not natively multimodal: they rely on pre-trained LLMs as their backbone and require additional diffusion models for vision generation. To address these issues, the researchers propose ANOLE, an open, autoregressive, native LMM for interleaved image-text generation. Built on Meta AI’s Chameleon, ANOLE uses a data-efficient and parameter-efficient fine-tuning strategy. This study aims to extend Chameleon with vision and multimodal generation without compromising its text generation and comprehension strengths.

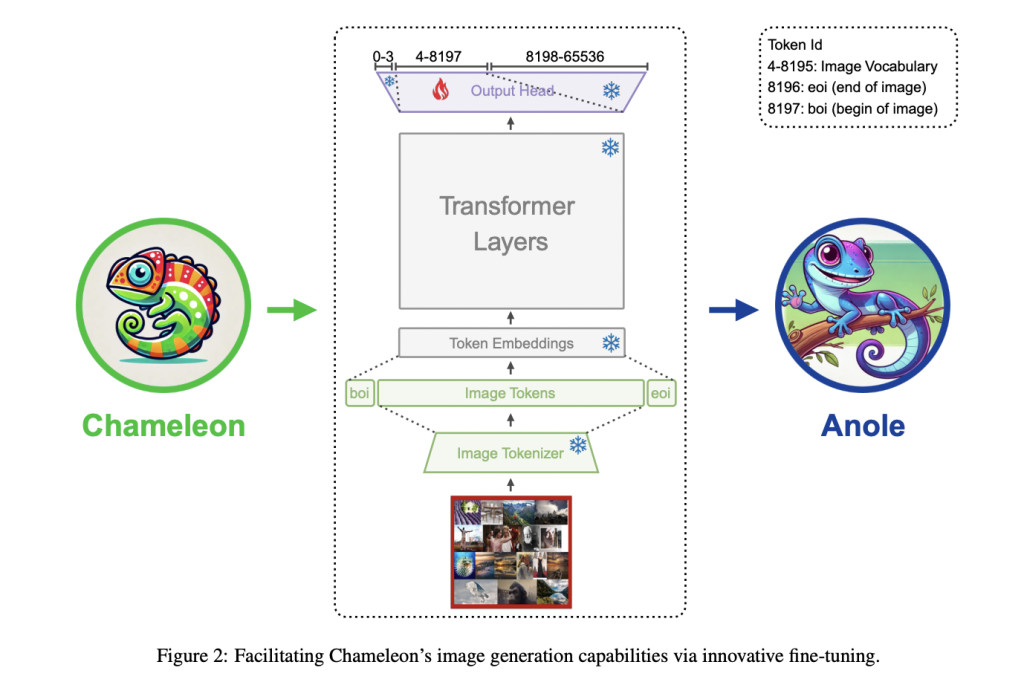

ANOLE adopts an early-fusion, token-based autoregressive approach to model multimodal sequences without using diffusion models, relying solely on transformers. The fine-tuning process focuses on the logits corresponding to image token IDs in the transformer’s output head layer, following the principle of “less is more.” ANOLE-7b-v0.1 was developed using a small amount of image data (5,859 images) by fine-tuning fewer than 40M parameters in around 30 minutes on 8 A100 GPUs.
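To make the idea of tuning only the image-token logits concrete, here is a minimal pure-Python sketch, not the authors' training code. It treats the output head as one weight row per vocabulary entry and applies a plain gradient step only to the rows whose index is an image token ID, leaving every other row frozen. The function names, the toy vocabulary, and the learning rate are hypothetical. The figures of 8,192 image codes and a hidden size of 4,096 are assumptions taken from Chameleon's published 7B configuration rather than from this article; under them the trainable row count lands below the 40M parameters quoted above.

```python
def count_trainable_head_params(image_token_ids, hidden_size):
    """One output-head row (hidden_size weights) per distinct image token ID."""
    return len(set(image_token_ids)) * hidden_size


def masked_sgd_step(head, grads, image_token_ids, lr):
    """Return a new output head where only image-token rows were updated.

    head and grads are lists of rows, one row per vocabulary entry.
    Rows for all other tokens are copied unchanged, i.e. kept frozen.
    """
    trainable = set(image_token_ids)
    return [
        [w - lr * g for w, g in zip(row, grad_row)] if idx in trainable else list(row)
        for idx, (row, grad_row) in enumerate(zip(head, grads))
    ]


# Toy setup (hypothetical): 6-token vocabulary, hidden size 2,
# with token IDs 4 and 5 standing in for image tokens.
toy_head = [[1.0, 1.0] for _ in range(6)]
toy_grads = [[1.0, 1.0] for _ in range(6)]
updated = masked_sgd_step(toy_head, toy_grads, image_token_ids=[4, 5], lr=0.5)
print(updated)  # rows 0-3 unchanged, rows 4-5 moved by the gradient step

# Assumed sizes: 8192 image codes, hidden size 4096 (not stated in the article).
print(count_trainable_head_params(range(8192), 4096))  # 33554432
```

In a real framework the same effect is usually obtained by freezing all other parameters and masking the gradient of the output-head rows that do not correspond to image tokens; the list-based version here only illustrates which weights move.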

Despite the limited data and trainable parameters, ANOLE demonstrates impressive image and multimodal generation capabilities, producing high-quality and coherent interleaved image-text sequences. Qualitative analysis shows that ANOLE can generate diverse and accurate visual outputs from textual descriptions and seamlessly integrate text and images in interleaved sequences. For instance, ANOLE can generate detailed recipes with corresponding images and produce informative interleaved image-text sequences, such as guides to cooking traditional Chinese dishes or descriptions of architectural designs.

In conclusion, the proposed method represents a significant advancement in multimodal AI by addressing the limitations of previous open-source LMMs. ANOLE offers a solution that is both data- and parameter-efficient while delivering high-quality multimodal generation. By building on Chameleon, ANOLE democratizes access to advanced multimodal AI technologies and paves the way for more inclusive and collaborative research in this field.

The post Anole: An Open, Autoregressive, Native Large Multimodal Model for Interleaved Image-Text Generation appeared first on MarkTechPost.
