Personalized review generation within recommender systems is an area of increasing interest, particularly in creating custom reviews based on users’ historical interactions and preferences. This involves utilizing data about users’ previous purchases and feedback to produce reviews that accurately reflect their unique preferences and experiences, enhancing recommender systems’ overall effectiveness.

Recent research addresses the challenge of generating personalized reviews that align with users’ experiences and preferences. Many users only provide ratings without detailed reviews after making purchases, which complicates capturing the subtleties of user satisfaction and dissatisfaction. This gap in detailed feedback necessitates innovative methods to ensure that the reviews generated are personalized and reflect the users’ genuine sentiments.

Existing methods for review generation often employ encoder-decoder neural network frameworks. These methods typically use discrete attributes such as user IDs, item IDs, and ratings to generate reviews. More recent approaches have incorporated textual information from item titles and historical reviews to improve the quality of the generated reviews. For instance, models such as ExpansionNet and RevGAN integrate phrase information from item titles and sentiment labels into the review generation process, improving the relevance and personalization of the generated reviews.

Researchers from Tianjin University and Du Xiaoman Financial have introduced a novel framework called Review-LLM, designed to harness the capabilities of LLMs such as Llama-3. This framework aggregates user historical behaviors, including item titles and corresponding reviews, to construct input prompts that capture user interest features and review writing styles. The research team has developed this approach to improve the personalization of generated reviews.
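As a rough illustration of this kind of prompt aggregation, the Python sketch below concatenates a user's most recent (item title, review) pairs into a single instruction prompt for a new item. The template wording, the `PastReview` class, `build_history_prompt`, and the `max_items` cap are all hypothetical; the paper's actual prompt format may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PastReview:
    """One historical behavior: the title of a purchased item and the user's review of it."""
    item_title: str
    review_text: str


def build_history_prompt(history: List[PastReview], target_title: str, max_items: int = 5) -> str:
    """Aggregate a user's recent (title, review) pairs into one instruction prompt.

    Hypothetical template: the wording and the max_items cap are illustrative,
    not taken from the Review-LLM paper.
    """
    # Keep only the most recent behaviors so the prompt stays within the context window.
    recent = history[-max_items:] if max_items > 0 else []
    lines = ["Here are products this user bought and the reviews they wrote:"]
    for i, past in enumerate(recent, start=1):
        lines.append(f"{i}. Title: {past.item_title}")
        lines.append(f"   Review: {past.review_text}")
    # The new item for which a review should be generated.
    lines.append(f"Write the review this user would give for: {target_title}")
    return "\n".join(lines)


if __name__ == "__main__":
    history = [
        PastReview("Desk lamp", "Bright, but the switch feels flimsy."),
        PastReview("Coffee grinder", "Consistent grind, a bit loud."),
        PastReview("Yoga mat", "Good grip and easy to clean."),
    ]
    print(build_history_prompt(history, "Electric kettle", max_items=2))
```

Truncating to the most recent behaviors is one simple way to bound prompt length; a real system might instead select the most relevant past items.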

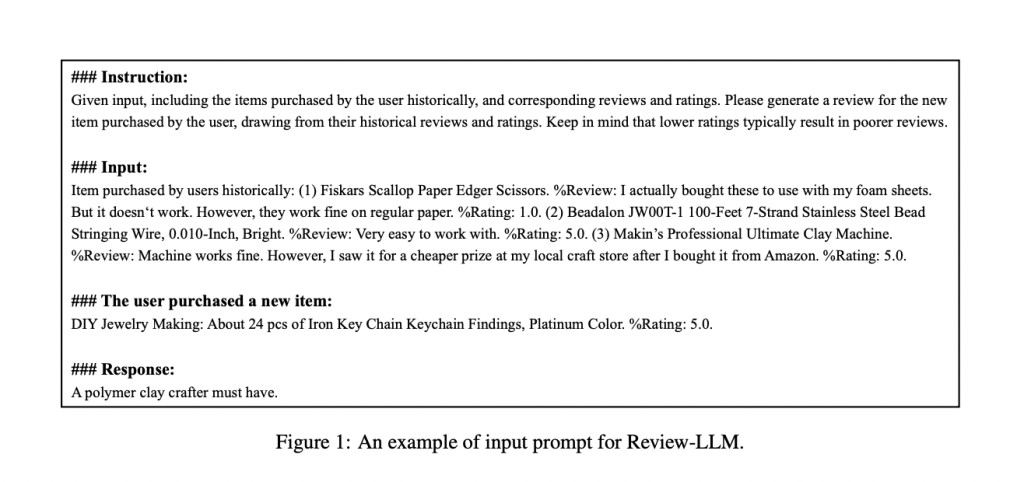

The Review-LLM framework uses supervised fine-tuning, in which the input prompt includes the user’s historical interactions (item titles and reviews) and ratings. This richer input helps the LLM better understand user preferences and generate more accurate, personalized reviews. Fine-tuning adapts the LLM to generate reviews conditioned on this user-specific information. Concretely, the framework builds the input by aggregating the user’s behavior sequence, including item titles and the corresponding reviews, so that the model can learn user interest features and review-writing styles from semantically rich text. Including the user’s rating of the target item in the prompt further helps the model gauge how satisfied the user is.
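A common way to turn such prompts into supervised fine-tuning data is to append the target review and mask the prompt tokens out of the loss, so the model is trained only to produce the review. The sketch below assumes that convention (it is not confirmed to be Review-LLM's exact recipe) and uses a hypothetical `ToyTokenizer` and `build_sft_example` in place of Llama-3's real tokenizer and training pipeline. The value `-100` is the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
from typing import Dict, List, Tuple

# PyTorch's CrossEntropyLoss ignores target positions equal to -100 by default.
IGNORE_INDEX = -100


class ToyTokenizer:
    """Hypothetical word-level tokenizer standing in for the LLM's real tokenizer."""

    def __init__(self) -> None:
        self.vocab: Dict[str, int] = {}

    def encode(self, text: str) -> List[int]:
        ids = []
        for word in text.split():
            # Assign the next free id the first time a word is seen.
            ids.append(self.vocab.setdefault(word, len(self.vocab)))
        return ids


def build_sft_example(
    tok: ToyTokenizer, history_prompt: str, rating: int, review: str
) -> Tuple[List[int], List[int]]:
    """Return (input_ids, labels) for one training example.

    Assumption: prompt tokens are masked out of the loss, a common SFT convention.
    """
    # Append the user's rating so the model can condition on satisfaction level.
    prompt = f"{history_prompt} Rating: {rating} Review:"
    prompt_ids = tok.encode(prompt)
    # "</s>" is a hypothetical end-of-sequence marker for this toy tokenizer.
    review_ids = tok.encode(f"{review} </s>")
    input_ids = prompt_ids + review_ids
    # Only the review tokens are supervised; every prompt position is ignored.
    labels = [IGNORE_INDEX] * len(prompt_ids) + review_ids
    return input_ids, labels


if __name__ == "__main__":
    tok = ToyTokenizer()
    ids, labels = build_sft_example(tok, "Item: USB cable", 2, "Stopped working after a week")
    print(ids)
    print(labels)
```

With a real model, `input_ids` and `labels` would be padded and batched before being fed to the training loop; the rating token sits inside the masked prompt, so the model learns to use it as context rather than to predict it.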

The performance of Review-LLM was evaluated with several metrics, including ROUGE-1, ROUGE-L, and BertScore. The experimental results showed that the fine-tuned model outperformed existing models, including GPT-3.5-Turbo and GPT-4o, at generating personalized reviews. For example, Review-LLM achieved a ROUGE-1 score of 31.15 and a ROUGE-L score of 26.88, compared with GPT-3.5-Turbo’s 17.62 and 10.70, respectively. Particularly noteworthy was the model’s ability to generate negative reviews when users were dissatisfied. A human evaluation involving 10 Ph.D. students familiar with review and text generation further confirmed the model’s effectiveness: the percentage of generated reviews judged semantically similar to the reference reviews was significantly higher for Review-LLM than for the baseline models.
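To make the reported metrics concrete, here is a simplified pure-Python sketch of ROUGE-1 (clipped unigram overlap) and ROUGE-L (longest common subsequence), both computed as F1 scores. It assumes lowercase whitespace tokenization with no punctuation stripping or stemming, so its numbers will not match official ROUGE implementations exactly; the scores quoted above appear to be F-measures on a 0-100 scale. BertScore is omitted because it requires a pretrained encoder.

```python
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Simplified: lowercase + whitespace split (official ROUGE also strips punctuation and may stem).
    return text.lower().split()


def f1(overlap: int, cand_len: int, ref_len: int) -> float:
    # Harmonic mean of precision (vs. candidate length) and recall (vs. reference length).
    if overlap == 0:
        return 0.0
    precision = overlap / cand_len
    recall = overlap / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge_1(candidate: str, reference: str) -> float:
    c, r = tokenize(candidate), tokenize(reference)
    # Clipped unigram overlap: each word counts at most min(count in c, count in r) times.
    overlap = sum((Counter(c) & Counter(r)).values())
    return f1(overlap, len(c), len(r))


def lcs_length(a: List[str], b: List[str]) -> int:
    # Dynamic-programming longest common subsequence, O(len(a) * len(b)) time.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    c, r = tokenize(candidate), tokenize(reference)
    # ROUGE-L rewards in-order matches, so word order matters unlike ROUGE-1.
    return f1(lcs_length(c, r), len(c), len(r))


if __name__ == "__main__":
    ref = "the cable broke after a week"
    cand = "the cable stopped working after a week"
    print(round(100 * rouge_1(cand, ref), 2), round(100 * rouge_l(cand, ref), 2))
```

The gap between ROUGE-1 and ROUGE-L shows up when word order differs: a shuffled candidate can score perfectly on unigram overlap while its longest common subsequence stays short. For reproducing published numbers, a maintained implementation such as Google's `rouge-score` package is a safer choice than this sketch.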

The Review-LLM framework leverages LLMs to generate personalized reviews by incorporating users’ historical behaviors and ratings. This approach addresses the challenge of creating reviews that reflect users’ unique preferences and experiences, improving the accuracy and relevance of review generation in recommender systems. The research indicates that fine-tuning LLMs on prompts that combine user interactions, item titles, reviews, and ratings yields personalized reviews that are more closely aligned with users’ true sentiments.

In conclusion, by aggregating detailed user historical data and applying supervised fine-tuning, the Review-LLM framework produces personalized reviews that reflect user preferences and experiences. This research demonstrates the potential of LLMs to improve the quality and personalization of reviews in recommender systems, addressing the challenge of generating meaningful, user-specific reviews. The experimental results, including the automatic metrics and the human evaluation outcomes, underscore the effectiveness of the Review-LLM approach.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Review-LLM: A Comprehensive AI Framework for Personalized Review Generation Using Large Language Models and User Historical Data in Recommender Systems appeared first on MarkTechPost.
