AWS Database Migration Service (AWS DMS) helps you migrate your data to Amazon Web Services (AWS) efficiently and securely. You can migrate data to open source databases such as MySQL and PostgreSQL from widely used commercial databases such as Oracle and SQL Server. AWS DMS provides the capability to validate your data as you migrate from various supported sources to AWS. Data integrity and accuracy is one of key requirements we often hear about from our customers that determines a successful migration project. In this post, we delve deep into AWS DMS data validation feature. We explore its benefits, configurations, and use cases.

AWS DMS data validation

AWS DMS data validation helps to make sure that your data is migrated accurately from the source to the target. When you choose Validation with data migration with Full Load-only (Migrate existing data) migration type in AWS DMS migration tasks, data validation begins immediately after a full load is completed. For Validation with data migration with Full Load + CDC (Migrate existing data and replicate ongoing changes) migration, validation starts right after after a full load is completed. Then it continues to compare incremental changes for a change data capture (CDC) as they occur. In the case of a CDC-only task with validation enabled, all preexisting data in a table is validated before the validation of new data begins.

During data validation, AWS DMS compares each row in the source with its corresponding row at the target, verifies the rows contain the same data, and reports any mismatches. The value of the columns is compared differently depending on its datatype. For instance, the checksum function is used to compare large binary object (LOB) columns, while actual values are used for other datatypes.

You can enable the data validation feature on migration tasks by using the AWS DMS console or AWS Command Line Interface (AWS CLI). For more information, refer to Migration Validation (Part 2) – Introducing Data Validation in AWS Database Migration Service. Also, you can create validation only tasks to preview and validate data without performing any migration or data replication. This verification consumes additional resources at the source and target as well as additional network resources, and it requires the source and target tables to have a primary key or unique index. It is recommended that you review the limitations of AWS DMS validation before you use it.

Solution overview

This post covers the following topics:

The use cases of AWS DMSÂ validation only task (Relational databases)

How to configure and monitor an AWS DMS validation only task

Data validation only task:- Full Load compared to CDC

How to optimize an AWS DMS validation only task

How to handle validation failure cases

Use cases of AWS DMSÂ validation only task (Relational databases)

There are a few common use cases for validation only tasks in AWS DMS.

Validate data without performing migration – Validation only tasks allow you to validate the data between a source and target without replicating any data. This is useful for testing validation rules and settings.

Isolate validation from migration tasks – By creating separate validation only tasks, you can validate data independently without impacting existing migration tasks. This prevents validation failures from blocking migrations and provides the possibility of running validation only tasks on a separate replication instance (RI). Additionally, this separate RI can have the different DMS release for new features.

Troubleshoot validation failures – In case a validation with migration task fails, a validation only task makes it easier to investigate and troubleshoot the specific failures with the isolation from ongoing migrations.

Scheduled validation – You can schedule a validation only task to run periodically to validate the data as it changes in the source and target databases. This can reduce the load on the source and target database instance as compared to a continuous running task.

Quickly obtain data rows mismatched at a given point in time – You can run a full load validation only task before or during the production cutover window to validate your data before pointing the application to your new target endpoint.

Data repair scripts – When you have created a data repair script that reads from the validation failure table, a full load validation only task can quickly report failures for the script to act upon.

How to configure and monitor the AWS DMS validation only task

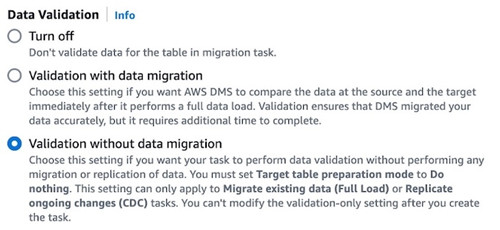

To create a validation only task using the AWS DMS console, when you create an AWS DMS task, set the following settings,

Set TargetTablePrepMode to DO_NOTHING (the default for a validation only task).

Set Migration Type to Migrate existing data or Replicate data changes only.

In Data Validation, select Validation without data migration.

Or you can modify Task settings using the JSON editor:

When data validation is enabled, you can monitor the state of validation in the AWS DMS console or with Amazon CloudWatch.

In case some records in the table don’t match between the source and target, the Validation state is Mismatched records, and you can look at the details in the awsdms_validation_failures_v1 table on the target database. This table is created under the schema that you defined in ControlSchema or the default location on your database engine. If any record enters the ValidationSuspended or the ValidationFailed state, AWS DMS writes diagnostic information to the same table.

The following screenshot shows a PostgreSQL example (target).

You can refer to Insights into AWS DMS resiliency and recovery scenarios with mitigations – Part 2 post for more details on the monitoring of AWS DMS data validation.

Full load and CDCÂ validation only migration types

As mentioned, you can create a validation only task with two types of migration type: full load validation only tasks and CDC validation only tasks. The data validation process and key tuning parameters of these two types are explained in the following section.

Full load validation only

Beginning with AWS DMS version 3.4.6 and higher, a full load validation only task quickly compares all rows from the source and target tables, reports validation failure without delay, and stops the task after validation completion.

AWS DMS divides data logically into partitions (default is 10,000 per partition) and compares this data between corresponding partitions with multiple AWS DMS data validation threads (the default is five). Any data validation error is logged into a control table (awsdms_control.awsdms_validation_failures_v1) in the target database. In the following diagram, the mismatch record is reported due to a mismatch value for the data Row n on column coln.

The following table shows important task parameters for full load validation only tasks. For the complete supported parameters in AWS DMS data validation tasks, refer to Data validation task settings.

Parameters

Default setting

Remarks

PartitionSize

10,000

Specifies the batch size of records to read for comparison from both source and target.

ThreadCount

5

Specifies the number of execution threads that AWS DMS uses during validation.

SkipLobColumns

FALSE

AWS DMS skips data validation for all LOB columns if setting is “TRUE.â€

ValidationPartialLobSize

0

AWS DMS validates all LOB column data by default. Specify the truncation value that you used in the the limited LOB mode. For instance, if you have set the maximum LOB size to 32KB during the data migration task, set “ValidationPartialLobSize†to 32. This ensures AWS DMS validates only the first 32KB of the column data in both the source and target.

CDC validation only

After the CDC validation only task starts, it validates preexisting data first, then validates the incremental changes captured by CDC and reports any mismatches. It continuously revalidates ongoing replication changes. CDC validation retries mismatched rows before failing them to prevent false positives. The amount of time it waits for retries depends on the failure retry period (in minutes) configured in the task setting using the parameters RecordFailureDelayLimitInMinutes and RecordSuspendDelayInMinutes. In the following diagram, at the time T1, the AWS DMS validation thread discovers data discrepancy for Row n. Instead of immediately reporting the failure, it retries and expects ongoing data replication tasks could fix the problem.

At the time T2 in the following diagram, which is still within the failure retry period, an AWS DMS validation thread compares the data for Row n again and finds that the data matches, and it doesn’t report any data validation failures. For any new data found by the AWS DMS validation task, AWS DMS divides them into partitions and assigns data validation threads to validate the data.

The following table shows important task parameters for the CDC validation only task. The should be considered in addition to the parameters highlighted in the preceding full load validation only task. For the complete supported parameters in the AWS DMS data validation task, refer to Data validation task settings.

Parameters

Default setting

Remarks

Failure retry period

RecordFailureDelayInMinutes=0

RecordFailureDelayLimitInMinutes=0

Specifies the delay time in minutes before reporting any validation failure details.

The value is the same as RecordFailureDelayLimitInMinutes if this parameter value is greater than 0. Otherwise, use the value from RecordFailureDelayInMinutes plus the actual AWS DMS CDC task latency. In the data validation only task, the default value for the CDC task latency value is 0.

Table level failure control

TableFailureMaxCount=1000

Specifies the maximum number of rows in one table that can fail validation before validation is suspended for the table.

Table level failure control

RecordSuspendDelayInMinutes=0

Specifies the delay time in minutes before tables are suspended from validation due to an error threshold set in FailureMaxCount.

Task Level Failure Control

FailureMaxCount=10000

Specifies the maximum number of records that can fail validation before validation is suspended for the task.

How to optimize an AWS DMS validation only task

This section describes several validation task settings that could optimize the performance of the AWS DMS validation only task. These settings have to be tested in a lower environment if possible, before implementing them in production. Each validation task is unique and requires fine-tuning at the task level.

ThreadCount: This parameter specifies the number of threads AWS DMS uses when performing data validation. The default is five, and each thread works on data that has not been validated yet to compare and validate. The higher number can improve the overall performance of the validation task, but adding more thread consumes additional resources on the source and target endpoints.

PartitionSize: This setting specifies the number of records each thread reads from the source and target instances. Increasing from the default value of 10,000 allows AWS DMS to read more records for validation, but it induces additional load of the migration components.

RecordFailureDelayLimitInMinutes: This parameter allows you to specify the delay before AWS DMS reports validation failure. Normally, AWS DMS uses the task latency value to allow the actual delay for data changes to be replicated to the target in order to prevent false positives. This setting allows a higher value to be defined.

ValidationQueryCdcDelaySeconds: This is the amount of time before the first validation query is delayed on the source and target. For the validation only task, the default value is 180 seconds. If you notice high latency due to the large amount of updates on the source, you may set this to a higher value to reduce contention on the source instance.

FailureMaxCount: This setting tracks the maximum amount of validation failures before the task is suspended. The default value is 10,000. In situations where you want validation to continue to validate all your records, set this value higher than the number of records in your source table. On the other hand, if you cannot tolerate data mismatches, you can use a lower value that the task is suspended quickly when failures occur, ensuing stricter data integrity checks.

HandleCollationDiff: This option controls how column collation differences are handled. By default, the value is false, which ignores the collation differences between columns, potentially leading to false positives in data validation. Collation determines how records are ordered and how data is matched during comparisons, making it a crucial aspect of data validation. For example, the following illustrates the sort ordering of case-insensitive versus case-sensitive using the string “abcABC†in ASCII representation,

ASCII representation

Case insensitive

sort order

Case sensitive

sort order

a = 01100001

b = 01100010

c = 01100011

A = 01000001

B = 01000010

C = 01000011

A = 01000001

a = 01100001

B = 01000010

b = 01100010

C = 01000011

c = 01100011

A = 01000001

B = 01000010

C = 01000011

a = 01100001

b = 01100010

c = 01100011

Result:

“AaBbCcâ€

“ABCabcâ€

How to handle validation failure cases

In this section, we walk through some of the common scenarios in data validation failures reported by AWS DMS validation tasks and how to fix them.

Truncated LOB

When migrating your database to a target instance, AWS DMS replicates your data, including large objects (LOB), if configured in a migration task. You have a few configurable settings for LOB objects. By default, AWS DMS uses limited LOB mode with a maximum LOB size of 32 KB for an individual LOB. If you use the default configuration, any LOB that exceeds the maximum LOB size is truncated.

The truncated LOB column leads to data validation failure because the source and target have a different value. You can verify the LOB truncation by searching truncation warnings in AWS DMS CloudWatch Logs. To avoid truncation, set the maximum LOB size to match your LOB size on your source database. If you are unsure of your LOB size, you can select inline LOB mode (if the endpoint supports) to replicate both small and large LOBs. It is recommended to set the ValidationPartialLobSize value in your validation-only task to the same value as the max LOB size of your data migration task.

Truncated numbers and timestamps

Numeric and timestamp data usually have different scale and precision in different database engines, and they have different default values for scale and precision if the value is not specified. The following describes a few common examples to illustrate potential problems regarding the numbers and timestamps and how to fix them.

Oracle database as a source

In the Oracle database, for numeric data defined as NUMBER, without scale and precision explicitly mentioned, AWS DMS truncates the data with a scale of 10. For existing data with scales greater 10, the number would be truncated in the target. For example, data 123456.123456789012 would be truncated to 123456.1234567890. To avoid the data being truncated, modify the endpoint of the AWS DMS data replication task with the appropriate value in the Extra Connection Attribute (ECA) parameter NumberDataTypeScale. The default value is 10, but in this example, set the value to 12 to avoid data truncation. Refer to AWS DMS documentation for more information about these parameter settings.

PostgreSQL as a source and target

In PostgreSQL to PostgreSQL migration, when numerical data is stored in a column defined as numeric data type without any precision or scale defined, AWS DMS truncates the data with precision of 28 and scale of 6. To avoid truncation, use ECA MapUnboundedNumericAsString=true at both source and target endpoints to map the unbound numeric data as a string type during the migration.

Timestamp data

Oracle supports up to nine digits of fraction per second (that is, nanosecond) in the TIMESTAMP data type. SQL Server supports up to seven digits of fraction per second in the TIME data type. When this data is mapped to a target such as MySQL or PostgreSQL, both support up to six digits of fraction per second with the TIMESTAMP data type. Then, the AWS DMS data validation task reports mismatched records if a source database (for example, Oracle) has a data type defined as TIMESTAMP(7) to TIMESTAMP(9). The same issue could apply to these TIMESTAMP data type variants, such as TIMESTAMP WITH TIME ZONE and TIMESTAMP WITH LOCAL TIME ZONE in Oracle, and DATEIME2 and DATETIMEOFFSET in SQL Server. If data truncation is acceptable for the business, you can set up override validation rules with custom functions as we show later in this post.

Updated data in the target by trigger and schedulers

AWS DMS migrates data from source to target database and keeps it in sync with replication. Any change that occurs outside of AWS DMS increases the risk of data discrepancy and potentially causes data validation errors. Some of these changes are unintentional, such as:

Database triggers enabled in the target can update data when the AWS DMS task performs data manipulation language (DML) changes on the target tables. As a best practice, it is recommended to disable triggers during the data migration phase and only enable them during the cutover window. If PostgreSQL is your target, you can use the parameter session_replication_role=replica to disable triggers and foreign key constraints.

Scheduler jobs running on the target database might modify data. During the initial schema conversion, you can migrate scheduler jobs to the target as well. If these jobs are enabled, it might change data on the target and impact data validation that requires complex troubleshooting. As a best practice, it is recommended to disable scheduler jobs that potentially modify target data, either internal database scheduler jobs or external jobs scheduled as part of the application.

Character set and collation settings

During data migration, data goes through multiple decoding and encoding phases, reading data from the source database, processing the data in the AWS DMS replication instance, and finally loading the data into the target database based on the target database locale setting. In the event of a conversion, if an inappropriate configuration is set up, such as a Unicode to Latin character set, the data validation task could report these records as ValidationFailed because of record differences caused by lossy conversion.

The accent sensitivity and case sensitivity could cause data errors in the AWS DMS replication task. For example, there is a source database configured to be accent-sensitive and case-sensitive, which is the default behavior. A table with a primary key column on the NAME column has three records AAAA, aaaa, and áááá. When these records reach a target database, accent-insensitive and case-insensitive, such as a MySQL database created with collation utf8mb4_0900_ai_ci, duplicate key errors will occur because they are treated as the same record with variance in accent and case. Due to such unique constraint violation errors, these records would show up in the awsdms_validation_failures_v1 control table with FAILURE_TYPE as MISSING_TARGET.

Effects of transformation and filter rules

AWS DMS has powerful transformation rules to transform your existing schema and data as part of data migration, and it also supports filter rules to migrate data to a target database only when filter criteria are met. These transformation and filter rules used in data migration tasks can unintentionally create data discrepancy in a target database. When you create a data validation only task, it’s critical to use the same transformation and filter rules to avoid data validation issues as side effects of these rules. In case you include transformation or filter rules in your data validation only task, AWS DMS applies the rules first, then validates data and reports failures for mismatched records.

For example, you have set up a migration task with a transformation rule that renames COL1 on the source to col2 on the target. However, if you create a data validation only task without the same transformation rule, AWS DMS is not aware of the underlying table metadata as the TargetTablePrepMode is DO_NOTHING. In this scenario, AWS DMS ignores COL1 and col2 for validation. In a different scenario, you apply filter rules in data migration but omit filter rules in the data validation only task, the records exist on a source database but are not migrated to a target by AWS DMS due to the filter rules. It would show validation errors.

How to utilize override rule in data validation

During data validation, you may find various validation errors, including cases described in this post. For example, an error can be Mismatched records with RECORD_DIFF, though the same data is migrated due to the different character set between source and target engines. Or you may get ValidationFailed and observe errors in CloudWatch Logs such as <No authorized routine named ‘HASH’ of type ‘FUNCTION’ having compatible arguments was found> or <pg_catalog.timezone(unknown, json)> due to the version of your source databases not supporting pre-built functions being used in AWS DMS data validation. For those cases, AWS DMS data validation provides an override-validation-function of validation rule-type in Mapping-rule. You can add your own validation rules using database functions supported by your source and target engines.

For example, for the preceding timestamp data truncation case from Oracle to MySQL migration, the following override rule compares the data using custom functions. This rule converts the source column timestamp value to YYYY-MM-DD HH:MM:SS.ffffff format using Oracle’s to_char function and the target column value to the same format using MySQL’s date_format function.

The following is an example to compare the length of data for a specific column in IBM Db2 to PostgreSQL migration.

The next validation rule compares a column of source and target after converting it to text, encoding in UTF8, calculating as MD5 hash, converting the hash to uppercase, and ensuring the final output type as VARCHAR(64). This could be useful for comparing as a consistent and unique identifier from variable databases, such as from PostgreSQL to PostgreSQL migration.

AWS DMS uses MD5 cryptographic hashes to validate the LOBs such as SYS.DBMS_CRYPTO.HASH(TO_CLOB(“COLUMN_NAME”), 2). When source Oracle enables Federal Information Processing Standards (FIPS) (DPFIPS_140 = true), HASH_MD5 may not work depending on versions. In this case, you can consider the following override rule for PostgreSQL migration.

Cleanup

If you created a test environment to follow along this post, use the information provided in Clean up AWS DMS resources to clean up the test environment you created for testing the solution proposed in this post.

Conclusion

In this post, we demonstrated how to utilize, monitor, optimize, and handle common use cases of AWS DMS data validation only tasks. Depending on your source and target databases and data migration requirements, you can consider AWS DMS data validation only tasks and gain a high level of confidence within your migrations.

If you have any questions, leave them in the comments section.

About the Authors

Jay Shin is a Database Migration Specialist in DMA (Database Migration Accelerator), based out of Singapore, where she is responsible for accelerating customers’ database migration and modernisation with Cloud native database services.

Donghua Luo is a Senior RDS Specialist Solutions Architect. He works with AWS customers to migrate and achieve higher flexibility, scale, and resiliency with database services in AWS cloud.

Barry Ooi is a Senior Database Specialist Solution Architect at AWS. His expertise is in designing, building and implementing data platform using cloud native services for customers as part of their journey on AWS. His areas of interest include data analytics and visualization.

Source: Read More