Advances in hardware and software have made it possible to integrate AI into low-power IoT devices such as ultra-low-power microcontrollers. However, deploying complex artificial neural networks (ANNs) on these devices requires techniques such as quantization and pruning to fit their tight memory and compute budgets. Edge AI models can also make errors when the data distribution shifts between the training and operational environments. Furthermore, many applications now need AI algorithms that adapt to individual users while preserving privacy and reducing reliance on internet connectivity.

One paradigm that has emerged to address these problems is Continual Learning (CL): the ability to keep learning from new situations without forgetting what has already been learned. The best-performing CL solutions, known as rehearsal-based methods, reduce forgetting by training the learner on new data together with stored examples from previously learned tasks. However, this approach requires additional on-device storage. Rehearsal-free approaches avoid storing samples on-device by relying on specific changes to the network architecture or learning strategy that make models resilient to forgetting, possibly at some cost in accuracy. For many ANN models, such as CNNs, the data that must be kept for rehearsal is large and complex, and storing it on-device can burden CL at the edge, particularly for rehearsal-based approaches.
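The rehearsal idea can be sketched as a fixed-size replay buffer whose stored examples are mixed into every new training batch. This is a minimal illustration, not the researchers' method: the class name `ReplayBuffer` is hypothetical, and reservoir sampling is assumed here as the policy for deciding which past examples to keep. The `capacity` parameter is exactly the on-device storage cost that rehearsal-based CL pays.

```python
import random
from typing import Generic, List, TypeVar

T = TypeVar("T")


class ReplayBuffer(Generic[T]):
    """Hypothetical fixed-capacity rehearsal memory filled by reservoir sampling.

    Every example seen so far has the same probability of being kept, so the
    memory footprint stays bounded no matter how long the data stream runs.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[T] = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item: T) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Keep the new item with probability capacity / seen,
            # overwriting a uniformly chosen stored item.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item

    def mixed_batch(self, new_batch: List[T], n_replay: int) -> List[T]:
        """Return the fresh examples followed by up to n_replay stored ones."""
        k = min(n_replay, len(self.items))
        return new_batch + self.rng.sample(self.items, k)


# Usage: stream 1000 examples of an old task, then build a mixed batch for a new task.
buf = ReplayBuffer(capacity=100)
for i in range(1000):
    buf.add(("old_task", i))
batch = buf.mixed_batch([("new_task", j) for j in range(8)], n_replay=8)
print(len(buf.items), len(batch))  # 100 16
```

Rehearsal-free methods would drop the buffer entirely and instead change the architecture or the loss, trading the memory cost for a possible accuracy cost.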

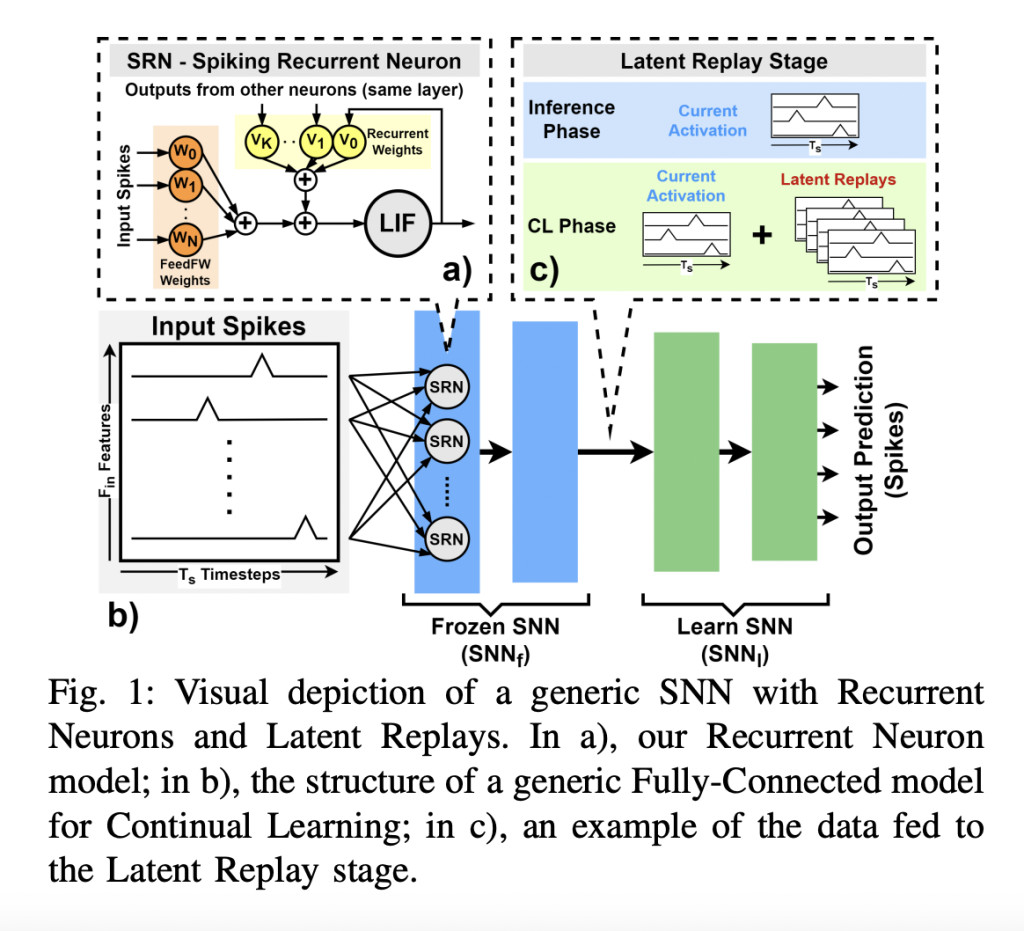

Against this background, Spiking Neural Networks (SNNs) are a promising paradigm for energy-efficient time-series processing, thanks to their high accuracy and efficiency. SNNs mimic the activity of biological neurons by exchanging information through spikes, which are brief, discrete changes in a neuron's membrane potential. These spikes can easily be stored as 1-bit data in digital hardware, which opens up opportunities for building CL solutions. Online learning has been studied in both software and hardware SNNs, but the investigation of rehearsal-based CL techniques in SNNs remains limited.
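To make the "1-bit data" point concrete, the sketch below packs a binary spike raster (time steps × neurons) into bytes, eight spikes per byte, and compares the result with storing the same values as 32-bit floats. The function names, the row-major/least-significant-bit-first layout, and the raster size are all illustrative assumptions, not details from the paper.

```python
from typing import List


def pack_spikes(raster: List[List[int]]) -> bytes:
    """Pack a binary spike raster (time steps x neurons) into bytes, 8 spikes per byte.

    Hypothetical layout: row-major order, least significant bit first.
    """
    flat = [bit for row in raster for bit in row]
    out = bytearray((len(flat) + 7) // 8)
    for i, bit in enumerate(flat):
        if bit:
            out[i // 8] |= 1 << (i % 8)
    return bytes(out)


def unpack_spikes(data: bytes, n_steps: int, n_neurons: int) -> List[List[int]]:
    """Inverse of pack_spikes: recover the time steps x neurons raster."""
    bits = [(data[i // 8] >> (i % 8)) & 1 for i in range(n_steps * n_neurons)]
    return [bits[t * n_neurons:(t + 1) * n_neurons] for t in range(n_steps)]


# Hypothetical raster: 100 time steps x 128 neurons with a sparse spike pattern.
T_STEPS, N = 100, 128
raster = [[1 if (t * 7 + n) % 13 == 0 else 0 for n in range(N)] for t in range(T_STEPS)]
packed = pack_spikes(raster)
assert unpack_spikes(packed, T_STEPS, N) == raster  # packing is lossless
print(len(packed), "bytes packed vs", T_STEPS * N * 4, "bytes as float32")
# 1600 bytes packed vs 51200 bytes as float32
```

The 32× gap is simply 32 bits per float32 value versus 1 bit per spike; it is one reason spike-based activations are attractive to store for rehearsal.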

New research by a team from the University of Bologna, Politecnico di Torino, and ETH Zurich introduces a state-of-the-art, memory-efficient implementation of rehearsal-based CL for SNNs, designed to work seamlessly on resource-constrained devices. The researchers use a rehearsal-based technique called Latent Replay (LR) to enable CL on SNNs. LR stores the activations of an intermediate ("latent") layer for a subset of past examples and replays them alongside new data while training the layers above that point on new tasks. This algorithm has been shown to reach state-of-the-art classification accuracy on CNNs. Because the spike-based information encoding of SNNs is resilient to precision reduction, the researchers apply lossy compression along the time axis, a novel way to shrink the rehearsal memory.
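A minimal sketch of how Latent Replay and time-axis compression could fit together is shown below. It is not the authors' implementation: the article does not describe the exact compression scheme, so this example assumes a hypothetical OR-pooling rule (a neuron spikes in a merged step if it fired at least once in that window of `factor` steps). `LatentReplayMemory`, `compress_time`, `decompress_time`, and `identity_frontend` (a stand-in for the frozen layers below the replay layer) are all hypothetical names.

```python
from typing import Callable, List, Tuple

Raster = List[List[int]]  # time steps x neurons, values in {0, 1}; assumed non-empty


def compress_time(raster: Raster, factor: int) -> Raster:
    """Hypothetical lossy compression: merge each window of `factor` steps into one,
    emitting a spike for a neuron if it fired anywhere in the window (logical OR)."""
    n_neurons = len(raster[0])
    out: Raster = []
    for start in range(0, len(raster), factor):
        window = raster[start:start + factor]
        out.append([int(any(step[n] for step in window)) for n in range(n_neurons)])
    return out


def decompress_time(raster: Raster, factor: int, n_steps: int) -> Raster:
    """Approximate inverse: put each merged spike at the first step of its window.
    Spike timing inside a window is lost, which is what makes the scheme lossy."""
    n_neurons = len(raster[0])
    out: Raster = []
    for step in raster:
        out.append(list(step))
        out.extend([0] * n_neurons for _ in range(factor - 1))
    return out[:n_steps]


class LatentReplayMemory:
    """Stores compressed latent activations produced by the frozen lower layers."""

    def __init__(self, frozen_frontend: Callable[[Raster], Raster], factor: int) -> None:
        self.frontend = frozen_frontend
        self.factor = factor
        # Each entry: (compressed latent raster, original number of steps, label).
        self.entries: List[Tuple[Raster, int, int]] = []

    def store(self, x: Raster, label: int) -> None:
        latent = self.frontend(x)
        self.entries.append((compress_time(latent, self.factor), len(latent), label))

    def replay(self) -> List[Tuple[Raster, int]]:
        """Decompressed latents, to be mixed with new data when training the upper layers."""
        return [(decompress_time(z, self.factor, t), y) for z, t, y in self.entries]


def identity_frontend(x: Raster) -> Raster:
    # Hypothetical stand-in for the frozen layers below the replay layer.
    return x


mem = LatentReplayMemory(identity_frontend, factor=4)
x = [[1, 0], [0, 0], [0, 1], [0, 0], [0, 0], [1, 1]]  # 6 steps x 2 neurons
mem.store(x, label=3)
print(mem.entries[0][0])  # [[1, 1], [1, 1]]
print(mem.replay()[0])    # ([[1, 1], [0, 0], [0, 0], [0, 0], [1, 1], [0, 0]], 3)
```

Here the stored latent shrinks from 12 to 4 bits, at the cost of losing the exact spike timing within each window; the premise of the approach is that SNN accuracy tolerates this kind of loss.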

The team's approach is not only robust but also impressively efficient. They evaluate it in two popular CL configurations, Sample-Incremental and Class-Incremental, on a keyword-spotting application built on a Recurrent SNN. They further test it in an extensive Multi-Class-Incremental CL procedure, in which the network learns 10 new classes on top of an initial set of 10 pre-learned ones.

On the Spiking Heidelberg Digits (SHD) test set, adding a new scenario in the Sample-Incremental setting yielded a Top-1 accuracy of 92.46%, improving accuracy by 23.64% while retaining all previously learned scenarios; this required 6.4 MB of LR data. In the Class-Incremental setting, the method learned a new class with 92.50% accuracy and achieved a Top-1 accuracy of 92% using 3.2 MB of data, losing up to 3.5% on the previous classes. By combining time-axis compression with selecting the best LR index (the layer at which latent activations are stored), the memory needed for rehearsal data was reduced by 140 times, with an accuracy loss of at most 4% compared to the naïve approach. Finally, when learning the set of 10 new keywords in the Multi-Class-Incremental setup, the team reached 78.4% accuracy using compressed rehearsal data. These findings lay the groundwork for a new approach to CL at the edge that is both power-efficient and accurate.
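Why do the LR index and time compression together give such large savings? Rehearsal memory grows with the number of stored samples, the number of neurons at the replay layer, and the number of time steps kept, so shrinking any of these factors shrinks the total multiplicatively. The estimator below illustrates that arithmetic; `replay_memory_bytes` is a hypothetical helper, and the sample counts, layer widths, and compression factor are made-up values, not the configuration used in the paper.

```python
def replay_memory_bytes(n_samples: int, n_neurons: int, n_steps: int,
                        time_factor: int = 1, bits_per_value: int = 1) -> int:
    """Bytes needed to store n_samples latent rasters at the replay layer.

    time_factor: how many time steps are merged into one by compression.
    bits_per_value: 1 for binary spikes.
    """
    steps_kept = -(-n_steps // time_factor)  # ceiling division
    total_bits = n_samples * n_neurons * steps_kept * bits_per_value
    return -(-total_bits // 8)  # round up to whole bytes


# Hypothetical baseline: 500 samples, a 200-neuron replay layer, 100 time steps.
base = replay_memory_bytes(500, 200, 100)
# Hypothetical alternative: a narrower 50-neuron replay layer plus 5x time compression.
small = replay_memory_bytes(500, 50, 100, time_factor=5)
print(base, small, base // small)  # 1250000 62500 20
```

In this toy case a 4× narrower layer and 5× time compression combine into a 20× saving; the same multiplicative effect is what lets the combination of compression and LR-index selection reach much larger reductions.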


The post Efficient Continual Learning for Spiking Neural Networks with Time-Domain Compression appeared first on MarkTechPost.