Research on vision-language models (VLMs) has gained significant momentum, driven by their potential to transform applications such as visual assistance for visually impaired individuals. However, current evaluations of these models often overlook the complexities introduced by multi-object scenarios and diverse cultural contexts. Two recent studies shed light on these issues: one examines object hallucination in vision-language models, and the other examines the importance of cultural inclusivity in their deployment.

Multi-Object Hallucination

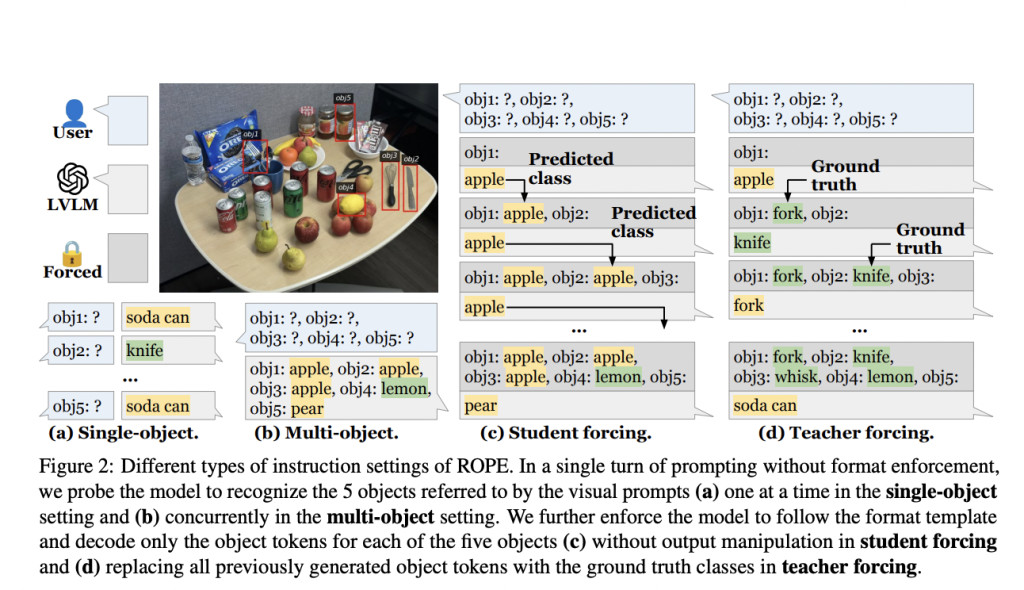

Object hallucination occurs when vision-language models describe objects not present in the given image. This phenomenon, first noted in image captioning tasks, is particularly problematic when models are tasked with recognizing multiple objects simultaneously. The study on multi-object hallucination introduces the Recognition-based Object Probing Evaluation (ROPE) protocol, a framework designed to assess how models handle scenarios involving multiple objects. The evaluation focuses on factors such as the distribution of object classes within images and the influence of visual prompts on model performance.

The ROPE protocol categorizes test scenarios into four subsets: In-the-Wild, Homogeneous, Heterogeneous, and Adversarial. This classification allows for a nuanced analysis of models’ behavior under different conditions. The findings reveal that large vision-language models (LVLMs) tend to hallucinate more frequently when focusing on multiple objects than when focusing on a single one. The study identifies several key factors influencing hallucination behaviors, including data-specific attributes, such as object salience and frequency, and intrinsic model behaviors, such as token entropy and visual modality contribution.
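To make the "token entropy" factor concrete, here is a minimal sketch using the standard Shannon entropy of a next-token probability distribution. The article does not give the study's exact definition, so treat this formula, the function name `token_entropy`, and the toy distributions as assumptions for illustration only.

```python
import math


def token_entropy(probs):
    """Shannon entropy (in nats) of a probability distribution over tokens.

    Hypothetical helper: `probs` is assumed to be a list of probabilities
    that sums to 1, such as a softmax over candidate object-class tokens.
    """
    # Zero-probability entries contribute nothing to the sum.
    return -sum(p * math.log(p) for p in probs if p > 0)


# Toy distributions (made up for illustration, not taken from the study).
confident = [0.97, 0.01, 0.01, 0.01]  # model strongly prefers one class
uncertain = [0.25, 0.25, 0.25, 0.25]  # model is undecided among four classes

print(token_entropy(confident))
print(token_entropy(uncertain))
```

A higher value means the model spreads probability across more candidate tokens, which is one plausible way to read uncertainty at the point where an object class is named.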

The study’s empirical results show that multi-object hallucinations are prevalent across different LVLMs, regardless of their scale or training data. The ROPE benchmark provides a robust method for evaluating and quantifying these hallucinations, highlighting the need for more balanced datasets and advanced training protocols to mitigate this issue.
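As a rough illustration of how hallucinations might be quantified per subset, the sketch below computes the fraction of probed objects whose predicted class differs from the ground truth, split by subset and by single- versus multi-object setting. The record layout, the field names, and the choice to count any class mismatch as a hallucination are assumptions of this sketch, not the ROPE implementation.

```python
from collections import defaultdict


def hallucination_rates(records):
    """Return the fraction of mismatched predictions per (subset, setting).

    Each record is a dict with hypothetical keys:
      subset    - name of the test subset, e.g. "Homogeneous"
      setting   - "single" or "multi" (how many objects were probed at once)
      predicted - class name the model produced for a probed object
      actual    - ground-truth class name for that object
    """
    wrong = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        key = (r["subset"], r["setting"])
        total[key] += 1
        if r["predicted"] != r["actual"]:
            wrong[key] += 1
    return {key: wrong[key] / total[key] for key in total}


# Toy records, invented for illustration only.
toy_records = [
    {"subset": "Homogeneous", "setting": "single", "predicted": "apple", "actual": "apple"},
    {"subset": "Homogeneous", "setting": "multi", "predicted": "apple", "actual": "apple"},
    {"subset": "Homogeneous", "setting": "multi", "predicted": "orange", "actual": "apple"},
    {"subset": "Adversarial", "setting": "single", "predicted": "orange", "actual": "orange"},
    {"subset": "Adversarial", "setting": "multi", "predicted": "apple", "actual": "orange"},
    {"subset": "Adversarial", "setting": "multi", "predicted": "apple", "actual": "apple"},
]

rates = hallucination_rates(toy_records)
for key in sorted(rates):
    print(key, rates[key])
```

Keeping the single and multi settings as separate keys makes it straightforward to compare them within each subset, which is the comparison the findings above rest on.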

Cultural Inclusivity in Vision-Language Models

While the technical performance of vision-language models is crucial, their effectiveness also depends on their ability to cater to diverse cultural contexts. The second study addresses this by proposing a culture-centric evaluation benchmark for VLMs. This research highlights a gap in current evaluation methods, which often fail to consider the cultural backgrounds of users, particularly those who are visually impaired.

The study begins with a survey that gathers preferences from visually impaired individuals about whether and how cultural details should be included in image captions. Based on the survey results, the researchers filter the VizWiz dataset, a collection of images taken by blind individuals, to identify pictures with implicit cultural references. This filtered dataset serves as a benchmark for evaluating the cultural competence of state-of-the-art VLMs.

Several models, both open-access and closed-source, are evaluated using this benchmark. The findings indicate that while closed-source models like GPT-4o and Gemini-1.5-Pro perform better in generating culturally relevant captions, a significant gap remains in their ability to fully capture the nuances of different cultures. The study also reveals that automatic evaluation metrics, commonly used to assess model performance, often fail to align with human judgment, particularly in culturally diverse settings.
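One common way to check whether an automatic metric tracks human judgment is to compute a rank correlation between metric scores and human ratings for the same captions. The sketch below uses Spearman's rank correlation, implemented with the standard library only. The article does not say which statistic the study used, so this choice, the function names, and the toy numbers are all assumptions.

```python
import math


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data, invented for illustration: one entry per caption.
metric_scores = [0.81, 0.40, 0.65, 0.72]  # hypothetical automatic metric
human_ratings = [2, 4, 5, 1]              # hypothetical 1-5 human scores

print(spearman(metric_scores, human_ratings))
```

A value near 1 would mean the metric orders captions the way human raters do, while a value near 0 or below would indicate the kind of misalignment the study reports.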

Comparative Analysis

Taken together, the two studies give a fuller picture of the challenges vision-language models face in real-world applications. The issue of multi-object hallucination underscores the technical limitations of current models, while the focus on cultural inclusivity highlights the need for more human-centered evaluation frameworks.

Technical Improvements:

ROPE Protocol: Introducing automated evaluation protocols that consider object class distributions and visual prompts.

Data Diversity: Ensuring balanced object distributions and diverse annotations in training datasets.

Cultural Considerations:

User-Centered Surveys: Incorporating feedback from visually impaired individuals to determine caption preferences.

Cultural Annotations: Enhancing datasets with culture-specific annotations to improve the cultural competence of VLMs.

Conclusion

Integrating vision-language models into applications for visually impaired users holds great promise. However, addressing the technical and cultural challenges identified in these studies is crucial to realizing this potential. Researchers and developers can create more reliable and user-friendly VLMs by adopting evaluation frameworks like ROPE and incorporating cultural inclusivity into model training and assessment. These efforts can improve the accuracy of these models and help ensure they are better aligned with their users’ diverse needs.


The post Enhancing Vision-Language Models: Addressing Multi-Object Hallucination and Cultural Inclusivity for Improved Visual Assistance in Diverse Contexts appeared first on MarkTechPost.