Quantum computing is evolving quickly, which makes task management with traditional heuristic approaches difficult. Heuristic models often struggle to adapt to the changes and complexity of quantum computing while maintaining system efficiency. Task scheduling is crucial for such systems because it reduces wasted time and improves resource utilization. Existing models are liable to place tasks on unsuitable quantum computers, which then forces frequent rescheduling because of mismatched resources. Quantum computing resources therefore call for new strategies that optimize task completion time and scheduling efficiency.

Currently, quantum task placement relies on heuristic approaches or manually crafted policies. While practical in certain contexts, these methods cannot exploit the full potential of dynamic quantum cloud computing environments. As quantum cloud computing integrates classical cloud resources to host applications that interact with quantum computers remotely, efficient resource management becomes increasingly critical.

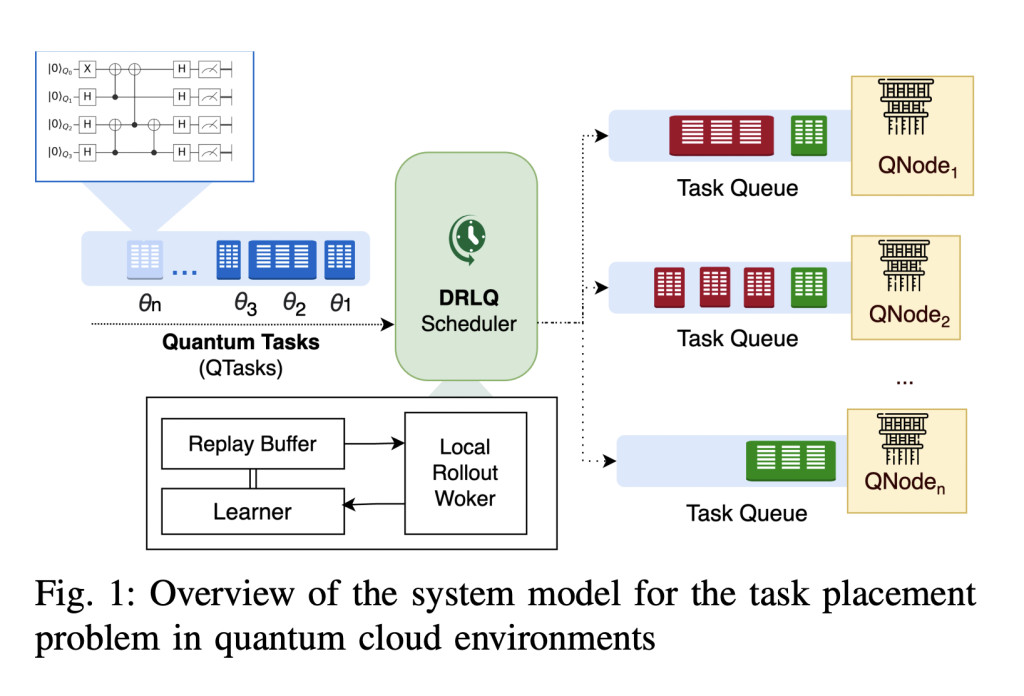

Researchers from the University of Melbourne and Data61, CSIRO have proposed DRLQ, a novel technique based on Deep Reinforcement Learning (DRL) for task placement in quantum cloud computing environments. DRLQ leverages the Deep Q Network (DQN) architecture, enhanced with the Rainbow DQN approach, to create a dynamic task placement strategy. DRLQ aims to address the limitations of traditional heuristic methods by learning optimal task placement policies through continuous interaction with the quantum computing environment, thus enhancing task completion efficiency and reducing the need for rescheduling.

The DRLQ framework employs Deep Q Networks (DQN) combined with the Rainbow DQN approach, which integrates several advanced reinforcement learning techniques, including Double DQN, Prioritized Replay, Multi-step Learning, Distributional RL, and Noisy Nets. These enhancements collectively improve the training efficiency and effectiveness of the reinforcement learning model.
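To make two of the listed Rainbow components concrete, here is a minimal sketch of how Double DQN and multi-step learning combine when computing a training target. It is an illustration of the general techniques, not the DRLQ authors' code: the function names, the plain-list representation of Q-values, and the example numbers are all assumptions chosen for readability.

```python
from typing import Sequence


def n_step_return(rewards: Sequence[float], gamma: float) -> float:
    """Discounted sum of the next n rewards (multi-step learning)."""
    return sum((gamma ** k) * r for k, r in enumerate(rewards))


def double_dqn_target(
    rewards: Sequence[float],
    gamma: float,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
    done: bool,
) -> float:
    """n-step Double DQN target for one transition.

    q_online_next and q_target_next hold the Q-values of every action in the
    state reached after n steps, as estimated by the online and target
    networks respectively (hypothetical inputs for this sketch).
    """
    g = n_step_return(rewards, gamma)
    if done:
        # Episode ended within the n steps: nothing to bootstrap from.
        return g
    # Double DQN: the online network selects the action ...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... and the target network evaluates it.
    return g + (gamma ** len(rewards)) * q_target_next[best_action]


# Example with made-up numbers: two rewards, discount 0.5, three actions.
target = double_dqn_target(
    rewards=[-1.0, -2.0],
    gamma=0.5,
    q_online_next=[0.1, 0.9, 0.3],
    q_target_next=[4.0, 8.0, 16.0],
    done=False,
)
print(target)  # 0.0
```

Separating action selection from action evaluation is what reduces the overestimation bias of plain DQN: here the online network picks action 1, so the target uses 8.0 rather than the target network's own maximum of 16.0.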

The system model includes a set of available quantum computation nodes (QNodes) and a set of incoming quantum tasks (QTasks), each with specific properties such as qubit number, circuit depth, and arrival time. The task placement problem is formulated as selecting the most appropriate QNode for each incoming QTask so as to minimize the total response time and reduce how often tasks must be rescheduled. The state space of the reinforcement learning model consists of features of QNodes and QTasks, while the action space is defined as the selection of a QNode for a QTask. The reward function favors short total completion time and penalizes task rescheduling attempts, encouraging the policy to find placements that reduce completion time and avoid rescheduling.
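The formulation above can be sketched as a small toy environment. This is only an illustration under stated assumptions, not the paper's implementation or the QSimPy API: the QNode fields (`layers_per_second`, `next_free_time`), the execution-time estimate, the penalty value, and the exact reward shape are hypothetical. Only the QTask properties (qubit number, circuit depth, arrival time) come from the description.

```python
from dataclasses import dataclass
from typing import List, Tuple

RESCHEDULE_PENALTY = 100.0  # assumed penalty for an infeasible placement


@dataclass
class QNode:
    num_qubits: int           # qubits available on the device
    layers_per_second: float  # hypothetical speed metric
    next_free_time: float     # time at which the node's queue is empty


@dataclass
class QTask:
    num_qubits: int
    circuit_depth: int
    arrival_time: float


def build_state(task: QTask, nodes: List[QNode]) -> List[float]:
    """State = features of the incoming QTask plus features of every QNode."""
    state = [float(task.num_qubits), float(task.circuit_depth), task.arrival_time]
    for node in nodes:
        state += [float(node.num_qubits), node.layers_per_second, node.next_free_time]
    return state


def place(task: QTask, nodes: List[QNode], action: int) -> Tuple[float, bool]:
    """Apply an action (index of the chosen QNode) and return (reward, placed)."""
    node = nodes[action]
    if task.num_qubits > node.num_qubits:
        # The node cannot run the task, so it would have to be rescheduled.
        return -RESCHEDULE_PENALTY, False
    start = max(task.arrival_time, node.next_free_time)
    finish = start + task.circuit_depth / node.layers_per_second
    node.next_free_time = finish
    # Negative completion time: shorter completion gives a higher reward.
    return -(finish - task.arrival_time), True


nodes = [QNode(5, 10.0, 0.0), QNode(27, 20.0, 4.0)]
task = QTask(num_qubits=7, circuit_depth=40, arrival_time=1.0)
print(len(build_state(task, nodes)))  # 9
print(place(task, nodes, 0))          # (-100.0, False)
print(place(task, nodes, 1))          # (-5.0, True)
```

In this toy setup a DRL agent would receive `build_state(...)` as its observation and learn to prefer actions like the second one, which completes the task without triggering a rescheduling penalty.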

Experiments conducted on the QSimPy simulation toolkit demonstrate that DRLQ significantly improves task execution efficiency. The proposed method reduces total quantum task completion time by 37.81% to 72.93% compared to heuristic approaches. Moreover, DRLQ effectively minimizes the need for task rescheduling, achieving zero rescheduling attempts in evaluations, whereas existing methods required a substantial number of rescheduling attempts.

In conclusion, the paper presents DRLQ, an innovative Deep Reinforcement Learning-based approach for optimizing task placement in quantum cloud computing environments. By leveraging the Rainbow DQN technique, DRLQ addresses the limitations of traditional heuristic methods, providing a dynamic and adaptive solution for efficient quantum cloud resource management. The approach is one of the first in quantum cloud resource management to enable adaptive learning and decision-making.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post DRLQ: A Novel Deep Reinforcement Learning (DRL)-based Technique for Task Placement in Quantum Cloud Computing Environments appeared first on MarkTechPost.
