Recent advances in artificial intelligence are changing the way people engage with video content. The open-source conversational video agent ‘Jockey’ is a good example of this shift. Jockey improves video processing and interaction by combining the video understanding APIs from Twelve Labs with LangGraph.

Twelve Labs offers video understanding APIs that extract detailed insights from video footage. Unlike traditional methods that rely on pre-generated captions, its APIs work directly on the video data, analyzing visuals, audio, on-screen text, and temporal relationships. This multimodal approach lets them interpret videos more precisely and in context.

The main capabilities of the Twelve Labs APIs include classification, question answering, summarization, and video search. Developers can use them to build applications such as AI-generated highlight reels, interactive video FAQs, automated video editing, and content discovery. Because the APIs are built to scale and offer enterprise-grade security, they are well suited to managing large video archives, which opens up new possibilities for video-centric applications.

LangChain’s release of LangGraph v0.1 introduced an adaptable framework for building agentic and multi-agent applications. LangGraph’s customizable API for cognitive architectures gives developers finer control over the flow of code, prompts, and large language model (LLM) calls than its predecessor, LangChain’s AgentExecutor. LangGraph also supports human approval before a task is executed and offers ‘time travel’ capabilities for editing and resuming agent runs, both of which make human-agent collaboration easier.
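To make the approval and ‘time travel’ ideas concrete, here is a minimal plain-Python sketch of a checkpointed run. It is not LangGraph’s API (LangGraph provides these features through its own checkpointers and interrupts); the class `CheckpointedRun`, the step names, and the state keys are hypothetical. Each step’s output is snapshotted, a step listed in `needs_approval` pauses unless a human approves it, and `rewind` reloads an earlier snapshot, applies edits, and resumes from that point.

```python
from copy import deepcopy
from dataclasses import dataclass, field
from typing import Callable

State = dict
Step = tuple[str, Callable[[State], State]]


@dataclass
class CheckpointedRun:
    """Toy runner (hypothetical, not LangGraph's API) that snapshots state after each step."""
    steps: list[Step]
    needs_approval: set[str] = field(default_factory=set)
    history: list[tuple[int, State]] = field(default_factory=list)

    def run(self, state: State, start: int = 0,
            approve: Callable[[str, State], bool] = lambda name, s: True) -> State:
        for i in range(start, len(self.steps)):
            name, fn = self.steps[i]
            # Human-in-the-loop gate: stop before a sensitive step unless it is approved.
            if name in self.needs_approval and not approve(name, state):
                return {**state, "paused_at": name}
            state = fn(deepcopy(state))
            # Checkpoint: remember which step runs next and a copy of the state.
            self.history.append((i + 1, deepcopy(state)))
        return state

    def rewind(self, checkpoint: int, edits: State) -> State:
        """'Time travel': reload an earlier checkpoint, edit its state, and resume from it."""
        next_step, snapshot = self.history[checkpoint]
        return self.run({**snapshot, **edits}, start=next_step)


# Usage: the hypothetical "edit" step needs approval, so the first run pauses before it.
steps: list[Step] = [
    ("search", lambda s: {**s, "clips": [f"clip about {s['query']}"]}),
    ("edit", lambda s: {**s, "video": " + ".join(s["clips"])}),
]
runner = CheckpointedRun(steps, needs_approval={"edit"})
paused = runner.run({"query": "goals"}, approve=lambda name, s: False)
# Rewind to the checkpoint taken after "search", swap in other clips, and resume (auto-approved).
final = runner.rewind(0, {"clips": ["clip A", "clip B"]})
print(paused["paused_at"], "|", final["video"])
```

Snapshotting after every step is what makes rewinding cheap: resuming never re-runs the steps before the chosen checkpoint.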

To complement the framework, LangChain also introduced LangGraph Cloud, currently in closed beta. LangGraph Cloud provides scalable infrastructure for deploying LangGraph agents, managing servers and task queues so that many concurrent users and large states can be handled efficiently. It supports real-world interaction patterns and integrates with LangGraph Studio for visualizing and troubleshooting agent trajectories. Together, these tools let developers build and deploy agentic applications more quickly.

With its latest release, v1.1, Jockey has changed substantially from its original LangChain-based version. Moving to LangGraph has improved scalability and functionality across both the frontend and the backend, and has streamlined Jockey’s architecture, giving more precise and efficient control over complex video workflows.

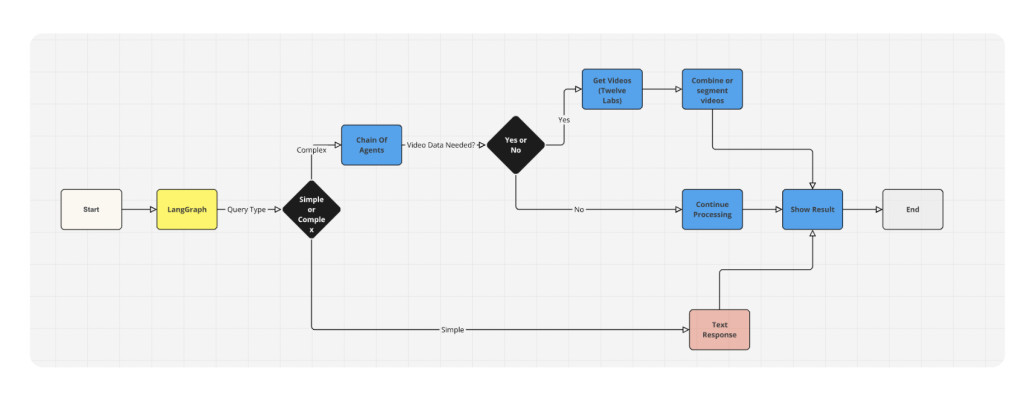

At its core, Jockey combines the capabilities of LLMs with LangGraph’s customizable structure to orchestrate the video APIs from Twelve Labs. Jockey’s decisions are driven by a graph of LangGraph nodes that includes the Supervisor, Planner, video-editing, video-search, and video-text-generation nodes. This configuration is designed to carry out video-related operations smoothly and process user requests quickly.
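The node graph described above can be pictured as a hub-and-spoke loop in which the Supervisor picks the next node and every other node hands control back to it. The following plain-Python sketch reuses the node names from the article, but the routing rule, the hard-coded plan, and the state keys are assumptions for illustration; it is not Jockey’s code, and in LangGraph the same shape would be expressed with a `StateGraph` and conditional edges.

```python
from typing import Callable

State = dict
Node = Callable[[State], State]


def supervisor(state: State) -> State:
    # Hypothetical routing rule: plan first, then run plan steps in order, then finish.
    if "plan" not in state:
        return {**state, "next": "planner"}
    if state["plan"]:
        return {**state, "next": state["plan"][0]}
    return {**state, "next": "END"}


def planner(state: State) -> State:
    # Stand-in for an LLM call that decomposes the request into worker steps.
    return {**state, "plan": ["video-search", "video-text-generation"]}


def make_worker(name: str) -> Node:
    def worker(state: State) -> State:
        # Record the work done and pop this step off the remaining plan.
        return {**state, "done": state.get("done", []) + [name], "plan": state["plan"][1:]}
    return worker


NODES: dict[str, Node] = {
    "supervisor": supervisor,
    "planner": planner,
    "video-search": make_worker("video-search"),
    "video-text-generation": make_worker("video-text-generation"),
    "video-editing": make_worker("video-editing"),
}


def run_graph(state: State, entry: str = "supervisor", max_steps: int = 20) -> State:
    node = entry
    for _ in range(max_steps):
        state = NODES[node](state)
        # Only the supervisor chooses the next node; all others return control to it.
        node = state["next"] if node == "supervisor" else "supervisor"
        if node == "END":
            return state
    raise RuntimeError("step limit reached")


result = run_graph({"request": "Find the goals and summarize the match"})
print(result["done"])
```

The `max_steps` guard is a simple safeguard against routing loops, which matter in any graph where a coordinator can send work back around indefinitely.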

One of LangGraph’s most notable features is the fine-grained control it offers over every stage of the workflow. By carefully controlling what information flows between nodes, Jockey can optimize token usage and improve the accuracy of each node’s responses, which makes video processing both more effective and more efficient.
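One simple way to control information flow is to give each node only the slice of shared state it needs, so its prompt carries fewer tokens. The sketch below assumes hypothetical per-node allow-lists, state keys such as `index_id`, and a word count as a rough stand-in for a real tokenizer; it illustrates the idea rather than how Jockey actually filters state.

```python
State = dict

# Hypothetical allow-lists: the state keys each node is allowed to see.
NODE_INPUTS: dict[str, tuple[str, ...]] = {
    "planner": ("request", "chat_history"),
    "video-search": ("search_query", "index_id"),
    "video-text-generation": ("video_id", "prompt"),
}


def estimate_tokens(value: object) -> int:
    """Crude proxy: count whitespace-separated words (a real system would use the model's tokenizer)."""
    return len(str(value).split())


def view_for(node: str, state: State, max_history: int = 4) -> State:
    """Return only the slice of the shared state that `node` needs."""
    view = {key: state[key] for key in NODE_INPUTS[node] if key in state}
    if "chat_history" in view:
        # Pass only the most recent turns rather than the whole conversation.
        view["chat_history"] = view["chat_history"][-max_history:]
    return view


state: State = {
    "request": "Find the goal in the second half",
    "chat_history": [f"turn {i}: earlier discussion" for i in range(50)],
    "search_query": "goal scored in the second half",
    "index_id": "idx-123",
    "raw_transcript": "word " * 5000,
}
full_cost = estimate_tokens(state)
search_cost = estimate_tokens(view_for("video-search", state))
print(f"full state ~{full_cost} words, video-search view ~{search_cost} words")
```

Besides saving tokens, a narrow view removes irrelevant context (here, a long transcript) that could distract the model and lower the accuracy of a node’s response.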

Jockey uses a multi-agent architecture to handle complex video-related tasks. It has three main components: the Supervisor, the Planner, and the Workers. The Supervisor is the central coordinator: it oversees the process, assigns tasks to the other nodes, handles error recovery, makes sure the plan is followed, and triggers replanning when needed.
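The Supervisor’s error-recovery role can be sketched as a loop that retries a failing step and, if it keeps failing, asks for a revised plan for that step. This is a minimal sketch under stated assumptions: the `supervise` function, the retry and replan limits, the simulated timeout, and the fallback policy are all hypothetical and are not taken from Jockey’s implementation.

```python
from typing import Callable

Step = tuple[str, str]  # (worker name, instruction)
Worker = Callable[[str], str]


def supervise(plan: list[Step], workers: dict[str, Worker],
              replan: Callable[[Step, str], list[Step]],
              max_retries: int = 1, max_replans: int = 2) -> list[str]:
    """Run steps in order; retry a failing step, then ask for a new plan for it."""
    results: list[str] = []
    queue = list(plan)
    replans = 0
    while queue:
        step = queue.pop(0)
        worker_name, instruction = step
        last_error = ""
        for _ in range(max_retries + 1):
            try:
                # An unknown worker name raises KeyError and is treated like any other failure.
                results.append(workers[worker_name](instruction))
                break
            except Exception as err:
                last_error = f"{type(err).__name__}: {err}"
        else:
            # Every attempt failed: give up, or replace the step with a revised plan.
            if replans >= max_replans:
                raise RuntimeError(f"step {step} failed after replanning: {last_error}")
            replans += 1
            queue = replan(step, last_error) + queue
    return results


calls = {"search": 0}


def flaky_search(query: str) -> str:
    # Simulated outage so the recovery path is exercised.
    calls["search"] += 1
    raise TimeoutError("search backend timed out")


workers: dict[str, Worker] = {
    "video-search": flaky_search,
    "video-text-generation": lambda prompt: f"summary of {prompt}",
}


def fallback_replan(step: Step, error: str) -> list[Step]:
    # Hypothetical recovery policy: skip search and summarize the request directly.
    return [("video-text-generation", step[1])]


out = supervise([("video-search", "match highlights")], workers, fallback_replan)
print(out)
```

Capping replans keeps a persistently failing step from looping forever; in an LLM-driven system the `replan` callback would typically be the Planner itself, given the error message as extra context.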

The Planner breaks complex user requests into manageable steps that the Workers can carry out, which is essential for multi-step video-processing workflows. The Workers are specialized agents for video search, video text generation, and video editing, and they execute tasks according to the Planner’s strategy.
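The plan the Planner hands to the Workers can be thought of as an ordered list of (worker, instruction) steps. In Jockey this decomposition is done by an LLM; the sketch below replaces that call with hypothetical keyword rules purely to show the shape of the output. The `PlanStep` class, the `RULES` table, and splitting on "then" are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanStep:
    worker: str
    instruction: str


# Hypothetical keyword rules standing in for the LLM prompt a real planner would use.
RULES: list[tuple[str, str]] = [
    ("find", "video-search"),
    ("search", "video-search"),
    ("summar", "video-text-generation"),
    ("describe", "video-text-generation"),
    ("cut", "video-editing"),
    ("combine", "video-editing"),
]


def make_plan(request: str) -> list[PlanStep]:
    """Split a request on 'then' and assign each clause to the first matching worker."""
    steps: list[PlanStep] = []
    for clause in request.lower().split(" then "):
        clause = clause.strip(" ,.")
        worker = next((w for keyword, w in RULES if keyword in clause), None)
        if worker is None:
            raise ValueError(f"no worker can handle {clause!r}")
        steps.append(PlanStep(worker, clause))
    return steps


plan = make_plan("Find every goal, then combine them into a reel, then summarize the match.")
for step in plan:
    print(step.worker, "->", step.instruction)
```

Raising on an unmatched clause mirrors a useful design rule for any planner: a step that no Worker can execute should be surfaced immediately, not discovered mid-run.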

Jockey’s modular architecture makes it easy to extend and customize. To handle more complex scenarios, developers can extend the state, modify the prompts, or add workers for specific use cases, making Jockey a flexible foundation for building sophisticated video AI applications.
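Extending the state and adding a worker can be sketched with a subclassed state type and a registry that new workers join through a decorator. Everything here is hypothetical, including the `BaseState` and `SubtitleState` classes, the `register` decorator, and the subtitle worker; Jockey’s own extension points may look quite different.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class BaseState:
    """Hypothetical stand-in for the agent's shared state."""
    request: str
    clips: tuple[str, ...] = ()


@dataclass(frozen=True)
class SubtitleState(BaseState):
    # New fields for a custom use case: subtitles in a chosen language.
    subtitle_language: str = "en"
    subtitles: tuple[str, ...] = ()


WORKERS: dict[str, Callable[..., BaseState]] = {}


def register(name: str):
    """Decorator that adds a worker to the registry under `name`."""
    def decorator(fn):
        WORKERS[name] = fn
        return fn
    return decorator


@register("subtitle-generation")
def subtitle_worker(state: SubtitleState) -> SubtitleState:
    # Placeholder logic; a real worker would call a transcription or text-generation API.
    lines = tuple(f"[{state.subtitle_language}] {clip}" for clip in state.clips)
    return replace(state, subtitles=lines)


state = SubtitleState(request="Add French subtitles", clips=("clip-1", "clip-2"),
                      subtitle_language="fr")
updated = WORKERS["subtitle-generation"](state)
print(updated.subtitles)
```

Because the state is frozen, each worker returns a new copy via `dataclasses.replace`, so existing workers that only know `BaseState` fields keep working unchanged.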

In conclusion, Jockey combines the advanced video understanding APIs from Twelve Labs with LangGraph’s adaptable agent framework, opening new opportunities for interactive and intelligent video processing.

The post Meet Jockey: A Conversational Video Agent Powered by LangGraph and Twelve Labs API appeared first on MarkTechPost.
