In solving real-world data science problems, model selection is crucial. Tree ensemble models like XGBoost are traditionally favored for classification and regression for tabular data. Despite their success, deep learning models have recently emerged, claiming superior performance on certain tabular datasets. While deep neural networks excel in fields like image, audio, and text processing, their application to tabular data presents challenges due to data sparsity, mixed feature types, and lack of transparency. Although new deep learning approaches for tabular data have been proposed, inconsistent benchmarking and evaluation make it unclear if they truly outperform established models like XGBoost.

Researchers from the IT AI Group at Intel rigorously compared deep learning models to XGBoost for tabular data to determine their efficacy. Evaluating performance across various datasets, they found that XGBoost consistently outperformed deep learning models, even on datasets originally used to showcase the deep models. Additionally, XGBoost required significantly less hyperparameter tuning. However, combining deep models with XGBoost in an ensemble yielded the best results, surpassing both standalone XGBoost and deep models. This study highlights that, despite advancements in deep learning, XGBoost remains a superior and efficient choice for tabular data problems.

Traditionally, Gradient-Boosted Decision Trees (GBDT), like XGBoost, LightGBM, and CatBoost, dominate tabular data applications due to their strong performance. However, recent studies have introduced deep learning models tailored for tabular data, such as TabNet, NODE, DNF-Net, and 1D-CNN, which show promise in outperforming traditional methods. These models include differentiable trees and attention-based approaches, yet GBDTs remain competitive. Ensemble learning, combining multiple models, can further enhance performance. The researchers evaluated these deep models and GBDTs across diverse datasets, finding that XGBoost generally excels, but combining deep models with XGBoost yields the best outcomes.

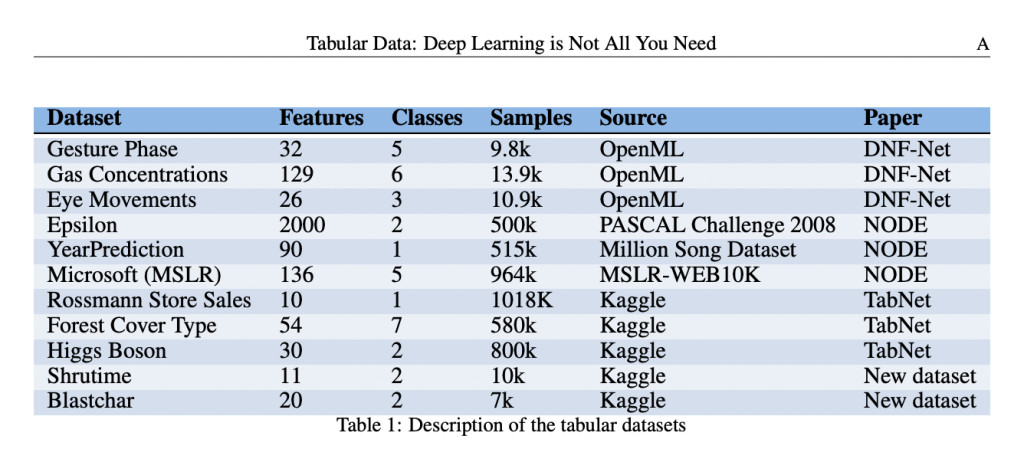

The study thoroughly compared deep learning models and traditional algorithms like XGBoost across 11 varied tabular datasets. The deep learning models examined included NODE, DNF-Net, and TabNet, and they were evaluated alongside XGBoost and ensemble approaches. These datasets, selected from prominent repositories and Kaggle competitions, displayed a broad range of characteristics in terms of features, classes, and sample sizes. The evaluation criteria encompassed accuracy, efficiency in training and inference, and the time needed for hyperparameter tuning. Findings revealed that XGBoost consistently outperformed the deep learning models on most datasets not part of the models’ original training sets. Specifically, XGBoost achieved superior performance on 8 of 11 datasets, demonstrating its versatility across different domains. Conversely, deep learning models showed their best performance only on datasets they were originally designed for, implying a tendency to overfit their initial training data.

Furthermore, the study examined the efficacy of combining deep learning models with XGBoost in ensemble methods. It was observed that ensembles integrating both deep models and XGBoost often yielded superior results compared to individual models or ensembles of classical machine learning models like SVM and CatBoost. This synergy highlights the complementary strengths of deep learning and tree-based models, where deep networks capture complex patterns, and XGBoost provides robust, generalized performance. Despite the computational advantages of deep models, XGBoost proved significantly faster and more efficient in hyperparameter optimization, converging to optimal performance with fewer iterations and computational resources. Overall, the findings underscore the need for careful consideration of model selection and the benefits of combining different algorithmic approaches to leverage their unique strengths for various tabular data challenges.

The study evaluated the performance of deep learning models on tabular datasets and found them to be generally less effective than XGBoost on datasets outside their original papers. An ensemble of deep models and XGBoost performed better than any single model or classical ensemble, highlighting the strengths of combining methods. XGBoost was easier to optimize and more efficient, making it preferable under time constraints. However, integrating deep models can enhance performance. Future research should test models on diverse datasets and focus on developing deep models that are easier to optimize and can better compete with XGBoost.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.Â

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 46k+ ML SubReddit

The post Beyond Deep Learning: Evaluating and Enhancing Model Performance for Tabular Data with XGBoost and Ensembles appeared first on MarkTechPost.

Source: Read MoreÂ