Large language models (LLMs) often struggle to retrieve relevant information from the middle of long input contexts, a failure mode known as “lost-in-the-middle” behavior. The research paper addresses this weakness in how LLMs handle long-context inputs. Specifically, models such as GPT-3.5 Turbo and Mistral 7B often struggle to retrieve information accurately and to maintain their reasoning capabilities across extensive textual data. This limitation hampers their effectiveness in tasks that require processing and reasoning over long passages, such as multi-document question answering (MDQA) and flexible length question answering (FLenQA).

Current methods to enhance the performance of LLMs in long-context settings typically involve finetuning on real-world datasets. However, these datasets often include outdated or irrelevant information, which can lead to hallucinations and other inaccuracies. Evaluations on benchmarks such as MDQA and FLenQA have shown that LLMs tend to exhibit “lost-in-the-middle” behavior: performance is best when the relevant information sits at the beginning or end of the input context and deteriorates when it sits in the middle.

A team of researchers from the University of Wisconsin-Madison proposes a novel finetuning approach utilizing a carefully designed synthetic dataset to address these challenges. This dataset comprises numerical key-value retrieval tasks designed to enhance the LLMs’ ability to handle long contexts more effectively. By using synthetic data that avoids the pitfalls of outdated or irrelevant information, the researchers aim to improve LLMs’ information retrieval and reasoning capabilities without introducing hallucinations.

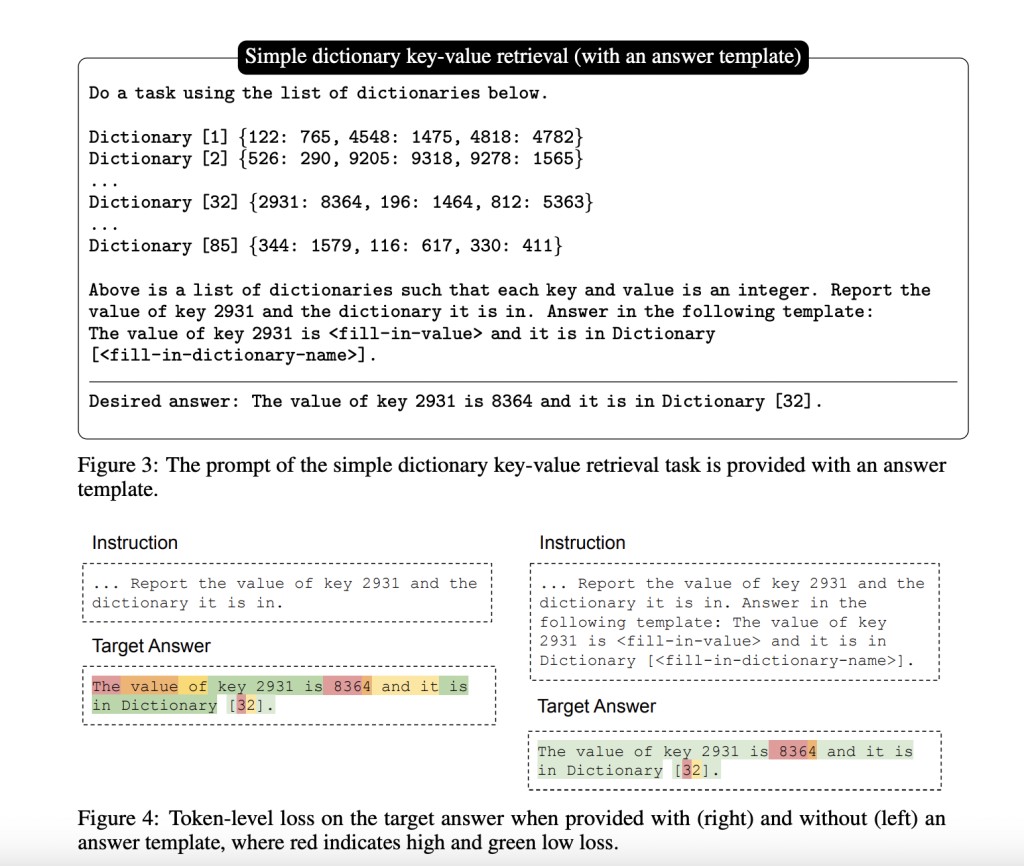

The proposed synthetic dataset consists of simple dictionary key-value retrieval tasks, where each task involves multiple dictionaries with a few keys each. For instance, the dataset for Mistral 7B includes 350 samples, each containing 85 dictionaries, resulting in prompts with roughly 3900 tokens. Finetuning is conducted on the answer part of these tasks, masking out other elements to focus the model’s learning process.
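To make the task format concrete, here is a minimal Python sketch of how one such sample could be generated and how the loss could be restricted to the answer tokens. The prompt wording, the number of keys per dictionary, the integer ranges, and the helper names `make_task` and `build_labels` are illustrative assumptions, not the authors' exact setup. The value `-100` is the default `ignore_index` of PyTorch's cross-entropy loss, used here as a common convention for masked positions.

```python
import random

# Label value that PyTorch's cross-entropy loss skips by default (ignore_index).
IGNORE_INDEX = -100


def make_task(num_dicts=85, keys_per_dict=4, seed=None):
    """Build one key-value retrieval sample and return (prompt, answer).

    The prompt template and keys_per_dict are assumptions made for illustration.
    """
    rng = random.Random(seed)
    # Draw unique integer keys so the target key occurs in exactly one dictionary.
    keys = rng.sample(range(10_000), num_dicts * keys_per_dict)
    dicts = []
    for i in range(num_dicts):
        chunk = keys[i * keys_per_dict:(i + 1) * keys_per_dict]
        dicts.append({k: rng.randrange(10_000) for k in chunk})

    # Pick the dictionary and key the model must retrieve.
    gold_idx = rng.randrange(num_dicts)
    gold_key = rng.choice(list(dicts[gold_idx]))

    lines = [f"Dictionary [{i}] {d}" for i, d in enumerate(dicts)]
    prompt = "\n".join(lines) + f"\nFind the value of key {gold_key}."
    answer = f"The value of key {gold_key} is {dicts[gold_idx][gold_key]}."
    return prompt, answer


def build_labels(prompt_ids, answer_ids):
    """Concatenate token ids and mask the prompt so only the answer is trained on."""
    input_ids = list(prompt_ids) + list(answer_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(answer_ids)
    return input_ids, labels


if __name__ == "__main__":
    prompt, answer = make_task(num_dicts=3, keys_per_dict=2, seed=0)
    print(prompt)
    print(answer)
```

In a real pipeline, `prompt_ids` and `answer_ids` would come from the model's tokenizer; the masking step itself is independent of how the tokens are produced.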

Experiments demonstrate that this approach significantly enhances the performance of LLMs in long-context tasks. For example, finetuning GPT-3.5 Turbo on the synthetic data resulted in a 10.5% improvement on the 20-document MDQA benchmark at the tenth position. Moreover, this method mitigates the “lost-in-the-middle” phenomenon and reduces the primacy bias, leading to more accurate information retrieval across the entire input context. The researchers also compared models finetuned on the synthetic data against models finetuned on real-world datasets, and the synthetic approach maintained more consistent accuracy across different context positions.
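A position-wise evaluation of this kind can be sketched in Python as follows. This is a hypothetical harness, not the paper's evaluation code: `accuracy_by_position`, the `answer_fn` callback wrapping the model, the example tuple layout, and the substring-match scoring are all assumptions made for illustration.

```python
def accuracy_by_position(answer_fn, examples, num_docs=20):
    """Return {position: accuracy}, placing the gold document at each 1-based position.

    answer_fn(documents, question) -> str is a hypothetical wrapper around the model.
    Each example is a tuple (question, gold_doc, distractors, gold_answer) and must
    provide at least num_docs - 1 distractor documents.
    """
    results = {}
    for pos in range(1, num_docs + 1):
        correct = 0
        for question, gold_doc, distractors, gold_answer in examples:
            docs = list(distractors[:num_docs - 1])
            docs.insert(pos - 1, gold_doc)  # gold document lands at position `pos`
            prediction = answer_fn(docs, question)
            # Simple substring match; real benchmarks may score differently.
            correct += gold_answer.lower() in prediction.lower()
        results[pos] = correct / len(examples)
    return results


def edge_only_model(docs, question):
    """Toy stand-in that only 'reads' the first and last documents."""
    return docs[0] + " " + docs[-1]


if __name__ == "__main__":
    examples = [("Where?", "The answer is Paris.", ["noise"] * 19, "Paris")]
    acc = accuracy_by_position(edge_only_model, examples)
    print(acc[1], acc[10], acc[20])
```

The toy model deliberately mimics an extreme form of the position effect: it succeeds only when the gold document is first or last. A flatter accuracy curve across positions is what the finetuned models are reported to achieve.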

The study introduces an innovative approach to finetuning LLMs using synthetic data, significantly enhancing their performance in long-context settings. The proposed method demonstrates substantial improvements over traditional finetuning techniques by addressing the “lost-in-the-middle” phenomenon and reducing primacy bias. This research highlights the potential of synthetic datasets in overcoming the limitations of real-world data, paving the way for more effective and reliable LLMs in handling extensive textual information.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers at the University of Wisconsin-Madison Propose a Finetuning Approach Utilizing a Carefully Designed Synthetic Dataset Comprising Numerical Key-Value Retrieval Tasks appeared first on MarkTechPost.
