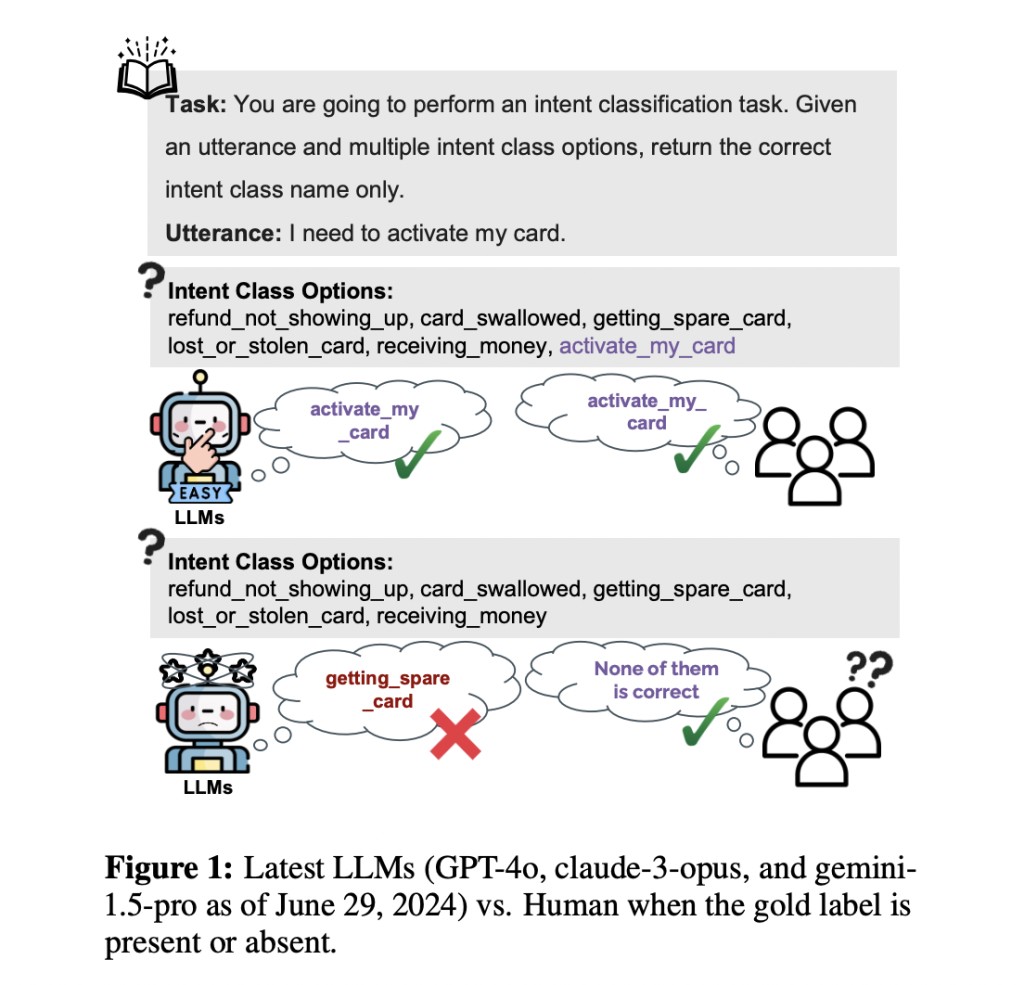

Large Language Models (LLMs) have shown impressive performance across a range of tasks in recent years, especially classification tasks. These models perform strongly when the label set or answer options include the correct answer. A significant limitation is that when the gold label is deliberately left out, LLMs still choose among the remaining options, even though none of them is correct. This raises significant concerns about these models’ actual comprehension and intelligence in classification scenarios.

In the context of LLMs, this failure to express uncertainty raises two primary concerns:

Versatility and Label Processing: LLMs can work with any set of labels, even ones whose accuracy is debatable. To avoid misleading users, they should ideally imitate human behavior by recognizing accurate labels or pointing out when they are absent. Due to their reliance on predetermined labels, traditional classifiers are not as flexible.

Discriminative vs. Generative Capabilities: Because LLMs are mainly intended to be generative models, they frequently forgo discriminative capabilities. High scores on performance metrics suggest that classification tasks are easy for them. However, the existing benchmarks might not accurately reflect human-like behavior, which could lead to overestimating the usefulness of LLMs.

In recent research, three classification tasks have been provided as benchmarks to support further research.

BANK77: An intent classification task.

MC-TEST: A multiple-choice question-answering task.

EQUINFER: A recently developed task that determines which of four options, based on surrounding paragraphs in scientific papers, is the correct equation.

This set of benchmarks has been named KNOW-NO, as it covers classification problems with different label sizes, lengths, and scopes, including instance-level and task-level label spaces.
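To make the setup concrete, the following is a minimal sketch of how a single test instance without the gold label could be constructed. The `Instance` class, the helper names, the example intent labels, and the prompt wording (including the "none of the above" hint) are all illustrative assumptions, not the benchmark’s actual code or prompts.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """One classification example (hypothetical structure for illustration)."""
    text: str
    options: list[str]
    gold: str


def drop_gold(instance: Instance) -> list[str]:
    """Return the option list with the gold label removed."""
    return [opt for opt in instance.options if opt != instance.gold]


def build_prompt(text: str, options: list[str]) -> str:
    """Format the input and the candidate options as a plain-text prompt.

    The final instruction line is an assumed wording, not taken from the paper.
    """
    lines = [f"Input: {text}", "Options:"]
    lines += [f"{i + 1}. {opt}" for i, opt in enumerate(options)]
    lines.append(
        "Answer with one option, or say 'none of the above' if no option is correct."
    )
    return "\n".join(lines)


# Example: an intent-classification instance with the correct intent removed.
example = Instance(
    text="I lost my card",
    options=["lost_or_stolen_card", "card_arrival", "top_up_failed"],
    gold="lost_or_stolen_card",
)
prompt_without_gold = build_prompt(example.text, drop_gold(example))
```

A model that behaves as a human would is expected to reject every option in `prompt_without_gold` rather than pick one of the two remaining intents.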

A new metric named OMNIACCURACY has also been presented to assess LLMs’ performance more faithfully. This metric evaluates LLMs’ classification skills by combining their results from the following two dimensions of the KNOW-NO framework.

ACCURACY-W/-GOLD: This measures the conventional accuracy when the right label is provided.

ACCURACY-W/O-GOLD: This measures accuracy when the correct label is not available.

OMNIACCURACY seeks to better approximate human-level discrimination intelligence in classification tasks by demonstrating the LLMs’ capacity to manage both situations in which correct labels are present and those in which they are not.
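As a rough illustration of how the two dimensions could be combined, here is a minimal sketch. This summary does not state the exact formula, so the unweighted mean used in `omni_accuracy` is an assumption, as is the scoring rule that a prediction counts as correct without the gold label whenever it is not one of the offered (all incorrect) options. All function names are hypothetical.

```python
def accuracy_with_gold(predictions, gold_labels):
    """Conventional accuracy: fraction of predictions that equal the gold label."""
    correct = sum(p == g for p, g in zip(predictions, gold_labels))
    return correct / len(gold_labels)


def accuracy_without_gold(predictions, offered_options):
    """Fraction of instances where the model picks none of the offered options.

    Every offered option is wrong in this setting, so declining to choose
    one is treated as the correct behavior (assumed scoring rule).
    """
    rejected = sum(p not in opts for p, opts in zip(predictions, offered_options))
    return rejected / len(offered_options)


def omni_accuracy(acc_with, acc_without):
    """Combine both settings; an unweighted mean is assumed here."""
    return (acc_with + acc_without) / 2


# Example: a model that is strong with gold labels but rarely rejects bad options.
acc_w = accuracy_with_gold(["a", "b", "c", "d"], ["a", "b", "x", "d"])
acc_wo = accuracy_without_gold(
    ["none", "b", "c", "y"],
    [["a", "b"], ["b", "c"], ["a", "c"], ["x", "y"]],
)
combined = omni_accuracy(acc_w, acc_wo)
```

Under these assumptions, a model that scores well only when the right answer is available ends up with a much lower combined score, which is the behavior the metric is meant to expose.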

The team has summarized their primary contributions as follows.

This study is the first to draw attention to the limitations of LLMs when correct answers are absent from classification tasks.

CLASSIFY-W/O-GOLD has been introduced as a new framework for assessing LLMs, and the task is defined accordingly.

The KNOW-NO benchmark has been presented, which comprises one newly created task and two well-known classification tasks. The purpose of this benchmark is to assess LLMs in the CLASSIFY-W/O-GOLD scenario.

The OMNIACCURACY metric has been proposed, which combines results from the settings where correct labels are present and where they are absent in order to evaluate LLM performance in classification tasks. It provides a more complete assessment of the models’ capabilities, giving a clearer picture of how well they perform in both situations.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Understanding the Limitations of Large Language Models (LLMs): New Benchmarks and Metrics for Classification Tasks appeared first on MarkTechPost.