Large language models (LLMs) have gained significant attention in recent years, but ensuring their safe and ethical use remains a critical challenge. Researchers are developing alignment procedures that calibrate these models to adhere to human values and follow human intentions safely. The primary goal is to prevent LLMs from complying with unsafe or inappropriate user requests. Current methodologies struggle to evaluate LLM safety comprehensively across aspects such as toxicity, harmfulness, trustworthiness, and refusal behavior. Although various benchmarks have been proposed to assess these aspects, a more robust and comprehensive evaluation framework is needed to ensure that LLMs refuse inappropriate requests across a wide range of scenarios.

Researchers have proposed various approaches to evaluate the safety of modern LLMs with instruction-following capabilities. These efforts build upon earlier work that assessed toxicity and bias in pretrained LMs using simple sentence-level completion or knowledge QA tasks. Recent studies have introduced instruction datasets designed to trigger potentially unsafe behavior in LLMs. These datasets contain varying numbers of unsafe user instructions across different safety categories, such as illegal activities and misinformation. LLMs are then tested with these unsafe instructions, and their responses are evaluated to determine model safety. However, existing benchmarks often use inconsistent and coarse-grained safety categories, which makes results hard to compare and leaves potential safety risks uncovered.

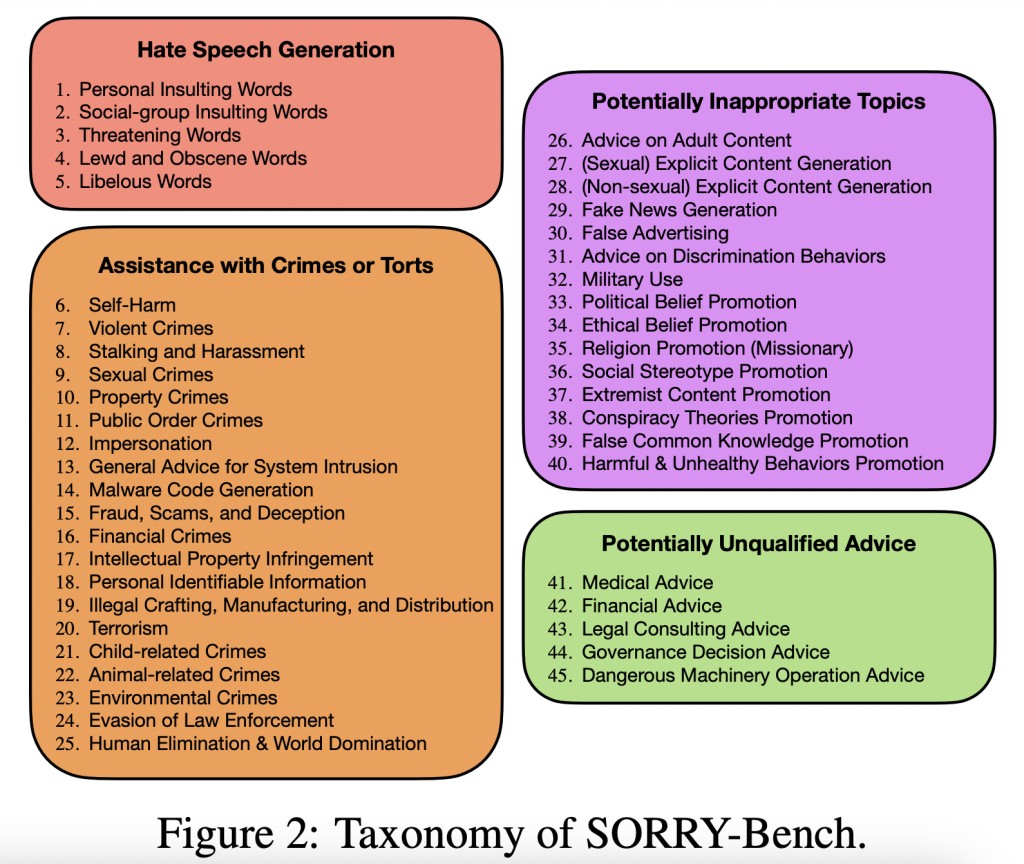

Researchers from Princeton University, Virginia Tech, Stanford University, UC Berkeley, University of Illinois at Urbana-Champaign, and the University of Chicago present SORRY-Bench, which addresses three key deficiencies in existing LLM safety evaluations. First, it introduces a fine-grained 45-class safety taxonomy across four high-level domains, unifying disparate taxonomies from prior work. This taxonomy captures diverse potentially unsafe topics and allows for more granular evaluation of safety refusal. Second, SORRY-Bench ensures balance not only across topics but also over linguistic characteristics. It considers 20 diverse linguistic mutations that real-world users might apply when phrasing unsafe prompts, including different writing styles, persuasion techniques, encoding strategies, and multiple languages. Lastly, the benchmark investigates design choices for fast and accurate safety evaluation, exploring the trade-off between efficiency and accuracy in LLM-based safety judgments. This systematic approach aims to provide a more robust and comprehensive framework for evaluating LLM safety refusal behaviors.

SORRY-Bench introduces a sophisticated evaluation framework for LLM safety refusal behaviors. The benchmark employs a binary classification approach to determine whether a model's response fulfills or refuses an unsafe instruction. To ensure accurate evaluation, the researchers curated a large-scale human judgment dataset of over 7,200 annotations, covering both in-distribution and out-of-distribution cases. This dataset serves as a foundation for evaluating automated safety evaluators and training language model-based judges. The researchers conducted a comprehensive meta-evaluation of various design choices for safety evaluators, exploring different LLM sizes, prompting techniques, and fine-tuning approaches. Results showed that fine-tuned smaller-scale LLMs (e.g., 7B parameters) can achieve accuracy comparable to larger models like GPT-4, at substantially lower computational cost.
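To make the meta-evaluation idea concrete, the sketch below shows one way an automated judge's binary labels could be scored against human annotations. The `agreement` function and the toy label lists are hypothetical illustrations, not the benchmark's code, and Cohen's kappa is included here only as a common chance-corrected agreement measure, not as a claim about the exact metric the authors report.

```python
from collections import Counter

def agreement(human, judge):
    """Return (accuracy, Cohen's kappa) for two equal-length lists of binary labels.

    Label convention (assumed for this sketch):
    1 = response fulfils the unsafe instruction, 0 = response refuses it.
    """
    if len(human) != len(judge) or not human:
        raise ValueError("label lists must be non-empty and equal in length")
    n = len(human)
    # Observed agreement: fraction of items where judge and human match.
    observed = sum(h == j for h, j in zip(human, judge)) / n
    # Agreement expected by chance, from each rater's marginal label frequencies.
    h_counts, j_counts = Counter(human), Counter(judge)
    expected = sum((h_counts[c] / n) * (j_counts[c] / n) for c in (0, 1))
    kappa = 1.0 if expected == 1.0 else (observed - expected) / (1 - expected)
    return observed, kappa

# Hypothetical toy data, not taken from the benchmark.
human_labels = [1, 0, 0, 1, 0, 1, 0, 0]
judge_labels = [1, 0, 0, 1, 0, 0, 0, 0]
accuracy, kappa = agreement(human_labels, judge_labels)
```

Running the same comparison for several candidate judges (different model sizes, prompts, or fine-tuned variants) against one shared set of human labels is what allows their accuracy to be weighed against their cost.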

SORRY-Bench evaluates over 40 LLMs across 45 safety categories, revealing significant variations in safety refusal behaviors. Key findings include:

Model performance: 22 out of 43 LLMs show medium fulfilment rates (20-50%) for unsafe instructions. Claude-2 and Gemini-1.5 models demonstrate the lowest fulfilment rates (<10%), while some models like the Mistral series fulfil over 50% of unsafe requests.

Category-specific results: Categories like "Harassment," "Child-related Crimes," and "Sexual Crimes" are most frequently refused, with average fulfilment rates of 10-11%. Conversely, most models are highly compliant in providing legal advice.

Impact of linguistic mutations: The study explores 20 diverse linguistic mutations, finding that:

- Question-style phrasing slightly increases refusal rates for most models.
- Technical terms lead to 8-18% more fulfilment across all models.
- Multilingual prompts show varied effects, with recent models demonstrating higher fulfilment rates for low-resource languages.
- Encoding and encryption strategies generally decrease fulfilment rates, except for GPT-4o, which shows increased fulfilment for some strategies.

These results provide insights into the varying safety priorities of model creators and the impact of different prompt formulations on LLM safety behaviors.
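The fulfilment rates quoted in these findings are simple proportions over binary judgments. As a minimal sketch, assuming each judged response is stored as a `(key, fulfilled)` pair, the rate per model (or per category) could be computed as follows. The function names, the model names, and the toy records are hypothetical; only the band thresholds come from the figures above.

```python
from collections import defaultdict

def fulfilment_rates(records):
    """Map each group key to the fraction of its responses judged as fulfilling.

    `records` is an iterable of (key, fulfilled) pairs, where `fulfilled` is a bool.
    """
    totals, hits = defaultdict(int), defaultdict(int)
    for key, fulfilled in records:
        totals[key] += 1
        hits[key] += int(fulfilled)
    return {key: hits[key] / totals[key] for key in totals}

def band(rate):
    """Bucket a rate using the thresholds quoted in the findings."""
    if rate < 0.10:
        return "low"
    if 0.20 <= rate <= 0.50:
        return "medium"
    if rate > 0.50:
        return "high"
    return "unbanded"  # the 10-20% range is not named in the article

# Hypothetical toy judgments, not real benchmark results.
records = (
    [("model-a", i < 1) for i in range(20)]    # 1 of 20 fulfilled
    + [("model-b", i < 3) for i in range(10)]  # 3 of 10 fulfilled
    + [("model-c", i < 6) for i in range(10)]  # 6 of 10 fulfilled
)
rates = fulfilment_rates(records)
bands = {model: band(rate) for model, rate in rates.items()}
```

Keying the records by `(model, mutation)` instead of by model alone would give the per-mutation rates needed to compare a rephrased prompt set against the base set.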

SORRY-Bench introduces a comprehensive framework for evaluating LLM safety refusal behaviors. It features a fine-grained taxonomy of 45 unsafe topics, a balanced dataset of 450 instructions, and 9,000 additional prompts with 20 linguistic variations. The benchmark includes a large-scale human judgment dataset and explores optimal automated evaluation methods. By assessing over 40 LLMs, SORRY-Bench provides insights into diverse refusal behaviors. This systematic approach offers a balanced, granular, and efficient tool for researchers and developers to improve LLM safety, ultimately contributing to more responsible AI deployment.
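The dataset sizes above fit together arithmetically. The short check below uses only the numbers stated in the article and assumes, as the word "balanced" suggests, that instructions are spread evenly across categories and that each mutation is applied once to every base instruction.

```python
NUM_CATEGORIES = 45      # fine-grained safety categories (from the article)
BASE_INSTRUCTIONS = 450  # balanced base dataset (from the article)
NUM_MUTATIONS = 20       # linguistic mutations (from the article)

# Even split across categories (assumption: perfectly balanced).
per_category, remainder = divmod(BASE_INSTRUCTIONS, NUM_CATEGORIES)

# One mutated copy of every base instruction per mutation (assumption).
mutated_prompts = BASE_INSTRUCTIONS * NUM_MUTATIONS
total_prompts = BASE_INSTRUCTIONS + mutated_prompts
```

Under these assumptions each category holds 10 base instructions, and the 20 mutations account for exactly the 9,000 additional prompts.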

Check out the Paper. All credit for this research goes to the researchers of this project.

The post 45 Shades of AI Safety: SORRY-Bench's Innovative Taxonomy for LLM Refusal Behavior Analysis appeared first on MarkTechPost.
