Applications that require high resiliency across AWS Regions need a database that supports fast cross-Region data replication, so that the application stays available in the unlikely event of a Region outage. Setting up multi-Region disaster recovery is complex and requires careful design and implementation to avoid data loss. Amazon Aurora Global Database simplifies this setup. In this post, we show how to use Aurora Global Database to build a resilient multi-Region application, and we share best practices for this setup.

Disaster recovery strategy overview

Commercial and government customers deploy their applications across data centers for various reasons. Disaster recovery (DR) is a common requirement; to learn about different DR strategies, refer to Disaster recovery options in the cloud. In this post, we discuss the warm standby strategy with Aurora Global Database. With this option, you can run your read/write workloads in your primary Region and have the option of running read workloads in a secondary Region.

For mission-critical applications with near-zero downtime requirements, the ability to tolerate a Region-wide outage is important. Aurora Global Database is designed for globally distributed applications, allowing a single Amazon Aurora database to span multiple Regions. It uses storage-based replication with typical latency of less than 1 second, and you can promote one of the secondary Regions to primary, typically within a minute, to handle read and write workloads.

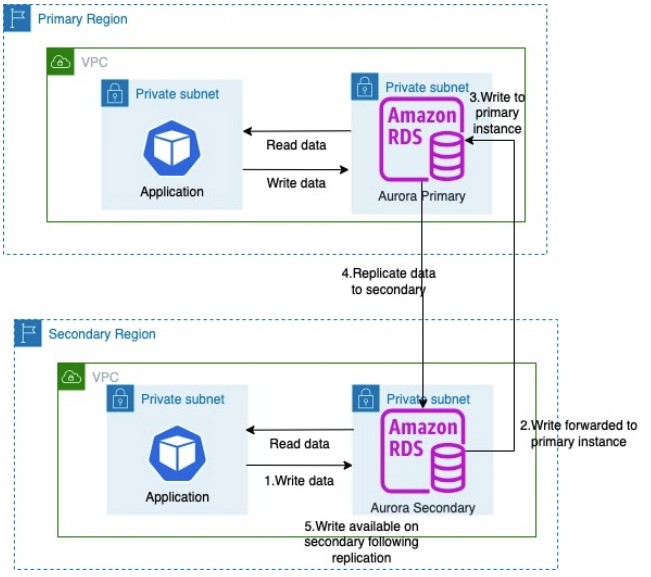

Aurora Global Database supports a global write strategy. With this feature, you can enable a secondary Region database to accept writes. Writes from the secondary Region are forwarded to the primary Region. The following diagram illustrates the architecture for a global write strategy.

The workflow includes the following steps:

An application writes the data to the Aurora secondary cluster.

The secondary cluster forwards the data to the Aurora primary cluster using write forwarding.

The primary cluster stores the data in a database.

The primary cluster replicates updates to the secondary cluster.

Data updates are now available in the secondary cluster.
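The workflow above can be pictured with a toy in-memory model. This is only a sketch to make the direction of data flow concrete: the class and method names are hypothetical, replication here is immediate rather than asynchronous, and nothing in it reflects how Aurora implements write forwarding internally.

```python
"""Toy in-memory model of the write-forwarding flow (not Aurora's implementation)."""


class PrimaryCluster:
    def __init__(self):
        self.rows = {}          # authoritative copy of the data
        self.secondaries = []   # clusters that receive replicated changes

    def write(self, key, value):
        # The primary stores the change first...
        self.rows[key] = value
        # ...and then replicates it to every secondary cluster.
        for secondary in self.secondaries:
            secondary.apply_replicated(key, value)


class SecondaryCluster:
    def __init__(self, primary):
        self.rows = {}
        self.primary = primary
        primary.secondaries.append(self)

    def write(self, key, value):
        # The application writes to the secondary, which forwards the
        # write to the primary instead of applying it locally.
        self.primary.write(key, value)

    def apply_replicated(self, key, value):
        # Replicated updates become readable on the secondary.
        self.rows[key] = value

    def read(self, key):
        return self.rows.get(key)


primary = PrimaryCluster()
secondary = SecondaryCluster(primary)
secondary.write("review-1", "Great book")
print(primary.rows["review-1"], "|", secondary.read("review-1"))
```

In the model, the secondary never changes its own data directly; it only ever sees a row after the primary has accepted it, which is why the primary remains the single source of truth.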

Aurora Global Database allows a single Aurora database to span multiple Regions, providing disaster recovery from Region-wide outages and enabling fast local reads for globally distributed applications. With write forwarding, your applications can send read and write requests to a reader in a secondary Region, and Aurora Global Database takes care of forwarding the write requests to the writer in the primary Region. You can also choose the consistency level that meets your application's needs. Aurora Global Database removes the need for applications to maintain separate database endpoints for reads and writes: an application running in a Region can send both its reads and its writes to the database in that same Region.

With a global database, you can scale database reads and minimize latency by reading data closer to users for globally distributed applications. This model lays the foundation for a global business continuity plan with a Recovery Point Objective (RPO) of typically 1 second and a Recovery Time Objective (RTO) of typically 1 minute.

Solution overview

In this post, we use a book reviews processing application to demonstrate the global write strategy in a cross-Region setup with Aurora Global Database. In each Region, the application processes the book review data stored in Amazon Simple Storage Service (Amazon S3) in that Region and writes it to the database. The application uses serverless services such as Amazon S3, AWS Lambda, and AWS Step Functions. All the components of this application are deployed in two Regions (us-east-1 and us-east-2). The book reviews application consists of the following components:

Book reviews state machine – The Step Functions state machine helps orchestrate the book review processing, invoking Lambda functions to read the book review files in Amazon S3 in chunks, and process and persist the reviews in the database.

Book reviews preprocessing – This Lambda function retrieves the book review files available for processing from the S3 bucket in that Region.

Book reviews database processing – This Lambda function processes the JSON file with the book reviews and persists them in an Aurora database. Database writes are sent to the Aurora database cluster in that Region.

Book reviews postprocessing – This Lambda function moves the processed file from the book-review/reviews prefix to book-review/completed to mark the file processing as complete.
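To illustrate the "read in chunks, then persist" idea behind the database processing component, here is a minimal sketch. It assumes a review file is a JSON array of objects; that layout, the field names in the sample, and the function names are all assumptions for illustration and are not taken from the repository.

```python
import json
from itertools import islice


def iter_chunks(items, size):
    """Yield successive lists of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    iterator = iter(items)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def load_reviews(raw_json):
    """Parse a review file; assumes the file holds a JSON array of objects."""
    reviews = json.loads(raw_json)
    if not isinstance(reviews, list):
        raise ValueError("expected a JSON array of reviews")
    return reviews


# Hypothetical sample file with five reviews, processed two at a time.
sample = json.dumps([{"review_id": i, "rating": 5} for i in range(5)])
for batch in iter_chunks(load_reviews(sample), 2):
    print("would persist", len(batch), "reviews")
```

Processing in bounded batches keeps each Lambda invocation's memory use and database transaction size predictable, regardless of how large an individual file is.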

The following diagram illustrates the solution architecture.

Refer to the Prerequisites section in the GitHub repo for the software and tools needed to implement the solution. Note that this solution incurs costs, so make sure to clean up the resources when you are done with it.

Create an Aurora global database

Aurora is a fully managed relational database service that is compatible with MySQL and PostgreSQL. In this post, we use the Amazon Aurora MySQL-Compatible Edition database engine. However, the solution works for Amazon Aurora PostgreSQL-Compatible Edition as well. We use us-east-1 and us-east-2 as our Regions. You can choose any other Region that is supported by Aurora Global Database. For more information about creating a global database, see Getting started with Amazon Aurora global databases.

Configure resources in the primary Region

In the primary Region, you set up an Amazon Elastic Compute Cloud (Amazon EC2) security group and the primary Aurora database. Complete the following steps:

On the Amazon EC2 console, create a security group for the primary Aurora database with an inbound rule that allows MySQL/Aurora (TCP) traffic on port 3306 from the VPC CIDR range.

On the Amazon RDS console, create a new Aurora database.

Select Standard create.

For Engine type, select Aurora (MySQL Compatible).

Choose the latest available Aurora MySQL engine version.

For DB cluster identifier, enter a name for your cluster.

For Credentials management, select Self managed.

Select Auto generate password.

Make a note of this password; you use it later to set up the database and tables, and to configure AWS Secrets Manager to securely store the password.

Under Cluster storage configuration, select Aurora Standard.

Choose the db.r5.large instance type or another instance type of your choice.

Use the default options in the remaining sections.

In the Connectivity section, choose the security group you created in the first step.

Choose Create database.

This step might take up to 10 minutes to complete; move to the next step after the database is created in the primary Region.
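If you prefer to script the security group rule from the first step instead of using the console, the inbound rule could be expressed roughly as follows. This sketch only builds the request parameters for the EC2 `authorize_security_group_ingress` API; the group ID and CIDR range shown are placeholders, and the function name is hypothetical.

```python
import ipaddress


def mysql_ingress_rule(group_id, vpc_cidr):
    """Build keyword arguments for EC2 authorize_security_group_ingress."""
    # Validate the CIDR early; raises ValueError on malformed input.
    network = ipaddress.ip_network(vpc_cidr, strict=True)
    return {
        "GroupId": group_id,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": 3306,
                "ToPort": 3306,
                "IpRanges": [
                    {
                        "CidrIp": str(network),
                        "Description": "MySQL/Aurora from VPC",
                    }
                ],
            }
        ],
    }


# Placeholder values; substitute your own security group ID and VPC CIDR.
rule = mysql_ingress_rule("sg-0123456789abcdef0", "10.0.0.0/16")
print(rule["IpPermissions"][0]["IpRanges"][0]["CidrIp"])

# With boto3 installed and credentials configured, the call would be:
#   boto3.client("ec2", region_name="us-east-1").authorize_security_group_ingress(**rule)
```

The same helper can be reused for the secondary Region by passing that Region's security group ID and VPC CIDR.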

Configure resources in the secondary Region

In the secondary Region, you create a security group for the secondary Aurora database and configure a secret in Secrets Manager. Complete the following steps:

On the Amazon EC2 console, switch to your secondary Region and create a security group for the secondary Aurora database with an inbound rule that allows MySQL/Aurora (TCP) traffic on port 3306 from the VPC CIDR range in that Region.

Switch to the primary Region and open the Amazon RDS console.

Choose Databases in the navigation pane.

Select the Regional cluster you created earlier and on the Actions menu, choose Add AWS Region.

Under AWS Region, select the secondary Region where you want to create the Aurora cluster.

In the Instance configuration section, select the same instance type as the primary.

Under Connectivity, for Existing VPC security groups, select the security group you created in the secondary Region.

Under Read replica write forwarding, select Turn on global write forwarding.

Choose Add Region to create the secondary database.

For more information, refer to Getting started with Amazon Aurora global databases.
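For readers who automate this instead of using the console, the following sketch shows roughly which request parameters are involved. It builds keyword arguments for the RDS `create_global_cluster` and `create_db_cluster` API operations, with `EnableGlobalWriteForwarding` set on the secondary cluster. All identifiers are placeholders and the helper names are hypothetical. A real deployment would likely need further parameters (for example engine version, subnet group, and encryption key), plus a DB instance in the secondary cluster.

```python
def global_cluster_request(global_id, primary_cluster_arn):
    """Keyword arguments for RDS create_global_cluster (primary Region)."""
    return {
        "GlobalClusterIdentifier": global_id,
        "SourceDBClusterIdentifier": primary_cluster_arn,
    }


def secondary_cluster_request(global_id, cluster_id, security_group_ids):
    """Keyword arguments for RDS create_db_cluster (secondary Region)."""
    return {
        "DBClusterIdentifier": cluster_id,
        "Engine": "aurora-mysql",
        "GlobalClusterIdentifier": global_id,
        # Lets the secondary cluster forward writes to the primary.
        "EnableGlobalWriteForwarding": True,
        "VpcSecurityGroupIds": list(security_group_ids),
    }


# Placeholder identifiers for illustration only.
request = secondary_cluster_request(
    "book-reviews-global", "book-reviews-secondary", ["sg-0123456789abcdef0"]
)
print(sorted(request))

# With boto3 installed and credentials configured, the call would be:
#   boto3.client("rds", region_name="us-east-2").create_db_cluster(**request)
```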

Create database secrets in Secrets Manager

Complete the following steps to create a Secrets Manager secret:

In the primary Region, open the Secrets Manager console and choose Store a new secret to create a secret.

Select Credentials for Amazon RDS database.

Enter the user name as admin, and enter a password.

Choose the database you created.

Choose Next.

Enter a secret name (for example, aurora/global-db-blog-secret).

Choose Next to complete the configuration.

Repeat the steps in the secondary Region.
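When you select Credentials for Amazon RDS database, Secrets Manager stores the credentials as a JSON document. The sketch below builds a document of that general shape so you can see what an application would parse at runtime. Which fields the repository's Lambda functions actually read is not stated in this post, so treat the field selection, the placeholder values, and the function name as assumptions.

```python
import json


def build_db_secret(username, password, host, port=3306, dbname=None):
    """Return a JSON string shaped like an RDS database credentials secret."""
    secret = {
        "engine": "mysql",
        "username": username,
        "password": password,
        "host": host,
        "port": port,
    }
    if dbname:
        secret["dbname"] = dbname
    return json.dumps(secret)


# Placeholder values only; never hard-code real credentials.
secret_string = build_db_secret(
    "admin", "example-password", "primary-cluster.example.internal"
)
parsed = json.loads(secret_string)
print(parsed["username"], parsed["host"], parsed["port"])
```

Because each Region has its own secret, the `host` value in the secondary Region's secret would point at the secondary cluster's endpoint, which is what lets the application in that Region connect locally.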

Create a database schema and reviews table

Connect to the primary Aurora database from AWS Cloud9 or an EC2 bastion host. Because the database isn’t public, you must access it from within the virtual private cloud (VPC). For instructions on setting up an instance, refer to Creating and connecting to a MySQL DB instance. In the following code, replace <endpoint> with the primary database endpoint. You can find the endpoint details on the Amazon RDS console in the Connectivity & security section. When you’re connected, enter the following commands to create a reviews database and table:

Deploy data processing microservices

You can clone the GitHub repo and deploy the application either from your local system with access to AWS or from AWS Cloud9. Follow the deployment instructions to complete the deployment.

Provide your primary and secondary Regions and VPC IDs from those Regions in the following code:

Navigate to the layer directory and run the following command to build the pymysql layer required for the Lambda function:

Run the following AWS Cloud Development Kit (AWS CDK) commands to deploy the application using your preferred primary Region:

The preceding commands may take up to 10 minutes to complete.

After the application deployment is complete, proceed with the deployment in the secondary Region:

The following AWS resources will be created in the primary and secondary Regions:

S3 bucket (name starting with bookreviewstack-bookreviews)

Three Lambda functions

Step Functions state machine

AWS Identity and Access Management (IAM) roles and policies

VPC endpoints

Test the solution

To test the solution, you use the application to process JSON files and process concurrent writes to the database from both Regions.

Upload test data to the S3 bucket

Run the script test_data_setup.sh to set up the test data in the S3 buckets. Make sure to update the script to replace <region1-s3bucket> and <region2-s3bucket> with the S3 bucket names from the primary and secondary Regions.

Launch the Step Functions job

Sign in to the Step Functions console in us-east-1 and run the job book-reviews-statemachine. Switch to the secondary Region and run the Step Functions job. This starts processing the book reviews in both Regions.

The workflow consists of the following steps:

bookreviews-preprocess-lambda lists the files in the book-reviews/reviews prefix in the S3 bucket in that Region.

bookreviews-dbprocessing-lambda processes the file, reads all the book review entries in the file, and writes the reviews in the database. This step iterates until all the files are processed.

bookreviews-postprocess-lambda moves the processed file from the book-review/reviews prefix to book-review/completed to mark the file as complete and avoid duplicate processing.

bookreviews-has-more-files? looks for files in the book-reviews/reviews prefix. If it finds any files, the process repeats from the first step.
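The loop described in these steps can be simulated locally with a dictionary standing in for the S3 bucket. This is a sketch of the control flow only, not the state machine definition from the repository. The function name is hypothetical, the prefixes are parameters with placeholder defaults, and the database write is replaced by appending the key to a list.

```python
def run_workflow(bucket, incoming="book-review/reviews/",
                 done="book-review/completed/"):
    """Simulate the processing loop; `bucket` maps object keys to file bodies."""
    processed = []
    while True:
        # Preprocess: list the files still waiting under the incoming prefix.
        pending = sorted(key for key in bucket if key.startswith(incoming))
        if not pending:
            # "Has more files?" check: nothing left, so the workflow ends.
            return processed
        for key in pending:
            # Stand-in for the database processing step.
            processed.append(key)
            # Postprocess: move the file to the completed prefix so that
            # a later pass does not pick it up again.
            bucket[done + key[len(incoming):]] = bucket.pop(key)


bucket = {
    "book-review/reviews/a.json": "[]",
    "book-review/reviews/b.json": "[]",
}
print(run_workflow(bucket))
print(sorted(bucket))
```

Moving each file out of the incoming prefix is what makes the loop terminate and what keeps a rerun of the job from inserting the same reviews twice.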

The following screenshot illustrates the state machine workflow.

When the job is complete, the workflow looks like the following screenshot.

Monitoring

You can set up Amazon CloudWatch metrics and dashboards to monitor the Aurora replication across Regions. For instructions, refer to Monitor Amazon Aurora Global Database replication at scale using Amazon CloudWatch Metrics Insights. After you set up the dashboard, you can monitor Aurora global replication lag.

During our test, the Step Functions jobs in both Regions completed in under 1 minute and 14 seconds and processed over 600,000 records. We observed an Aurora global replication lag of under 200 milliseconds during this test. The following screenshot illustrates these metrics.
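As a back-of-the-envelope reading of these figures, the sketch below converts the record count and run time into a throughput estimate and checks a set of lag samples against a target. The post does not say whether the 600,000 records are a combined or a per-Region total, so the result is only a rough lower bound. The lag samples are made-up values for illustration, and the 1,000 ms target is taken from the typical RPO of 1 second mentioned earlier.

```python
def records_per_second(records, minutes, seconds):
    """Average throughput over a run of the given duration."""
    elapsed = minutes * 60 + seconds
    if elapsed <= 0:
        raise ValueError("duration must be positive")
    return records / elapsed


def lag_within_target(lag_samples_ms, target_ms):
    """True when the worst observed replication lag meets the target."""
    if not lag_samples_ms:
        raise ValueError("at least one lag sample is required")
    return max(lag_samples_ms) <= target_ms


throughput = records_per_second(600_000, 1, 14)
print(f"about {throughput:,.0f} records per second")

# Hypothetical lag samples in milliseconds, all under the observed 200 ms.
print(lag_within_target([120, 180, 95], target_ms=1000))
```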

Recommendations

The write forwarding feature avoids write conflicts, because the writes are forwarded from the secondary to the primary Region and the updates are replicated back to the replicas depending on the consistency level you set for write forwarding. Use the REPEATABLE READ isolation level with the write forwarding feature.

Applications should set the aurora_replica_read_consistency parameter (for example, by running SET aurora_replica_read_consistency = 'session') to enable writes through the secondary cluster.
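A minimal sketch of applying this setting from application code follows. It works with any DB-API style cursor (such as one from pymysql) connected to the secondary cluster. The function names are hypothetical, and the set of accepted levels (eventual, session, global) reflects the values Aurora MySQL documents for this parameter.

```python
VALID_LEVELS = {"eventual", "session", "global"}


def consistency_statement(level="session"):
    """Build the SET statement for the write-forwarding read consistency."""
    normalized = level.lower()
    if normalized not in VALID_LEVELS:
        raise ValueError(f"unsupported consistency level: {level!r}")
    return f"SET aurora_replica_read_consistency = '{normalized}'"


def prepare_session(cursor, level="session"):
    """Run the statement on an open cursor before issuing any writes."""
    cursor.execute(consistency_statement(level))


print(consistency_statement("session"))
```

The level is validated against a fixed set before it is placed in the statement, so the string formatting cannot be used to inject arbitrary SQL. No statement is issued for the isolation level here because REPEATABLE READ is the InnoDB default; if your application changes the isolation level elsewhere, it would need to restore it for forwarded writes.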

When building an application that uses a global write strategy, avoid duplicate processing in both Regions by using Region-specific keys to isolate the processing within a Region for better performance and consistency. The Region key could be something as simple as a region_indicator column that captures the Region in which the data is inserted.
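One way to picture the region_indicator idea is the following sketch, which tags each row with the Region that inserted it. Only the region_indicator column name comes from this post. The table name, the other column names, and the helper function are hypothetical, and the `%s` placeholders follow the parameter style used by pymysql.

```python
INSERT_SQL = (
    "INSERT INTO reviews (review_id, review_text, region_indicator) "
    "VALUES (%s, %s, %s)"
)


def review_row(review, region):
    """Build the parameter tuple for INSERT_SQL, tagged with the Region."""
    return (review["review_id"], review["review_text"], region)


# Hypothetical review record processed in the secondary Region.
row = review_row({"review_id": 42, "review_text": "Great book"}, "us-east-2")
print(row)

# With an open pymysql cursor, the insert would be:
#   cursor.execute(INSERT_SQL, row)
```

A downstream job can then filter on region_indicator to process only the rows written in its own Region, or ignore the column to see data from both.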

You don’t have to process everything in both Regions. For example, daily batch jobs reading data from the database should have access to data processed in both Regions. In this case, you can schedule a daily batch job to run from one Region instead of both Regions. You can plan to run the job in other Regions in disaster recovery scenarios.

As described in the earlier sections, a global write strategy forwards all the writes to the primary Region database. Review the data latency and performance requirements of your application before using this feature. We don’t recommend using this feature for active-active application processing across Regions.

For further reading, refer to the Amazon Aurora High Availability and Disaster Recovery Features for Global Resiliency whitepaper.

Clean up

When you’re finished experimenting with this solution, clean up your resources in both Regions by running cdk destroy to remove all resources created from the AWS CDK, and delete the Aurora clusters, secrets from Secrets Manager, and security groups. This helps you avoid continuing costs in your account.

Conclusion

You can use Aurora Global Database to improve application resiliency and disaster recovery SLAs to meet your RPO and RTO requirements with high confidence. In this post, we demonstrated how to use Aurora Global Database to build a resilient multi-Region application and use a global write strategy with write forwarding.

The write forwarding configuration saves you from implementing your own mechanism to send write operations from a secondary Region to the primary Region. Aurora handles the cross-Region networking setup. Aurora also transmits all necessary session and transactional context for each statement. The data is always changed first on the primary cluster and then replicated to the secondary clusters in the Aurora global database. This way, the primary cluster is the source of truth and always has an up-to-date copy of all your data.

For more information about Aurora, visit Amazon Aurora resources to find video resources and blog posts, and refer to Amazon Aurora FAQs. Share your thoughts with us in the comments section, or in the issues section of the project’s GitHub repository.

About the Authors

Sathya Balakrishnan is a Senior Cloud Consultant with the Professional Services team at AWS. He works with US federal financial clients, specializing in data and AI/ML solutions. He is passionate about building pragmatic solutions to solve customers’ business problems. In his spare time, he enjoys watching movies and hiking with his family.

Rajesh Vachepally is a Senior Cloud Consultant with the Professional Services team at AWS. He works with customers and partners in their journey to the AWS Cloud with a focus on application migration and modernization programs.
