Media and entertainment companies serve multilingual audiences with a wide range of content catering to diverse audience segments. These enterprises have access to massive amounts of data collected over their many years of operations. Much of this data is unstructured text and images. Conventional approaches to analyzing unstructured data for generating new content rely on the use of keyword or synonym matching. These approaches don’t capture the full semantic context of a document, making them less effective for users’ search, content creation, and several other downstream tasks.

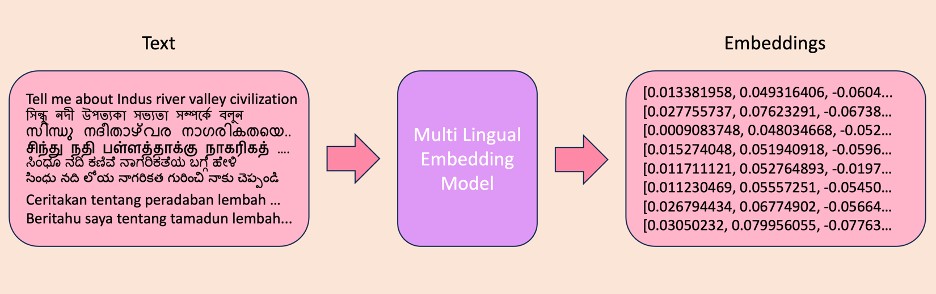

Text embeddings use machine learning (ML) capabilities to capture the essence of unstructured data. These embeddings are generated by language models that map natural language text into their numerical representations and, in the process, encode contextual information in the natural language document. Generating text embeddings is the first step to many natural language processing (NLP) applications powered by large language models (LLMs) such as Retrieval Augmented Generation (RAG), text generation, entity extraction, and several other downstream business processes.

Converting text to embeddings using the Cohere multilingual embedding model

Despite the rising popularity and capabilities of LLMs, the language most often used to converse with the LLM, often through a chat-like interface, is English. And although progress has been made in adapting open source models to comprehend and respond in Indian languages, such efforts fall short of the English language capabilities displayed among larger, state-of-the-art LLMs. This makes it difficult to adopt such models for RAG applications based on Indian languages.

In this post, we showcase a RAG application that can search and query across multiple Indian languages using the Cohere Embed – Multilingual model and Anthropic Claude 3 on Amazon Bedrock. This post focuses on Indian languages, but you can use the approach with other languages that are supported by the LLM.

Solution overview

We use the Flores dataset [1], a benchmark dataset for machine translation between English and low-resource languages. This also serves as a parallel corpus, which is a collection of texts that have been translated into one or more languages.

With the Flores dataset, we can demonstrate that the embeddings and, subsequently, the documents retrieved from the retriever, are relevant for the same question being asked in multiple languages. However, given the sparsity of the dataset (approximately 1,000 lines per language from more than 200 languages), the nature and number of questions that can be asked against the dataset is limited.

After you have downloaded the data, load it into a pandas DataFrame for processing. For this demo, we restrict ourselves to Bengali, Kannada, Malayalam, Tamil, Telugu, Hindi, Marathi, and English. If you want to adopt this approach for other languages, make sure the language is supported by both the embedding model and the LLM used in the RAG setup.

Load the data with the following code:

import pandas as pd

# Note: by default, read_csv uses the first line of each file as the header row.
df_ben = pd.read_csv('./data/Flores/dev/dev.ben_Beng', sep='\t')
df_kan = pd.read_csv('./data/Flores/dev/dev.kan_Knda', sep='\t')
df_mal = pd.read_csv('./data/Flores/dev/dev.mal_Mlym', sep='\t')
df_tam = pd.read_csv('./data/Flores/dev/dev.tam_Taml', sep='\t')
df_tel = pd.read_csv('./data/Flores/dev/dev.tel_Telu', sep='\t')
df_hin = pd.read_csv('./data/Flores/dev/dev.hin_Deva', sep='\t')
df_mar = pd.read_csv('./data/Flores/dev/dev.mar_Deva', sep='\t')
df_eng = pd.read_csv('./data/Flores/dev/dev.eng_Latn', sep='\t')

# Choose fewer/more languages if needed
df_all_Langs = pd.concat([df_ben, df_kan, df_mal, df_tam, df_tel, df_hin, df_mar, df_eng], axis=1)
df_all_Langs.columns = ['Bengali', 'Kannada', 'Malayalam', 'Tamil', 'Telugu', 'Hindi', 'Marathi', 'English']
df_all_Langs.shape  # (996, 8)

df = df_all_Langs
stacked_df = df.stack().reset_index()  # for ease of handling

# select only the required columns, rename them
stacked_df = stacked_df.iloc[:, [1, 2]]
stacked_df.columns = ['language', 'text']
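To make the reshaping step concrete, the following is a minimal pure-Python sketch of what stacking a wide table produces when no values are missing: one (language, text) pair per cell, emitted row by row. The toy corpus and the helper name `stack_rows` are illustrative assumptions and are not part of the original notebook.

```python
# Toy parallel corpus: one list per language, aligned by position.
# The strings are made-up placeholders, not Flores data.
parallel = {
    "Hindi": ["hi-0", "hi-1"],
    "Tamil": ["ta-0", "ta-1"],
    "English": ["en-0", "en-1"],
}


def stack_rows(columns):
    """Flatten aligned columns into (language, text) pairs, row by row."""
    languages = list(columns)
    n_rows = len(next(iter(columns.values())))
    stacked = []
    for row in range(n_rows):
        for lang in languages:
            stacked.append((lang, columns[lang][row]))
    return stacked


stacked = stack_rows(parallel)
for position, (lang, text) in enumerate(stacked):
    print(position, lang, text)
```

Because the rows interleave the languages, translations of the same sentence sit next to each other in the stacked list, and position `i` belongs to sentence `i // number_of_languages`.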

The Cohere multilingual embedding model

Cohere is a leading enterprise artificial intelligence (AI) platform that builds world-class LLMs and LLM-powered solutions that allow computers to search, capture meaning, and converse in text. They provide ease of use and strong security and privacy controls.

The Cohere Embed – Multilingual model generates vector representations of documents for over 100 languages and is available on Amazon Bedrock. With Amazon Bedrock, you can access the embedding model through an API call, which eliminates the need to manage the underlying infrastructure and makes sure sensitive information remains securely managed and protected.

The multilingual embedding model groups text with similar meanings by assigning them positions in the semantic vector space that are close to each other. Developers can process text in multiple languages without switching between different models. This makes processing more efficient and improves performance for multilingual applications.
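As a rough illustration of "close to each other in the semantic vector space", the sketch below ranks a few hand-made vectors by cosine similarity. The three-dimensional vectors and their labels are invented stand-ins for real model output, so only the mechanics carry over, not the numbers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made 3-dimensional stand-ins for real embeddings (assumed values).
toy_embeddings = {
    "river civilization (English)": [0.9, 0.1, 0.0],
    "river civilization (Hindi)": [0.8, 0.2, 0.1],
    "cricket score (English)": [0.0, 0.2, 0.9],
}

query = toy_embeddings["river civilization (English)"]
ranked = sorted(
    toy_embeddings,
    key=lambda name: cosine_similarity(query, toy_embeddings[name]),
    reverse=True,
)
print(ranked)
```

In this toy setup the Hindi sentence about the same topic ranks above the unrelated English sentence, which is the behavior a multilingual embedding model aims for.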

Text embeddings turn unstructured data into a structured form, which lets you objectively compare, dissect, and derive insights from your documents. Cohere’s newer embedding models have a required input parameter, input_type, which must be set on every API call. It takes one of the following four values, which correspond to the most frequent use cases for text embeddings:

input_type="search_document" – Use this for texts (documents) you want to store in your vector database

input_type="search_query" – Use this for search queries to find the most relevant documents in your vector database

input_type="classification" – Use this if you use the embeddings as input for a classification system

input_type="clustering" – Use this if you use the embeddings for text clustering

Using these input types provides the highest possible quality for the respective tasks. If you want to use the embeddings for multiple use cases, we recommend using input_type="search_document".
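The following is a small sketch of how a request body could be assembled so that an invalid input_type is caught before any API call is made. The helper name `build_embed_body` is a hypothetical convenience, not part of any SDK; the body keys `texts` and `input_type` are the ones used in the calls later in this post.

```python
import json

# The four input_type values listed above.
VALID_INPUT_TYPES = {"search_document", "search_query", "classification", "clustering"}


def build_embed_body(texts, input_type):
    """Return the JSON request body for an embedding call (hypothetical helper)."""
    if input_type not in VALID_INPUT_TYPES:
        raise ValueError(f"Unsupported input_type: {input_type!r}")
    return json.dumps({"texts": list(texts), "input_type": input_type})


# Documents are embedded once for storage; queries are embedded at search time.
doc_body = build_embed_body(["first document", "second document"], "search_document")
query_body = build_embed_body(["what is in the documents?"], "search_query")
print(doc_body)
print(query_body)
```

Keeping the document and query bodies separate makes it harder to accidentally embed a query with the document setting, or the other way around.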

Prerequisites

To use the Claude 3 Sonnet LLM and the Cohere multilingual embeddings model on this dataset, make sure you have access to both models in your AWS account (in Amazon Bedrock, under the Model Access section), and then install the following packages. The following code has been tested with the Amazon SageMaker Data Science 3.0 image, backed by an ml.t3.medium instance.

! apt-get update

! apt-get install build-essential -y # for the hnswlib package below

! pip install hnswlib

Create a search index

With all of the prerequisites in place, you can now convert the multilingual corpus into embeddings and store them in hnswlib, a header-only C++ implementation of Hierarchical Navigable Small World (HNSW) graphs with Python bindings and support for insertions and updates. hnswlib is an in-memory vector store that can be saved to a file, which should be sufficient for the small dataset we are working with. Use the following code:

import hnswlib
import os
import json
import botocore
import boto3

boto3_bedrock = boto3.client('bedrock')
bedrock_runtime = boto3.client('bedrock-runtime')

# Create a search index
index = hnswlib.Index(space='ip', dim=1024)
index.init_index(max_elements=10000, ef_construction=512, M=64)

all_text = stacked_df['text'].to_list()
all_text_lang = stacked_df['language'].to_list()
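For intuition about what the index is asked to return, here is a minimal exact (brute-force) nearest-neighbor search by inner product in pure Python. HNSW approximates this kind of ranking without scanning every vector. The function name `top_k_inner_product` and the toy vectors are assumptions for illustration, and this is not how hnswlib is implemented internally.

```python
def top_k_inner_product(query, vectors, k):
    """Return the indices of the k vectors with the largest inner product with query."""
    scores = [
        (sum(q * v for q, v in zip(query, vec)), idx)
        for idx, vec in enumerate(vectors)
    ]
    # Highest score first; ties are broken by the lower index for determinism.
    scores.sort(key=lambda pair: (-pair[0], pair[1]))
    return [idx for _, idx in scores[:k]]


# Toy 2-dimensional vectors; the real embeddings in this post have 1,024 dimensions.
vectors = [
    [1.0, 0.0],
    [0.7, 0.7],
    [0.0, 1.0],
    [-1.0, 0.0],
]
nearest = top_k_inner_product([1.0, 0.1], vectors, k=2)
print(nearest)
```

An exact scan like this costs time proportional to the corpus size per query, which is the cost an approximate index is designed to avoid on larger datasets.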

Embed and index documents

To embed and store the small multilingual dataset, use the Cohere embed-multilingual-v3.0 model, which creates embeddings with 1,024 dimensions, using the Amazon Bedrock runtime API:

modelId = "cohere.embed-multilingual-v3"
contentType = "application/json"
accept = "*/*"

df_chunk_size = 80
chunk_embeddings = []

for i in range(0, len(all_text), df_chunk_size):
    chunk = all_text[i:i+df_chunk_size]
    body = json.dumps(
        {"texts": chunk, "input_type": "search_document"}  # search documents
    )
    response = bedrock_runtime.invoke_model(body=body,
                                            modelId=modelId,
                                            accept=accept,
                                            contentType=contentType)
    response_body = json.loads(response.get('body').read())
    index.add_items(response_body['embeddings'])
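The batching logic in the loop above can be isolated into a small generator, sketched below. The name `iter_batches` is hypothetical, and the list of placeholder strings stands in for `all_text` (996 rows × 8 languages = 7,968 texts).

```python
def iter_batches(items, batch_size):
    """Yield consecutive slices of items, each with at most batch_size elements."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Placeholder stand-in for all_text.
texts = [f"sentence {i}" for i in range(7968)]
batches = list(iter_batches(texts, 80))
print(len(batches), len(batches[0]), len(batches[-1]))
```

The batches must be embedded and added in their original order, because the retrieval code that follows looks up `all_text` by the ids returned from the index.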

Verify that the embeddings work

To test the solution, write a function that takes a query as input, embeds it, and finds the top N documents most closely related to it:

# Retrieval of closest N docs to query
def retrieval(query, num_docs_to_return=10):
    modelId = "cohere.embed-multilingual-v3"
    contentType = "application/json"
    accept = "*/*"
    body = json.dumps(
        {"texts": [query], "input_type": "search_query"}  # search query
    )
    response = bedrock_runtime.invoke_model(body=body,
                                            modelId=modelId,
                                            accept=accept,
                                            contentType=contentType)
    response_body = json.loads(response.get('body').read())
    doc_ids = index.knn_query(response_body['embeddings'],
                              k=num_docs_to_return)[0][0]
    print(f"Query: {query} \n")
    retrieved_docs = []
    for doc_id in doc_ids:
        # Append results
        retrieved_docs.append(all_text[doc_id])  # original vernacular language docs
        # Print results
        print(f"Original Flores Text {all_text[doc_id]}")
        print("-"*30)
    print("END OF RESULTS \n\n")
    return retrieved_docs
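The retrieval function prints only the text of each hit, and the `all_text_lang` list built earlier is not used. As a hedged sketch, a helper along the following lines could show which language each retrieved document is in. The name `label_results` and the toy lists are assumptions for illustration.

```python
def label_results(doc_ids, texts, languages):
    """Pair each retrieved document with its language label (hypothetical helper)."""
    return [(languages[i], texts[i]) for i in doc_ids]


# Toy stand-ins for all_text and all_text_lang, kept in the same order.
texts = ["doc in Hindi", "doc in Tamil", "doc in English"]
languages = ["Hindi", "Tamil", "English"]

for lang, text in label_results([2, 0], texts, languages):
    print(f"[{lang}] {text}")
```

This works only because the text and language lists are built from the same DataFrame and therefore share positions.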

You can explore what the RAG stack does with a couple of queries in different languages, such as Hindi:

queries = [
    "मुझे सिंधु नदी घाटी सभ्यता के बारे में बताइए",
]
# translation: tell me about Indus Valley Civilization

for query in queries:
    retrieval(query)

The index returns documents relevant to the search query from across languages:

Query: मुझे सिंधु नदी घाटी सभ्यता के बारे में बताइए

Original Flores Text सिंधु घाटी सभ्यता उत्तर-पश्चिम भारतीय उपमहाद्वीप में कांस्य युग की सभ्यता थी जिसमें आस-पास के आधुनिक पाकिस्तान और उत्तर पश्चिम भारत और उत्तर-पूर्व अफ़गानिस्तान के कुछ क्षेत्र शामिल थे.

------------------------------

Original Flores Text सिंधु नदी के घाटों में पनपी सभ्यता के कारण यह इसके नाम पर बनी है.

------------------------------

Original Flores Text यद्यपि कुछ विद्वानों का अनुमान है कि चूंकि सभ्यता अब सूख चुकी सरस्वती नदी के घाटियों में विद्यमान थी, इसलिए इसे सिंधु-सरस्वती सभ्यता कहा जाना चाहिए, जबकि 1920 के दशक में हड़प्पा की पहली खुदाई के बाद से कुछ इसे हड़प्पा सभ्यता कहते हैं।

------------------------------

Original Flores Text సింధు నది పరీవాహక ప్రాంతాల్లో నాగరికత విలసిల్లింది.

------------------------------

Original Flores Text सिंधू संस्कृती ही वायव्य भारतीय उपखंडातील कांस्य युग संस्कृती होती ज्यामध्ये आधुनिक काळातील पाकिस्तान, वायव्य भारत आणि ईशान्य अफगाणिस्तानातील काही प्रदेशांचा समावेश होता.

------------------------------

Original Flores Text সিন্ধু সভ্যতা হল উত্তর-পশ্চিম ভারতীয় উপমহাদেশের একটি তাম্রযুগের সভ্যতা যা আধুনিক-পাকিস্তানের অধিকাংশ ও উত্তর-পশ্চিম ভারত এবং উত্তর-পূর্ব আফগানিস্তানের কিছু অঞ্চলকে ঘিরে রয়েছে।

------------------------------

…..

You can now use these documents retrieved from the index as context while calling the Anthropic Claude 3 Sonnet model on Amazon Bedrock. In production settings with datasets that are several orders of magnitude larger than the Flores dataset, we can make the search results from the index even more relevant by using Cohere’s Rerank models.

Use the system prompt to outline how you want the LLM to process your query:

# Retrieval of docs relevant to the query
def context_retrieval(query, num_docs_to_return=10):
    modelId = "cohere.embed-multilingual-v3"
    contentType = "application/json"
    accept = "*/*"
    body = json.dumps(
        {"texts": [query], "input_type": "search_query"}  # search query
    )
    response = bedrock_runtime.invoke_model(body=body,
                                            modelId=modelId,
                                            accept=accept,
                                            contentType=contentType)
    response_body = json.loads(response.get('body').read())
    doc_ids = index.knn_query(response_body['embeddings'],
                              k=num_docs_to_return)[0][0]
    retrieved_docs = []
    for doc_id in doc_ids:
        retrieved_docs.append(all_text[doc_id])
    # Join the retrieved documents into a single context string
    return " ".join(retrieved_docs)

def query_rag_bedrock(query, model_id='anthropic.claude-3-sonnet-20240229-v1:0'):
    system_prompt = '''
You are a helpful empathetic multilingual assistant.
Identify the language of the user query, and respond to the user query in the same language.
For example
if the user query is in English your response will be in English,
if the user query is in Malayalam, your response will be in Malayalam,
if the user query is in Tamil, your response will be in Tamil
and so on…
if you cannot identify the language: Say you cannot identify the language
You will use only the data provided within the <context> </context> tags, that matches the user's query's language, to answer the user's query
If there is no data provided within the <context> </context> tags, Say that you do not have enough information to answer the question
Restrict your response to a paragraph of less than 400 words avoid bullet points
'''
    max_tokens = 1000
    messages = [{"role": "user", "content": f'''
query : {query}
<context>
{context_retrieval(query)}
</context>
'''}]
    body = json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "system": system_prompt,
            "messages": messages
        }
    )
    response = bedrock_runtime.invoke_model(body=body, modelId=model_id)
    response_body = json.loads(response.get('body').read())
    return response_body['content'][0]['text']
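To inspect what is actually sent to the model, the request assembly can be separated from the network call. The sketch below builds the same kind of body as a pure function. The helper name `build_claude_body`, the placeholder context, and the shortened system prompt are assumptions for illustration.

```python
import json


def build_claude_body(query, context, system_prompt, max_tokens=1000):
    """Assemble a Messages-style request body like the one above (sketch)."""
    user_content = f"query : {query}\n<context>\n{context}\n</context>"
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "system": system_prompt,
            "messages": [{"role": "user", "content": user_content}],
        },
        ensure_ascii=False,  # keeps non-Latin text readable when printing the body
    )


body = build_claude_body(
    query="tell me about the indus river valley civilization",
    context="(retrieved documents would go here)",
    system_prompt="Answer only from the text inside the <context> tags.",
)
print(body)
```

Separating the two steps also makes it possible to unit test prompt construction without AWS credentials.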

Let’s pass in the same query in multiple Indian languages:

queries = ["tell me about the indus river valley civilization",
           "मुझे सिंधु नदी घाटी सभ्यता के बारे में बताइए",
           "मला सिंधू नदीच्या संस्कृतीबद्दल सांगा",
           "సింధు నది నాగరికత గురించి చెప్పండి",
           "ಸಿಂಧೂ ನದಿ ಕಣಿವೆ ನಾಗರಿಕತೆಯ ಬಗ್ಗೆ ಹೇಳಿ",
           "সিন্ধু নদী উপত্যকা সভ্যতা সম্পর্কে বলুন",
           "சிந்து நதி பள்ளத்தாக்கு நாகரிகத்தைப் பற்றி சொல்",
           "സിന്ധു നദീതാഴ്വര നാഗരികതയെക്കുറിച്ച് പറയുക"]

for query in queries:
    print(query_rag_bedrock(query))
    print('_'*20)

The query is in English, so I will respond in English.

The Indus Valley Civilization, also known as the Harappan Civilization, was a Bronze Age civilization that flourished in the northwestern regions of the Indian subcontinent, primarily in the basins of the Indus River and its tributaries. It encompassed parts of modern-day Pakistan, northwest India, and northeast Afghanistan. While some scholars suggest calling it the Indus-Sarasvati Civilization due to its presence in the now-dried-up Sarasvati River basin, the name “Indus Valley Civilization” is derived from its development along the Indus River valley. This ancient civilization dates back to around 3300–1300 BCE and was one of the earliest urban civilizations in the world. It was known for its well-planned cities, advanced drainage systems, and a writing system that has not yet been deciphered.

____________________

सिंधु घाटी सभ्यता एक प्राचीन नगर सभ्यता थी जो उत्तर-पश्चिम भारतीय उपमहाद्वीप में फैली हुई थी। यह लगभग 3300 से 1300 ईसा पूर्व की अवधि तक विकसित रही। इस सभ्यता के केंद्र वर्तमान पाकिस्तान के सिंध और पंजाब प्रांतों में स्थित थे, लेकिन इसके अवशेष भारत के राजस्थान, गुजरात, मध्य प्रदेश, महाराष्ट्र और उत्तर प्रदेश में भी मिले हैं। सभ्यता का नाम सिंधु नदी से लिया गया है क्योंकि इसके प्रमुख स्थल इस नदी के किनारे स्थित थे। हालांकि, कुछ विद्वानों का अनुमान है कि सरस्वती नदी के किनारे भी इस सभ्यता के स्थल विद्यमान थे इसलिए इसे सिंधु-सरस्वती सभ्यता भी कहा जाता है। यह एक महत्वपूर्ण शहरी समाज था जिसमें विकसित योजना बनाने की क्षमता, नगरीय संरचना और स्वच्छ जलापूर्ति आदि प्रमुख विशेषताएं थीं।

____________________

सिंधू संस्कृती म्हणजे सिंधू नदीच्या पट्टीकेतील प्राचीन संस्कृती होती. ही संस्कृती सुमारे ई.पू. ३३०० ते ई.पू. १३०० या कालखंडात फुलणारी होती. ती भारतातील कांस्ययुगीन संस्कृतींपैकी एक मोठी होती. या संस्कृतीचे अवशेष आजच्या पाकिस्तान, भारत आणि अफगाणिस्तानमध्ये आढळून आले आहेत. या संस्कृतीत नगररचना, नागरी सोयी सुविधांचा विकास झाला होता. जलवाहिनी, नगरदेवालय इत्यादी अद्भुत बाबी या संस्कृतीत होत्या. सिंधू संस्कृतीत लिपीसुद्धा विकसित झाली होती परंतु ती अजूनही वाचण्यास आलेली नाही. सिंधू संस्कृती ही भारतातील पहिली शहरी संस्कृती मानली जाते.

____________________

సింధు నది నాగరికత గురించి చెప్పుతూ, ఈ నాగరికత సింధు నది పరిసర ప్రాంతాల్లో ఉన్నదని చెప్పవచ్చు. దీనిని సింధు-సరస్వతి నాగరికత అనీ, హరప్ప నాగరికత అనీ కూడా పిలుస్తారు. ఇది ఉత్తర-ఆర్య భారతదేశం, ఆధునిక పాకిస్తాన్, ఉత్తర-పశ్చిమ భారతదేశం మరియు ఉత్తర-ఆర్థిక అఫ్గానిస్తాన్ కు చెందిన తామ్రయుగపు నాగరికత. సరస్వతి నది పరీవాహక ప్రాంతాల్లోనూ నాగరికత ఉందని కొందరు పండితులు అభిప్రాయపడ్డారు. దీని మొదటి స్థలాన్ని 1920లలో హరప్పాలో త్రవ్వారు. ఈ నాగరికతలో ప్రశస్తమైన బస్తీలు, నగరాలు, మలిచ్చి రంగులతో నిర్మించిన భవనాలు, పట్టణ నిర్మాణాలు ఉన్నాయి.

____________________

ಸಿಂಧೂ ಕಣಿವೆ ನಾಗರಿಕತೆಯು ವಾಯುವ್ಯ ಭಾರತದ ಉಪಖಂಡದಲ್ಲಿ ಕಂಚಿನ ಯುಗದ ನಾಗರಿಕತೆಯಾಗಿದ್ದು, ಪ್ರಾಚೀನ ಭಾರತದ ಇತಿಹಾಸದಲ್ಲಿ ಮುಖ್ಯವಾದ ಪಾತ್ರವನ್ನು ವಹಿಸಿದೆ. ಈ ನಾಗರಿಕತೆಯು ಆಧುನಿಕ-ದಿನದ ಪಾಕಿಸ್ತಾನ ಮತ್ತು ವಾಯುವ್ಯ ಭಾರತದ ಭೂಪ್ರದೇಶಗಳನ್ನು ಹಾಗೂ ಈಶಾನ್ಯ ಅಫ್ಘಾನಿಸ್ತಾನದ ಕೆಲವು ಪ್ರದೇಶಗಳನ್ನು ಒಳಗೊಂಡಿರುವುದರಿಂದ ಅದಕ್ಕೆ ಸಿಂಧೂ ನಾಗರಿಕತೆ ಎಂದು ಹೆಸರಿಸಲಾಗಿದೆ. ಸಿಂಧೂ ನದಿಯ ಪ್ರದೇಶಗಳಲ್ಲಿ ಈ ನಾಗರಿಕತೆಯು ವಿಕಸಿತಗೊಂಡಿದ್ದರಿಂದ ಅದಕ್ಕೆ ಸಿಂಧೂ ನಾಗರಿಕತೆ ಎಂದು ಹೆಸರಿಸಲಾಗಿದೆ. ಈಗ ಬತ್ತಿ ಹೋದ ಸರಸ್ವತಿ ನದಿಯ ಪ್ರದೇಶಗಳಲ್ಲಿ ಸಹ ನಾಗರೀಕತೆಯ ಅಸ್ತಿತ್ವವಿದ್ದಿರಬಹುದೆಂದು ಕೆಲವು ಪ್ರಾಜ್ಞರು ಶಂಕಿಸುತ್ತಾರೆ. ಆದ್ದರಿಂದ ಈ ನಾಗರಿಕತೆಯನ್ನು ಸಿಂಧೂ-ಸರಸ್ವತಿ ನಾಗರಿಕತೆ ಎಂದು ಸೂಕ್ತವಾಗಿ ಕರೆ

____________________

সিন্ধু নদী উপত্যকা সভ্যতা ছিল একটি প্রাচীন তাম্রযুগীয় সভ্যতা যা বর্তমান পাকিস্তান এবং উত্তর-পশ্চিম ভারত ও উত্তর-পূর্ব আফগানিস্তানের কিছু অঞ্চলকে নিয়ে গঠিত ছিল। এই সভ্যতার নাম সিন্ধু নদীর অববাহিকা অঞ্চলে এটির বিকাশের কারণে এরকম দেওয়া হয়েছে। কিছু পণ্ডিত মনে করেন যে সরস্বতী নদীর ভূমি-প্রদেশেও এই সভ্যতা বিদ্যমান ছিল, তাই এটিকে সিন্ধু-সরস্বতী সভ্যতা বলা উচিত। আবার কেউ কেউ এই সভ্যতাকে হরপ্পা পরবর্তী হরপ্পান সভ্যতা নামেও অবিহিত করেন। যাই হোক, সিন্ধু সভ্যতা ছিল প্রাচীন তাম্রযুগের এক উল্লেখযোগ্য সভ্যতা যা সিন্ধু নদী উপত্যকার এলাকায় বিকশিত হয়েছিল।

____________________

சிந்து நதிப் பள்ளத்தாக்கில் தோன்றிய நாகரிகம் சிந்து நாகரிகம் என்றழைக்கப்படுகிறது. சிந்து நதியின் படுகைகளில் இந்த நாகரிகம் மலர்ந்ததால் இப்பெயர் வழங்கப்பட்டது. ஆனால், தற்போது வறண்டுபோன சரஸ்வதி நதிப் பகுதியிலும் இந்நாகரிகம் இருந்திருக்கலாம் என சில அறிஞர்கள் கருதுவதால், சிந்து சரஸ்வதி நாகரிகம் என்று அழைக்கப்பட வேண்டும் என்று வாதிடுகின்றனர். மேலும், இந்நாகரிகத்தின் முதல் தளமான ஹரப்பாவின் பெயரால் ஹரப்பா நாகரிகம் என்றும் அழைக்கப்படுகிறது. இந்த நாகரிகம் வெண்கலயுக நாகரிகமாக கருதப்படுகிறது. இது தற்கால பாகிஸ்தானின் பெரும்பகுதி, வடமேற்கு இந்தியா மற்றும் வடகிழக்கு ஆப்கானிஸ்தானின் சில பகுதிகளை உள்ளடக்கியது.

____________________

സിന്ധു നദീതട സംസ്കാരം അഥവാ ഹാരപ്പൻ സംസ്കാരം ആധുനിക പാകിസ്ഥാൻ, വടക്ക് പടിഞ്ഞാറൻ ഇന്ത്യ, വടക്ക് കിഴക്കൻ അഫ്ഗാനിസ്ഥാൻ എന്നിവിടങ്ങളിൽ നിലനിന്ന ഒരു വെങ്കല യുഗ സംസ്കാരമായിരുന്നു. ഈ സംസ്കാരത്തിന്റെ അടിസ്ഥാനം സിന്ധു നദിയുടെ തടങ്ങളായതിനാലാണ് ഇതിന് സിന്ധു നദീതട സംസ്കാരം എന്ന പേര് ലഭിച്ചത്. ചില പണ്ഡിതർ ഇപ്പോൾ വറ്റിപ്പോയ സരസ്വതി നദിയുടെ തടങ്ങളിലും ഈ സംസ്കാരം നിലനിന്നിരുന്നതിനാൽ സിന്ധു-സരസ്വതി നദീതട സംസ്കാരമെന്ന് വിളിക്കുന്നത് ശരിയായിരിക്കുമെന്ന് അഭിപ്രായപ്പെടുന്നു. എന്നാൽ ചിലർ 1920കളിൽ ആദ്യമായി ഉത്ഖനനം നടത്തിയ ഹാരപ്പ എന്ന സ്ഥലത്തെ പേര് പ്രകാരം ഈ സംസ്കാരത്തെ ഹാരപ്പൻ സംസ്കാരമെന്ന് വിളിക്കുന്നു.
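Note that the Kannada response above stops mid-sentence. One plausible cause is the max_tokens limit of 1,000 set in the request, because text in Indic scripts can consume more tokens per word than English. Responses from the Anthropic Messages API include a `stop_reason` field, and the sketch below checks it on a hand-written sample. The sample dictionary is illustrative rather than captured output, and the helper name `was_truncated` is an assumption.

```python
def was_truncated(response_body):
    """Return True when generation stopped because the token limit was reached."""
    return response_body.get("stop_reason") == "max_tokens"


# Hand-written sample shaped like a Messages API response; not real output.
sample_response = {
    "content": [{"type": "text", "text": "partial answer that stops mid-sen"}],
    "stop_reason": "max_tokens",
}

if was_truncated(sample_response):
    print("Response hit the token limit; consider raising max_tokens.")
```

A check like this could be applied to `response_body` inside `query_rag_bedrock` before returning the text.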

Conclusion

This post presented a walkthrough for using Cohere’s multilingual embedding model along with Anthropic Claude 3 Sonnet on Amazon Bedrock. In particular, we showed how the same question, asked in multiple Indian languages, is answered using relevant documents retrieved from a vector store.

Cohere’s multilingual embedding model supports over 100 languages. It removes the complexity of building applications that require working with a corpus of documents in different languages. The Cohere Embed model is trained to deliver results in real-world applications. It handles noisy data as inputs, adapts to complex RAG systems, and delivers cost-efficiency from its compression-aware training method.

Start building with Cohere’s multilingual embedding model and Anthropic Claude 3 Sonnet on Amazon Bedrock today.

References

[1] Flores Dataset: https://github.com/facebookresearch/flores/tree/main/flores200

About the Author

Rony K Roy is a Sr. Specialist Solutions Architect specializing in AI/ML. Rony helps partners build AI/ML solutions on AWS.
