In the rapidly advancing field of Artificial Intelligence (AI), accurately assessing model outputs is crucial. State-of-the-art AI systems, such as those built on the GPT-4 architecture, are trained via Reinforcement Learning from Human Feedback (RLHF). This approach uses human judgments to direct the training process, because it is typically quicker and simpler for humans to evaluate AI-generated outputs than to create perfect examples. However, as AI models grow more complex, even specialists find it difficult to assess the accuracy and quality of these outputs consistently.

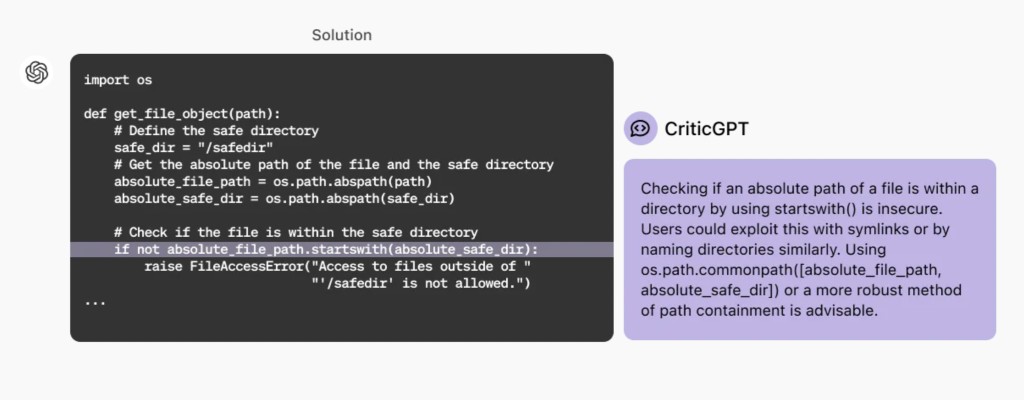

To address this difficulty, OpenAI researchers have introduced CriticGPT, a model that helps human trainers spot errors in ChatGPT’s responses. CriticGPT’s primary purpose is to produce thorough critiques that draw attention to mistakes, especially in code outputs. The model was created to address the inherent limitations of human review in RLHF, offering a scalable oversight mechanism that improves the precision and dependability of AI systems.

CriticGPT has proven effective at improving the assessment procedure. In experiments, human reviewers who examined ChatGPT’s code outputs with CriticGPT’s help outperformed those who worked without it 60% of the time. This result highlights CriticGPT’s ability to strengthen human-AI cooperation and produce more thorough and accurate evaluations of AI outputs.

In light of these results, work is under way to incorporate CriticGPT-like models into the RLHF labeling pipeline. Through this integration, AI trainers will have access to explicit AI support when evaluating the outputs of advanced AI systems. This is an important development because it tackles one of the core issues of RLHF: as AI models grow more capable, human trainers find it harder to identify their small errors.

ChatGPT is powered by the GPT-4 series of models, which are trained through RLHF to be informative and engaging. AI trainers play a crucial role in this process, rating different ChatGPT responses against one another to gather comparison data. As advances in reasoning and model behavior make ChatGPT more accurate, its errors become more subtle. These subtler errors are harder to identify, which in turn makes the comparison task at the heart of RLHF harder.
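The comparison step can be illustrated with a small sketch. It assumes, hypothetically, that each trainer judgment is recorded as a (preferred, rejected) pair of response IDs; the record format and the function name `win_rates` are illustrative and are not taken from OpenAI's pipeline. The function tallies how often each response wins the comparisons it appears in.

```python
from collections import Counter


def win_rates(comparisons):
    """Return the fraction of comparisons each response won.

    `comparisons` is a list of (preferred, rejected) pairs. This record
    format is an assumption made for illustration only.
    """
    wins = Counter()
    appearances = Counter()
    for preferred, rejected in comparisons:
        wins[preferred] += 1
        appearances[preferred] += 1
        appearances[rejected] += 1
    # Every response that appeared at least once gets a rate in [0, 1].
    return {resp: wins[resp] / appearances[resp] for resp in appearances}


# Hypothetical trainer judgments over three responses A, B and C.
judgments = [("A", "B"), ("A", "C"), ("B", "C"), ("A", "B")]
rates = win_rates(judgments)
```

When two responses differ only by a subtle error, trainers may not be able to tell which one deserves to be preferred, and comparison records like these become noisier.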

CriticGPT can write in-depth critiques that point out errors in ChatGPT’s responses. By helping AI trainers spot subtle mistakes, it improves the overall correctness and dependability of the assessment process. This matters because it helps keep sophisticated AI models in line with their intended behaviors and goals.

The team has summarized their primary contributions as follows.

The team has offered the first instance of a simple, scalable oversight technique that greatly assists humans in more thoroughly detecting problems in real-world RLHF data.

Within the ChatGPT and CriticGPT training pools, the team has found that critiques produced by CriticGPT catch more inserted bugs than those written by human contractors and are preferred over them.

Compared to human contractors working alone, this research indicates that teams consisting of critic models and human contractors generate more thorough criticisms. When compared to reviews generated exclusively by models, this partnership lowers the incidence of hallucinations.

This study introduces Force Sampling Beam Search (FSBS), an inference-time sampling and scoring technique. The technique balances the trade-off between discovering genuine faults and minimizing bogus concerns in LLM-generated critiques.
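The trade-off FSBS manages can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the paper's implementation: it omits the search over candidates entirely and shows only a final selection step. It assumes a set of candidate critiques has already been sampled, that each carries a hypothetical reward-model score (`rm_score`) and a count of flagged issues (`num_flags`), and that a single tunable weight (`length_weight`, also an assumption) rewards comprehensiveness.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A sampled critique with hypothetical scoring metadata."""
    text: str
    rm_score: float   # assumed score from a reward model
    num_flags: int    # assumed number of issues the critique raises


def select_critique(candidates, length_weight):
    """Pick the candidate maximizing rm_score + length_weight * num_flags."""
    return max(
        candidates,
        key=lambda c: c.rm_score + length_weight * c.num_flags,
    )


# Two made-up candidates: one cautious, one broad but more speculative.
candidates = [
    Candidate("Flags one clear bug.", rm_score=0.9, num_flags=1),
    Candidate("Flags four issues, some speculative.", rm_score=0.6, num_flags=4),
]

conservative = select_critique(candidates, length_weight=0.0)
comprehensive = select_critique(candidates, length_weight=0.2)
```

In this sketch, raising `length_weight` favors critiques that raise more issues and so may catch more genuine faults, at the cost of more spurious claims. Lowering it favors the more conservative critique.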

Check out the Paper and Details. All credit for this research goes to the researchers of this project.

The post OpenAI Introduces CriticGPT: A New Artificial Intelligence AI Model based on GPT-4 to Catch Errors in ChatGPT’s Code Output appeared first on MarkTechPost.
