Video editing, a field that attracts significant academic interest because of its interdisciplinary nature, its impact on communication, and its evolving technological landscape, increasingly relies on diffusion models. These models, known for their strong generative capabilities and widely used in video editing, are maturing rapidly. A crucial challenge in video-to-video tasks, however, is maintaining temporal consistency: diffusion models applied without dedicated temporal handling typically produce video sequences that lack it.

Many studies have tackled temporal consistency in diffusion models. Yet even once this problem is handled, diffusion-based algorithms still struggle to adapt to some downstream tasks, such as adding handwriting to a video. This is where methods based on canonical images shine. These techniques are highly versatile: they build a single canonical image that represents the content of the entire video, so editing that one image propagates the edit to every frame. This makes them applicable to a wide range of video editing tasks.
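
The idea of "edit once, propagate everywhere" can be illustrated with a toy Python sketch. It is not NaRCan's code: it assumes the simplest possible deformation, a per-frame integer translation into a shared canonical image, whereas real canonical-based methods learn a much richer mapping. The names `render_frame`, `canonical`, and `offsets` are hypothetical.

```python
from typing import List, Tuple

# Toy model of canonical-image editing (illustrative only, not NaRCan's code).
# Assumption: each frame is a window into one shared canonical image, shifted by
# a per-frame integer offset that stands in for a learned deformation field.
Image = List[List[str]]


def render_frame(canonical: Image, offset: Tuple[int, int], h: int, w: int) -> Image:
    """Reconstruct an h x w frame by sampling the canonical image at shifted coordinates."""
    dx, dy = offset
    return [[canonical[y + dy][x + dx] for x in range(w)] for y in range(h)]


# A 4x6 canonical image ('.' = background) and three frames panning right.
canonical: Image = [["." for _ in range(6)] for _ in range(4)]
offsets = [(0, 0), (1, 0), (2, 0)]

# Edit the canonical image once: draw a mark at canonical coordinate (x=3, y=1).
canonical[1][3] = "#"

# Every frame that sees that canonical location now shows the edit.
frames = [render_frame(canonical, off, h=3, w=4) for off in offsets]
for i, frame in enumerate(frames):
    print(f"frame {i}:", ["".join(row) for row in frame])
```

The mark appears at a different position in each frame, shifting left as the hypothetical camera pans right, so a single edit stays consistent with the motion across the whole sequence.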

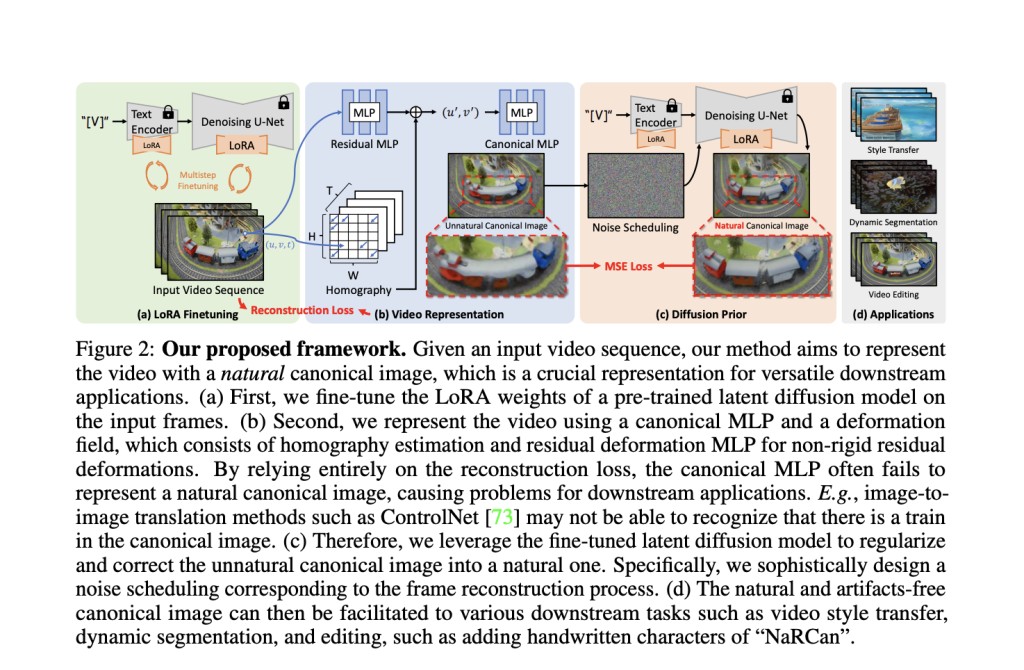

However, existing canonical-based approaches impose no constraints that ensure the canonical image is high-quality and natural. To address this, researchers at National Yang Ming Chiao Tung University introduce NaRCan, a video editing framework built on a hybrid deformation field network. By incorporating diffusion priors into its training pipeline, NaRCan aims to produce high-quality, natural canonical images across a wide range of videos.

The framework handles complex video dynamics by combining a homography, which represents global motion, with multi-layer perceptrons (MLPs), a type of neural network, which capture the remaining local residual deformations. Its advantage over existing canonical-based methods is that it introduces a diffusion prior in the early stages of training. This keeps the generated canonical images natural and high-quality, making them suitable for various downstream video editing tasks. In addition, the researchers implement a noise and diffusion prior update scheduling method and use low-rank adaptation (LoRA) fine-tuning, which, according to the authors, speeds up training by a factor of fourteen.
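
The hybrid deformation idea (global homography plus local MLP residual) can be sketched in plain Python. This is a minimal sketch under stated assumptions, not NaRCan's architecture: the article does not give network sizes, input encodings, or how the per-frame homographies are parameterized, so `apply_homography`, `ResidualMLP`, and `to_canonical` are hypothetical names, the MLP uses random placeholder weights instead of trained ones, and the `scale` factor is an illustrative choice that keeps residuals small.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def apply_homography(H: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Global motion: project (x, y) through a 3x3 homography in homogeneous coordinates."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


class ResidualMLP:
    """Local motion: a two-layer tanh MLP mapping (x, y, t) to a small (dx, dy) offset.

    Hypothetical: weights are random placeholders; in practice they would be optimized.
    """

    def __init__(self, hidden: int = 16, scale: float = 0.01, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]
                   for _ in range(2)]
        self.scale = scale  # keeps residuals small relative to the homography

    def __call__(self, x: float, y: float, t: float) -> Tuple[float, float]:
        inp = (x, y, t)
        hid = [math.tanh(sum(w * v for w, v in zip(row, inp)) + b)
               for row, b in zip(self.w1, self.b1)]
        dx = sum(w * h for w, h in zip(self.w2[0], hid))
        dy = sum(w * h for w, h in zip(self.w2[1], hid))
        return self.scale * dx, self.scale * dy


def to_canonical(H_t: Matrix, mlp: ResidualMLP,
                 x: float, y: float, t: float) -> Tuple[float, float]:
    """Hybrid deformation: homography for global motion plus MLP residual for local motion."""
    gx, gy = apply_homography(H_t, x, y)
    rx, ry = mlp(x, y, t)
    return gx + rx, gy + ry


# Usage: frame t is a horizontal pan of 0.05 * t in normalized coordinates.
mlp = ResidualMLP()
for t in range(3):
    H_t = [[1.0, 0.0, 0.05 * t], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(t, to_canonical(H_t, mlp, 0.5, 0.5, float(t)))
```

Splitting the mapping this way lets the homography absorb camera-like motion with only eight degrees of freedom per frame, leaving the MLP to model only what a single planar projection cannot explain.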

The team compares their edited videos with those produced by other approaches, such as CoDeF, MeDM, and Hashing-nvd, on the primary task of interest: text-guided video editing. In a user study, 36 participants were shown each original video together with the text prompt used to edit it. According to the authors' extensive experiments, the proposed method consistently generates coherent, high-quality edited video sequences and outperforms existing approaches across diverse video editing tasks.

The team notes that adding a diffusion loss to the training pipeline lengthens training. They also acknowledge that when a video sequence changes drastically, the diffusion loss sometimes cannot steer the model toward a high-quality, natural canonical image. These limitations highlight the challenge of balancing computational efficiency, output quality, and model flexibility across different scenarios.
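
The training-cost trade-off described above is what a prior-update schedule tries to manage. The article does not spell out how NaRCan's noise and diffusion prior update scheduling works, so the sketch below shows only one generic way to contain the cost of an expensive loss term: apply it only during early training, evaluate it every few steps, and anneal the noise strength over that phase. `PriorSchedule` and all of its fields and default values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PriorSchedule:
    """Hypothetical schedule for an expensive diffusion-prior loss term.

    All fields and defaults are illustrative assumptions, not NaRCan's settings.
    """
    prior_steps: int = 3000   # only use the diffusion prior during early training
    interval: int = 10        # evaluate the prior once every `interval` steps
    max_noise: float = 0.8    # noise strength at the start of training
    min_noise: float = 0.2    # noise strength when the prior phase ends

    def use_prior(self, step: int) -> bool:
        """True on the steps where the diffusion loss should be computed."""
        return step < self.prior_steps and step % self.interval == 0

    def noise_strength(self, step: int) -> float:
        """Linearly anneal from max_noise to min_noise over the prior phase."""
        p = min(step / self.prior_steps, 1.0)
        return self.max_noise * (1.0 - p) + self.min_noise * p


sched = PriorSchedule()
total_steps = 10_000
prior_calls = [s for s in range(total_steps) if sched.use_prior(s)]
print(len(prior_calls), "diffusion-loss evaluations instead of", total_steps)
print(sched.noise_strength(0), sched.noise_strength(1500), sched.noise_strength(3000))
```

With these illustrative defaults, the expensive loss runs on 300 of 10,000 steps. The catch matches the limitation the authors describe: the cheaper the schedule, the less guidance the prior gives, which matters most for videos with drastic changes.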

The post NaRCan: A Video Editing AI Framework Integrating Diffusion Priors and LoRA Fine-Tuning to Produce High-Quality Natural Canonical Images appeared first on MarkTechPost.