AI has a myriad of uses, but one of its most concerning applications is the creation of deepfake media and misinformation.

A new study from Google DeepMind and Jigsaw, a Google technology incubator that monitors societal threats, analyzed misuse of AI between January 2023 and March 2024.

It assessed some 200 real-world incidents of AI misuse and found that creating and disseminating deceptive deepfake media, particularly media targeting politicians and public figures, is the most common form of malicious AI use.

Deepfakes (highly realistic but fake images, videos, and audio generated by AI algorithms) have become more lifelike and pervasive.

Incidents such as the explicit fake images of Taylor Swift that spread on X showed that such content can reach millions of people before it is deleted.

Most insidious of all are deepfakes targeting political issues, such as the Israel-Palestine conflict. In some cases, not even the fact-checkers charged with labeling such content as “AI-generated” can reliably tell whether it is authentic.

The DeepMind study collected data from a diverse array of sources, including social media platforms such as X and Reddit, online blogs, and media reports.

Each incident was analyzed to determine the specific type of AI technology misused, the intended purpose behind the abuse, and the level of technical expertise required to carry out the malicious activity.

Deepfakes are the dominant form of AI misuse

The findings paint an alarming picture of the current landscape of malicious AI use:

Deepfakes emerged as the dominant form of AI misuse, accounting for nearly twice as many incidents as the next most prevalent category.

The second most frequently observed type of AI abuse was using language models and chatbots to generate and disseminate disinformation online. By automating the creation of misleading content, bad actors can flood social media and other platforms with fake news and propaganda at an unprecedented scale.

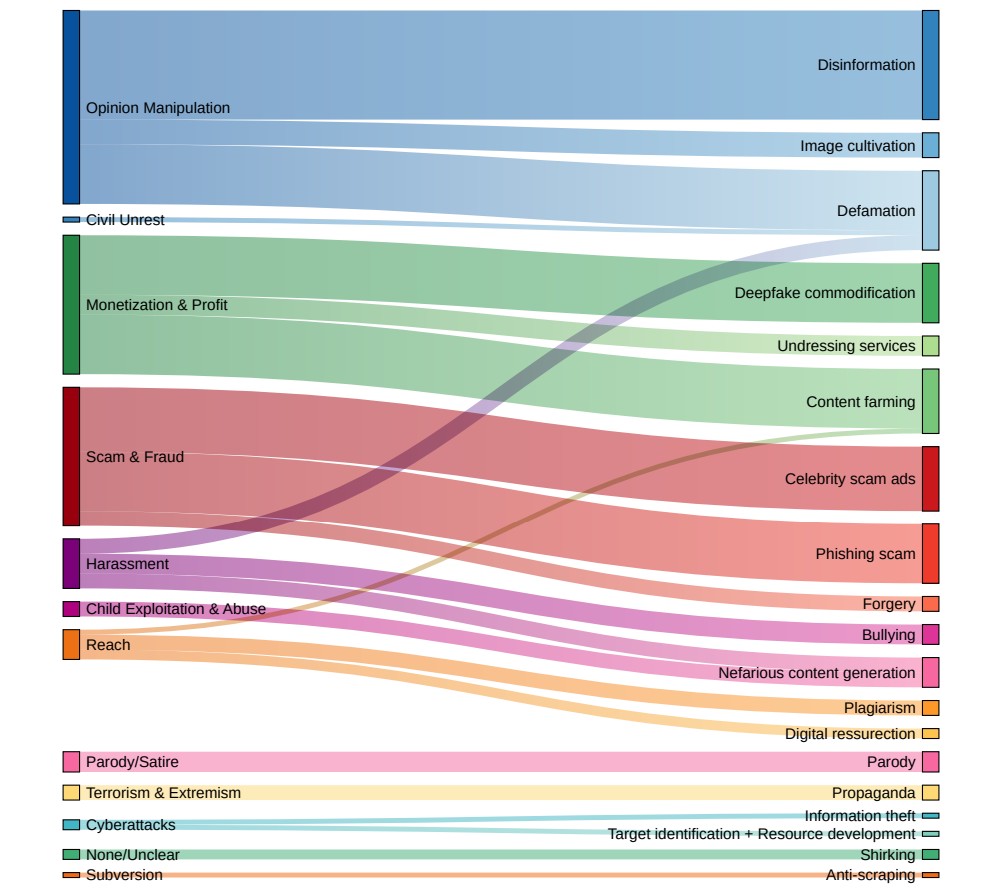

Influencing public opinion and political narratives was the primary motivation behind over a quarter (27%) of the AI misuse cases analyzed. This finding underscores the grave threat that deepfakes and AI-generated disinformation pose to democratic processes and the integrity of elections worldwide.

Financial gain was identified as the second most common driver of malicious AI activity. Unscrupulous actors offered paid services for creating deepfakes, including non-consensual explicit imagery, and used generative AI to mass-produce fake content for profit.

The majority of AI misuse incidents involved readily accessible tools and services that required minimal technical expertise to operate. This low barrier to entry greatly expands the pool of potential malicious actors, making it easier than ever for individuals and groups to engage in AI-powered deception and manipulation.

Mapping AI misuse to intent. Source: DeepMind.

Nahema Marchal, the study’s lead author and a DeepMind researcher, described the evolving landscape of AI misuse to the Financial Times: “There had been a lot of understandable concern around quite sophisticated cyber attacks facilitated by these tools,” adding, “[W]hat we saw were fairly common misuses of GenAI [such as deepfakes that] might go under the radar a little bit more.”

Policymakers, technology companies, and researchers must work together to develop comprehensive strategies for detecting and countering deepfakes, AI-generated disinformation, and other forms of AI misuse.

But the truth is, they’ve already tried, and largely failed. Recently, we’ve seen more cases of children caught up in deepfake incidents, which shows that the societal harm these fakes inflict can be grave.

Currently, tech companies can’t reliably detect deepfakes at scale, and deepfakes will only grow more realistic and harder to detect over time.

And once text-to-video systems like OpenAI’s Sora land, there’ll be a whole new dimension of deepfakes to handle.

The post DeepMind study exposes deep fakes as leading form of AI misuse appeared first on DailyAI.
