This is the second post of a two-part series about integrating machine learning (ML) predictions of your Amazon Aurora database and Amazon SageMaker. In Part 1, you learned how to build a customer churn ML model with Amazon SageMaker Autopilot, set up the Amazon Aurora machine learning (Aurora ML) and SageMaker integration, and invoke the SageMaker endpoint from an Aurora cluster with a SQL function to retrieve model predictions for an entire database table in real time.

In this post, we discuss how to implement Aurora ML performance optimizations to perform real-time inference against a SageMaker endpoint at a large scale. More specifically, we simulate an OLTP workload against the database, where multiple clients are making simultaneous calls against the database and are putting the SageMaker endpoint under stress to respond to thousands of requests in a short time window. Moreover, we show how to use SQL triggers to create an automatic orchestration pipeline for your predictive workload without using additional services.

This solution enables your business users to have up-to-date data, including your explanatory variables as well as real-time forecasted values returned by the ML model, in business intelligence reports and dashboards; without the need for additional extract, transform, and load (ETL) workloads.

The goal of the post is to demonstrate how to implement Aurora ML and SageMaker integration end to end, perform its stress testing, and analyze various performance metrics of this solution. The sample code and AWS CloudFormation template are available in the following GitHub repo.

Solution overview

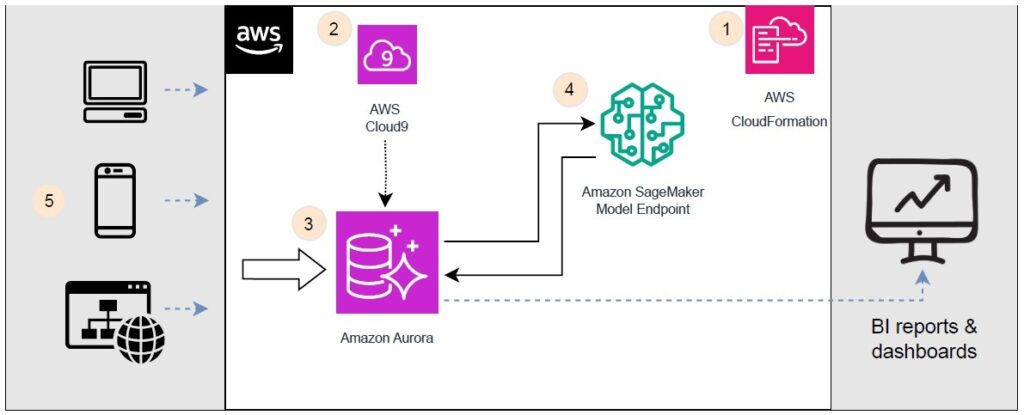

The following graph presents the overall solution.

The solution contains the following steps:

Deploy the infrastructure using AWS CloudFormation.

Use AWS Cloud9 to connect to the Aurora cluster in order to set up the workflow.

Orchestrate a predictive workflow that will respond to INSERT statements and persist info back in a table at the Aurora cluster.

Return model predictions from the SageMaker endpoint to the incoming Aurora queries.

Stress test the entire system by performing a large-scale OLTP simulation.

Prerequisites

The following are prerequisites for completing the walkthrough in this post:

An AWS account.

Basic knowledge of SQL and database concepts.

Familiarity with the concepts explained in Part 1.

Note that this solution has been deployed and tested in the N. Virginia (us-east-1) Region. For a complete list of Region availability and supported versions of Aurora ML, refer to Aurora machine learning.

Deploy the infrastructure using AWS CloudFormation

Use the provided CloudFormation template to provision the required resources:

Aurora DB cluster with one instance – This database is provisioned from a snapshot that already has all the configurations in place to enable Aurora ML. Some additional resources related to Aurora deployed by this template include parameter groups, an AWS Identity and Access Management (IAM) role, a subnet group, as well as username and password stored in AWS Secrets Manager.

SageMaker endpoint – For this post, you use an already trained model from Part 1 of this series, which is provided in the template along with a model and endpoint config. A SageMaker IAM role is also created following these recommendations to ensure least privilege access.

AWS Cloud9 instance – You use this to establish a connection with the database and simulate an OLTP workload. You can also use any EC2 instance with accordingly configured security group. However, AWS Cloud9 comes with an handy IDE integration which improves the overall developer experience.

Networking configuration – This includes the security group as well as VPC endpoints.

When you launch the CloudFormation template, provide the following input parameters:

DefaultVpcId – Use the default (public VPC ID).

DefaultRouteTableId – Use the default route table associated with the previously selected VPC (required for VPC endpoint setup). Go to VPC, Your VPCs, click on the VPC you selected before and copy the Route table ID, which usually is something like rtb-xxxx.

SubnetId1 and SubnetId2 – Use any two different subnets of the selected VPC.

DBUsername – Provide your database master username. The password will be generated automatically through the CloudFormation template. Both the username and password are persisted in AWS Secrets Manager. You use these details later when setting up a database connection from the AWS Cloud9 instance.

After you deploy the template, you need to perform an additional configuration step to allow the AWS Cloud9 instance to connect with your database. In the VPC security groups, you need to allow the connection on the MySQL default port on the Aurora cluster security group. This will ensure communication between both services. The following screenshot shows an example of editing a security group’s inbound rules.

Connect to the Aurora cluster and set up the workflow

On the AWS Cloud9 console, launch the AWS Cloud9 interactive development environment (IDE). Open a terminal and run the following command to establish a connection with the database (mysql client is already preinstalled):

You will find the writer endpoint on the Amazon RDS Console after opening the cluster object details (see the following screenshot). The database user name needs to be the same one that you provided when you launched the CloudFormation template. After you run the command, you are prompted to provide the database password which you can copy from AWS Secrets Manager under ‘/rds/database-aurora-ml-master-password’.

When you’re logged in, you can start creating the necessary database components for this use case. Note that the database already contains the mltest schema where you will work.

First, create the churn_inserts table, which holds both the explanatory variables used to invoke the model as well as the model prediction. The idea is that every time a client inserts data into this table, the DB cluster will automatically invoke the SageMaker endpoint for each row of data and persist the prediction from the response in the same row. Keep in mind that the data types in the following schema must correspond with the data types used for training the machine learning model in the first place (part 1). If you modify the data types of the source data, you will have to retrain the machine learning model accordingly.

Create a predict_churn SQL function that invokes the deployed SageMaker endpoint. An important consideration is the MAX_BATCH_SIZE setting at the end of the function. The Aurora cluster will try to batch as many incoming requests together to perform model invocations more efficiently and not overload the endpoint.

After you create the function, test if the integration works correctly by running a SELECT statement on top of a single row of data. In the following example, the prediction output says 0 (1=TRUE, 0=FALSE), which means that the customer won’t churn:

Now you can create an orchestration mechanism for the solution. To do so, you use the MySQL TRIGGER command. In this example, the trigger will automatically invoke the earlier created predict_churn function upon every new row INSERT operation. It will store the input variable values alongside the model prediction in the churn_inserts table. The advantage of this solution is that you don’t need to build an external mechanism to detect a new insert, do the model invocation, and store the result back to Aurora. Bear in mind that a failure of a trigger would result in an entire operation rolled back, including the INSERT statement. Monitoring failed triggers can be achieved through the activation of logs, however, this is beyond the scope of this post.

Let’s confirm that the trigger works correctly by performing an insert:

ML prediction happens automatically thanks to the trigger and the result (forecast) is visible via a SQL statement right after.

Perform stress testing

In this section, you check how performant this solution is for real-time inferencing use cases. The goal is to simulate an app behavior where many simultaneously connected clients perform thousands of inserts at the same time. To do so, you run the aurora-oltp-test.py script. The Aurora cluster will need to manage the load and orchestration and the SageMaker endpoint will need to respond to a sudden spike in prediction requests. The SageMaker endpoint uses the ml.m5.large instance type (2 vCPUs and 8 GiB memory) without any auto scaling. For more information about SageMaker load testing best practices, refer to Best practices for load testing Amazon SageMaker real-time inference endpoints.

Let’s discuss in more detail the main steps of the aurora-oltp-test.py script:

At the top of the script, there are a few objects required for the ​​generate_random_sql_insert function. The purpose of the function is to introduce a bit of variety into the data that the model will be making predictions on.

The main part of the script starts below that function:

First, each client makes a connection to the database using the pymysql.connect function.

A random number of requests will be made by the client. It will be any number between 500–1,000.

Lastly, the script performs an insert into the churn_inserts table using the generate_random_sql_insert function.

Let’s now run the OLTP stress test:

Upload the aurora-oltp-test.py script to the AWS Cloud9 instance.

Install a required Python package in the terminal before running the script:

Provide your database details for the MySQL connection in the script.

Trigger the script from the terminal using the following command:

This will spin multiple Python processes running the script in the background. You can control the number of maximum clients by changing the second number 100 with a different value. This test will simulate 100 simultaneous connections to the database, each of them making between 500–1,000 inserts. The generated nohup.out file contains all the logs from the spanned processes.

Analyze the results

The experiment performed a total of approximately 73,000 INSERT operations and SageMaker endpoint predictions in approximately 18 minutes. You can confirm that with a simple SELECT statement:

Aurora ML also offers more detailed monitoring metrics with the following command:

The following screenshot shows an example of the detailed Aurora ML monitoring information.

SageMaker endpoint performance metrics

You can inspect your endpoint’s metrics by going to the SageMaker console and choosing Endpoints under Inference in the navigation pane. After you navigate to your model endpoint, you will see detailed monitoring metrics on the Monitor tab. Let’s analyze the impact of the workload on the model endpoint.

Let’s first look at the latency and CPU utilization metrics. Model latency spiked at the beginning and eventually stabilized at about 65,000 microseconds.

Model CPU utilization spiked to almost 180% due to multi-model hosting—the model is in fact an ensemble of three individual models, which can be inspected in the CloudFormation template. This requires further investigation of container-specific metrics.

You will need to inspect the Amazon CloudWatch metrics and search for the model endpoint name in order to inspect container-level metrics. After inspection, one of the containers has much higher latency.

Further examination of container-level CPU utilization shows that the same container is running at almost 100%. This indicates that this ensemble’s component is slowing down the overall model latency.

Database performance metrics

You can inspect your database’s metrics on the Amazon RDS console by navigating to the Monitoring tab for your cluster. Let’s analyze the impact of the workload on various metrics.

Let’s look at the most relevant metrics to the performed stress test. CPU utilization peaked at around 30%, which suggests that the DB cluster wasn’t overwhelmed by the workload.

Moreover, insert throughput peaked at around 70–75 inserts per second.

The insert latency equaled about 70 milliseconds for most of the workflow duration. These values are lower than the IOPS that the database can offer, which confirms that the database is not the workflow bottleneck. Refer to this Planning I/O in Amazon Aurora blog to plan and monitor I/O in Amazon Aurora.

Optimization suggestions

Based on the metrics analysis, we can conclude that the workflow experienced a bottleneck on the SageMaker side. This is due to the fact that one of the model ensemble components reached 100% CPU utilization, which hindered the overall performance latency. In ensemble modeling, this means that the latency of the overall ensemble model is at least as high as the latency of the slowest component. On the other hand, the DB cluster still had enough capacity left to process a much higher number of requests.

Consider making the following adjustments to improve this workflow’s performance:

Simplify the ensemble model to lower the latency of the endpoint’s slowest component. The simplest way to achieve that would be to choose a simpler model form trained by AWS SageMaker Autopilot. Refer to the Efficiently train, tune, and deploy custom ensembles using Amazon SageMaker blog post to learn how to efficiently train, tune and deploy custom ensembles using Amazon SageMaker.

Use a more powerful SageMaker instance type with more CPUs. Refer to the full list of available inference instances.

Apply a SageMaker auto scaling configuration to your endpoint to quickly scale up the number of instances. You can follow the design patterns of the Optimize your machine learning deployments with auto scaling on Amazon SageMaker blog post to apply SageMaker auto scaling.

Clean up

To prevent unwanted charges to your AWS account, we recommend deleting the AWS resources that you used in this post. You can do that by deleting the CloudFormation stack via the AWS CloudFormation console and all resources will be de-provisioned.

Conclusion

In this post, we showed how to implement Aurora ML to perform real-time inference automatically and at scale. Via a SQL trigger, new inserts in your Aurora database will launch an automatic pipeline that calls the SageMaker endpoint for inference and populates the Aurora database with the results, all in real time. Use this solution to enable your business users to analyze the data in your facts table, as well as the real-time forecasted values returned by the ML model, in the same facts table. We also provided some guidelines to perform stress testing and best practices on how to optimize the overall solution.

Check out Up your game: Increase player retention with ML-powered matchmaking using Amazon Aurora ML and Amazon SageMaker blog post to further deepen on your knowledge on how to add real-time predictions (that is, optimal matchmaking) to your Amazon Aurora database.

About the authors

Konrad Semsch is a Senior ML Solutions Architect at the Amazon Web Services Data Lab team. He helps customers use machine learning to solve their business challenges with AWS. He enjoys inventing and simplifying to enable customers with simple and pragmatic solutions for their AI/ML projects. He is most passionate about MLOps and traditional data science. Outside of work, he is a big fan of windsurfing and kitesurfing.

Rodrigo Merino is an AI/ML and GenAI Solutions Architect Manager at Amazon Web Services. With several years of experience deploying emerging technologies, from generative AI to IoT, Rodrigo helps customers across industries to accelerate their AI/ML and GenAI journey by helping them train and build models on AWS and operationalize end-to-end machine learning solutions.

Source: Read More